Chris Downs


Chris Downs last won the day on April 20 2022

-

You could put the drive through some read/write operations, perhaps using a disk benchmarking tool. See if any other issues arise - if not, it's probably fine to use again, but I'd keep unduplicated files off it. It's fairly normal for a disk to end up with bad sectors, and it sounds like the drive has re-mapped them so they are no longer used. Once the drive firmware has re-mapped them, Scanner won't see the errors (nor should it be able to).
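If you'd rather not install a benchmarking tool, a rough write-then-verify pass can be sketched in Python. This is only an illustration of the "read/write operations" idea: the function name `write_and_verify` and its parameters are made up for this example, and because the OS may serve the read-back from its cache, a pass here is weaker evidence than a proper surface scan.

```python
import hashlib
import os
import tempfile


def write_and_verify(directory, total_mb=16, chunk_mb=1):
    """Write random data to a temp file in `directory`, read it back,
    and return True if the SHA-256 of both passes matches.

    Hypothetical helper for illustration; not part of any StableBit tool.
    """
    chunk_size = chunk_mb * 1024 * 1024
    written = hashlib.sha256()
    fd, path = tempfile.mkstemp(dir=directory, suffix=".bin")
    try:
        with os.fdopen(fd, "wb") as f:
            for _ in range(total_mb // chunk_mb):
                chunk = os.urandom(chunk_size)
                written.update(chunk)
                f.write(chunk)
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the data to the device

        read_back = hashlib.sha256()
        with open(path, "rb") as f:
            while True:
                chunk = f.read(chunk_size)
                if not chunk:
                    break
                read_back.update(chunk)
        return written.hexdigest() == read_back.hexdigest()
    finally:
        os.remove(path)  # always clean up the test file
```

Point `directory` at a folder on the suspect drive and raise `total_mb` to cover more of the disk. Any I/O error or a `False` result would be a reason not to trust the drive.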

-

Shane reacted to an answer to a question:

Why isn't XYZ Provider available?

-

dsteinschneider reacted to an answer to a question:

Windows 10 support etc

-

Chris Downs started following Can't sign in, and my apps are disconnected/unlicensed now.

-

Got a license warning from Scanner and Drivepool, so I went to check on stablebit.cloud and went through sign-in (Google account), and it then hangs on a not-responding page. After that I can't access the site at all unless I clear cookies ("sign out"). Tried on multiple browsers. Also tried manually entering my license keys into Drivepool - says invalid (I guess because it can't reach the server?). Help! [EDIT] Managed to log in from a PC offsite and remove everything from cloud - original activation ID works manually. Guess it stopped liking my home IP address?!

-

Check that the HBA is not overheating. If it's not in a high-airflow server chassis, you'll need a dedicated fan blowing air over it. Had this issue myself once - the controllers have puny heatsinks, as they are assumed to be in a chassis with 6000rpm fans.

-

Very odd! How do you know Drivepool deleted the data? I don't think it's something Drivepool can even do? @Christopher (Drashna) any ideas?

-

Archive is where data will end up after its time on the SSDs is up. I could be wrong, but I think Archive also means that if you were to re-balance data, the drives *without* archive set are prioritized for re-writes and data moving. Archive means "leave data here unless absolutely necessary". eg, if you have any SMR HDDs, you don't really want data being moved about on them.

-

Having done this a few times in the past, and having to bug support as a result... the best way is to uninstall Drivepool *first*, then make the move and install on the new machine... ;-)

-

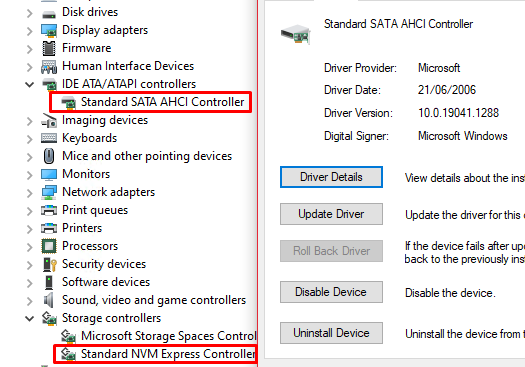

For add-in SATA PCIe cards, you should generally use the default Windows drivers, and NOT the manufacturer ones. Same goes for NVMe. See screenshot. I've experienced the exact issues you describe when trying the official ASMedia drivers with PCIe cards - random issues that all disappeared on letting W10 decide on the drivers. I would certainly not use W7 in 2022 either, regardless of whether or not users log in... if it's got Internet access, it's too risky. Once you de-clutter W10, it runs very nicely. There is a free tool called "Geek Uninstaller" that can cleanly remove programs, including the newer Windows Store style apps - works very nicely. There are also several tools for removing other clutter if needed, search "Windows 10 debloat".

-

There is a Throttling tab in Scanner settings... tick "Do not interfere with disk access" and set a mid or high sensitivity. Set the priority to background if it isn't already.

-

Ultradianguy reacted to an answer to a question:

Why isn't XYZ Provider available?

-

Why isn't XYZ Provider available?

Chris Downs replied to Christopher (Drashna)'s question in Providers

Any update on WebDAV? Is there a specific issue preventing it being added? It's been a while...

-

Same here, with OneDrive. So far, box and S3 are ok.

-

Same issue here on multiple machines - must have been a bad update. Reset all settings from within Scanner, and it should start working again.

Scanner.Service.exe Information 0 [Ipc] Start scan of disk '\\.\PHYSICALDRIVE2'... 2021-07-29 14:54:16Z 234711190962
Scanner.Service.exe Information 0 [DisksScanner] Added new disk \\.\PHYSICALDRIVE2 to queue and starting scan. 2021-07-29 14:54:16Z 234712171016
Scanner.Service.exe Information 0 [Scanner] Starting scan of '\\.\PHYSICALDRIVE2' at 29/07/2021 15:54:16... 2021-07-29 14:54:16Z 234712208936
Scanner.Service.exe Warning 0 [Scanner] Error in scanning thread: Arithmetic operation resulted in an overflow. 2021-07-29 14:54:16Z 234712265777
Scanner.Service.exe Information 0 [Main] Disk \\.\PHYSICALDRIVE2 scan ended. 2021-07-29 14:54:16Z 234712266861
Scanner.Service.exe Information 0 [Main] All disk scans ended. 2021-07-29 14:54:16Z 234712528965
Scanner.Service.exe Information 0 [NotificationsCloud] Clear ScanningSurface 2021-07-29 14:54:18Z 234731905167

Log provided for reference, but I don't think it's anything other than a bad update at some point. Working fine after a reset.
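For anyone wanting to check their own service log for the same overflow warning, here is a minimal Python sketch that pulls the non-Information entries out of a log excerpt. The field layout (process, level, number, `[component]`, message, UTC timestamp, trailing number) is only inferred from the excerpt above, not from any documented Scanner log format, and the names `ENTRY`, `parse_entries` and `non_info_entries` are invented for this example.

```python
import re

# Layout inferred from the pasted excerpt only (an assumption):
# <process>.exe <level> <number> [<component>] <message> <UTC timestamp> <trailing number>
ENTRY = re.compile(
    r"(?P<process>\S+\.exe)\s+"
    r"(?P<level>\w+)\s+"
    r"(?P<number>\d+)\s+"
    r"\[(?P<component>[^\]]+)\]\s+"
    r"(?P<message>.*?)\s+"
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}Z)\s+"
    r"(?P<trailing_number>\d+)"
)

# Three entries copied from the excerpt, joined on one line as in a paste.
SAMPLE = (
    r"Scanner.Service.exe Information 0 [Scanner] Starting scan of "
    r"'\\.\PHYSICALDRIVE2' at 29/07/2021 15:54:16... 2021-07-29 14:54:16Z 234712208936 "
    r"Scanner.Service.exe Warning 0 [Scanner] Error in scanning thread: "
    r"Arithmetic operation resulted in an overflow. 2021-07-29 14:54:16Z 234712265777 "
    r"Scanner.Service.exe Information 0 [Main] Disk \\.\PHYSICALDRIVE2 scan ended. "
    r"2021-07-29 14:54:16Z 234712266861"
)


def parse_entries(text):
    """Return one dict per log entry found in `text`."""
    return [m.groupdict() for m in ENTRY.finditer(text)]


def non_info_entries(text):
    """Return only the entries whose level is not 'Information'."""
    return [e for e in parse_entries(text) if e["level"] != "Information"]
```

Calling `non_info_entries(SAMPLE)` returns just the overflow warning from the `[Scanner]` component, which is the line that matters in the excerpt.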

-

Chris Downs reacted to a post in a topic:

Microsoft Storage Space Drives not detecting

-

KlausTheFish reacted to a post in a topic:

Microsoft Storage Space Drives not detecting

-

Google Drive, and many others (especially free ones), have upload bandwidth restrictions (MB/hr) that you may be exceeding. The first stage is a limiter on speeds if you go over it. I believe the time window is 2 hours, but I do not know the current data figures. There is also a per-day limit of 750GB, but that's a hard cap that blocks uploading completely for a day. What speeds do you get if you use the native Google Drive app? As my upstream Internet is only 20Mbit, I can't help by testing mine. I stopped using Google Drive in my Cloud Drive setup a while back, as even with my lower upstream speeds, I was hitting some restrictions (perhaps on number of files?) with Cloud Drive. It may just be that you need to tweak the Cloud Drive settings, like block size etc, to reduce the number of files created?
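To put rough numbers on that, here is a small Python sketch of the arithmetic. It assumes decimal units (1 GB = 1000 MB), a perfectly sustained upload rate, and, purely as a guess, that each stored chunk becomes one file on the provider. The function names and the chunk sizes in the usage note are illustrative, not actual Cloud Drive settings.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def daily_upload_gb(upstream_mbit):
    """GB uploaded in 24 hours at a sustained rate (decimal units)."""
    bytes_per_second = upstream_mbit * 1_000_000 / 8
    return bytes_per_second * SECONDS_PER_DAY / 1_000_000_000


def mbit_to_hit_cap(cap_gb_per_day=750):
    """Sustained upstream rate (Mbit/s) needed to reach a daily cap."""
    bits_per_day = cap_gb_per_day * 1_000_000_000 * 8
    return bits_per_day / SECONDS_PER_DAY / 1_000_000


def chunk_count(data_gb, chunk_mb):
    """Number of chunks for `data_gb` of data, assuming one file per chunk."""
    return math.ceil(data_gb * 1000 / chunk_mb)
```

With these assumptions, `daily_upload_gb(20)` gives 216 GB, so a 20Mbit line can't reach the 750GB/day cap on its own; `mbit_to_hit_cap()` comes out at roughly 69.4 Mbit/s sustained. For the file-count side, `chunk_count(100, 10)` is 10,000 chunks against 1,000 for `chunk_count(100, 100)`, which is why a larger block size might help if the restriction is on number of files.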

-

Try this: get hold of "Geek Uninstaller" (https://geekuninstaller.com/download) and use it to a) see if the plugin is in the list and b) remove all trace of it if it's there. Then disable your AV software, re-install SSD Optimizer, and reboot. (This particularly applies if you use Windows Defender, as W10 20H2 has some significant security changes that have caused issues for many non-Microsoft programs.)

-

I wonder, is this something that could be implemented by means of a plugin at all? Or does the API not expose/allow things at that level? If it can, then perhaps a community led solution might be possible, with a little guidance?

-

Microsoft Storage Space Drives not detecting

Chris Downs replied to KlausTheFish's topic in Compatibility

It can't be added. The "RAID" setup presents the resulting drive to the OS as a volume - it has no SMART data. The individual disks may well be accessible as hardware devices, but they do not present as accessible volumes/drives. WD Dashboard works because it is specifically designed to access the relevant hardware at a low level. Each manufacturer does this a little differently, so it's probably not feasible to add to Scanner, which works on a software level.