Search the Community

Showing results for tags 'clouddrive'.

-

This is almost certainly my own error, and part of the problem may be that I can't find the exact words to describe the problem. I have gradually been moving some local storage into the CloudDrive framework, because it's on a second networked machine, and I wanted to use DrivePool. I run 3 different DrivePools, and it's taken me a bit of organising and reorganising to get the right drives in the right pool. I think that's where things may have gone wrong.

Situation: I have a physical HDD, mounted on its host machine as W:\. On my CloudDrive host machine, I have created a 4TB File Share Cloud Drive on the network share of that drive, named Cloud-SS-W. I have mounted that drive locally as C:\MountCloudW, so I can browse to it.

CloudDrive says that Cloud-SS-W has 3.15TB 'Cloud used'. This makes sense to me, given the data that I believed was on it. When I browse to W:\ in the filesystem of the drive's host machine, it has 3.15TB of files in a StableBit Cloud Share Data folder. When I browse to C:\MountCloudW in the filesystem of the CloudDrive host machine, it says it has 4TB free, and no files beyond the Recycle Bin and System Volume Information system folders.

How do I recover the files that have been chunked by CloudDrive, and are sitting in the Cloud Share Data folder? The attachment and metadata files are still there too, along with 32999 100MB part1-chunk-###.dat files.
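As a quick sanity check on the numbers in this post, the chunk count and chunk size can be multiplied out. This is only a sketch: it assumes each "100MB" chunk file is 100 MiB and that the "3.15TB" figure is reported in binary units, neither of which the post confirms.

```python
# Figures taken from the post above.
CHUNK_COUNT = 32999
# Assumption: each "100MB" chunk file is 100 MiB (100 * 1024**2 bytes).
CHUNK_SIZE_BYTES = 100 * 1024**2

total_bytes = CHUNK_COUNT * CHUNK_SIZE_BYTES
total_tib = total_bytes / 1024**4   # binary terabytes (TiB)
total_tb = total_bytes / 1000**4    # decimal terabytes, for comparison

print(f"{total_tib:.2f} TiB (binary), {total_tb:.2f} TB (decimal)")
```

Under those assumptions the chunk files add up to roughly 3.15 TiB, which is consistent with the 'Cloud used' figure. That suggests the chunk data is all present on W:\, but it says nothing about whether the mounted volume's file system still references it.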

- 1 reply
- Tagged with: clouddrive, recovery (and 1 more)

-

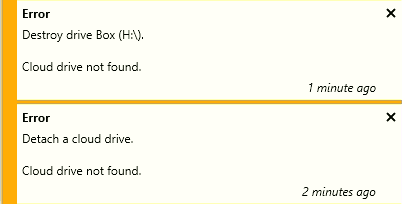

I cannot even mount any drives with pending data at this point. This is in the service log, and on this and other machines it just keeps getting longer (I think it was up to several hours at one point). Do you know if there have been any changes at Box? Please advise:

0:01:55.1: Information: 0 : [ServiceStoreSynchronization] Initial synchronization complete.
0:01:55.1: Information: 0 : Adding firewall rule 'StableBit Cloud - UDP (CloudDrive.Service.exe)'.
0:01:55.2: Information: 0 : [Main] Starting notifications...
0:01:55.3: Information: 0 : [Main] Starting BitLocker...
0:01:55.4: Information: 0 : [Main] Enumerating disks...
0:01:55.5: Information: 0 : [SignalClient.Controller] Connected to 173.68.147.210:34160.
0:01:55.5: Information: 0 : [ServiceStoreSynchronizationSignal] [signal] Connected to Signal server (173.68.147.210:34160).
0:01:56.0: Information: 0 : [ServiceStoreSynchronization:27] [change-tracking] Set CloudServiceInfo on cloud (Result=Saved, LocalCloudRevision=, CloudRevision=1)
0:01:56.0: Information: 0 : [ServiceStoreSynchronization:27] [change-tracking] SetBatch on cloud (T=00:00:00.1781305)
0:01:56.1: Information: 0 : [ServiceStoreSynchronization:27] [change-tracking] Set UpdateInfo on cloud (Result=Saved, LocalCloudRevision=187, CloudRevision=188)
0:01:56.1: Information: 0 : [ServiceStoreSynchronization:27] [change-tracking] Set UpdateInfo on cloud (Result=Saved, LocalCloudRevision=277, CloudRevision=278)
0:01:56.1: Information: 0 : [ServiceStoreSynchronization:27] [change-tracking] Set UpdateInfo on cloud (Result=Saved, LocalCloudRevision=727, CloudRevision=728)
0:01:56.1: Information: 0 : [ServiceStoreSynchronization:27] [change-tracking] SetBatch on cloud (T=00:00:00.1627609)
0:01:56.1: Information: 0 : [ServiceStoreSynchronizationSignal:18] [signal] Cloud storable 0x3ab8b3c3d6c5fac1070e41136c9c21548f67b485 changed (CloudRevision=278).
0:01:56.2: Information: 0 : [ServiceStoreSynchronizationSignal:25] [signal] Cloud storable 0x6fcdb1ee9ababd38601c473df38c947206b7daab changed (CloudRevision=188).
0:01:56.2: Information: 0 : [ServiceStoreSynchronizationSignal:5] [signal] Cloud storable 0x60674b24d33d720f08a81e39f1eac17b56dd8b4a changed (CloudRevision=728).
0:02:02.6: Information: 0 : [Disks] Updating disks / volumes...
0:02:03.4: Information: 0 : [Main] Updating free space...
0:02:03.4: Information: 0 : [Main] Service started.
0:02:03.4: Information: 0 : [Main] Synchronizing cloud drives...
0:02:05.4: Information: 0 : [CloudDrives] Synchronizing cloud drives...
0:02:05.5: Information: 0 : [CloudDrives] Valid encryption key specified for cloud part 3b9fdbb9-b92f-43e2-a148-f6b32737475c.
0:02:07.0: Information: 0 : [ServiceStoreSynchronization:46] [change-tracking] Set CloudProviderInfo on cloud (Result=Saved, LocalCloudRevision=, CloudRevision=1)
0:02:07.0: Information: 0 : [ServiceStoreSynchronization:46] [change-tracking] Set CloudDriveInfo on cloud (Result=Saved, LocalCloudRevision=, CloudRevision=1)
0:02:07.0: Information: 0 : [ServiceStoreSynchronization:46] [change-tracking] Set CloudDiskStatisticsInfo on cloud (Result=Saved, LocalCloudRevision=, CloudRevision=1)
0:02:07.0: Information: 0 : [ServiceStoreSynchronization:46] [change-tracking] Set CloudDriveStatusInfo on cloud (Result=Saved, LocalCloudRevision=, CloudRevision=1)
0:02:07.0: Information: 0 : [ServiceStoreSynchronization:46] [change-tracking] Set CloudMountingInfo on cloud (Result=Saved, LocalCloudRevision=, CloudRevision=1)
0:02:07.0: Information: 0 : [ServiceStoreSynchronization:46] [change-tracking] Set CloudUnmountableInfo on cloud (Result=Saved, LocalCloudRevision=, CloudRevision=1)
0:02:07.0: Information: 0 : [ServiceStoreSynchronization:46] [change-tracking] SetBatch on cloud (T=00:00:00.3990282)
0:02:13.8: Warning: 0 : [ApiBox] Server is throttling us, waiting recommended 1,716,000ms and retrying.
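To put the final warning in perspective, the recommended wait can be pulled out of the log line and converted to minutes. This is a minimal sketch: the regular expression is written against the one warning quoted above and is an assumption about the message format, not a documented CloudDrive log schema. The helper name `throttle_wait_ms` is hypothetical.

```python
import re

# The throttling warning exactly as it appears in the service log above.
LOG_LINE = (
    "0:02:13.8: Warning: 0 : [ApiBox] Server is throttling us, "
    "waiting recommended 1,716,000ms and retrying."
)

def throttle_wait_ms(line):
    """Return the recommended wait in milliseconds, or None if no wait is present."""
    match = re.search(r"waiting recommended ([\d,]+)ms", line)
    if match is None:
        return None
    # Strip the thousands separators before converting to an integer.
    return int(match.group(1).replace(",", ""))

wait_ms = throttle_wait_ms(LOG_LINE)
wait_minutes = wait_ms / 60_000
print(f"{wait_ms} ms is {wait_minutes:.1f} minutes")
```

For this log line the wait works out to 28.6 minutes, so a drive that cannot mount until the retry succeeds will appear stuck for about half an hour per attempt.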

- 2 replies
- Tagged with: clouddrive, box (and 1 more)

-

Hi there, is it possible to use a DrivePool made up of an SSD (1TB) and an HDD (10TB) as the cache drive for a CloudDrive? I would love to use the SSD Optimizer plugin with DrivePool to create a fast and large cache drive. This would give the best of both worlds: newer and more frequently used files would be stored on the SSD, older files would slowly be moved to the HDD, and you would only need to download from the CloudDrive when accessing an older file that is not in the cache. Is this possible?

- 8 replies
- Tagged with: clouddrive, drivepool (and 1 more)

-

Hey all, I tried searching Google and the forum for a solution to this, but no dice. I've got CloudDrive set up on one PC, but I'm trying to migrate it to another machine. I deactivated CloudDrive on machine 1 and activated it on machine 2, and also authorized Google Drive on machine 2. The problem is that it does not load the settings from the previous machine's install with its attached drives. I tried to move all 4 (cloudpart.*) drives, which are shown as 60TB apiece (though around 2GB on disk), with no luck. I'm hoping someone here has a solution so I can get these drives working on machine 2 and finally power down machine 1 to save energy and wear and tear. Thanks!

- 2 replies
- Tagged with: migrating, clouddrive (and 1 more)

-

I have been using CloudDrive and DrivePool with Google Drive, but my reads go up to 9 MB/s and then drop to 1 MB/s, which won't allow me to play UHD ISO files. I have an expandable cache on 16TB EXO drives. Does anyone have any advice?

-

Avira is telling me CloudDrive.UI.exe contains TR/Dropper.MSIL.Gen2. Is it telling me the truth? This is the installer file I am using: StableBit.CloudDrive_1.0.0.403_x64_BETA.msi

-

I am a complete noob and forgetful on top of everything. I've had one pool for a long time, and last week I tried to add a new pool using CloudDrive. I added my six 1TB OneDrive accounts in cloudpool and I may or may not have set them up in DrivePool (that's my memory for you). Whatever I did, I did not use DrivePool to combine all six CloudDrives into a single 6TB drive, and I ran out of space: something was duplicating data from one specific folder in my pool to the CloudDrive. So I removed the CloudDrive accounts, added them back, and this time did use DrivePool to combine the six accounts into one drive. Without my doing anything else, data from the one folder I had, and want, duplicated to the CloudDrive started duplicating again, but I don't know how, and I'd like to know how I did that. I've read the nested pools and hierarchical duplication topics, but I'm sure that's not something I set up, because it would have been too complicated for me. Is there another, simpler way that I might have made this happen? I know this isn't much information to go on and I don't expect anyone to dedicate a lot of time to this, but I've spent yesterday and today trying to figure out what I did and I just can't. I've checked folder duplication under the original pool, and the folder is 1x because I don't want it duplicated on the same pool, so I assume that's not what I did.

- 2 replies
- Tagged with: clouddrive, drivepool (and 1 more)

-


Hey, I've set up a small test with 3 physical drives in DrivePool: 1 SSD and 2 regular 4TB drives. I'd like a setup where these three drives can be filled to the brim and any contents are duplicated only on a fourth drive: a CloudDrive. No regular writes or reads should go to the CloudDrive; it should only function as parity for the 3 drives. Am I better off making a separate CloudDrive and scheduling an rsync to mirror the DrivePool contents to it, or can this be done with a DrivePool (or DrivePools) + CloudDrive combo? I'm running the latest beta for both. What I tried so far didn't work too well: some files I was moving were immediately being written to the parity drive, even though I set it to only contain duplicated content. I got that to stop by going into File Placement and unticking the parity drive for every folder (but this is an annoying thing to have to maintain whenever new folders are added).

- 26 replies
- Tagged with: drivepool, clouddrive (and 1 more)

-

Reading through these steps I originally missed the tidbit at the end: So, to be clear, does this mean I am unable to switch an existing drive to use my newly set up API keys? I went through this process 3 or 4 days ago, and when I signed back into the Google Cloud Console just now, the API monitoring sections showed no usage, which is what triggered me to go and reread the document. I am looking to confirm whether the changes made in ProviderSettings.json will not kick in until I make a NEW DRIVE connection, and what that means for a drive that was mounted/connected BEFORE going through this process. Basically, is there a way to use my keys with a drive that was originally set up using the application's embedded pool of API keys, or will I have to create a new drive and transfer everything from one to the other? Forgive me if this seems like a stupid question. I know enough to be dangerous, so I cannot imagine why I would have to go through a transfer process, but that could just be my ignorance showing. Thanks in advance all!

- 2 replies
- Tagged with: googledrive (and 2 more)

-

I have a 500GB SSD dedicated to StableBit CloudDrive. The cloud drive is a Google Drive. I have the cache set at 470GB. I'll copy over, say, 350GB(ish) at a time. Once it has finished uploading, I clear the cache, and when StableBit tells me there's nothing in "local", I go to transfer another 350GB. I then get an I/O error in StableBit, and the transfer between hard drives slows or stops, because 350 + 350 = 700, which is more than the 470GB cache. But I cleared the cache, so the first 350GB should be gone, and I should have that space back for the next set of 350GB. Makes sense? Instead I end up having to "detach drive" and then reattach it every single time I want to do transfers like this. I feel like I am doing something wrong, or am missing a setting or something. I shouldn't have to detach the drive every time like this; it makes no sense. Any suggestions would be amazing, thank you.

-

Hello, I am in need of help in this matter: I had a 975 GB cloud drive set up on my OneDrive for Business account and I deleted some old files then uploaded new ones. Then in the Storage Metrics on OneDrive I noticed that the size of the cloud drive folder reached 993 GB so I paused uploading immediately. I then resized the drive to 900 GB to try to fix the problem, it resized correctly and cleaned up except that my Storage Metrics page is still showing 993 GB used! Hopefully there is something I can do to reduce the size because I'm only actually using ~700 GB of the space and whenever I upload more the size of the cloud drive folder just goes up. Is there anything I can do without just deleting the whole thing and reuploading everything? I have attached some screenshots to make things clearer. Thanks.

-

Hello, I am going to explain what I intend to do. I have a Google Drive account with 40 TB and a Synology NAS with 60 TB. The NAS just broke down beyond repair. It is my intention to have a 40 TB CloudDrive on my PC, seen by my Windows machine as a local drive. Is it possible to have a drive that large? How would I then transfer my Google Drive data to the CloudDrive? Could I just move the data within Google Drive and have it updated on my Windows machines? Would a program like Sonarr see this CloudDrive as a local disk? If that is possible, I would use my HD with DrivePool as a backup of the cloud (what program would you recommend?). TIA

-

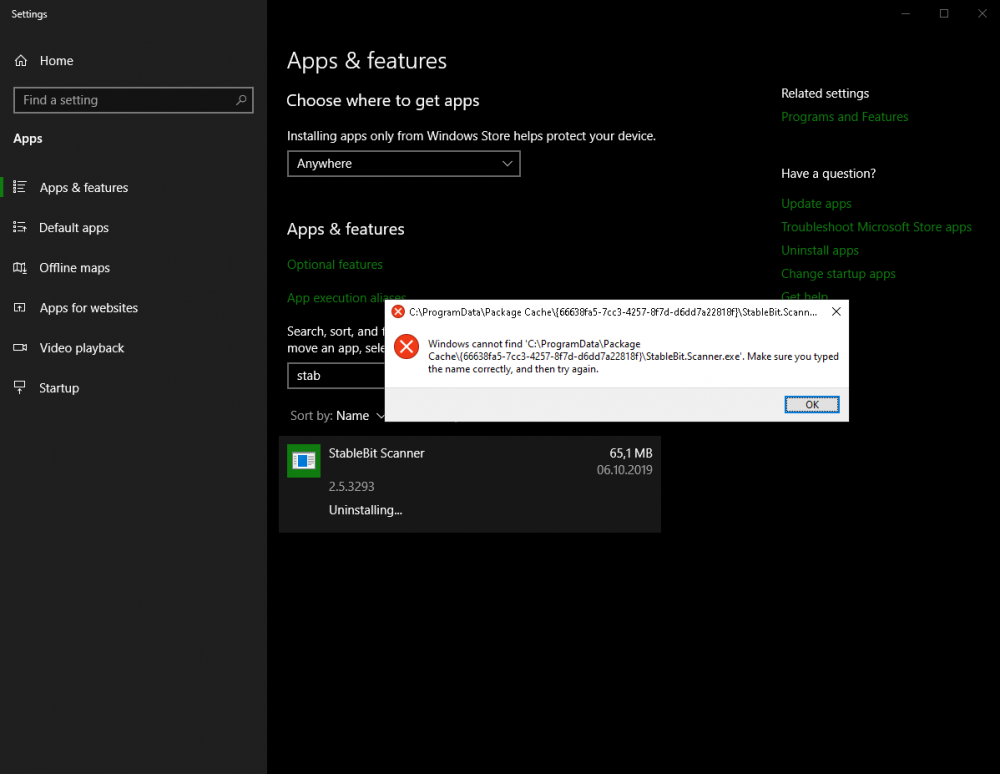

So I installed CloudDrive, then Scanner, then messed with both (both worked fine), then uninstalled CloudDrive, tried to uninstall Scanner, and got this.

- c:\Program Files\StableBit\CloudDrive\Data\ReadModifyWriteRecovery\ still has some *.dat files (will remove manually)
- c:\Program Files (x86)\StableBit\Scanner\* still has binaries in there (so it is not actually uninstalled)

- 2 replies
- Tagged with: uninstall, clouddrive (and 1 more)

-

Is Apple's iCloud storage service something that is on the development team's radar for future support in clouddrive?

-

Curious if there is a way to put the CloudDrive folder, which defaults to: (root)/StableBit CloudDrive, in a subfolder? Not only for my OCD, but in a lot of instances, google drive will start pulling down data and you would have to manually de-select that folder per machine, after it was created, in order to prevent that from happening. When your CloudDrive has terabytes of data in it, this can bring a network, and machine to its knees. For example, I'd love to do /NoSync/StableBit CloudDrive. That way, when I install anything that is going to touch my google drive storage, I can disable that folder for syncing and then any subfolder I create down the road (such as the CloudDrive folder) would automatically not sync as well. Given the nature of the product and how CloudDrive stores its files (used as mountable storage pool, separate from the other data on the cloud service hosting the storage pool AND not readable by any means outside of CloudDrive), it seems natural and advantageous to have a choice of where to place that CloudDrive data. Thanks, Eric

-

Hello, I have had DrivePool for about a year now and have just installed a second copy on another physical machine. Each machine has ~12TB of data on it. Is it possible to use CloudDrive to put the two pools together if data already exists on each? I am thinking of creating a 1TB cloud drive on machine ONE, pointing it to a Windows share on machine TWO. Then I want to add the CloudDrive to the pool on machine ONE. Will that show me the existing data from TWO in the pool on ONE? Can the CloudDrive be smaller than the data on TWO? Thanks for any help you can provide! TD

- 6 replies
- Tagged with: clouddrive, drivepool (and 2 more)

-

Hello everyone! I plan to integrate DrivePool with SnapRAID and CloudDrive and require your assistance regarding the setup itself. My end goal is to pool all data drives together under one drive letter for ease of use as a network share, and also to have it protected from failures both locally (SnapRAID) and in the cloud (CloudDrive).

I have the following disks:

- D1 3TB, D: drive (data)
- D2 3TB, E: drive (data)
- D3 3TB, F: drive (data)
- D4 3TB, G: drive (data)
- D5 5TB, H: drive (parity)

Action plan:

- create LocalPool X: in DrivePool with the four data drives (D-G)
- configure SnapRAID with disks D-G as data drives and disk H: as parity
- do an initial sync and scrub in SnapRAID
- use Task Scheduler (Windows Server 2016) to perform daily syncs (SnapRAID.exe sync) and weekly scrubs (SnapRAID.exe -p 25 -o 7 scrub)
- create CloudPool Y: in CloudDrive, 30-50GB local cache on an SSD to be used with G Suite
- create HybridPool Z: in DrivePool and add X: and Y:
- create the network share to hit X:

Is my thought process correct in terms of protecting my data in the event of a disk failure or disks going bad (I will also use Scanner for disk monitoring)? Please let me know if I need to improve the above setup or if there is something that I am doing wrong. Looking forward to your feedback, and thank you very much for your assistance!
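The two scheduled SnapRAID jobs in the action plan could be wrapped in a small script for Task Scheduler to call. This is only a sketch under assumptions: it assumes the `snapraid` executable is on PATH, the function names are hypothetical, and it defaults to a dry run so nothing is executed until that is switched off. The `sync` and `scrub` commands and the `-p` / `-o` options are the ones quoted in the post.

```python
import subprocess

# Assumption: the SnapRAID executable is reachable as "snapraid" on PATH.
SNAPRAID = "snapraid"

def sync_command():
    """Command for the daily sync from the action plan."""
    return [SNAPRAID, "sync"]

def scrub_command(plan_percent=25, older_than_days=7):
    """Command for the weekly scrub: check 25% of blocks older than 7 days."""
    return [SNAPRAID, "-p", str(plan_percent), "-o", str(older_than_days), "scrub"]

def run(command, dry_run=True):
    """Print the command in dry-run mode, otherwise execute it and return its exit code."""
    if dry_run:
        print("would run:", " ".join(command))
        return 0
    return subprocess.run(command, check=False).returncode

run(sync_command())
run(scrub_command())
```

Keeping the command construction separate from execution makes it easy to check what Task Scheduler would run before pointing it at real disks.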

- 1 reply
- Tagged with: drivepool, clouddrive (and 1 more)

-

I suspect the answer is 'No', but have to ask to know: I have multiple gsuite accounts and would like to use duplication across, say, three gdrives. The first gdrive is populated already by CloudDrive. Normally you would just add two more CloudDrives, create a new DrivePool pool with all three, turn on 3X duplication and DrivePool would download from the first and reupload to the second and third CloudDrives. No problem. If I wanted to do this more quickly, and avoid the local upload/download, would it be possible to simply copy the existing CloudDrive folder from gdrive1 to gdrive2 and 3 using a tool like rclone, and then to attach gdrive2/3 to the new pool? In this case using a GCE instance at 600MB/s. Limited of course by the 750GB/day/account. And for safety I would temporarily detach the first cloud drive during the copy, to avoid mismatched chunks.
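The two limits mentioned in this post (600MB/s on the GCE instance, 750GB/day/account) can be combined to see which one dominates. This is a rough sketch: it assumes decimal units, and the 10TB drive size in the example is purely hypothetical, since the post does not say how large the existing CloudDrive is.

```python
import math

DAILY_CAP_GB = 750      # per-account daily limit quoted in the post
COPY_SPEED_MB_S = 600   # copy speed quoted in the post

def seconds_until_cap(cap_gb=DAILY_CAP_GB, speed_mb_s=COPY_SPEED_MB_S):
    """How long a full-speed copy runs before the daily cap is reached."""
    return cap_gb * 1000 / speed_mb_s

def days_to_copy(total_gb, cap_gb=DAILY_CAP_GB):
    """Whole days needed per destination account when only the cap is limiting."""
    return math.ceil(total_gb / cap_gb)

print(f"cap reached after {seconds_until_cap() / 60:.1f} minutes at full speed")
# Hypothetical example size: a 10 TB (10,000 GB) CloudDrive folder.
print(f"{days_to_copy(10_000)} days to copy 10 TB into one account")
```

At these figures the daily cap is hit in about 21 minutes, so the cap rather than the link speed sets the overall copy time.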

- 2 replies
- Tagged with: duplicate, duplication (and 3 more)

-

How do I get CloudDrive to forget my encryption key after a reboot?

blaineeasy posted a question in General

I must have accidentally checked the box that stores the encryption key because now CloudDrive mounts my drive when the machine boots up (even after a full shutdown). How do I tell it to ask for my key again at startup? Thanks.

- 1 reply
- Tagged with: encryption, reboot (and 3 more)

-

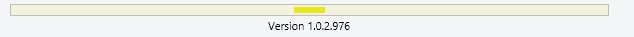

Hello, I am on version 1.0.2.976 and I tried to update to the newest version (1.1.0.1030). As you can see in the screenshot, the displayed version isn't updating correctly. I tried to install it again with admin rights, but that isn't possible because the installer only lets me choose "repair" or "uninstall". Can I uninstall CloudDrive and install it again without losing my settings? It's not only the version number that isn't updating: I'm also missing some features that are present on my 2nd PC (auto retry when a drive gets disconnected, etc.). OS: Win Server 2012 (up to date). No security solution or firewall installed. Regards

- 5 replies
- Tagged with: cloud, clouddrive (and 7 more)

-

So I'm a bit confused by the HybridPool concept. I have a LocalPool with about 6 hard drives totalling 30TB. I have about 25TB populated, and with this new hybrid concept I'm trying to find out whether I can use another pool (a CloudPool) for duplication. The part I'm a little confused about is that when I create a HybridPool from my CloudPool and my LocalPool, I have to add all 25TB of my data to the new HybridPool drive. On the LocalPool, all my data appears in the "Other" section. Do I really have to transfer all the data from the LocalPool to the HybridPool, or am I missing something? Thanks

- 1 reply
- Tagged with: hybridpool, clouddrive (and 2 more)

-

Hello! I would like to ask: what are the best settings for CloudDrive with Google Drive? I have the following setup and problems.

I use CloudDrive version 1.0.2.975 on two computers. I have one Google Drive account with 100TB of available space.

When I upload data to the drive, the upload speed is perfect. On the first Internet line I get an upload speed of nearly 100 MBit/sec. On the second Internet line (the slower one) I also get full upload speed (20 MBit/sec).

When I try to download something, the download speeds are incredibly slow. On the first line I have a download speed of 3 MB/sec. It should be 12.5 MB/sec (max speed of the line). On the second line I have a download speed of 5 MB/sec. It should be 50 MB/sec (max speed of the line).

I already set the upload/download threads to 12 on both computers. I also set the minimum download size to 10.0 MB in the I/O Performance settings.

What are the best settings to increase the download speed? Thank you very much in advance for any help.

Best regards, Michael
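The post mixes MBit/sec and MB/sec, so it helps to put the figures on one scale. The sketch below uses only the numbers quoted above and the standard 8 bits per byte; the function names are hypothetical.

```python
def mbit_to_mbyte(mbit_per_s):
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8

def utilisation(actual_mb_s, max_mb_s):
    """Fraction of the line's maximum speed actually achieved."""
    return actual_mb_s / max_mb_s

# A 100 MBit/sec line tops out at 12.5 MB/sec, matching the post's figure.
print(f"100 MBit/sec = {mbit_to_mbyte(100)} MB/sec")

# Observed vs. maximum download speeds from the post, in MB/sec.
downloads = {"first line": (3.0, 12.5), "second line": (5.0, 50.0)}
for name, (actual, maximum) in downloads.items():
    print(f"{name}: {utilisation(actual, maximum):.0%} of line speed")
```

On these figures the first line reaches 24% of its download capacity and the second only 10%, while uploads on both lines are reported as running at full speed.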

-

I just wanted to drop a note here on the community forum for folks who may be considering StableBit Cloud Drive and are researching the support forum prior to making a purchasing decision. I've had questions on two occasions since I've been a license holder that led me to open support cases for assistance. Both times I received prompt, personal and highly knowledgeable engagement from support. For such a cutting edge (in my mind) product, that is so affordable (in my opinion), I couldn't ask for a better support system. The forums here are great too. My two cents -- thanks team for a great product backed by a great staff.

-

I have a 10TB expandable drive hosted on Google Drive with a 2TB cache. Whenever I add a large amount of data to the drive (for example, I added 1TB yesterday), CloudDrive will occasionally stop all read and write operations to do "metadata pinning". This prevents Plex from doing anything while it does its thing, and it took over 3 hours yesterday. I don't want to disable metadata pinning, but I would like to be able to schedule it, if necessary, for 3AM or something like that. In the meantime, is there a drawback to having such a large local cache? Would it improve operations like metadata pinning and crash recovery if I decreased it?

- 13 replies
- Tagged with: plex, clouddrive (and 2 more)