red

red last won the day on January 11 2018

red had the most liked content!

Google Drive + Read-only Enforced + Allocated drive

red replied to fjellebasse's question in Providers

No, I could not, as it was part of a DrivePool and CloudDrive wouldn't let me. I just paused the uploads (they unpaused a few times by themselves, though). I was able to get all data out and have now removed the disks.

red reacted to an answer to a question:

Google Drive + Read-only Enforced + Allocated drive

-

Google Drive + Read-only Enforced + Allocated drive

red replied to fjellebasse's question in Providers

Thanks for the added info. I'll add for anyone else reading this that everything seems to work just fine even though Google itself has marked the drive read-only. I've now set balancing rules so that the LocalPool can contain unduplicated and duplicated data, while the CloudPool may only contain duplicated data. Triggering "Balance" after that change has continued the process of moving files from cloud to local. So far in that mode, I've been able to move a bit over 3TB, with around 4TB to go 👍

Google Drive + Read-only Enforced + Allocated drive

red replied to fjellebasse's question in Providers

I'm going to be hit by this issue on the 28th of August myself, and I was planning to set the drive read-only a few days before. To my dismay, once I tried it, StableBit CloudDrive notified me that "A cloud drive that is part of a StableBit DrivePool pool cannot be made read-only." I've been downloading data (that's only stored in the cloud) for four weeks now, but Google is giving me around 40-50 Mbit/s downstream, and at this pace I'm still fifteen days shy of grabbing all my data. It's almost as if they anticipated people attempting to migrate away from their ecosystem. Before I started the process, I would easily hit 500-600 Mbit/s with multiple threads. Now I'm wondering whether I just need to take the scary route of hitting "Remove disk" and letting DrivePool handle fetching any unduplicated data from CloudPool to LocalPool. Does anyone know how exactly "Duplicate files later" works in conjunction with a CloudPool?
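For anyone trying to judge whether a download will finish before a deadline like this, here is a rough back-of-the-envelope sketch. The 10 TB figure is purely a hypothetical placeholder (the post does not say how much data was left), and the function name is made up for illustration; only the rates come from the post.

```python
def transfer_days(size_tb: float, rate_mbit_s: float) -> float:
    """Days needed to move size_tb terabytes (decimal, 10**12 bytes)
    at a sustained rate of rate_mbit_s megabits per second."""
    bits = size_tb * 1e12 * 8            # terabytes -> bits
    seconds = bits / (rate_mbit_s * 1e6)  # megabits/s -> bits/s
    return seconds / 86400               # seconds -> days


# Hypothetical amount of data still to download; replace with your own.
REMAINING_TB = 10

# Rates mentioned in the post: throttled (40-50) vs. earlier (500-600).
for rate in (40, 50, 500, 600):
    print(f"{rate} Mbit/s: {transfer_days(REMAINING_TB, rate):.1f} days")
```

This ignores protocol overhead and any pauses, so treat the result as a lower bound on the real time.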

In the past few months, electricity prices have skyrocketed. I've set my PC to sleep automatically after 10 minutes of idle time. I use wake-on-lan to wake my PC up so that Plex is available when I want to watch stuff. My library consists of 20TB of local data and around 40TB of cloud data. I've set my CloudDrive cache at 500GB on a dedicated SSD. This means that when I want to watch a series that's purely in the cloud, there's ample space to cache whatever I play, and it stays cached for a long time. This setup has served me well for a few years. Cue setting a sleep timer for my PC. No issues. Then I added a smart power plug as well. I remotely tell my PC to shut down, and after 30 minutes, AC power is removed. This rids my setup of any access to electricity. The result is that on most days, when I cold boot my PC, the drive decides to undergo recovery. I'm not sure why, because when I shut down, I don't abort the service, and my PC shuts down at its own leisure. EDIT: Unless the issue is that 30 minutes is not enough time for the CloudDrive service to gracefully shut down? I kind of understand why recovery uploads the cache back to the cloud, but a 500GB upload every few days? Whoa. How can I sort the issue out without turning my cache down to a measly few GBs? The large cache size has really sped things up when Plex decides to do a full folder scan of the complete structure. I have around 25k series files and 2k movie files, plus assorted .srt and .jpg files per folder for metadata.
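For readers unfamiliar with the wake-on-lan part of this setup, here is a minimal sketch of sending a standard magic packet (6 bytes of 0xFF followed by the target MAC address repeated 16 times) over UDP broadcast. The post does not say which tool is actually used, so the function names, the broadcast address, the port and the example MAC below are all assumptions for illustration.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet for a MAC like '00:11:22:33:44:55'."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected a 6-byte MAC address, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    # 6 x 0xFF synchronisation bytes, then the MAC repeated 16 times.
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str,
                      broadcast: str = "255.255.255.255",  # assumed address
                      port: int = 9) -> None:              # 9 is a common choice
    """Broadcast the magic packet on the local network."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(build_magic_packet(mac), (broadcast, port))


# Example call with a placeholder MAC; left commented out on purpose:
# send_magic_packet("00:11:22:33:44:55")
```

Note that wake-on-lan generally needs the network card to keep standby power, so it can wake a sleeping PC but not one whose AC power has been cut by a smart plug.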

-

red reacted to a post in a topic:

Is dark mode planned for DrivePool & CloudDrive?

-

Simple question. Been hoping to get dark mode support for ages so I finally thought to toss the question here as well.

-

red reacted to an answer to a question:

How to see what specific data is being written into cloud at the moment?

-

I thought I had found the issue by sorting a Windows Search of the Cloud Drive contents by latest changes: there were a few thousand small changed files that should have been going to another drive entirely. I fixed that, and I see no actual changes in the data for the past 30 minutes. Yet the upload counter is sitting at around 50MB now and pushing upload at 40 Mbit/s. The upload size left seems to be decreasing very slowly though, about one megabyte a minute. I'll continue investigating. Thanks for the info about the block level stuff, makes sense. This is most likely a mistake by yours truly.

-

I'm seeing some weird constant uploading, while "to upload" is staying at around 20 to 30MB. I tried looking through the technical details, toggling many things to verbose in the service log and looking at Windows Resource Monitor, but all I can see are the chunk names. So is there something I can enable to see exactly which files are causing the I/O? Edit: I was able to locate the culprit by other means, but I'll leave the thread up since I'm interested to know whether what I asked is possible via Cloud Drive.

-

Updated to the latest beta and I'm seeing the same thing. I've filed a bug report now.

-

Quick details: 2x Cloud Drives = CloudPool; 5x Storage Drives = LocalPool; CloudPool + LocalPool = HybridPool. The only balancer enabled on HybridPool is the usage limiter, which forces unduplicated files to be stored in the cloud. I recently upgraded my motherboard and CPU, and upgraded to 2.2.4.1162. Today I noticed that if I disable read striping, no video files opened via Windows File Explorer (or other means) from the HybridPool play at all. The file appears in DrivePool's "Disk Performance" section for a moment with a 0 B/s read rate. If I open a small file, like an image, it works. If I enable read striping, the file is primarily read from LocalPool as expected, but I never want it to be read from the cloud if it exists locally, and sometimes under a bit of load, file playback from the cloud is triggered. I'm at my wits' end as to how to debug or sort this issue out by myself. Any help would be greatly appreciated. Edit: new beta fixed this

-

chaostheory reacted to a question:

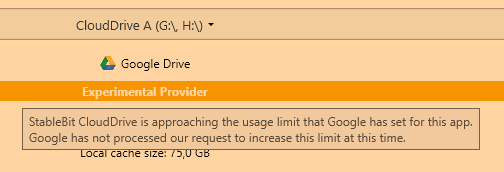

Google Drive is suddenly marked as experimental provider?

-

-

Just out of interest, why do you split the Cloud drive into multiple volumes?

-

To briefly return to this topic: if I set Plex to read from HybridPool, the scanning of my library takes well over 2 hours. If I only point Plex to LocalPool, it's over in a couple of minutes. So something is causing the drive to let Plex chug via CloudDrive instead of just getting it done fast via LocalPool, maybe the amount of read requests Plex is generating? Any ideas how I could debug why it's doing so?

-

I've had to restart the service daily now. I'm now running the troubleshooter before restarting the service yet again. I think I'll need to schedule automatic restarts for it every 12 hours or so if this keeps up. Since the last restart, I was able to upload 340GB successfully (100 Mbit/s up).

-

I'm still experiencing this on 1.1.2.1177. I wiped my cloud drive and started from scratch on 1174 a couple of weeks ago, and have so far uploaded 9TB of data, but this issue arose again two days ago. After restarting my PC yesterday the uploads continued, until today they got stuck with I/O errors again after half a TB or so. Instead of a reboot I attempted to restart the CloudDrive service, and now it's uploading again.

-

I was finally able to fix the situation by detaching the cloud drive, then removing the cloud drive from the pool, rebooting, and re-attaching and adding it to the pool. The erroneous 0 KB files that could not be accessed are now gone.