Philmatic

Members

Posts: 26

Days Won: 1

Philmatic last won the day on October 18 2014

Philmatic had the most liked content!

Philmatic's Achievements

Member (2/3)

Reputation: 3

Philmatic started following VMWare NVMe Driver - bad data and Why isn't Drivepool smarter about removing drives?

-

I am in the process of migrating some drives from one server with a DrivePool pool to another server with ZFS. The current drive layout is 4x 10TB, 4x 8TB and 24x 3TB. I needed to pull the four 10TB drives so I could move them over to the other server. I didn't have enough free space to remove 40TB straight up, so I had to disable duplication.

My first grumble: if I'm removing drives and I don't have enough space, I have to disable duplication first. That frees up the space, but it does so in an indiscriminate way, removing some duplicated files from the drives I want to keep and keeping some on the drives I plan to remove. So when I remove the drives, it has to move those files from the drives I'm removing to the drives I'm keeping. I understand this is how it is supposed to work, but I am suggesting an enhancement. If these two steps could be combined somehow, it could be smart enough to disable duplication but remove the duplicates from the drives I'm removing. Then removing the drives should be instantaneous.

My second grumble, somewhat related to the first: removing drives in batches isn't smart enough to realize that there are other drives in the removal queue. When I am removing drives, it moves data from the drive I am removing to the drive that's queued up to be removed next, doubling and tripling the movement of files. If the remove process were a tad bit more intelligent, it would know not to move files to drives that are already in the removal queue.

Other than that, I've been a loyal customer since the early days (1/2012). Love the product, and the simplicity of the product. I wish it did more, but I'm not complaining (except my two complaints above, of course! :))
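To make the two suggestions concrete, here is a rough Python sketch of the planning logic I have in mind. It is not how DrivePool works internally; the function name `plan_drain`, the drive names, and the data layout are all made up for illustration. The idea: when duplication is turned off, keep the copy that already sits on a drive that is staying, and when a file has to be moved, only ever pick a destination that is not in the removal queue.

```python
def plan_drain(copies, sizes, free, removal_queue):
    """Plan how to empty the queued drives with as little copying as possible.

    copies: file name -> set of drive names holding a copy (hypothetical layout)
    sizes:  file name -> size in TB
    free:   drive name -> free space in TB
    Returns (deletes, moves): deletes is a list of (file, drive),
    moves is a list of (file, source, destination).
    """
    queued = set(removal_queue)
    # Only drives that are staying may receive data.
    free = {d: f for d, f in free.items() if d not in queued}
    deletes, moves = [], []

    for name in sorted(copies):
        holders = copies[name]
        kept = sorted(holders - queued)
        if kept:
            # A copy already lives on a drive that is staying: keep that one
            # and drop the rest. Nothing has to be moved.
            survivor = kept[0]
            deletes += [(name, d) for d in sorted(holders) if d != survivor]
            for d in kept[1:]:
                free[d] += sizes[name]
            continue

        # Every copy is on a queued drive: move one copy exactly once,
        # to the staying drive with the most free space.
        dest = max(free, key=lambda d: (free[d], d))
        if free[dest] < sizes[name]:
            raise RuntimeError(f"not enough free space to place {name}")
        src = sorted(holders)[0]
        moves.append((name, src, dest))
        free[dest] -= sizes[name]
        deletes += [(name, d) for d in sorted(holders) if d != src]

    return deletes, moves


# Made-up example: two 10TB drives are queued for removal.
copies = {
    "a.mkv": {"10TB-1", "3TB-1"},   # already has a copy on a staying drive
    "b.mkv": {"10TB-1", "10TB-2"},  # both copies are on queued drives
    "c.mkv": {"10TB-2"},            # single copy on a queued drive
}
sizes = {"a.mkv": 1.0, "b.mkv": 2.0, "c.mkv": 0.5}
free = {"10TB-1": 0.0, "10TB-2": 0.0, "3TB-1": 1.0, "3TB-2": 2.5}

deletes, moves = plan_drain(copies, sizes, free, ["10TB-1", "10TB-2"])
for name, src, dest in moves:
    print(f"move {name}: {src} -> {dest}")
```

In this toy layout only the files with no copy on a staying drive get moved, and each one is moved a single time. A real implementation would also have to respect balancer rules and placement limits, which this sketch ignores.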

-

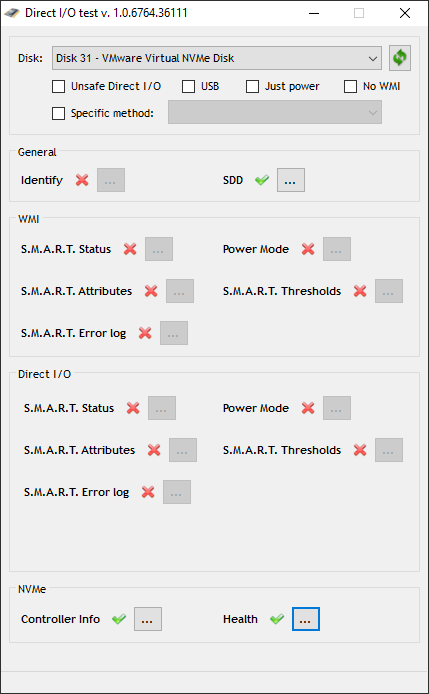

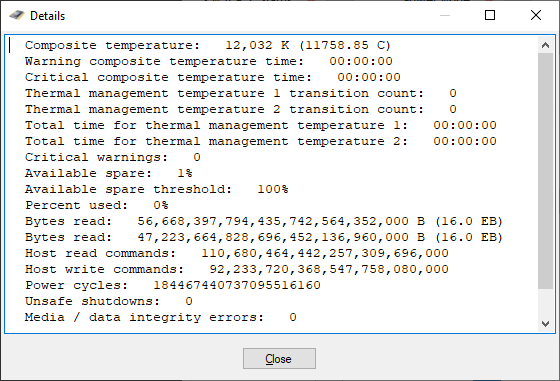

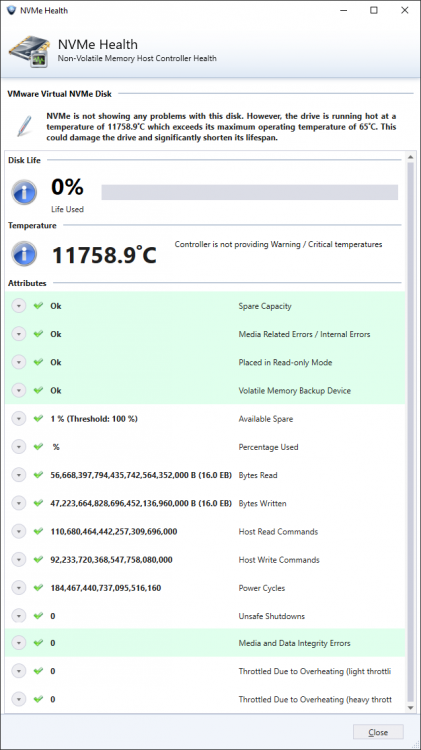

I'm running a Windows Server 2019 VM on VMware ESXi 6.7.0. The host is a dual-processor E5-2670 v1 whitebox. The VMware datastore is an NVMe disk (HP EX920 512GB) and the guest VM is using the VMware virtual NVMe controller device driver. The SMART data coming from the virtual NVMe driver is... interesting. I'm not expecting it to work, I just figured Alex may want some debug data so it can be fixed. Attached are my test details.

-

Are you asking if DrivePool is compatible on a Hyper-V guest running on a Hyper-V host? If so, yes. I run it this way and it works fine; you just have to pass each disk through individually and the guest OS (with DrivePool) sees it just fine. In fact, I went from a full physical setup on Server 2012 to a virtualized Server 2019 on Hyper-V, passed all the disks through (it was a huge pain, not being able to pass through controllers is a huge PITA, VMware FTW here) and DrivePool picked up the entire pool (32 disks) within a minute or so, and that was it.

Or are you asking if DrivePool is compatible with the Hyper-V hypervisor itself (either in Hyper-V Server or Server Core with the Hyper-V role), as in you want to pool drives inside the hypervisor and present them as large disks to guests? I've tried this, and it DOES work, but it's not a good idea. DrivePool is not a traditional RAID technology and isn't well suited for large random writes, so it's just not recommended. It's best to pass the disks through individually and let DrivePool on the guest pool everything together.

-

With Windows Server 2016 RTM right around the corner, I am re-evaluating my server software and looking to upgrade whenever possible. Do the improvements in DrivePool to support Windows 10 extend automatically to Windows Server 2016? I would imagine they would considering the shared kernel, but I just wanted to make sure. Has this been tested?

-

gringott reacted to an answer to a question:

The Largest Stablebit Drivepool In The World!!

-

I'm over here sitting on 32.7TB like I'm king of the world, lol.

-

Christopher (Drashna) reacted to an answer to a question:

Migrating from WHS2011 to Windows 8

-

Yep, it really is magical.

-

I wouldn't recommend Alex spend any time fixing this, as even Microsoft have given up on the Windows 8/8.1 way of syncing OneDrive. Windows 10 will use a separate executable that syncs directories, like the client for Windows 7 did, versus using the junction points and symbolic links that Windows 8/8.1 uses. As a user, I MUCH prefer the Windows 8/8.1 way of doing it, but I guess there are issues with it under the hood.

-

The defragger built into Windows Server 2012 can defrag multiple disks at the same time, just select them all like you would select multiple files and hit Optimize. Worked fine for my 24 drive server, it did all 24 at the same time.

-

Alex reacted to an answer to a question:

Drivepool good with torrent activities?

-

Yes, totally. I have a 150 Mbps downstream connection running 50 or so torrents at a time and DP has absolutely no issues with it. I do two things though, to be safe:

1. I disable duplication on the folder I download to; it's just not needed.
2. I increase the cache in uTorrent and tell it to use up to 1GB of my system RAM for write caching.

I have never seen "Disk Overloaded".

-

Yep, I did exactly this. You don't even need to pull the drives, but I do just to be safe. Make sure you don't forget to release the license on the old machine before formatting. Otherwise you will have to open a ticket with support so they can release it on their end.

-

Steps #1, #2, and #3 will work just fine. It's that simple. DrivePool will recognize that your folders are duplicated and will already know what to do.

-

Upgrading (?) Windows 7 > 8.1, how to handle existing pool?

Philmatic replied to montejw360's question in General

That's all you need to do, it'll work exactly like that.

-

I've always done a "Reinitialize disk surface" using Hard Disk Sentinel and it has never failed me; it always forces the hard drive to reallocate the bad sectors. It's not free, but well worth the cost. I expect any software that can write zeros to the entire drive (low-level format) would work too. I have never been able to get chkdsk to fix the sectors.

-

.copytemp files are the temporary files DrivePool creates while it's copying a file. Each one disappears when its file has completed duplication.