fluffybunnyuk


Everything posted by fluffybunnyuk

-

Thanks, I'll open a support ticket, as it adds data to new drives added to the pool correctly, but doesn't remove data from overfilled drives.

-

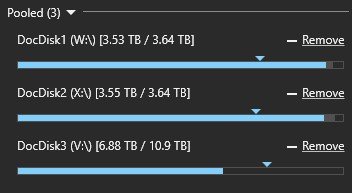

OK, so I disabled all plugins except Disk Space Equalizer, set to 80%. The diamonds that mark the rebalance size move to the right location, i.e. 80%, and then nothing happens:

3:19:03.2: Information: 0 : [FileMover] Nothing to move from source=DocDisk2 (X:\), we're done.
3:21:05.0: Information: 0 : [Rebalance] (ToMoveRatio=0.9503)
3:21:05.0: Information: 0 : [CalculateBalanceRatio] Calculated ratio: 1.0000

I don't think either of those reported ratios is correct. I've restarted, re-measured and re-balanced, I've run with debug mode on, and I've gone through all the logs. For whatever reason it decides that because there's a W/X mirror going on, it's not going to bother moving data out to V. As far as it's concerned, because the mirror is intact, it has no interest in freeing up disk space.

-

Can't for the life of me get the smaller drives to offload data to the big drive. Stuff is W/X mirrored, and it should be moving to a W/V or X/V mirror. I set the equalisation to 80% drive capacity, and stuff doesn't move... it just aggravates me by building a bucket list...

0:35:14.9: Information: 0 : [Rebalance] Unprotected: 0 B / 12,003,767,819,797 B (0.00%), Delta = 0 B, Limit = 1.0
0:35:14.9: Information: 0 : [Rebalance] Protected: 9,208,741,611,396 B / 12,003,767,819,797 B (76.72%), Delta = 1,645,611,121,007 B, Limit = 1.0
0:35:14.9: Information: 0 : [Rebalance] 3d54470a-024d-4d97-8944-860a4e80e6f3 (DocDisk2 (X:\)): Fill = 3,069,219,330,888 B / 4,000,785,100,800 B (76.72%)
0:35:14.9: Information: 0 : [Rebalance] Unprotected: 0 B / 4,000,785,100,800 B (0.00%), Delta = 0 B, Limit = 1.0
0:35:14.9: Information: 0 : [Rebalance] Protected: 2,935,598,234,456 B / 4,000,785,100,800 B (73.38%), Delta = -831,259,473,080 B, Limit = 1.0
0:35:14.9: Information: 0 : [Rebalance] 53caeb3e-e60e-42a3-86e1-7e87bcdc88d0 (DocDisk1 (W:\)): Fill = 3,069,219,330,886 B / 4,000,785,100,800 B (76.72%)
0:35:14.9: Information: 0 : [Rebalance] Unprotected: 0 B / 4,000,785,100,800 B (0.00%), Delta = 0 B, Limit = 1.0
0:35:14.9: Information: 0 : [Rebalance] Protected: 2,981,921,134,923 B / 4,000,785,100,800 B (74.53%), Delta = -814,351,647,930 B, Limit = 1.0
0:36:23.5: Information: 0 : [CalculateBalanceRatio] Calculated ratio: 0.8912

It's driving me crazy. The plugins (DUL, DSE, all-in-one) should be moving 800GB off W/X, but it just doesn't.
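For anyone wanting to sanity-check numbers like these, here is a minimal Python sketch that pulls the Fill and Delta figures out of the log lines quoted above. The regular expressions are my own guess at the line layout based only on these few lines, not a documented log format, and reading a negative Delta as "data to move off this disk" is an assumption too. The 80% figure is the equaliser setting from the post; whether the plugin compares it against this Fill figure is also an assumption.

```python
import re

# Lines copied from the post (the all-zero "Unprotected" lines are left out).
LOG = r"""
0:35:14.9: Information: 0 : [Rebalance] Protected: 9,208,741,611,396 B / 12,003,767,819,797 B (76.72%), Delta = 1,645,611,121,007 B, Limit = 1.0
0:35:14.9: Information: 0 : [Rebalance] 3d54470a-024d-4d97-8944-860a4e80e6f3 (DocDisk2 (X:\)): Fill = 3,069,219,330,888 B / 4,000,785,100,800 B (76.72%)
0:35:14.9: Information: 0 : [Rebalance] Protected: 2,935,598,234,456 B / 4,000,785,100,800 B (73.38%), Delta = -831,259,473,080 B, Limit = 1.0
0:35:14.9: Information: 0 : [Rebalance] 53caeb3e-e60e-42a3-86e1-7e87bcdc88d0 (DocDisk1 (W:\)): Fill = 3,069,219,330,886 B / 4,000,785,100,800 B (76.72%)
0:35:14.9: Information: 0 : [Rebalance] Protected: 2,981,921,134,923 B / 4,000,785,100,800 B (74.53%), Delta = -814,351,647,930 B, Limit = 1.0
"""

# Assumed layout: "(<label> (<letter>:\)): Fill = <used> B / <total> B"
FILL_RE = re.compile(r"\((\S+) \(([A-Z]):\\\)\): Fill = ([\d,]+) B / ([\d,]+) B")
# Assumed layout: "Protected: ... Delta = <signed bytes> B"
DELTA_RE = re.compile(r"Protected: .*?Delta = (-?[\d,]+) B")


def to_int(text):
    """Turn '3,069,219,330,888' into an int."""
    return int(text.replace(",", ""))


def disk_fills(log):
    """Map drive letter -> fill percentage, taken from the Fill lines."""
    fills = {}
    for line in log.splitlines():
        match = FILL_RE.search(line)
        if match:
            _label, letter, used, total = match.groups()
            fills[letter] = 100.0 * to_int(used) / to_int(total)
    return fills


def protected_deltas(log):
    """List the Delta values (in bytes) from the Protected lines, in order."""
    deltas = []
    for line in log.splitlines():
        match = DELTA_RE.search(line)
        if match:
            deltas.append(to_int(match.group(1)))
    return deltas


if __name__ == "__main__":
    target_percent = 80.0  # equaliser setting quoted in the post
    for letter, percent in disk_fills(LOG).items():
        side = "above" if percent > target_percent else "at or below"
        print(f"{letter}: {percent:.2f}% full, {side} {target_percent:.0f}%")
    deltas = protected_deltas(LOG)
    print("Deltas in GB:", [round(d / 1e9, 1) for d in deltas])
    print("Sum of deltas in bytes:", sum(deltas))
```

Pasting a longer stretch of the service log into LOG works the same way, as long as the lines follow the same layout as the ones quoted here.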

-

Hi, I've had it running happily for over a year, but in the last week it has told me the license needs transferring. So I transfer the license, and it says OK... then 3 days later it tells me there's a license problem. Any ideas?

-

I use dpcmd check-pool-fileparts F:\ 4 > check-pool-fileparts.log to fix it. Then I read the log, and if there are no issues other than with DrivePool's own records, I recheck duplication, and it goes back to what I'd expect: 19.6TB duplicated, 8.99MB unusable for duplication for that pool. For me it's more to do with DrivePool's index developing an issue than there being an actual duplication issue, but that's why I check the dpcmd log to make sure. I use Notepad++ to open the log file, and run a search/count for "!", "*" and "**". Usually it reports no inconsistencies at the end. At least I've never had one outside of testing, when deliberately trying to trash a couple of test drives.
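The Notepad++ count step can also be scripted. This is only a rough Python sketch: it does a plain substring count of the three markers mentioned above, the same way an editor's Count button would, so the "*" total also includes the asterisks inside any "**". It assumes nothing about the log layout beyond that, and the file encoding is a guess (output redirected from a Windows console may not be UTF-8).

```python
from pathlib import Path

# The three strings searched for in the post.
MARKERS = ("!", "*", "**")


def count_markers(text):
    """Plain substring counts, like an editor's Count; '*' includes those in '**'."""
    return {marker: text.count(marker) for marker in MARKERS}


def count_markers_in_file(path, encoding="utf-8"):
    """Read a log file and count the markers; undecodable bytes are replaced."""
    text = Path(path).read_text(encoding=encoding, errors="replace")
    return count_markers(text)


if __name__ == "__main__":
    log_path = Path("check-pool-fileparts.log")  # file name from the command above
    if log_path.exists():
        print(count_markers_in_file(log_path))
    else:
        print("No log found at", log_path)
```

If every count comes back zero, it is still worth reading the summary at the end of the log rather than relying on the counts alone.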

-

I had an inconsistency in my duplication, and used dpcmd to fix it. This is why I bought the software. It works, and it's reliable (DrivePool, that is). Cause: modifying the file tables of all the drives involved in duplication at the same time... A really dumb thing to do, I know, but I only have a short maintenance window and pushed things to fit the time. So I just dropped by to say thanks to the author for the work done to make it reliable.

-

You could set it to balance every, say, 168 hours. That'd give you a week until the next balance runs.

-

File Recovery Tools compatible with DrivePool?

fluffybunnyuk replied to jhhoffma3's question in Nuts & Bolts

A good, slightly technical hack to avoid this is to create/use an account with the delete permission for deleting, and remove the delete permission from the limited account you use for general use or DrivePool access. All my files across the pool have the "normal user" account on them, and I have a special account just for deletion. So when I want to delete, I have to provide credentials to authorize the delete. It makes "whoops, where did my data go" moments a little harder to have. Naturally it's a custom permission, and I hate custom permissions as they sometimes go bad... and mostly get annoying.

-

OK to do multiple drive add/removes at the same time?

fluffybunnyuk replied to bnhf's question in General

If you're removing a drive from pool A and a drive from pool B, then yes. Same pool, then probably no... chances are if you remove 2 drives from the same pool, a certain law dictates you'll pull 2 with the same data on, and lose the data. Slow is good if the data remains intact. Me, I always yank one drive and see where I stand before pulling another.

-

That's never going to happen with any software like DrivePool. There's a write/read overhead (balancing) that multiplies with fragmentation.

My best recommendation, assuming you're using high-core-count Xeons or Threadrippers, is to open settings.json and change all the thread settings up to 1 per core, to allow all cores to scoop I/O from the SSD. Give that a whirl. If that doesn't shift it, try defragging the MFT and providing a good reserve, so the balancing has a decent empty MFT gap to write into.

My system reports the SSD balancer is slow as a dog, which is why I uninstalled it. It's a cheap version of SAS NVMe support, except instead of the controller handling the NVMe I/O spam, the CPU does (which isn't a good idea the larger the system gets).

Have you tried using symlinks, with a pair of SSDs per symlink, so your folder structure is drives inside drives, i.e. C:\mounts\mountdata(disk-ssd)\mountdocs(disk-ssd);C:\mounts\mountdata(disk-ssd)\mountarchive(disk-ssd)\; That way your data is transparently in one file structure, but chunks are split over separate DrivePools, so the dumps from the SSDs run broadly in parallel.

I have to set aside 2 hours/day for housekeeping to keep DrivePool in tip-top condition. Large writes rapidly degrade the system for me from the optimal 200MB/sec/drive+. At the moment it's running at 80MB/sec/drive (which is the lowest I can afford to go), mostly because I've hammered the MFT with writes, and there's a couple of TB I need to defragment. I wish you all the best trying to resolve it.

-

8 drives in RAID-6 gets me 1600MB/sec and saturates 10GbE, and it allows me to write that out again at 800MB/sec to my backing store (4 drives, so less speed). No write cache SSD in sight. Granted I'm not on the same scale as you, but I'm not far off with 24-bay boxes. But then my network and disk I/O are offloaded away from the CPU. With that many drives, SSD just sucks, because as soon as it bottlenecks it bottlenecks badly, usually when it decides to do housekeeping.

I'd retest using 8 drives in hardware RAID-0 (I/O offloaded), and use DrivePool to balance to a pool of 4 drives. See what happens. I think the SSD, with its constant calls to the CPU saying I/O is waiting, is gimping your system.

Fun fact: a good way to hang a system is to daisy-chain enough NVMe to keep the CPU in a permanent I/O wait state.

-

At a 6TB/day regular workload I'd be recommending 6 or 8 drive hardware RAID with NVMe cache, with a JBOD drive pool as a mirror, if like me you want to avoid reaching for the tapes. A 9460-8i is pricey (assuming you're using an expander), but software pooling solutions don't really cut it with a medium to heavy load.

Hardware RAID is just fine so long as SATA disks are avoided; SAS disks have a good chance of correction if T10 is used. I've had the odd error once in a blue moon during a rebuild that would have killed a SATA array, whereas in the SAS drive log it just goes in as a corrected read error and carries on.

I can't afford huge SAS spinning rust, it's very expensive... so I have to compromise. I run SAS drive RAID, and back it with large SATA JBOD in a pool. So it's useful in a specific niche use case, in my case sustaining a tape drive write over several hours.

The big problem with DrivePool is the balancing, the packet writes and the fragmentation. All those millisecond delays add up fast. What I've said isn't really any help, but what you wrote is exactly why I had to bite the bullet, suck it up and go SAS hardware RAID.

-

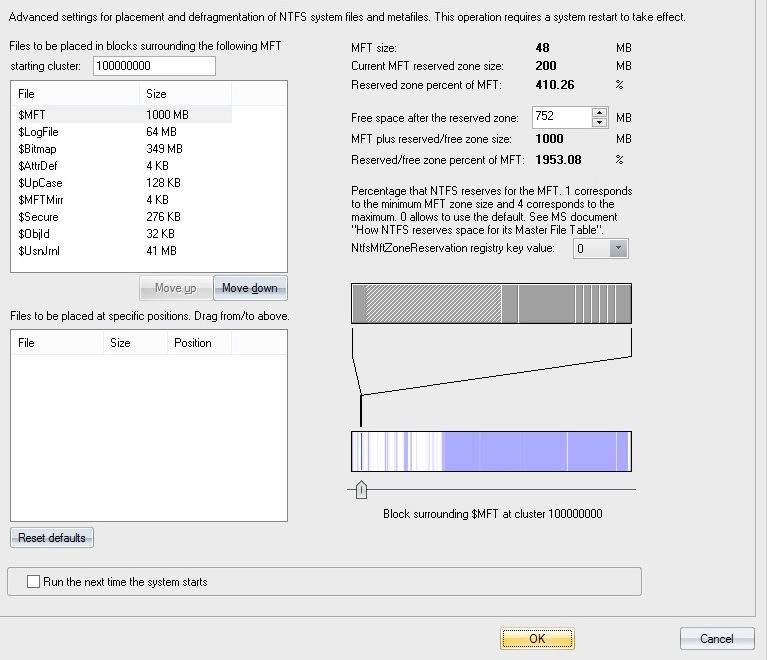

UltimateDefrag has a boot-time defrag for drive files like $MFT etc. I go into Tools/Settings and then click the boot-time defrag option. I set the starting cluster to 100000000 (12TB disk) and leave the order as the default. You can set your own location, but 5% in seems reasonable for a 0% to 10% track seek for writes; 10% into the disk would be optimal (0-20% seek). I change the free space after the reserved zone to a reasonable size (in my case 752MB, to round it to a 1GB MFT). I tick the "run the next time the system starts" box and click OK.

Naturally a warning needs to be attached to this. You're playing with your disk's file tables... It can go really bad (unlikely, but possible if your system is unstable). So to mitigate that risk, a chkdsk /F MUST be run on the drive BEFORE. And preferably the disk needs as little fragmentation as possible (ideally a complete fragmented-file defrag pass first).

As can be seen from the pic, most of my data is moved to the rear, so all my writes happen at the start of the disk near the MFT. This means the head doesn't move too far when updating the MFT. Contiguous reads I don't care about (I don't need speed other than for pesky tape drive feeding), but it's crucial to me that writes are executed ASAP, since I don't use a cache SSD.
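To see roughly where a starting cluster lands on the disk, or to pick a cluster for a given percentage, the arithmetic can be sketched in Python as below. The 4 KiB cluster size and the decimal 12 TB capacity are my assumptions, not figures from the post, so check the real cluster size of the volume before relying on the result.

```python
def cluster_position(start_cluster, disk_bytes, cluster_bytes=4096):
    """Fraction of the disk (0.0 to 1.0) at which a given cluster starts."""
    return start_cluster * cluster_bytes / disk_bytes


def cluster_for_fraction(fraction, disk_bytes, cluster_bytes=4096):
    """Cluster number that sits the given fraction of the way into the disk."""
    return int(fraction * disk_bytes) // cluster_bytes


if __name__ == "__main__":
    disk_bytes = 12 * 10**12  # assumed: a 12 TB disk in decimal bytes
    position = cluster_position(100_000_000, disk_bytes)
    print(f"Cluster 100000000 is about {position:.1%} into the disk")
    for fraction in (0.05, 0.10):
        cluster = cluster_for_fraction(fraction, disk_bytes)
        print(f"{fraction:.0%} into the disk is around cluster {cluster}")
```

With a different cluster size the same cluster number lands somewhere else, which is why cluster_bytes is a parameter rather than a constant.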

-

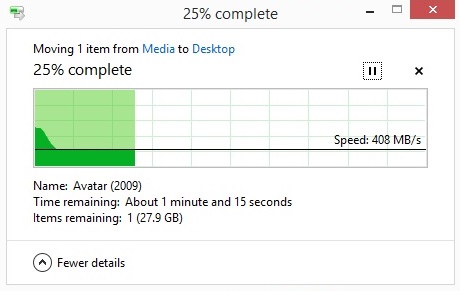

For me a pair of 12TB drives got me 400MB/sec, which is decent enough for me to dump the SSD cache (I found it killed the speed). 15 disks in a pool should blow away 4 SSD drives in straight-line speed. I write 1TB/hour doing a tape restore.
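As a quick check on how those two figures relate, here is the unit conversion in Python. It assumes decimal units (1 TB = 1,000,000 MB), which is my assumption about how the numbers were meant.

```python
def tb_per_hour_to_mb_per_s(tb_per_hour):
    """Convert a TB/hour rate to MB/sec, using decimal units."""
    return tb_per_hour * 1_000_000 / 3600


# Figures from the post: a 1 TB/hour restore against 400 MB/sec from the drive pair.
needed_mb_s = tb_per_hour_to_mb_per_s(1.0)
headroom_mb_s = 400.0 - needed_mb_s

if __name__ == "__main__":
    print(f"1 TB/hour needs about {needed_mb_s:.0f} MB/sec sustained")
    print(f"Headroom against 400 MB/sec: about {headroom_mb_s:.0f} MB/sec")
```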

-

A 9207-8(i or e) SAS2 PCIe 3 card is great for a low amount of use. These are cheap (IT mode). A cheap Intel SAS2 expander gets a system off to a good start.

Later on, when usage ramps up: a 9300 or 9305 SAS3 card keeps the controller cooler, and doesn't bottleneck so easily with a lot of drives. These aren't cheap, but can be found for reasonable prices.

Eventually backup to tape (rather than cheap mirrors) gets important: hardware RAID-6 SAS3. These are the definition of very expensive, but they do keep a tape drive fed at a sustained 300MB/sec (RAID-6 part of the pool) while Plex is reading 50GB MKV files for the chapter thumbnail task (JBOD mirror part of the pool). A 9440-8i (SAS drives only, with T10-PI) with a SAS3 expander is a wonderful thing in action.

Also avoid cheap Chinese knock-offs. It amuses me how people spend thousands of pounds on cheap drives, and refuse to pay £300 for the controller.

-

Maybe it hates you too, like it hates me. Glad to hear it fixed it. The remeasure should go fine now. You should make a note to check the corrupted file, and maybe restore it from a backup if necessary.

I've noticed DrivePool has a preference for the disks used to create a pool. In my example I had 2 disks, hot and cold. I used hot to create the pool, and it loved hot ever after. Sadly I needed it to prefer cold. My only solution was to hotswap them around in my chassis so hot became cold and vice versa, thus conning DrivePool into using the cold one. The short answer is probably "love".

-

Do a checkdisk instead, or open a cmd prompt and run, for example, "chkdsk D:\ /F".

Regarding removing your drive: you should tread carefully. If you have space in the pool, you can do a normal removal (not forced), wait for the disk to empty, and then it'll remove itself safely. Forcing a removal is likely to lead to data loss unless you're 100% sure your files are duplicated, or the drive being removed is near enough empty already. If you're not sure, don't do it until you are 100% sure.

If your normal drive removal works out OK-ish but halts before removal because of the corrupted folder, you should end up with just the corrupted folder on the disk, and the disk properties will show the disk space used as a few hundred MB. At that point all files should be duplicated elsewhere (check, and be sure), and then, and only then, should it be force removed.

There's nothing like watching data vanish, and having to spend the next week breaking out 50 LTO tapes for a restore. I always like being 100% sure before I do something, and double-checking it.

-

Try a reboot first. Sometimes I find DrivePool's tiny brain gets overloaded, and that seems to help. Otherwise, view hidden files, go into the PoolPart folder, then navigate to the folder location and delete it manually, then remeasure. I had a problem moving dozens of files that DrivePool kept flagging as viruses (incorrectly), which stopped my rebalancing. If that doesn't work, chkdsk-fix all the drives, reboot, then try again.

I also had a permissions problem. That was too much hassle to fix, so I just did a force remove, formatted the removed drive, and re-added it. It's why I keep my pools to just a simple drive mirror, like 8x8TB hardware RAID-6 pooled with 4x12TB, or a 12TB single drive plus mirror. Adding rules and complexities just makes it more breakable, and more complex in my mind. Big pools sound nice in principle, but I like keeping track of where my data *actually is*. Hope it helps.

I forgot to add that DrivePool hates me. It has a preference for using certain drives in my system (annoyingly, the hot drive in a mirror), so I have to hotswap them round to make the hot drive the cool one it loves.

-

Yes. I think, from reading another post here, that if you fill out the contact form they deactivate it for you at their end since you cannot, after which you should be able to reactivate it. Wish I could be more help, but I haven't had to do it myself.

-

It's probably keyed to the old hardware, and may need deactivating for the old hardware by sending them a contact request. After that I imagine there won't be any conflict with activation on the new hardware. That's assuming you tried pasting in your license code and activating, and it failed. I'm sure someone will be along soon to help.

-

Solved: Moving and defragging the MFT and related $ files to a location 5% into the platter, and a reboot. I extended the MFT to 1GB in size (700MB free) just to be on the safe side. Yay, sustained 200MB+/sec is back.

-

I have a pair of 12TB drives in a separate pool as a pseudo mirror (0 file fragments), but I'm getting 20MB/sec write speeds and 40MB/sec reads. The kick in the teeth is that duplication runs at 80MB/sec. When I test the drives, I get 450MB/sec bus speed per port. When I copy a file to a single drive, bypassing DrivePool, I get 250MB/sec. As soon as I use DrivePool, performance craters. I've tried disabling real-time duplication and disabling file balancers, but nothing seems to shift the performance; it just gets slower and slower the more I add. It started out at 100MB/sec, dropped to 80MB/sec, then 60MB/sec, and now I'm down to 20MB/sec and getting slower.
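For a repeatable version of the "through the pool vs. straight to one disk" comparison, something like this Python sketch can time a sequential write to any folder. It is not how the figures above were measured; the file name, sizes and the example drive letters in the comments are placeholders of mine. It reports MiB/sec, and it forces the data to disk before stopping the clock so the OS cache does not flatter the result too much.

```python
import os
import time


def write_throughput_mib_s(directory, size_mib=256, block_mib=4):
    """Write a temporary file sequentially into `directory` and return MiB/sec."""
    block = os.urandom(block_mib * 1024 * 1024)
    blocks = max(1, size_mib // block_mib)
    path = os.path.join(directory, "throughput_test.tmp")  # hypothetical file name
    try:
        start = time.perf_counter()
        with open(path, "wb") as handle:
            for _ in range(blocks):
                handle.write(block)
            handle.flush()
            os.fsync(handle.fileno())  # make sure the data has left the OS cache
        elapsed = time.perf_counter() - start
    finally:
        if os.path.exists(path):
            os.remove(path)
    return blocks * block_mib / elapsed


if __name__ == "__main__":
    # Run once against the pool drive letter and once against a folder on one
    # of the underlying disks, e.g. "P:\\" and "W:\\bench" (both hypothetical).
    for target in (".",):
        print(target, round(write_throughput_mib_s(target, size_mib=64), 1), "MiB/sec")
```

A short test like this mostly measures burst behaviour, so a size well beyond any drive or controller cache gives a fairer picture of sustained speed.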

-

I don't do software recommendations. All I can say is the read/write speed over the particular sectors on that specific disk is much improved, but not perfect.

-

I found when copying to the pool it fragments files quite badly. My solution to it is UltimateDefrag, although any folder/file layout defragger will do just fine. I'm old school and obsessive about track-to-track seeks, power usage and head flying hours.

One of the things I've often read mentioned here is how old your drive is. Really the question should be whether the drive is under 40,000 flying hours; that's my benchmark for an old drive, where failure rates increase to a significant level.

One of the useful things I use is in the power settings: the ability to ignore burst traffic for 1 minute, and HIPM/DIPM/DEVSLP. It keeps the drives spun down until I actually need them. I tend to use a pair of Seagate PowerBalance SAS drives for frequent data, as I love the timer control, and running Idle_C for extended periods.

Copying 8TB over to a pair of 12TB drives, I had about 3 million file fragments (mostly film/TV). So I sort to end of disk, File/Folder Descending. It takes 2 days on a new drive, but it has the advantage of the free space being at the front of the disk, and the head doesn't have to seek any further than 33% into the platters unless it's a film (in which case it's a sequential read, and even on the final sectors not a lot of work, even for a 4K film). It should be pointed out that I do this yearly at most, doing a consolidation defrag every 3 months to push the new films etc. on the outer tracks to backfill from the new end of free space at 4TB. It also has the advantage of being a sequential read to duplicate the disk. Of course you could defrag to the outside of the disk, but that has the disadvantage of the free space being at the end of the platter, which is a full stroke any time you want to write data. If you really needed file recovery, and can read the platters, sequential data is much easier too.

Another issue is rated workload. I tend to prefer SAS drives, so I can get away with sustained use in heavy periods. But a way to age your drives fast is to scan them on a 30-day basis for sector errors. That's 12x per year, or a workload of 144TB on a 12TB drive (Ironwolf have 155TB/year usage). A better solution is to get the new drive and allow it to warm up for a couple of hours. Then do the SMART tests, quick then extended, then use a sector regenerator to scan the disk (there's usually a point on the platter where there's a slowdown, at least on the drives I buy, 12TB+ NAS or SAS). Scans don't need to be more frequent than every 180 days, less 10 days per year of drive age (minimum 90 days).

Also, as an old timer, I like the sound of noisy drives. I can hear what they're doing better. But mostly the measures herein reduce most of the seek noise, so usually all I hear is parking, or a long seek (and the occasional crunching over an area of weak sectors I forgot to regen).

I'm sure I forgot to mention other stuff, but it's enough for an intro to me, and my personal choices in how I organise my data. Of course I welcome any thoughts.
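The scan arithmetic above can be put into a few lines of Python. This is just my reading of the rule of thumb (180 days, minus 10 days per year of drive age, never below 90 days) and of the "one full scan reads the whole drive once" workload estimate; the function names are made up for the example.

```python
def scan_interval_days(drive_age_years):
    """Days between surface scans: 180 minus 10 per year of age, floor of 90."""
    return max(90.0, 180.0 - 10.0 * drive_age_years)


def yearly_scan_workload_tb(capacity_tb, scans_per_year):
    """Data read per year by full-surface scans, assuming each scan reads the whole drive."""
    return capacity_tb * scans_per_year


if __name__ == "__main__":
    # The monthly-scan case from the post: 12 scans a year on a 12 TB drive.
    print("Monthly scans:", yearly_scan_workload_tb(12, 12), "TB/year")
    for age in (0, 3, 6, 9, 12):
        interval = scan_interval_days(age)
        workload = yearly_scan_workload_tb(12, 365 / interval)
        print(f"Age {age} years: scan every {interval:.0f} days, about {workload:.0f} TB/year")
```

Note that 365/30 is slightly more than 12, so a strict 30-day schedule comes out a little above the rounded 144TB figure.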

-

Hi, I get this from the logs:

Scanner.Service.exe Warning 0 [Scanner] Error in scanning thread: Arithmetic operation resulted in an overflow.

Thus no scanning on any drives. Any ideas?