Showing results for tags 'google drive'.

-

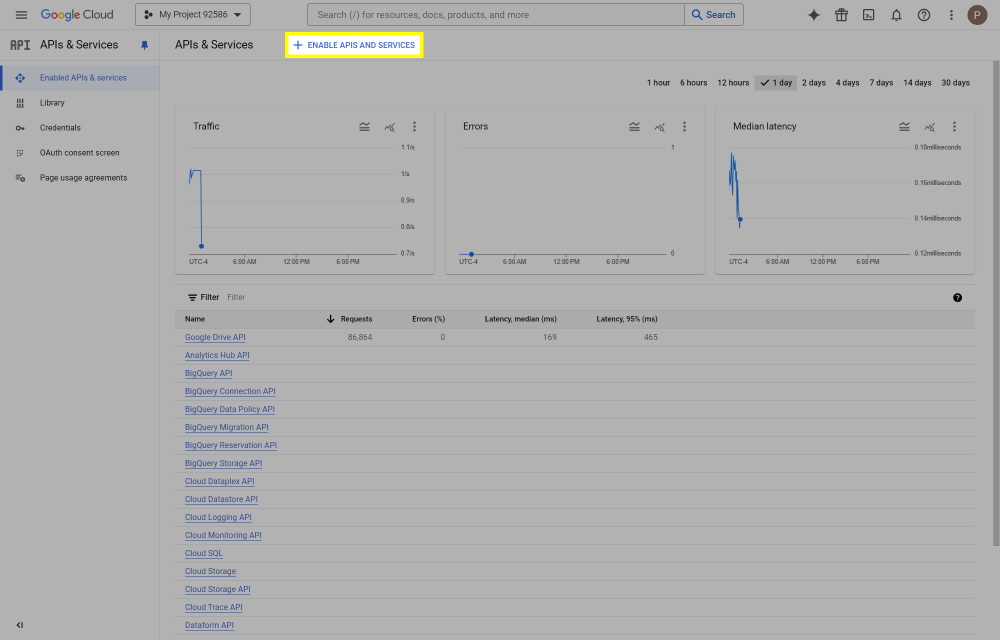

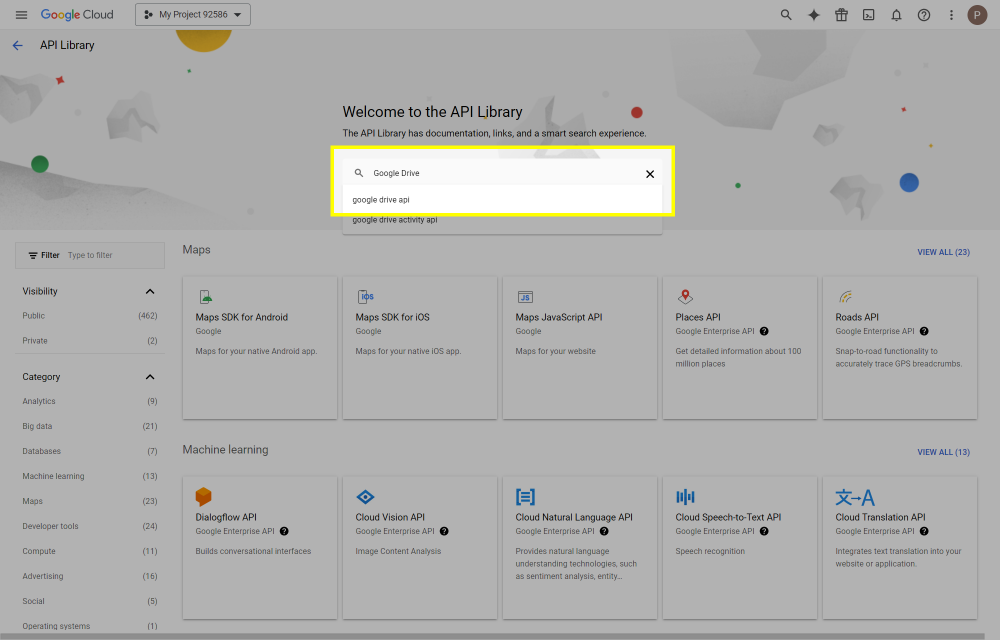

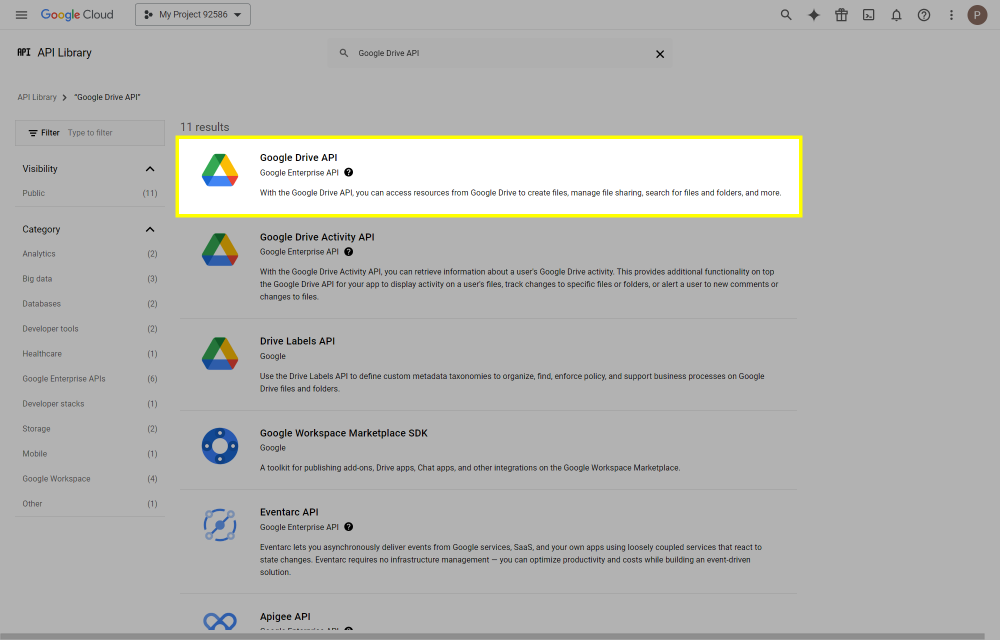

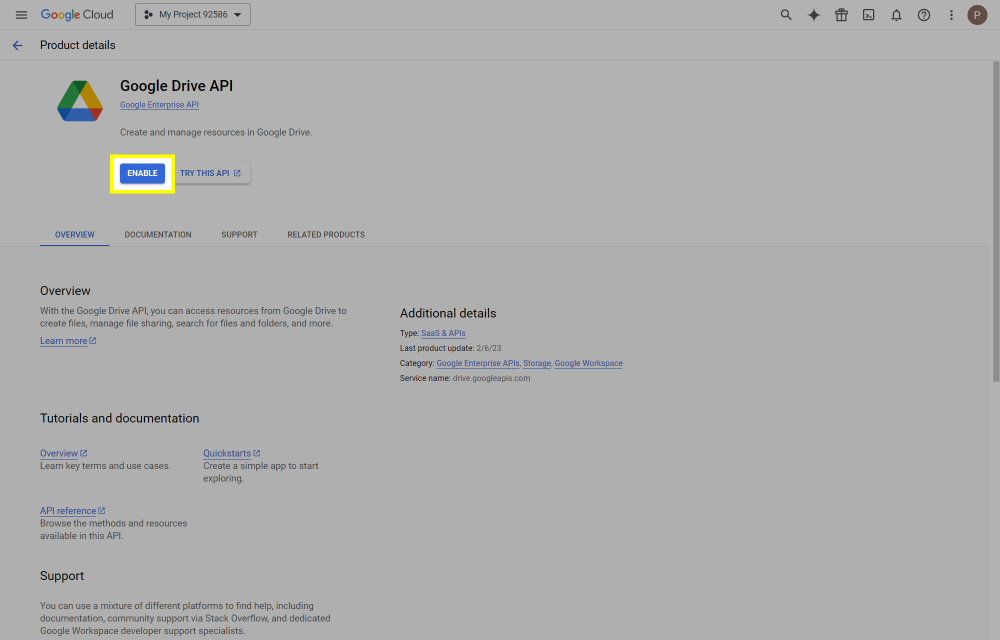

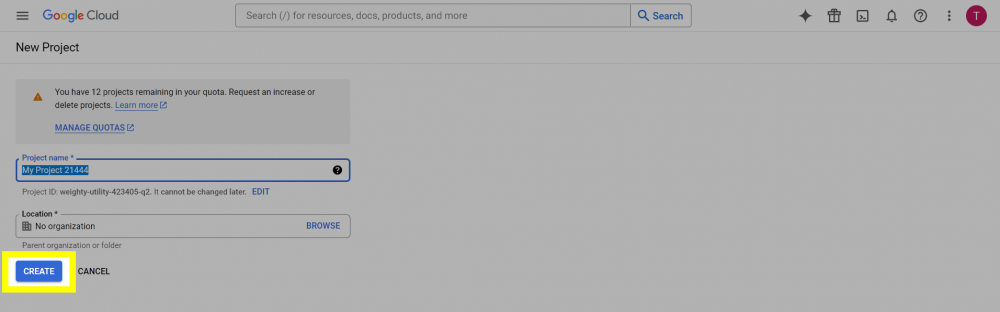

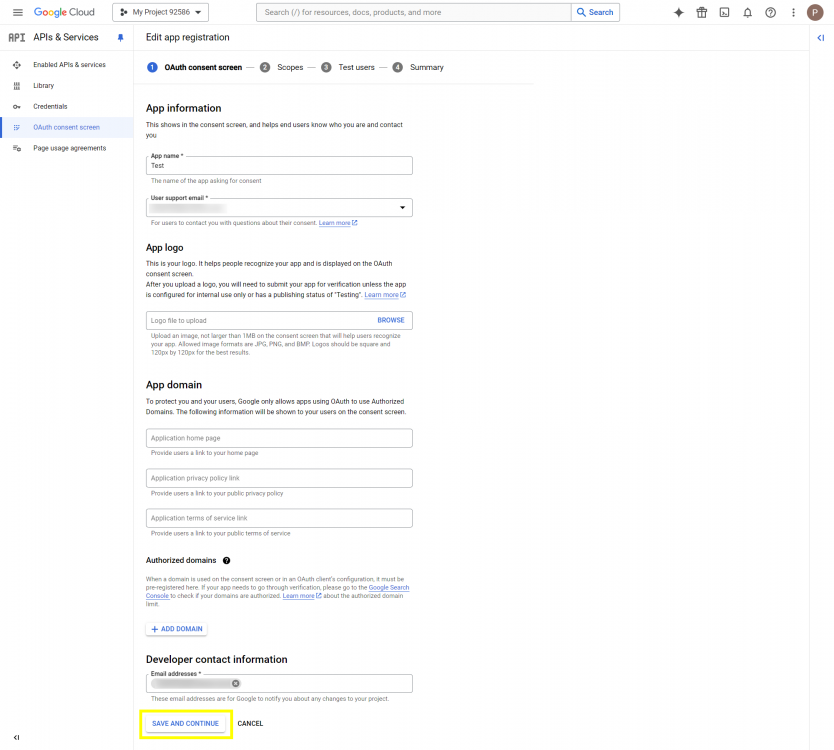

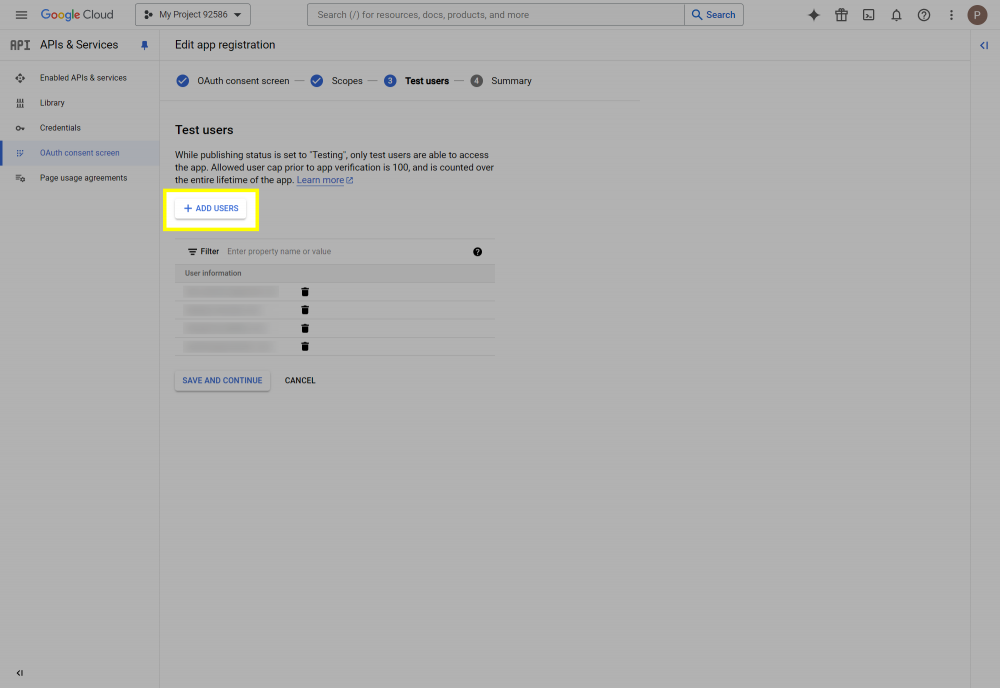

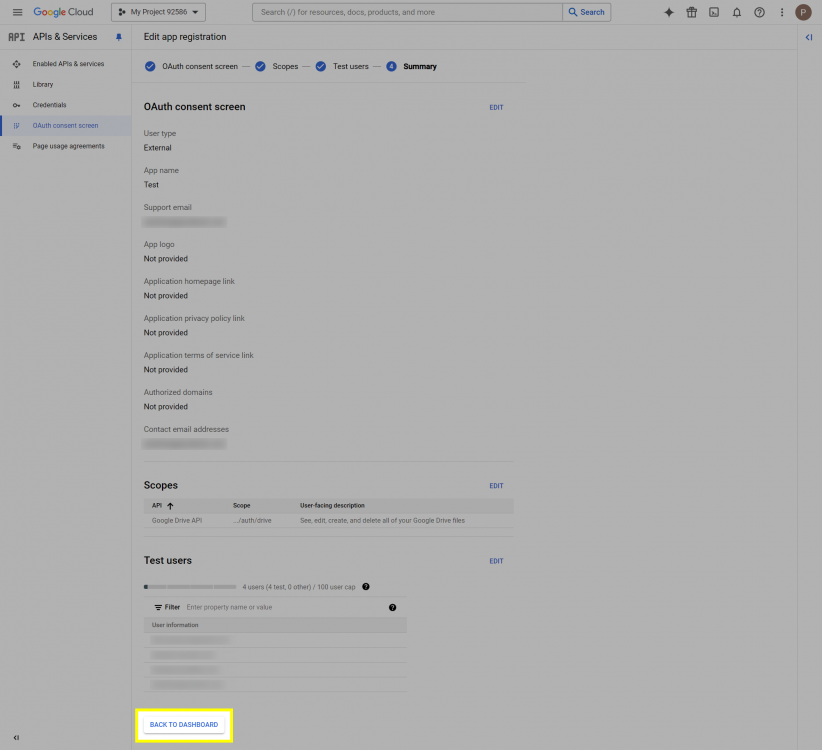

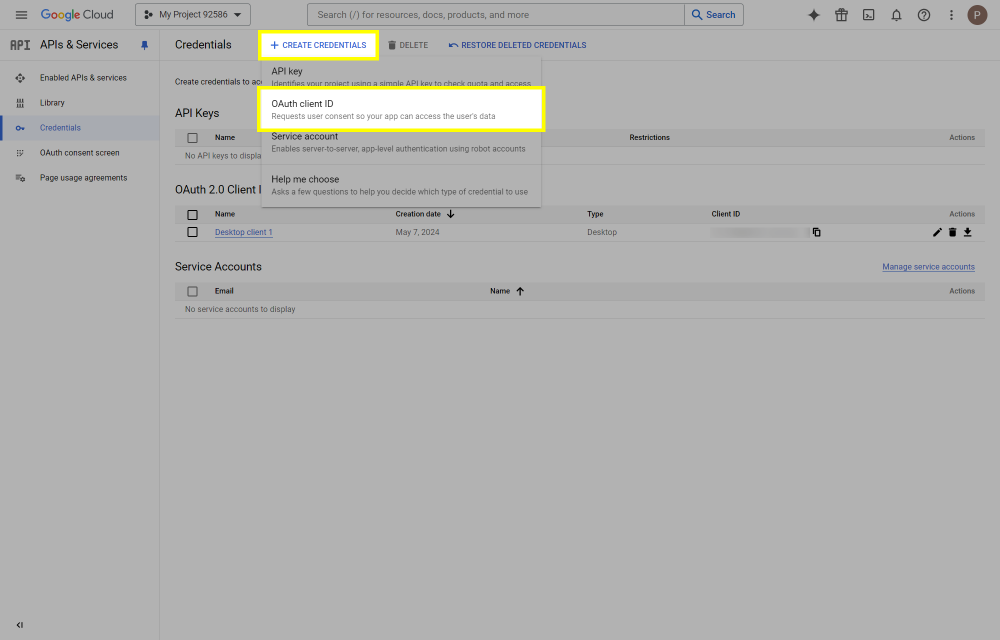

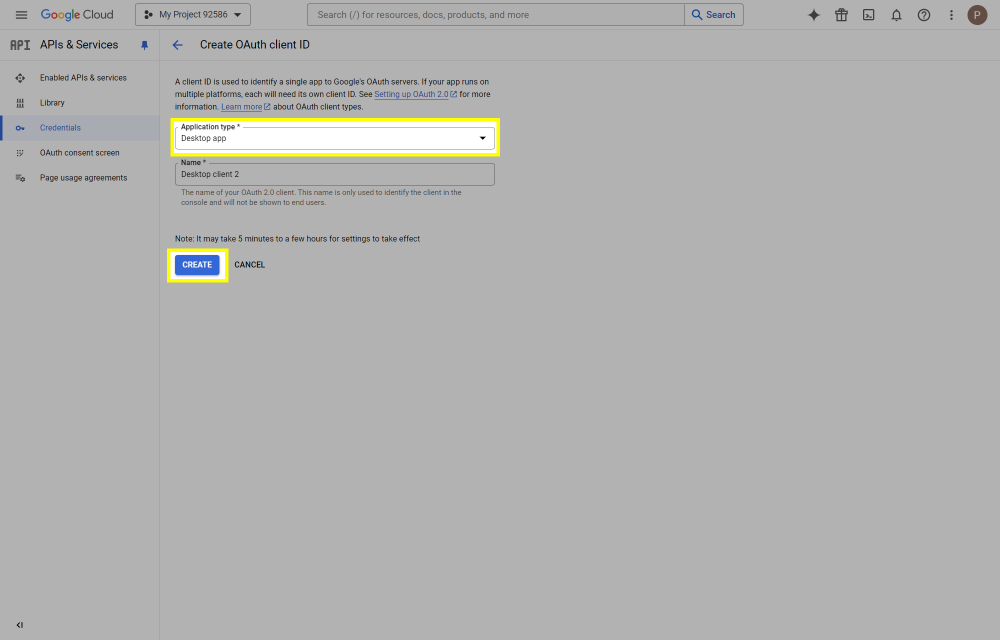

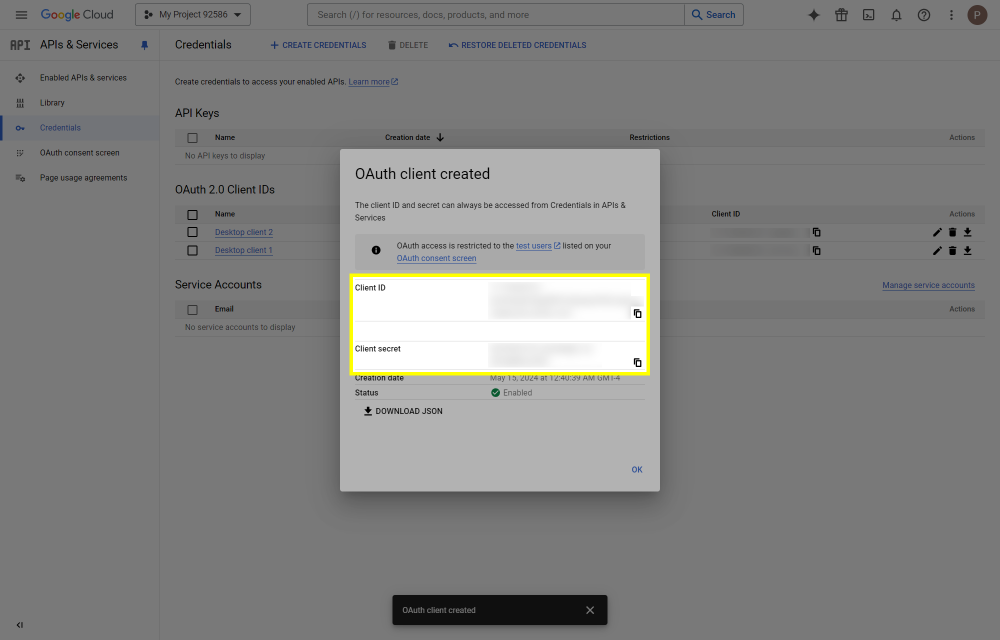

This post will outline the steps necessary to create your own Google Drive API key for use with StableBit CloudDrive. With this API key you will be able to access your Google Drive based cloud drives after May 15, 2024 for data recovery purposes.

Let's start by visiting: https://console.cloud.google.com/apis/dashboard

You will need to agree to the Terms of Service, if prompted. If you're prompted to create a new project at this point, then do so. The name of the project does not matter, so you can simply use the default.

Now click ENABLE APIS AND SERVICES. Enter Google Drive API and press enter. Select Google Drive API from the results list. Click ENABLE.

Next, navigate to: https://console.cloud.google.com/apis/credentials/consent (OAuth consent screen)

Choose External and click CREATE. Next, fill in the required information on this page, including the app name (pick any name) and your email addresses. Once you're done, click SAVE AND CONTINUE.

On the next page click ADD OR REMOVE SCOPES. Type in Google Drive API in the filter (enter) and check Google Drive API - .../auth/drive. Then click UPDATE. Click SAVE AND CONTINUE.

Now you will be prompted to add email addresses that correspond to Google accounts. You can enter up to 100 email addresses here. You will want to enter all of your Google account email addresses that have any StableBit CloudDrive cloud drives stored in their Google Drives. Click ADD USERS and add as many users as necessary. Once all of the users have been added, click SAVE AND CONTINUE.

Here you can review all of the information that you've entered. Click BACK TO DASHBOARD when you're done.

Next, you will need to visit: https://console.cloud.google.com/apis/credentials (Credentials)

Click CREATE CREDENTIALS and select OAuth client ID. You can simply leave the default name and click CREATE. You will now be presented with your Client ID and Client Secret. Save both of these to a safe place.

Finally, we will configure StableBit CloudDrive to use the credentials that you've been given. Open C:\ProgramData\StableBit CloudDrive\ProviderSettings.json in a text editor such as Notepad. Find the snippet of JSON text that looks like this: "GoogleDrive": { "ClientId": null, "ClientSecret": null }

Replace the null values with the credentials that you were given by Google, surrounded by double quotes. So for example, like this: "GoogleDrive": { "ClientId": "MyGoogleClientId-1234", "ClientSecret": "MyPrivateClientSecret-4321" }

Save the ProviderSettings.json file and restart your computer. Or, if you have no cloud drives mounted currently, then you can simply restart the StableBit CloudDrive system service. Once everything restarts you should be able to connect to your Google Drive cloud drives from the New Drive tab within StableBit CloudDrive as usual. Just click Connect... and follow the instructions given.
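For anyone who would rather script the ProviderSettings.json edit than do it by hand, here is a minimal Python sketch. It is not an official StableBit tool. It assumes the file is plain JSON in UTF-8 and that the "GoogleDrive" object sits somewhere inside it (the post does not say where, so the sketch searches for it). The function names `find_section`, `set_google_credentials`, and `update_settings_file` are made up for illustration.

```python
import json
from pathlib import Path


def find_section(node, key="GoogleDrive"):
    """Return the first JSON object stored under `key`, searching nested values."""
    if isinstance(node, dict):
        if isinstance(node.get(key), dict):
            return node[key]
        children = list(node.values())
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_section(child, key)
        if found is not None:
            return found
    return None


def set_google_credentials(settings, client_id, client_secret):
    """Fill in ClientId and ClientSecret in the parsed settings, in place."""
    section = find_section(settings)
    if section is None:
        raise KeyError("no GoogleDrive section found in settings")
    section["ClientId"] = client_id
    section["ClientSecret"] = client_secret
    return settings


def update_settings_file(path, client_id, client_secret):
    """Write a .bak copy of the settings file, then rewrite it with the credentials."""
    path = Path(path)
    # Assumption: the file is UTF-8, possibly with a byte order mark.
    original = path.read_text(encoding="utf-8-sig")
    settings = set_google_credentials(json.loads(original), client_id, client_secret)
    path.with_name(path.name + ".bak").write_text(original, encoding="utf-8")
    # json.dumps emits the values as double-quoted strings, as the post requires.
    path.write_text(json.dumps(settings, indent=2), encoding="utf-8")
```

It could be called as `update_settings_file(r"C:\ProgramData\StableBit CloudDrive\ProviderSettings.json", "MyGoogleClientId-1234", "MyPrivateClientSecret-4321")`, using the placeholder values from the post. The restart described above is still needed afterwards.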

-

I currently have a setup using Google Workspace, StableBit DrivePool, and CloudDrive. I recently received a notification from Google stating that I will no longer be permitted to exceed their allotted storage limit of 5TB. However, I am currently using over 50TB of storage. In just one month, my service with Google will be switched to read-only mode. Given what I know of DrivePool, I am concerned about the potential for drive corruption if I continue using the service until I find a suitable alternative. Would my drive be at risk of corruption during this transitional period?

- 8 replies

-

- google drive

- google drive pool

-

I have a very large drive set up on Google Drive. It has ~50TB of data with duplication on. The drive is set to the maximum of 256TB (50% usable with duplication). I recently had to move it from the main VM it was on to a new VM. It is taking hours and hours (over 15 so far) to finish indexing the chunk IDs into SQLite. I have a very fast connection (4gb/s up and down) and the VM has lots of resources, with 12 5900x v-cores and 16GB RAM. The drive is used mostly for large files several gigabytes in size. The storage chunk size of the drive being rebuilt is the default (10MB), but I plan to migrate (slowly, at ~250GB/day) to a newly built drive if I can improve things with a bigger chunk size, so that it has fewer chunks to index if/when it needs to (plus it should improve throughput, in theory). However, the highest option in the GUI is 20MB. In theory that should help, and the rebuild should take half as long for the same data. But my data is growing and I have a lot of bandwidth available for overheads. Would it be possible to use a much bigger storage chunk size, say 50MB or 100MB, on the new drive? This would increase overheads quite a lot, but it should drastically reduce reindex times and make a big difference to throughput too? What's the reason it's capped at 20MB in the GUI? Can I override it in a config file or registry entry? TIA
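The reasoning that a larger chunk size leaves fewer chunks to index can be checked with a little arithmetic. The Python sketch below assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes), takes the ~50TB figure as the amount actually stored in chunks, and treats every chunk as full. Whether reindex time really scales linearly with chunk count is the poster's assumption, not something this confirms.

```python
TB = 10**12  # assumption: decimal terabytes
MB = 10**6   # assumption: decimal megabytes


def chunk_count(stored_bytes, chunk_bytes):
    """Number of chunks needed to hold stored_bytes, rounding up."""
    return -(-stored_bytes // chunk_bytes)  # integer ceiling division


if __name__ == "__main__":
    stored = 50 * TB
    for size_mb in (10, 20, 50, 100):
        print(f"{size_mb:>3} MB chunks: {chunk_count(stored, size_mb * MB):,}")
```

Under these assumptions, 50TB works out to 5,000,000 chunks at 10MB, 2,500,000 at 20MB, 1,000,000 at 50MB, and 500,000 at 100MB.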

-

- google drive

- chunk

-

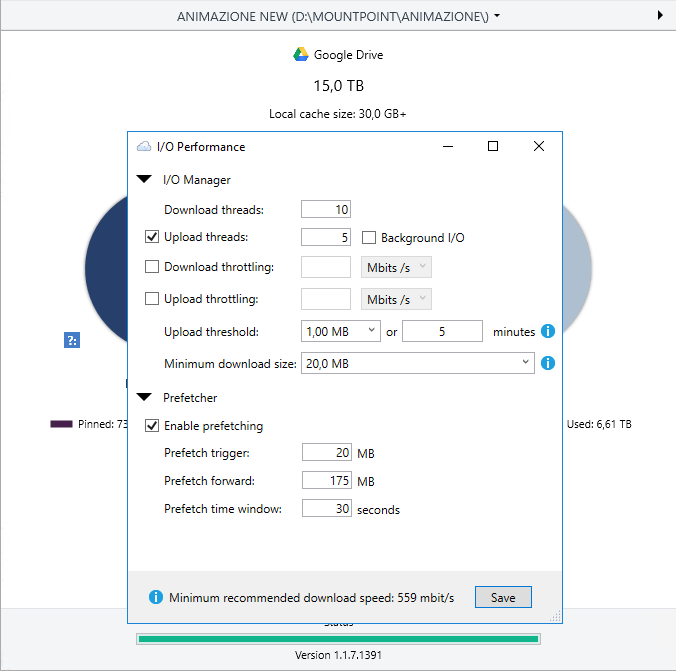

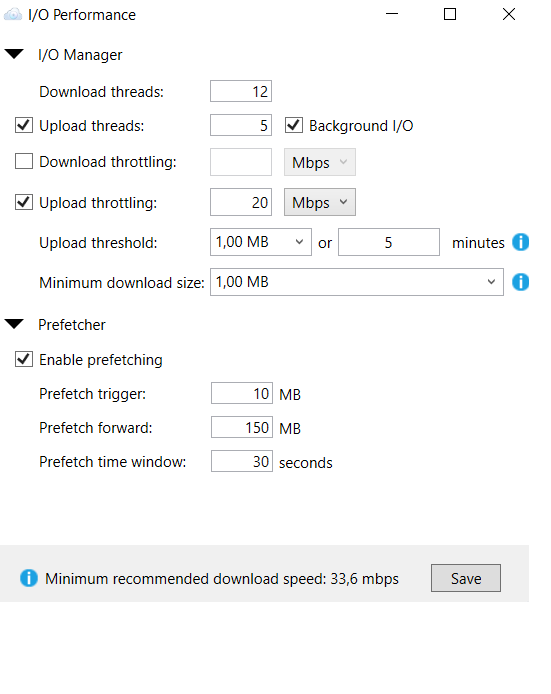

Hello, I changed PCs and I am creating a new library on Plex from the content on my CloudDrive disks. After mounting the disks and assigning them letters, I changed the owner of each disk as described in this procedure from Christopher, and then I mounted my disks inside a folder instead of assigning them drive letters. I noticed that matching on Plex is extremely slow. By extremely slow I mean that I added a folder with 3 TV series, about 127 files averaging 1 GB each, and it took about 1 hour to complete the matching. I have read that it takes longer when creating a new library than when adding to an existing one. However, I am starting to think that there is something wrong with my settings that I could change to speed up the process. I found the settings I use on the forum and I think they are good for streaming (I have never actually had problems there); only in the matching phase have I always found a certain slowness. Below is the information and a screenshot of the settings. Connection: 1 GB/s symmetrical. CloudDrive version: 1.1.7.1391. OS: Windows Server 2016. Cache: dedicated SSD. Does anyone have a good idea on how to change the settings to speed up the process? Thank you all

-

I've configured Google Drive to use a custom API key, I believe, based on Q7941147. It connects fine, so I think that's OK, but I'm still only seeing 1.54 Mbps upload. While I don't have symmetric fiber, I do have a lot more bandwidth than that (I've capped the upload at 20 Mbps, but so far it doesn't matter!). It also seems to be nailed to exactly that number, which makes me feel like it's an enforced cap. What kind of performance should I be expecting? I get much more (in line with what I'm expecting) when I use rclone, which I'm also using OAuth for (different credentials). Do I need to unmount and remount the drive for the new key to take effect? I already deleted the original connection, which the drive was built on, so the only connection I can see is the one I made after I modified the JSON file. I'm reasonably sure the new connection is good: I didn't quote the strings originally, so CloudDrive threw an error, and once I fixed that it seemed fine. But I am not clear whether the drive itself has cached the connection it was originally made with. It didn't seem to error when I deleted the original and made a new one, so I got a little suspicious as to whether it's using the new one or not. I don't think I'll be able to consider CloudDrive viable with Google Drive if I can't get more upload performance than that, though! Thanks!
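The unquoted-strings mistake mentioned above can be caught before restarting the service. The Python sketch below only checks the quoting; it says nothing about which key a mounted drive actually uses or about upload speed. It assumes the "GoogleDrive" object sits at the top level of the JSON document, which the thread does not confirm, and `check_credentials` is a hypothetical helper name.

```python
import json


def check_credentials(text):
    """Return a list of problems found in the settings text (empty if none)."""
    try:
        settings = json.loads(text)
    except json.JSONDecodeError as err:
        # Unquoted credentials fail here, with the position of the first bad token.
        return [f"not valid JSON at line {err.lineno}, column {err.colno}: {err.msg}"]
    # Assumption: the GoogleDrive object is a top-level key.
    section = settings.get("GoogleDrive") if isinstance(settings, dict) else None
    if not isinstance(section, dict):
        return ["no GoogleDrive object at the top level"]
    problems = []
    for key in ("ClientId", "ClientSecret"):
        value = section.get(key)
        if not isinstance(value, str) or not value:
            problems.append(f"{key} should be a non-empty quoted string, got {value!r}")
    return problems
```

An empty list means both values parsed as strings. A `null` left in place is reported per key, and an unquoted value is reported as a parse error.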

-

I'm trying out CloudDrive with Google Drive, and have created a small drive for testing. If I go this route, I'll have a lot of stuff there, including some things I'd like to have served by Plex. I'd like to test the performance when the content has to be pulled from the cloud. I haven't put enough into the cloud drive to overflow the cache yet. Will the data just sit in the cache forever, or do things ever stale out? Failing that, can I flush the cache, so I can force CloudDrive to pull from GDrive to fulfill a request? Thanks!

- 2 replies

-

- gdrive

- googledrive

-

I am using Google Drive. One of my drives says there was an error when reading a file and that a duplicated version was used. Will it rebuild the duplicate after reading the file?

-

Hi All, I purchased DrivePool about a month ago and I am loving it. I have 44TB pooled up with an SSD cache, and it worked great until yesterday. I downloaded Backup and Sync to sync my Google Drive to the pool. It synced the files I needed to the SSD, then the SSD moved the data to the archive drives. Then Backup and Sync reported that the items were not synced and synced them again. It seems like Backup and Sync cannot sync to the pool for some reason. Did anyone else have this problem? Is this why we have CloudDrive? I am really disappointed, as if I had known it wouldn't work I wouldn't have bought DrivePool, or I would have bought the whole package at a discount instead of buying the products one by one.

-

Hi there, I have been using CloudDrive in combination with Google Drive for a few weeks. I have a connection that can handle 500 Mbit download and upload speeds. I know there is a limit on how much you can upload each day, around 500GB/750GB, but does that also count for downloading? I cannot get speeds higher than 80 Mbit, and if I am streaming 4K content that is definitely not enough. In the picture below you can see that I get throttled when I reach around 85 Mbps. I have also added screenshots of my settings. Hope you guys can help me out
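To put the daily limit quoted above into the same units as the line speed, here is a small Python sketch. It takes the 750GB/day figure from the post at face value, assumes decimal units, and does not answer whether the limit applies to downloads; it only converts between a daily quota and a transfer rate. The function names are made up for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def sustained_mbit_per_s(gb_per_day):
    """Average rate in Mbit/s that uses up gb_per_day (decimal GB) in 24 hours."""
    return gb_per_day * 1000 * 8 / SECONDS_PER_DAY


def hours_until_quota(gb_per_day, rate_mbit_per_s):
    """Hours of continuous transfer at rate_mbit_per_s before gb_per_day is reached."""
    return gb_per_day * 1000 * 8 / rate_mbit_per_s / 3600


if __name__ == "__main__":
    print(f"{sustained_mbit_per_s(750):.1f} Mbit/s averaged over a day")
    print(f"{hours_until_quota(750, 500):.1f} hours at 500 Mbit/s")
```

With these assumptions, a 750GB/day quota averages out to roughly 69 Mbit/s around the clock, and a 500 Mbit line running flat out would reach it in a little over 3 hours.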

-

Curious if there is a way to put the CloudDrive folder, which defaults to: (root)/StableBit CloudDrive, in a subfolder? Not only for my OCD, but in a lot of instances, google drive will start pulling down data and you would have to manually de-select that folder per machine, after it was created, in order to prevent that from happening. When your CloudDrive has terabytes of data in it, this can bring a network, and machine to its knees. For example, I'd love to do /NoSync/StableBit CloudDrive. That way, when I install anything that is going to touch my google drive storage, I can disable that folder for syncing and then any subfolder I create down the road (such as the CloudDrive folder) would automatically not sync as well. Given the nature of the product and how CloudDrive stores its files (used as mountable storage pool, separate from the other data on the cloud service hosting the storage pool AND not readable by any means outside of CloudDrive), it seems natural and advantageous to have a choice of where to place that CloudDrive data. Thanks, Eric

-

Hello, I currently have a healthy 100TB Google drive mounted via CloudDrive and I would like to move the data to two 60TB Google drives. However, when I attempt to create a new 60TB drive I get the following error:

There was an error formatting a new cloud drive. You can try to format it manually from Disk Management.
Value does not fall within the expected range.
at CloudDriveService.Cloud.CloudDrivesTasks.CreateCloudPart.#R5c(TaskRunState #xag, CreateCloudPartTaskState #R9f, IEnumerable`1 #8we)
at CoveUtil.Tasks.Concurrent.Task`1.(TaskRunState , Object , IEnumerable`1 )
at CoveUtil.Tasks.Concurrent.TaskGroup..()
at CoveUtil.ReportingAction.Run(Action TheDangerousAction, Func`2 ErrorReportExceptionFilter)

Attempts to format it manually from Disk Management also fail. Interestingly, I can create a small drive of 10GB without any issues (I have not tried other sizes to see if there is a breaking point). I have verified the OS is healthy (DISM/SFC tests all pass). I am attaching the error report that was generated along with the log file. Any help would be greatly appreciated. Thank you. Alex

ErrorReport_2019_03_20-11_55_05.9.saencryptedreport CloudDrive.Service-2019-03-20.log

-

Hi all, I'm attempting to mount a 1.5GB (not a typo) Google drive from Windows 10. I am selecting the full encryption option and choosing a 1TB HD with 300GB free for the cache. Every time I do this I am presented with a BSOD and the following errors. Stop code: SYSTEM_THREAD_EXCEPTION_NOT_HANDLED. What failed: CLASSPNP.SYS. Any ideas what could be causing this and how I can resolve it?

-

I suspect the answer is 'No', but have to ask to know: I have multiple gsuite accounts and would like to use duplication across, say, three gdrives. The first gdrive is populated already by CloudDrive. Normally you would just add two more CloudDrives, create a new DrivePool pool with all three, turn on 3X duplication and DrivePool would download from the first and reupload to the second and third CloudDrives. No problem. If I wanted to do this more quickly, and avoid the local upload/download, would it be possible to simply copy the existing CloudDrive folder from gdrive1 to gdrive2 and 3 using a tool like rclone, and then to attach gdrive2/3 to the new pool? In this case using a GCE instance at 600MB/s. Limited of course by the 750GB/day/account. And for safety I would temporarily detach the first cloud drive during the copy, to avoid mismatched chunks.

- 2 replies

-

- duplicate

- duplication

-

I don't know how to fix this, but if I'm supposed to be able to use the cloud drive (through Google) as a "normal" drive, it always behaves erratically. I've mentioned it a few times here, but the software will not even BEGIN uploading until the very last byte has been written to the local cache. For example, if I'm uploading 7 videos that total 100GB, then even with 99GB in the queue to upload, it won't start uploading until that last gigabyte is written to the local cache. This is frustrating because it causes two problems: error messages and wasted time.

The first big issue is the constant barrage of notifications that "there's trouble connecting to the provider", or downloading, or uploading, when it isn't even uploading! A consequence of these constant error messages is that the drive unmounts, because the messages trigger the software to disconnect. So unless I'm babying my computer, I can't leave the files transferring as normal (which means not pressing the pause upload button).

The other issue is the waste of time. Instead of uploading what's currently in the local cache and then deleting it from the queue, it waits for EVERYTHING to be written to the local cache.

Settings: UL/DL Threads = 10. Upload Threshold: 1 MB or 5 Minutes.

Error Message(s):

I/O error: Cloud Drive (D:\) is having trouble uploading data to Google Drive. This operation is being retried. Error: SQLite error cannot rollback - no transaction is active. This error has occurred 2 times. Make sure that you are connected to the internet and have sufficient bandwidth available.

I/O error: Cloud Drive (D:\) is having trouble uploading data to Google Drive in a timely manner. Error: Thread was being aborted. This error has occurred 2 times. This error can be caused by having insufficient bandwidth or bandwidth throttling. Your data will be cached locally if this persists.

If these two problems are fixed, this software would be perfect. I've recommended this program many times, because when you're monitoring it, it works beautifully. The only thing missing is fixing these issues so that it actually behaves like a normal drive that doesn't constantly throw up errors.

-

Hello! I would like to ask, what are the best settings for CloudDrive with Google Drive? I have the following setup and problems. I use CloudDrive version 1.0.2.975 on two computers, and I have one Google Drive account with 100TB of available space. When I upload data to the drive, the upload speed is perfect. On the first Internet line I get an upload speed of nearly 100 Mbit/sec. On the second Internet line (the slower one) I also get full upload speed (20 Mbit/sec). When I try to download something, the download speeds are incredibly slow. On the first line I get a download speed of 3 MB/sec. It should be 12.5 MB/sec (the max speed of the line). On the second line I get a download speed of 5 MB/sec. It should be 50 MB/sec (the max speed of the line). I have already set the upload/download threads to 12 on both computers. I have also set the minimum download size to 10.0 MB in the I/O Performance settings. What are the best settings to increase the download speed? Thank you very much in advance for any help. Best regards, Michael

-

Constant "Name of drive" is not authorized with Google Drive

irepressed posted a question in General

For the past couple of days, I have been getting constant "is not authorized with Google Drive" errors on my drives as soon as I try to upload to them. My account is a G-Suite unlimited account. I've uploaded about 50GB of data today and had to re-authorize all drives (I have 4) 2 to 3 times each. This is a real pain to deal with, and I lose access to my drives again and again. There does not seem to be any logic to it: the drives fail with this error one after the other, with a random amount of time between errors on each drive. As a side note, I have been uploading to only one of my 4 mapped drives. Does anybody know what the issue could be? Thanks.

-

So I have been using Google Drive with CloudDrive for months and months, and all of a sudden today I realized, when I ran a clean on my Plex server, that loads of files are missing. Since I run this daily, they were available yesterday and earlier today. In one video folder, every folder with a name that comes after P in the alphabet is missing. In another folder inside that same folder, half the files are missing. It only seems to affect one folder and its subfolders on the drive, and I have no explanation for the disappearance. I did a reboot earlier today, so it may have happened after that reboot when the drive was mounted, but none of the missing files are in the recycle bin, and unmounting and remounting has no effect. I have a few questions.

- Is there anything I could have done wrong, and how do I keep this from happening in the future?
- Is there a way to rescan the drive (??) in order to see if the file deletion is permanent?
- I have all the files backed up on Backblaze, so that's not a big deal, but should I build another drive instead of putting the files back on this drive?

Thanks for the help.

-

Hello, I want to copy my Amazon Cloud Drive to Google Drive. Is it possible to clone it 1:1? Copying all folders and files takes too long, because after some time Amazon Cloud Drive gets disconnected from StableBit CloudDrive and I have to click "retry" manually. Is it possible to clone the whole ACD StableBit CloudDrive to GDrive with rclone or similar tools? Thanks!

-

I spend almost 3 hours every day trying to keep my CloudDrive + CloudPool setup alive. For me it's a hard job, with crashes and an unresponsive system and GUIs. I have tried ReFS, NTFS, going virtual, going BETA, cluster size, adding an SSD, the SSD add-on, cache size... and different providers: Dropbox, Azure, FTP, Google Drive, Local... Nothing keeps CloudDrives working for more than a few hours. But my latest problem is that I sleep too long, so CloudDrive removes my hard drives after two bluescreens. When my drives are removed I see: [CloudDrives] Drive <ID> was previously unmountable. User is not retrying mount. Skipping. 10 drives are now 5 drives. And now I need to force it...? So... what to do now? Start all over again? OK, this was not CloudDrive related; I lost 2 SSDs with the last BSOD... sorry! But can I copy the cache folder to a new drive if I can recover the data?

-

Hi, I'm kind of new to CloudDrive but have been using DrivePool and Scanner for a long while and never had any issues at all with them. I recently set up CloudDrive to act as a backup to my DrivePool (I don't even care to access it locally, really). I have a fast internet connection (Google Fiber Gigabit), so my uploads to Google Drive hover around 200 Mbps, and I have successfully uploaded about 900GB so far. However, my cache drive is getting maxed out. I read that the cache limit is dynamic, but how can I resolve this, as I don't want CloudDrive taking up all but 5 GB of this drive? If I understand correctly, all this cached data is basically data that is waiting to be uploaded? Any help would be greatly appreciated! My DrivePool Settings: My CloudDrive Errors: The cache size was set as Expandable by default, but when I try to change it, it is grayed out. The bar at the bottom just says "Working..." and is yellow.

- 2 replies

-

- cache

- google drive

-

Hello All, I am new to StableBit CloudDrive and I just wanted to ask a quick question. I am currently bulk uploading about 1.5TB of data over 4 GDrive accounts. I have increased replication to 2 and the upload is currently in progress. Every so often I get the following error message from one of the drives: Error: Thread was being aborted. When setting up I followed this Plex user guide from Reddit: https://www.reddit.com/r/PleX/comments/61ppfi/stablebit_clouddrive_plex_and_you_a_guide/ which talks about upload threads potentially being a problem. I had them set exactly as it says in the guide. I have lowered them to: Upload: 5, Download: 5. Does this look OK? Is there anything else I can do? Is this something I can put back once the bulk upload has completed, as I will not be pushing 1.5TB in one go all the time?

- 1 reply

-

- gdrive

- google drive

-

I'm in the middle of doing a setup on G-suite. Seeing they don't cap space (yet), I want to go that route (I'll upgrade to the required number of users if it becomes an issue later on). For a cloud drive setup (aiming at 65TB to mirror my local setup), are there any recommended methods for storage? I saw another post here recommending various prefetch settings, but it was not clear on whether new drives should be sized a certain way. Should I create several 2TB drives, or max out at 10TB for each one, and then combine them into one share using DrivePool? Ultimately I'm hoping to get an optimal setup out of the gate, and it probably would not hurt to document that here for others to follow as well. Ideally I would use it with DrivePool so that it can be treated as one massive drive.

- 1 reply

-

- gdrive

- google drive

-

Hi, My current setup uses CloudDrive in conjunction with Google Drive for Plex, and it is working perfectly. I couldn't be happier. However, I would now like to have redundancy for the current Google Drive. To achieve that, do I just sync or copy the StableBit CloudDrive folder on Drive to the new Drive account? If one Drive account were to fall over, do I then simply link CloudDrive to the other account and it will pick up all the files? Thanks in advance!

-

Can't move my google drive sync folder to a drivepool drive

disposablereviewer posted a question in General

I'm using Windows 7. I'm on the latest stable release of DrivePool. I can't find any detail other than this bug that appears to have been ignored: https://stablebit.com/Admin/IssueAnalysisPublic?Id=1151 I keep getting the following error from Google Drive when trying to relocate the Google Drive folder to my DrivePool drive: 'please select a folder on an NTFS drive that is not mounted over a network.' I'm not sure why Google Drive thinks this, since the drive appears as NTFS in Windows storage management and I'm not trying to mount over a network. Here is another thread where this was pointed out on a different OS years ago: http://community.covecube.com/index.php?/topic/528-google-drive-and-stablebit-drivepool/

- 1 reply

-

- windows 7

- google drive

-

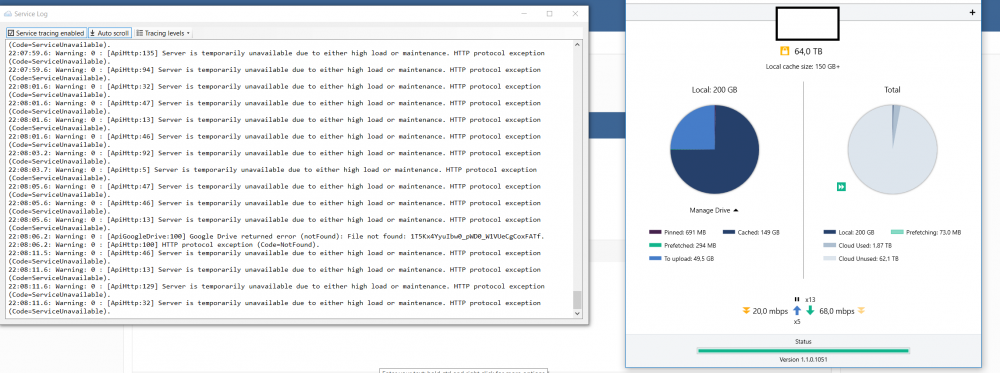

Hello Everyone, I just started testing CloudDrive and have run into a problem copying files to Google Drive. I can copy 1 or 2GB video files to the CloudDrive folder with no problems. I have trouble when I copy 5 or 10 2GB files: after the first couple of files are copied to the folder, the file copy process slows down. After a while, the copy aborts and OSX displays an error saying there was a read/write error. In CloudDrive's logs I noticed that Google Drive reported a throttling error:

22:26:22.1: Warning: 0 : [ApiGoogleDrive:14] Google Drive returned error: [unable to parse]
22:26:22.1: Warning: 0 : [ApiHttp:14] HTTP protocol exception (Code=ServiceUnavailable).
22:26:22.1: Warning: 0 : [ApiHttp] Server is throttling us, waiting 1,327ms and retrying.
22:26:23.5: Warning: 0 : [IoManager:14] Error performing I/O operation on provider. Retrying. The request was aborted: The request was canceled.

I thought write data is cached to the local drive first and then slowly uploaded to the cloud? Why would there be a throttling error when many large files are copied? Thanks.
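To see how often and for how long the service is being throttled, the wait times can be pulled out of the service log. The Python sketch below is based only on the single "Server is throttling us" line quoted above. It assumes that wording is stable and that the number uses a comma as the thousands separator, and `throttle_waits_ms` is a hypothetical helper name.

```python
import re

# Matches the wait time in lines such as:
# "[ApiHttp] Server is throttling us, waiting 1,327ms and retrying."
THROTTLE_RE = re.compile(r"Server is throttling us, waiting ([\d,]+)ms")


def throttle_waits_ms(log_text):
    """Return every throttling wait found in log_text, in milliseconds."""
    return [int(m.group(1).replace(",", "")) for m in THROTTLE_RE.finditer(log_text)]
```

Reading a whole log file and passing its text to `throttle_waits_ms` gives a list whose length is the number of throttling events and whose sum is the total time spent waiting.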

- 3 replies

-

- Throttling

- Google Drive
