JasonC

JasonC last won the day on December 27 2017

JasonC reacted to an answer to a question:

Show better information to find corresponding disk in Drivepool

-

Ah, thanks! I was trying to find something like that. I'll dig around the GUI again and look for that option.

-

It would be nice if Scanner showed something a little more useful for easily identifying the corresponding disk in DrivePool. I know deeper integration between the two has been asked for in the past; I'm not even asking for that much. I'd settle for just showing the label of the disk. Let's say Scanner identifies problems on a disk. You decide to remove it from your pool and replace it. If you have just a few disks, it might not be a big deal, but if you have a large number of disks, matching the disk in Scanner to the one in DrivePool is not impossible, but it _is_ painful. You have to do it by serial # or by the raw UNC path and work it backwards. If you haven't done something like put the serial # in the label of the disk, this is a pain in the butt when you have a lot of similar disks, because you have to check each one individually to see the serial #. Simply showing the corresponding pieces of identifying data in one or both apps would make this go away. I'm not asking for deep integration, just a simple QOL change. It's already worrisome enough that you might eject the wrong disk; this is overly complicated only because not even a basic effort has been made to make it not a problem. Unless there is a simple way, in which case please do tell me, and I apologize for the post.
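In the meantime, the serial-number lookup described above can be done once and then reused. This is only a minimal sketch: it assumes you have already collected one record per pooled disk (serial number, volume label, mount path) by hand or with your own tooling. The field names and sample values are hypothetical and are not anything Scanner or DrivePool exports.

```python
from typing import Dict, List, Optional


def index_by_serial(disks: List[Dict[str, str]]) -> Dict[str, Dict[str, str]]:
    """Build a lookup keyed by serial number, normalized for case and whitespace."""
    return {d["serial"].strip().upper(): d for d in disks}


def find_pool_disk(serial: str, pool_disks: List[Dict[str, str]]) -> Optional[Dict[str, str]]:
    """Return the pool-side record matching a serial reported elsewhere, or None."""
    return index_by_serial(pool_disks).get(serial.strip().upper())


# Hypothetical sample data, typed in by hand for illustration only.
pool_disks = [
    {"serial": "WD-AAA111", "label": "Bay01", "mount": r"C:\Mounts\Bay01"},
    {"serial": "WD-BBB222", "label": "Bay02", "mount": r"C:\Mounts\Bay02"},
]

# Serial as it might be copied from another tool, with stray case and spaces.
match = find_pool_disk(" wd-bbb222 ", pool_disks)
print(match["label"] if match else "no match")
```

Normalizing the serial before comparing avoids false misses when one tool pads or lower-cases the value.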

-

It doesn't look like there were any changes in the current version based on this, but I'm also running into this. The issue here is partly because of how Windows presents USB disks. It either looks like the USB interface is the controller, or, for UAS-capable devices, it looks like there is essentially a UAS virtual controller that acts as a SCSI interface shim to those devices. But that looks like a single controller as well. Neither case really represents the physical situation (in my case, I have 2 USB enclosures with backplanes). What would be nice, and might work, is if we could set the scan to work on a "per assigned enclosure" level. But even that isn't quite ideal, as it wouldn't be too difficult to saturate the USB controller. That's also assuming you are able to get a full USB 3.1 connection. I know in my situation, I'm a bit limited on the disk interface side of things at the moment due to still having some older SATA I disks, which limits all the devices on the backplane to SATA I. Until I finish rotating those all out, I won't be able to utilize SATA III speeds, so the USB interface speed isn't even a concern, but the number of simultaneous scans still is. Actually, it's more of a concern in my case, as I don't really have any excess bandwidth available.
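To put rough numbers on the saturation concern, here is a back-of-the-envelope sketch. The line rates and encodings are the nominal figures for SATA I and for 5 and 10 Gbit/s USB. The per-disk scan rate is an assumption picked for illustration, and real USB throughput will be lower than these ceilings because of protocol overhead.

```python
def usable_mb_per_s(line_rate_gbps: float, encoding_efficiency: float) -> float:
    """Convert a line rate in Gbit/s to payload MB/s after line encoding."""
    return line_rate_gbps * 1000 / 8 * encoding_efficiency


def max_full_speed_scans(link_mb_per_s: float, per_disk_mb_per_s: float) -> int:
    """Number of sequential scans that fit before the link becomes the bottleneck."""
    return int(link_mb_per_s // per_disk_mb_per_s)


sata1 = usable_mb_per_s(1.5, 8 / 10)         # SATA I, 8b/10b encoding: about 150 MB/s
usb_5g = usable_mb_per_s(5.0, 8 / 10)        # 5 Gbit/s USB, 8b/10b: about 500 MB/s
usb_10g = usable_mb_per_s(10.0, 128 / 132)   # 10 Gbit/s USB, 128b/132b encoding

# Assumed sequential read rate of one disk during a surface scan (hypothetical).
per_disk = 150.0

for name, link in (("USB 5 Gbit/s", usb_5g), ("USB 10 Gbit/s", usb_10g)):
    print(f"{name}: ceiling {link:.0f} MB/s, "
          f"{max_full_speed_scans(link, per_disk)} scans at {per_disk:.0f} MB/s each")
```

Even under these optimistic assumptions, only a handful of disks per enclosure can scan at full speed at once, which is why a per-enclosure limit alone would not fully solve the problem.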

-

Hello, I've been encountering an error when attempting to have multiple simultaneous downloads from a single Firefox client go to a network share of a DrivePool disk. When I attempt to start the second download, I usually (more than 90% of the time, though not always) get a "No More Files" error. This persists until the initial download is complete, and then it works fine. It doesn't happen if I save to a local disk. I'm just looking for possible causes, as DrivePool is the "unusual" bit of the setup here. I wouldn't normally expect to have a problem opening multiple files for writing at once on a Windows share. So I am basically asking if anyone has seen this before and can pin it on DrivePool. I do need to do more testing with DrivePool out of the mix etc.; it's just a bit of a chore to set up all of the various scenarios I can think of to narrow the cause down, so I haven't gotten that far yet. I saw the thread "Beware of DrivePool corruption / data leakage / file deletion / performance degradation scenarios Windows 10/11", and some of the information in it does make me wonder if I could be running into something related. Thanks!
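One way to take Firefox out of the picture while narrowing this down is a small script that opens several files for writing at the same time in a chosen directory. This is only a rough sketch: it does not reproduce whatever Firefox does internally when it starts a download, and the function names are made up for illustration. Run it against a local folder as a baseline, then against the share path.

```python
import os
import tempfile
import threading
from typing import List, Tuple


def write_file(path: str, total_bytes: int, chunk: int,
               errors: List[Tuple[str, Exception]]) -> None:
    """Write total_bytes of zeros to path in chunks; record OSError instead of raising."""
    try:
        with open(path, "wb") as f:
            written = 0
            while written < total_bytes:
                n = min(chunk, total_bytes - written)
                f.write(b"\0" * n)
                written += n
    except OSError as exc:
        errors.append((path, exc))


def concurrent_write_test(target_dir: str, files: int = 2,
                          total_bytes: int = 8 * 1024 * 1024) -> List[Tuple[str, Exception]]:
    """Write several files at once in target_dir and return any errors seen."""
    errors: List[Tuple[str, Exception]] = []
    threads = [
        threading.Thread(
            target=write_file,
            args=(os.path.join(target_dir, f"concurrent_test_{i}.bin"),
                  total_bytes, 64 * 1024, errors),
        )
        for i in range(files)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return errors


# Baseline run against a temporary local directory; swap in the share path to compare.
with tempfile.TemporaryDirectory() as d:
    print(concurrent_write_test(d))
```

If the share fails here as well, the browser is not the cause; if it only fails from Firefox, the difference in how the browser creates its files is the next thing to look at.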

-

Is anyone else out there using Backblaze to do their backups? I've been experimenting with it, trying to maximize my backup performance, and it seems to be quite bad when using the pool as the backup source. Individual files are backing up at a decent speed, but there seems to be some kind of latency between small and moderate-sized files which really adds up over time. Backblaze thinks it can do between 2-3TB a day based on the raw speed, but in practice it's not approaching that at all. I'm wondering what other people's experience is with this. I'm currently testing mounting a pooled disk to a drive letter and backing it up directly, and so far this seems to be doing significantly better. That's a little unfortunate, because I currently have 14 disks in my pool. Mounting to directories doesn't help, because from what I've seen Backblaze doesn't follow reparse points (which is how mounting disks to folders works), so I will have to mount to drive letters as well if that's the only way to get optimal performance out of Backblaze. I've of course seen the arguments that this is better anyway, since you are likely to only have to replace a single disk, and identifying what's missing in a backup for a restore is more difficult if you are choosing across the whole pool rather than an individual disk. I think I worked that problem out using Everything to index at the mount point level, but it's a moot point if I need to use drive letters to get reasonable performance. Anyway, I wondered what other people's experiences with using Backblaze might be, and if anyone knows how to optimize the configuration so as to get good performance out of Backblaze+DrivePool. While I'm on the subject, I am not clear if I should have DrivePool bypass filters or not while using Backblaze... I've read the examples of where you'd maybe want to or not want to do so, but I've still not wrapped my head around it well enough to decide if this could be problematic for DrivePool or not.
I suspect, though, that it probably doesn't matter right now, since I'm leaning towards just hitting the individual disks directly and excluding the pool disk. Thanks!
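To illustrate how a small per-file gap can pull the daily total far below the 2-3TB estimate, here is a rough model. It assumes every file costs its transfer time plus one fixed delay. The average file size and the gap values are made-up inputs, not measurements from Backblaze or DrivePool.

```python
SECONDS_PER_DAY = 86_400


def tb_per_day_to_mb_per_s(tb_per_day: float) -> float:
    """Convert decimal TB/day to MB/s."""
    return tb_per_day * 1_000_000 / SECONDS_PER_DAY


def effective_tb_per_day(raw_mb_per_s: float, avg_file_mb: float, gap_s: float) -> float:
    """Daily throughput when each file costs its transfer time plus a fixed gap."""
    seconds_per_file = avg_file_mb / raw_mb_per_s + gap_s
    return avg_file_mb / seconds_per_file * SECONDS_PER_DAY / 1_000_000


raw = tb_per_day_to_mb_per_s(2.5)  # midpoint of the 2-3TB/day estimate
for gap in (0.0, 0.5, 2.0):        # hypothetical per-file gaps, in seconds
    print(f"gap {gap:.1f}s -> {effective_tb_per_day(raw, 10.0, gap):.2f} TB/day")
```

With 10MB files, the transfer itself takes only about a third of a second at the raw rate, so a gap of even half a second dominates the total. That would be consistent with individual files looking fast while the overall backup crawls.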

-

Hmmm, interesting idea. I have PrimoCache, but I thought it only worked with memory. I'll have to investigate that possibility. The disk itself is actually sort of dedicated to caching operations; it's also where my rclone mounts perform caching.

-

The title is pretty much the question. I have a disk I've added to act as a temp SSD cache (related to my other recent questions) until I can get another disk added as a more permanent cache. That disk has other files on it which are (intentionally) not part of the pool. If/when I remove the disk from the pool, I know it copies pool data off. Does it leave any other data on the disk intact, i.e. things not in the hidden pool folder? I assume it does; I'm just looking for confirmation. Thanks!

-

With the duplication feature, is it possible to designate the target disks? I had a Windows upgrade garble all the disks in an enclosure, so duplication wouldn't really help me if the duplicates were all on disks in that same enclosure or connected to that same controller. Thanks!

-

I'd like to get the SSD Optimizer as close to a true write cache as I can, at least as far as keeping the amount of space used on the SSD as minimal as possible over the long term. From the comments in "SSD Optimizer problem", I've configured my Balancing settings to: activate with at least 2GB of data; balance ratio of 100%; run not more than every 30 minutes. The SSD Optimizer balancer itself I have configured as: fill SSD to 40% (around 400GB); fill archive drives to 90%, or until free space is at 300GB. Does this seem like it should do roughly what I want? I was a little concerned the balancing ratio was going to cause my archive disks to all re-balance, though none of them hit either of the fill settings I have, so I think I'm OK there? I'm a little fuzzy on that ratio. With it set to 100%, does that mean it should trigger the move off the SSD as long as it holds more than 2GB of data, or does it still wait for the 40% mark? (The interaction between the ratio and the % full triggers is where I'm unclear.) These are semi-short-term settings. I'm performing a data recovery at the moment, so I'm doing a lot of writes, which is why I want to cache to fast storage but offload ASAP. Thanks!

-

Donald Newlands reacted to a question:

"File Scan" option not always doing anything

-

Recently I had an issue that caused me to move my array over to another disk. I think the issue caused a problem, as 5 disks show up with damage warnings. In each case the damaged block is shown to be the very last one on the disk. I assume this isn't some weird visual artifact where blocks are re-ordered to be grouped? Anyway, clicking on the message about the bad disk of course gives a menu option to do a file scan. I decided to go ahead and do this, and of the 5 disks, I think only one actually appears to do anything: you can see it start trying to read all the files on the disk. For the others, the option just seems to return pretty much instantly, which makes no sense to me. They don't error or fail, or otherwise indicate a problem; they all just act like maybe there are no files on those disks to scan (there are). The disks vary in size: 2x4TB, and the others are 10TB. One of the 4TB disks scanned fine and indicated no problems, but it's not clear why it would work and the others wouldn't. Ideas? Thanks!

-

I have just under 10TB free on my array, but any time I try to copy something to it, it gives me a "not enough space free" error. This happens even with very small files. I'm not sure how long it's been doing this; just accessing files for read (which is what I'm doing most of the time) is fine. I haven't yet rebooted because the machine is in use, so I thought I'd check for other ideas in the meantime. I forgot to mention, I seem able to create folders fine, so it's not locked into a total read-only state. I just can't seem to write if a free space check is performed, I guess? Thanks!

-

Right, except the problem is that chkdsk wants to run on an underlying disk (2 disks, actually), not the pool. And it wants to unmount the disk to be able to perform fixes, i.e. I either need to remove the disk from the pool, since it would be unmounted, or I need to have it run at reboot, before the disk is mounted and has files in use by the pool. Not all chkdsk operations can be performed on a mounted file system, basically. So while it's not directly a DrivePool operation, it impacts DrivePool because it's affecting underlying NTFS structures in a repair scenario.

-

I need to run a chkdsk on a few drives in my pool, and I'll need to have them be offline to do so. Rather than take the entire machine down, can I remove a disk, check all the check boxes, and then, after I run the repairs, re-add the disk to the pool? Will the contents just get merged like it had never left? Thanks!

-

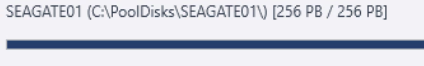

As much as I'd like to have a 256PB disk, it's a little disconcerting. It's also doing some odd things to the metrics... for instance, it shows unduplicated file system storage as 256PB, but it still shows the free space of the pool correctly. The rest of Windows is seeing the disk correctly (Disk Management sees the size correctly, etc.). I am on the 2.3.0.1244 beta, so if it's that, that's fine, as long as it's just a visual error.

-

Well, found the problem. I didn't really put together what having an I/O default in the app settings implied... and just found that the I/O settings for the drive are at 1.5Mbps. I must have set it to that when I first installed and configured it, and didn't realize what I had done at the time. So... I've also just reverted to the generic key, so I'll see how that goes. Thanks!