RG9400


Everything posted by RG9400

-

I would just like to say that this is fairly disappointing to hear. Though they added limits, a lot of people are still able to work within those limits. And the corruption issue hasn't been present for many years as far as I can tell -- the article in the announcement by Alex is from April 2019, so over 5 years ago. I get the desire to focus resources though, and I'm glad it still works in the background for now. Hopefully there are no breaking changes from Google, and I hope an individual API key is going to have reasonable limits for the drive to continue working. But yeah, switching providers is not very realistic in my case, so I guess I'll use it until it just breaks.

-

Version 1.2.0.1386 BETA

A few versions (a week or two) ago, I noticed that my CloudDrive was not uploading as much data as it normally does. It seems to get stuck at random points and then starts a pinning metadata task. After the task is done, the amount cached locally (at least as reported by CloudDrive) decreases, and the amount to upload increases. The change is usually only around 5 GB, but since this happens every few hours, it compounds and slows down the overall upload. I ran chkdsk on every partition in the drive, and I even forced a startup recovery in case it needed to reindex all the local data. However, it has started happening again. Has anyone else noticed this, or does anyone know of a solution? I don't see any actual errors.

-

The DrivePool disk works in Docker containers using a WSL2 backend, but the performance is abysmal, especially compared to the CIFS mount I was using before. I've gone back to CIFS for now. A Plex library scan took almost 2 hours using the DrivePool automount. As a CIFS volume, the same folder was scanned in 5 minutes.
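For anyone who wants to reproduce this kind of comparison without waiting on Plex, a rough Python sketch is below. It walks a folder and stats every file, which approximates only the metadata-heavy part of a library scan (it never reads file contents, so it is not a full Plex scan). The paths `/mnt/p/Media` and `/mnt/drivepool-cifs/Media` are hypothetical placeholders for the same folder seen through the DrvFs automount and through the CIFS volume, and `time_tree_walk` is an illustrative helper, not part of any DrivePool or Docker tooling.

```python
import os
import time
from pathlib import Path


def time_tree_walk(root: str) -> tuple[int, float]:
    """Stat every file under `root`; return (file_count, elapsed_seconds).

    This approximates only the metadata lookups of a library scan; it never
    opens or reads file contents."""
    start = time.perf_counter()
    count = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            os.stat(os.path.join(dirpath, name))  # one metadata lookup per file
            count += 1
    return count, time.perf_counter() - start


if __name__ == "__main__":
    # Hypothetical paths: the same media folder via DrvFs and via the CIFS volume.
    mounts = {
        "drvfs": "/mnt/p/Media",
        "cifs": "/mnt/drivepool-cifs/Media",
    }
    for label, path in mounts.items():
        if not Path(path).is_dir():
            print(f"{label}: {path} not found, skipping")
            continue
        files, seconds = time_tree_walk(path)
        print(f"{label}: {files} files in {seconds:.1f} s")
```

A second run on the same mount may come back faster because of caching, so comparing first runs on each mount is probably the fairer test.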

-

I can confirm: it seems my DrivePool is accessible from WSL 2 now, and I can mount and use it from Docker containers like any other DrvFs mount (automounted Windows volumes).

-

In the meantime, if anyone wants to get this working with Docker for Windows using WSL2 as a backend, you don't actually have to do anything too complex. If you use Compose, you can create a named volume like the one below and then mount it into other containers:

```yaml
volumes:
  drivepool:
    driver_opts:
      type: cifs
      o: username=${DRIVEPOOLUSER},password=${DRIVEPOOLPASS},iocharset=utf8,rw,uid=${PUID},gid=${PGID},nounix,file_mode=0777,dir_mode=0777
      device: \\${HOSTIP}\${DRIVEPOOLSHARE}
```

Performance is what you would expect from a CIFS mount. I'm not sure whether DrvFs is better or not, but at least it works.
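One thing worth checking with this setup: if any of the variables in the compose snippet is unset, Compose substitutes an empty string (with a warning), which would hand the CIFS mount a malformed option string. A small preflight sketch in Python is below, assuming the same variable names as the snippet; `missing_vars` and `cifs_options` are hypothetical helper names, and the script only ever prints variable names, never the password.

```python
import os

# Same variable names as the compose snippet; values are site-specific.
REQUIRED_VARS = [
    "DRIVEPOOLUSER",
    "DRIVEPOOLPASS",
    "PUID",
    "PGID",
    "HOSTIP",
    "DRIVEPOOLSHARE",
]


def missing_vars(env: dict[str, str]) -> list[str]:
    """Return required variables that are unset or empty in `env`."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def cifs_options(env: dict[str, str]) -> str:
    """Build the `o:` string that Compose would produce after substitution."""
    return (
        f"username={env['DRIVEPOOLUSER']},password={env['DRIVEPOOLPASS']},"
        f"iocharset=utf8,rw,uid={env['PUID']},gid={env['PGID']},"
        "nounix,file_mode=0777,dir_mode=0777"
    )


if __name__ == "__main__":
    missing = missing_vars(dict(os.environ))
    if missing:
        # Print names only; never echo the password.
        print("Unset or empty before `docker compose up`:", ", ".join(missing))
    else:
        print("All DrivePool volume variables are set.")
```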

-

+1 from my side. I've been using WSL2 extensively, and it's been hard to work around DrivePool's lack of support. Windows is heading in a direction where it works in harmony with Linux, so it would be nice for the software to support that. I am able to cd into various directories on the mount that Windows creates natively, but I can't actually list any files, and no software running within WSL2 can see them either.

-

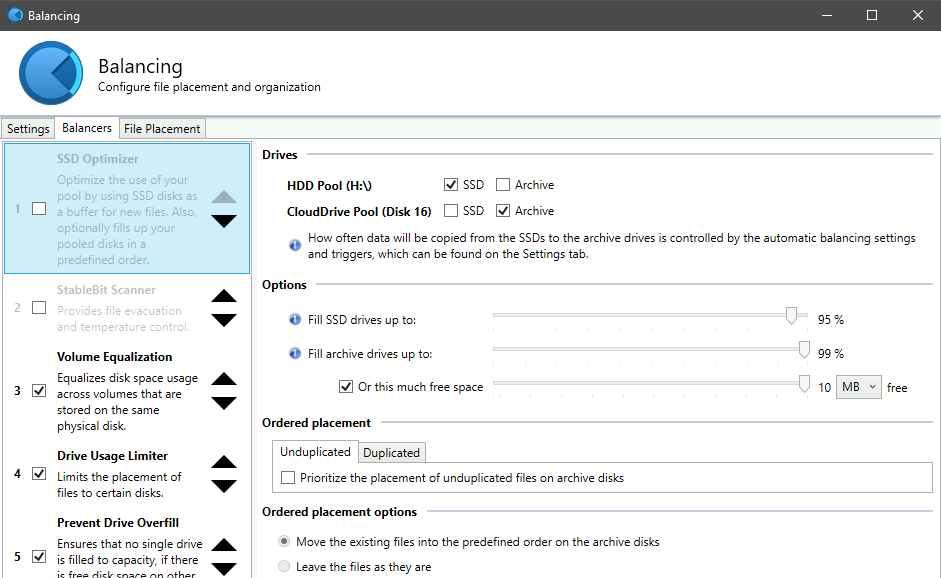

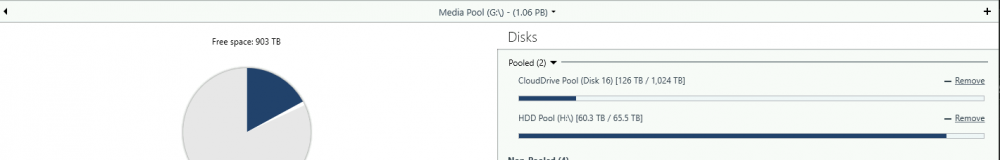

I did some reorganizing and basically created a pool of pools. The top-level pool contains an HDD pool (a bunch of local hard disks) and a CloudDrive pool (a bunch of CloudDrive partitions). Now the optimizer plugin seems to be available, and I can properly set the HDD pool as the SSD and the CloudDrive pool as the Archive. This seems fairly close to what I want, but I still don't know how the balancing would work.

1. Does it try to move *all* files off the SSD into the archive in the first balancing run? Does it run for a set amount of time, or, once it starts, will it try to move everything? The reason I ask is that I have a ton of data on the HDD pool that cannot move to the CloudDrive pool right away. I only see options to balance immediately or to balance at a set time every day, which may indicate there is no way to move the files over time.
2. Are the files being moved inaccessible during this time? Can this create issues if applications like Plex are running?
3. If balancing is running and the files being moved are too large for my CloudDrive cache, I assume writes will be slowed, and the whole balancing task will keep running as data is uploaded from the CloudDrive. If I add a file during this time, will it get placed on the HDD pool since that is still the SSD? Basically, could I theoretically be running balancing 24/7, with files being added to the HDD pool as others are moved to the CloudDrive pool? The concern is that those files may be inaccessible while being moved, and I guess my cache drive would perpetually be at 99% capacity with slowed writes and under heavy wear.
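To make question 1 concrete, here is a hypothetical sketch of what an incremental pass could look like: each run moves only the oldest files that fit within a byte budget and leaves the rest for the next run. This illustrates the idea only; it is not how DrivePool's SSD Optimizer actually schedules moves, and `PoolFile` and `plan_pass` are made-up names.

```python
from dataclasses import dataclass


@dataclass
class PoolFile:
    path: str
    size: int     # bytes
    mtime: float  # last-modified time, seconds since epoch


def plan_pass(files: list[PoolFile], budget_bytes: int) -> list[PoolFile]:
    """Pick the oldest files whose combined size fits in `budget_bytes`.

    Files that do not fit are skipped (never split), so a later, smaller
    file can still use the remaining budget. Anything not picked simply
    waits for the next pass."""
    picked: list[PoolFile] = []
    remaining = budget_bytes
    for item in sorted(files, key=lambda item: item.mtime):
        if item.size <= remaining:
            picked.append(item)
            remaining -= item.size
    return picked


GB = 1024**3
files = [
    PoolFile("a.mkv", 40 * GB, 1.0),
    PoolFile("b.mkv", 30 * GB, 2.0),
    PoolFile("c.mkv", 5 * GB, 3.0),
]
print([f.path for f in plan_pass(files, 50 * GB)])  # ['a.mkv', 'c.mkv']
```

Skipping a file that does not fit, instead of stopping at it, keeps the budget from going to waste when one large file is next in line.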

-

Yeah, I knew about the cache drive limitation, which is unfortunate. I was actually thinking of doing it the other way around. Basically, the CloudDrive stays on a single SSD cache, and then I add a local hard drive to a pool with the CloudDrive. I set DrivePool to write new downloads to the local HDD first, and then use the SSD Optimizer or some other balancing mechanism to move files from the HDD to the CloudDrive. This way, the CloudDrive cache remains on a single SSD, but I have an upload buffer via the HDDs. I am not sure if this is feasible. I think the biggest concern would be how to move the files from local to CloudDrive, given that the pool will not see the space of the underlying cache.

EDIT: It does not seem like my pool with CloudDrives in it allows the balancing plugins to actually work. The options seem disabled, though I felt the SSD Optimizer plugin was close to what I wanted in theory, with the HDD acting as the "SSD" and the CloudDrive acting as the Archive.

-

I've been thinking about a new setup, and I wanted to float an idea to see if it works (pros/cons). Right now, I have my CloudDrive mounted to a single Optane SSD (C:). Speeds are great, but the drive is limited to 1 TB, and with my slow upload it is almost always full, so it's hard to copy new data over. To work around this, I have a bunch of HDDs that I added to a DrivePool pool along with the CloudDrive. I copy new data to that pool and then, once the most recent data has uploaded, manually move it to the PoolPart folder in C:. It is manual and cumbersome.

Could I do something like this instead? I create a pool of my HDDs, then add that to the CloudDrive pool. I set up the CloudDrive pool to place new data on the HDD pool, and then use the plugin to move data from the HDD pool to the CloudDrives. This way, my pool sees all the data, but the underlying locations are managed automatically. If this scenario is feasible, I do have a few questions:

1. If I have a file on the HDD portion and I do a "move" via my pool, will it remain on the HDD portion? Will it remain on the same disk it was downloaded to initially?
2. Can I control the plugin so that it moves data to the CloudDrives based on the cache drive's free space? The CloudDrives will always look empty/have tons of space, but my underlying cache may not. I am concerned that the plugin will constantly try to move data to the cache drive, leaving it full 100% of the time and slowing writes down.
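For question 2, the underlying calculation is simple if something outside the plugin does the moving: look at free space on the cache volume (not on the CloudDrive, which always looks nearly empty) and keep a reserve so the cache never sits at 100%. A minimal Python sketch is below, assuming the cache lives on C: as described; `budget_from_free` and `move_budget` are hypothetical helpers, and free space on the cache volume is only a proxy for what CloudDrive can absorb, since CloudDrive manages its own cache.

```python
import shutil
from typing import Optional


def budget_from_free(free_bytes: int, reserve_bytes: int,
                     max_per_run: Optional[int] = None) -> int:
    """Bytes that can be moved while leaving `reserve_bytes` free on the cache."""
    budget = max(0, free_bytes - reserve_bytes)
    if max_per_run is not None:
        # Optional cap so a single run cannot flood the cache.
        budget = min(budget, max_per_run)
    return budget


def move_budget(cache_root: str, reserve_bytes: int,
                max_per_run: Optional[int] = None) -> int:
    """Read free space from the cache volume (not the CloudDrive volume)."""
    free = shutil.disk_usage(cache_root).free
    return budget_from_free(free, reserve_bytes, max_per_run)
```

For example, `move_budget("C:\\", reserve_bytes=100 * 1024**3)` would report how many bytes could be moved while keeping 100 GiB free on C:; the 100 GiB reserve is an arbitrary example value.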

-

You should be able to Re-Authorize the drive, which would make it start using the new API key.

-

I just "Re-Authorized" the drive. You can check by going to the API console, and you should see queries for the Google Drive API -- the Quotas tab is what I am using to monitor it.

-

The main reason is that Windows cannot run various fixes and maintenance tools (e.g. chkdsk) on drives larger than 64 TB. It cannot mount a partition larger than 128 TB either. Some people are also concerned that, historically, various partitions got corrupted due to outages at Google, so by limiting the size of any individual partition, you also limit the potential losses.
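As a quick worked example of sizing partitions under the 64 TB chkdsk ceiling mentioned above: the partition count is the total divided by the per-partition limit, rounded up. The Python sketch below takes the 64 TB figure from this post as an assumption; in practice you may want to leave some headroom below the limit, and the 1000 TB total is only an example.

```python
import math


def split_drive(total_tb: float, max_partition_tb: float = 64.0) -> tuple[int, float]:
    """Return (partition_count, size_per_partition_tb) so that no partition
    exceeds `max_partition_tb`. The 64 TB default is the chkdsk limit cited
    above; treat it as an assumption."""
    if total_tb <= 0 or max_partition_tb <= 0:
        raise ValueError("sizes must be positive")
    count = math.ceil(total_tb / max_partition_tb)
    return count, total_tb / count


print(split_drive(1000))  # (16, 62.5)
```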

-

Appreciate it, and love the vision for this cloud dashboard. Very excited to see future new features.

-

That makes sense, and it was also my experience after enabling this new feature. One extra development that could really help is to display a changelog alongside the release notification. It helps to know which new features and bug fixes are being pushed in each release, and it's a bit cumbersome to find the various changelogs for each application (it seems the beta and final releases for each app have separate .txt files).

-

I saw that the latest dev Beta version of CloudDrive allows for automated updates via StableBit Cloud. This seems like a useful feature, but I wanted to know exactly what it means before I turn it on. Right now, after updating CloudDrive, I have to restart the computer because of something to do with the drivers. If an automated update gets downloaded, would my computer restart automatically, would it need to be restarted manually, or can the various applications now update without restarting the computer? My main worry is that by enabling automatic updates, I end up with something like Windows Update, where an update is pushed that forces the computer to restart (either immediately or after a delay).

-

Do you have pinned data duplication enabled? In my case, I found that when it ran weekly cleanups, memory usage increased dramatically. I am on .1184 and still see that when it runs cleanup, so I turned off pinned data duplication for now. I do notice my system is a bit unstable, and I am not sure that CloudDrive is the culprit. With CloudDrive no longer doing weekly cleanups, though, things are running more or less smoothly. I had found .1145 to be very stable, and if you are convinced the BSOD issue is due to CloudDrive, it may be worth downgrading to that version to see how it works. Just uninstall CloudDrive via the Windows Add/Remove Programs feature, restart your computer, download and install .1145 from http://dl.covecube.com/CloudDriveWindows/beta/download/, and run cleanup. If you had pinned data duplication on, you would also want to turn that off now. It should work; please report back if it helps. It would give me some evidence as to whether my issues are related to yours or separate.

-

Current: I have a CloudDrive with multiple partitions, and I have pooled them together using DrivePool. It is mounted on my SSD, which has around 1 TB of space, with 200 GB set for the CloudDrive cache (expandable). I also have a bunch of HDDs storing local data that has yet to be uploaded to the CloudDrive. I added these HDDs to the aforementioned pool so that applications like Plex can access the files before they are uploaded. Since space is limited on my cache drive, the upload process has been a bit of a pain. I currently check whether there is space on the cache drive as data is constantly being uploaded. If there is, I manually move files out of an HDD "poolpart" folder into the drive's own directory, then copy them back onto my pool, where they are now placed on the CloudDrive partitions because those have a lot more free space than the HDDs. Then I wait a day until the data is uploaded and repeat.

Goal: Is there a way to use DrivePool to automate the above? Basically, as my cache drive empties via uploads (in my config, the cache drive itself is not in any pool), the local HDDs would move data over to the CloudDrive partitions. Ideally this would happen in the background, and for all application purposes the pool itself would remain the same; only the files would shift from HDD -> Cache -> Cloud. Then, when I download future data, it could be placed on the local HDDs and added to the queue. In short, my goal is to let the multiple HDDs serve as an extension of the upload queue while keeping the cache portion on the SSD itself. I feel like the SSD Optimizer is close to doing this, but as I understand it, the problem is that it uses free space on the CloudDrive partition instead of on the cache drive itself. So the partitions might only be 5% full while the cache drive is 95% full.
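The manual routine described under "Current" can be scripted outside DrivePool. The Python sketch below mirrors it step by step: pick the oldest files in an HDD's hidden PoolPart folder that fit within the free space on the cache drive (minus a reserve), move each one out to a staging folder, then move it back in through the pool path so DrivePool chooses where it lands. All paths are hypothetical, `drain_once` is a made-up name, it defaults to a dry run, and it relies on DrivePool placing the file on a CloudDrive partition because those have more free space, exactly as observed above. Each file is invisible to Plex between the two steps, same as when done by hand.

```python
import shutil
from pathlib import Path


def drain_once(hdd_poolpart: str, staging_dir: str, pool_root: str,
               cache_root: str, reserve_bytes: int, dry_run: bool = True) -> list[str]:
    """Move the oldest files that fit in the cache budget from an HDD PoolPart
    folder back into the pool. Returns the relative paths handled, in order."""
    src = Path(hdd_poolpart)
    # Oldest first, so data that has waited longest gets uploaded first.
    files = sorted((p for p in src.rglob("*") if p.is_file()),
                   key=lambda p: p.stat().st_mtime)
    # Budget = free space on the cache volume minus a safety reserve.
    budget = max(0, shutil.disk_usage(cache_root).free - reserve_bytes)
    handled: list[str] = []
    for path in files:
        size = path.stat().st_size
        if size > budget:
            continue  # does not fit in what is left; try again on a later run
        rel = path.relative_to(src)
        if not dry_run:
            staged = Path(staging_dir) / rel
            staged.parent.mkdir(parents=True, exist_ok=True)
            # Step 1: take the file out of the pool (a fast rename when
            # staging_dir is on the same HDD).
            shutil.move(str(path), str(staged))
            dest = Path(pool_root) / rel
            dest.parent.mkdir(parents=True, exist_ok=True)
            # Step 2: write it back through the pool; DrivePool picks the disk.
            shutil.move(str(staged), str(dest))
        budget -= size
        handled.append(rel.as_posix())
    return handled
```

A hypothetical call would be `drain_once(r"D:\PoolPart.xxxx", r"D:\staging", "P:\\", "C:\\", reserve_bytes=100 * 1024**3, dry_run=False)`, scheduled once a day with Task Scheduler; `PoolPart.xxxx`, `D:`, `P:` and the reserve are placeholders.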

-

Can you check whether the drive was performing cleanup? On .1184, I have noticed that the drive has no trouble uploading on a routine basis, but whenever it has to clean up data (every week by default if you turn on Pinned Data Duplication), it starts aborting threads. It also ends up using up to 14 GB of memory, and it has crashed the CloudDrive service and my PC before. I have opened a ticket and am awaiting a reply, but it is possible the issue is related. While I am debating going back to .1145 (the last version that was stable for me), for now I just restart the service when it enters cleanup mode. It says the drive needs to perform cleanup, but I just don't click on it.

-

Have you been using the latest release without issues? I installed .1173 earlier, and while the upload issue seemed to be fixed, my computer would randomly shut off (probably BSOD crashes) every couple of hours. I installed the latest versions of DrivePool, Scanner, and Windows at the same time, so it could have been one of those; however, since reverting to .1145 on CloudDrive, I haven't faced the issue again. I am hesitant to update now, so I'm waiting until I hear it's fully stable.

-

No, you won't lose it. I was worried, but uninstalling, rebooting, and then reinstalling .1145 worked perfectly. It did not even need to "recover"; it found the data right away. If you had pinned data duplication on, I would turn it off and run a cleanup on the drive just to be safe, since that option is not available in .1145. I forgot to do that myself, and the only effect was that the drive showed full data duplication turned on when .1145 started. I turned it off and ran cleanup at that point with no issues, but better safe than sorry.

-

I had this issue and rolled back to .1145, which works perfectly. To do this, I first turned off pinned data duplication, since it is not available in .1145, and cleaned the drive. Once all of that was done, I uninstalled CloudDrive and restarted my computer. Finally, I reinstalled .1145, and it worked right away.

-

I/O error: Thread was being aborted....Can't upload anything

RG9400 replied to ilikemonkeys's question in General

I had this problem when I was using .1159 as well. I downgraded back to .1145 to fix it. I opened a ticket and sent my logs, but I have no idea whether they figured out the problem.

-

Sure. I purchased PartedMagic and installed it onto a USB drive. I then booted from this USB drive to run a Secure Erase, but it was not able to complete successfully. So I ran DD (also through PartedMagic) on the drive around 5 times. I then converted the disk to GPT using diskpart and installed a fresh copy of Windows. I used CHKDSK, StableBit Scanner, and Intel SSD Toolbox (Full Diagnostic) to confirm that reads and writes were functioning as intended. Based on what I could understand from Intel, Optane drives are fairly unique due to their use of 3D XPoint technology, which caused the specific/strange behavior I was facing.

-

Some background: I am typically constantly moving items to my CloudDrive, so the "To Upload" amount is always around 500 GB. It is mounted on my Intel Optane 905P NVMe SSD. A few days ago, while I was away, one of my automated programs tried to update the SSD driver, and upon reboot the SSD would not boot. At that time, the CloudDrive still had 500 GB left to upload. Since I was remote, the computer got stuck in a restart cycle. When I was able to get to it, the only option I had was to reinstall Windows. While mounting the CloudDrive, I had a BSOD related to Memory Management. Long story short, the CloudDrive went through a forced reattach that was cut short, and then a recovery. It has now mounted successfully.

However, Scanner is picking up that the CloudDrive has a damaged file system. The drive is composed of 20 partitions, and Scanner has found damage in every single one of them. Just for my sanity, I deleted everything I had uploaded to the drive in the 3 days before the crash. I am not sure whether the drive uploads sequentially, though, i.e. whether those 3 days would cover the latest 500 GB I had added, or whether the drive was still trying to upload something I had moved a week earlier. I also suspect that deleting the corrupt files is not enough. Is there a way to fix the file systems?

On a more serious note, Scanner is now picking up damage to my SSD. It is showing an absurd 154,317 "Media and Data Integrity" issues, with this number steadily increasing, and it finds around 250 unreadable sectors. When I run the file recovery, all the files can be recovered. I replace them and rerun the scan, but it finds a different number of unreadable sectors (203) and completely different corrupt files. I ran the Intel SSD Toolbox: Quick Diagnostics reports 0 problems, and Full Diagnostic fails instantly on the Read Scan with a message saying to call Intel (the Data Integrity portion of the Full Diagnostic passes).

Now, I know that I put this SSD through a lot, but I am really hoping that I can repair it. It is fairly stable; there was only that one initial BSOD ("Memory Management") right after reinstalling Windows, and since then I think it is running fine. Could this scan possibly be related to the damage on the CloudDrive, since the CloudDrive is mounted on this SSD? Is the SSD pending failure? Is there a way to fix this as well? Sorry for the long message, but I am not very versed in this stuff, so I figured I would give as much detail as I could. The SSD is very new and very expensive, and an idiotic program might have ruined it. I'm really hoping there's a way to fix it that avoids having to reinstall Windows again (I just got everything set up again).

EDIT/UPDATE: After doing a low-level format of my SSD and a few extra steps per Intel's instructions, I was able to stabilize my cache drive. The problem was not the CloudDrive but an issue with the specific technology behind the Optane drive that was causing various files to become unreadable for short periods of time. Now it is stable, and by ignoring the Error Count in Scanner unless it increases further, everything seems to be going smoothly. I ran CHKDSK on each and every CloudDrive partition a few times using a batch file, and now everything seems stable there as well. I will run a file system scan to make sure it does not detect any more damage. I did lose data, but it was minuscule compared to how much was on the drive to begin with. Overall, it was a frustrating and stressful week, but I was able to get everything back to normal at minimal cost.
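The "batch file" step can be scripted for any number of partitions. A minimal Python sketch is below; the drive letters are hypothetical (this drive has 20 partitions, so list whatever letters they are mounted on), it must run from an elevated prompt, and `/f` needs exclusive access, so chkdsk may ask to dismount a partition that is in use. Per Microsoft's chkdsk documentation, an exit code of 0 means no errors were found. `chkdsk_commands` and `run_all` are made-up helper names.

```python
import subprocess


def chkdsk_commands(drive_letters: list[str], fix: bool = True) -> list[list[str]]:
    """Build one chkdsk command per partition, e.g. ['chkdsk', 'E:', '/f']."""
    cmds = []
    for letter in drive_letters:
        drive = letter.rstrip(":\\").upper() + ":"  # accept 'e', 'E:' or 'E:\\'
        cmd = ["chkdsk", drive]
        if fix:
            cmd.append("/f")
        cmds.append(cmd)
    return cmds


def run_all(drive_letters: list[str], fix: bool = True) -> dict[str, int]:
    """Run chkdsk on each partition in turn (Windows, elevated) and collect
    exit codes; 0 means no errors were found on that partition."""
    results = {}
    for cmd in chkdsk_commands(drive_letters, fix):
        completed = subprocess.run(cmd)
        results[cmd[1]] = completed.returncode
    return results
```

For example, `run_all(["E", "F", "G"])` would check three partitions one after another and return a dict of exit codes keyed by drive.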

-

I absolutely love DrivePool, Scanner, and CloudDrive, so I have downloaded the beta versions of each. One thing I do for peace of mind whenever I use beta software is to add the product's changelog/update feed to my RSS reader, so that I know whenever a new version is available and what it contains. Especially since I use these products so heavily, I would love it if there were similar feeds for each one. I had found some text files earlier, but they were hard to use this way. I know it's a bit of a specific use case, but if you do get some time to do it, it would be much appreciated!