All Activity

- Today

-

The 12 Gb/s throughput for the Dell controllers mentioned on the datasheet is the total per controller, so if you operated on multiple drives on the same controller simultaneously I'd expect it to be divided between the drives.

Having refreshed my memory on PCIe's fiddly details so I can hopefully get this right, there are also limits on total lanes direct to the CPU and via the mainboard; e.g. the first slot(s) may get all 16 lanes direct to the CPU(s) while the other slots may have to share a "bus" of 4 lanes through the chipset to the CPU. So even though you might have a whole bunch of individually x4, x8, x16 or whatever speed slots, everything after the first slot(s) is going to be sharing that bus to get anywhere else (note: the actual number of direct and bus lanes varies by platform).

So you'd have to compare read speeds and copy speeds from each slot and between slots, because copying from slotA\drive1 to slotA\drive2 might give a different result from slotA\drive1 to slotB\drive1, or from slotB\drive1 to slotC\drive1... and then do that all over again but with simultaneous transfers to see where, exactly, the physical bottlenecks are between everything.

As far as DrivePool goes, if C was your boot drive and D was your pool drive (with x2 real-time duplication) and that pool consisted of E and F, then you could see DrivePool's duplication overhead by getting a big test file and first copying it from C to D, then from C to E, then simultaneously from C to E and C to F. If the drives that make up your pool are spread across multiple slots, then you might(?) also see a speed difference between duplication within the drives on a slot and duplication across drives on separate slots. If you do, then consider whether it's worth it to you to use nested pools to take advantage of that.

P.S. Applications can have issues with read-striping, particularly some file hashing/verification tools, so personally I'd either leave that turned off or test extensively to be sure.
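The copy comparisons described above could be timed with something like the following. This is only a rough sketch: the function names are made up for illustration, and OS file caching can inflate the numbers, so use a test file much larger than RAM or repeat each run several times.

```python
import shutil
import time
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def timed_copy(src, dst):
    """Copy src to dst and return the throughput in MB/s (decimal megabytes)."""
    src, dst = Path(src), Path(dst)
    size = src.stat().st_size
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    elapsed = time.perf_counter() - start
    # Guard against a zero elapsed time on very small files.
    return size / 1_000_000 / max(elapsed, 1e-9)


def simultaneous_copies(pairs):
    """Run several (src, dst) copies at the same time; return each copy's MB/s."""
    with ThreadPoolExecutor(max_workers=len(pairs)) as pool:
        futures = [pool.submit(timed_copy, src, dst) for src, dst in pairs]
        return [future.result() for future in futures]
```

With the drive letters from the example above, you would call `timed_copy` for C to D, then C to E, then pass both the C to E and C to F pairs to `simultaneous_copies`, and compare the figures.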

-

Shane started following SMART errors and Writing zeros to free space in pool

-

If you mean Microsoft's cipher /w, it's safe in the sense that it won't harm a pool. However, it will not zero/random all of the free space in a pool that occupies multiple disks unless you run it on each of those disks directly rather than on the pool (cipher /w operates by writing to a temporary file until the target disk is full, and DrivePool will return "full" once that file fills the first disk to which it is being written in the pool). You might wish to try something like CyLog's FillDisk instead (it writes smaller and smaller files until the target is full), though, disclaimer, I have not extensively tested FillDisk with DrivePool (and in any case both programs may still leave very small amounts of free space unwritten on the target).
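To illustrate the "smaller and smaller files" idea, here is a minimal sketch. It is not FillDisk's actual code; the function name, file naming and default sizes are all assumptions. As noted above, it would need to be pointed at a folder on each underlying disk rather than at the pool.

```python
import os
import shutil
from pathlib import Path


def fill_free_space(target_dir, start_chunk=64 * 1024 * 1024, min_chunk=4096,
                    free_bytes=None):
    """Write zero-filled files into target_dir, halving the file size whenever
    the current size no longer fits, until even min_chunk does not fit.
    Returns the list of files written."""
    target = Path(target_dir)
    if free_bytes is None:
        # Default probe: free space on the volume that holds target_dir.
        free_bytes = lambda: shutil.disk_usage(target).free
    chunk = start_chunk
    written = []
    index = 0
    while chunk >= min_chunk:
        if free_bytes() < chunk:
            chunk //= 2          # too big to fit, try a smaller file
            continue
        path = target / f"zerofill_{index:06d}.tmp"
        index += 1
        try:
            with open(path, "wb") as fh:
                fh.write(b"\0" * chunk)
                fh.flush()
                os.fsync(fh.fileno())   # push the zeros to the disk
        except OSError:
            path.unlink(missing_ok=True)
            chunk //= 2          # the write failed (e.g. disk full), shrink and retry
            continue
        written.append(path)
    return written
```

Delete the returned files afterwards to get the free space back. Anything smaller than `min_chunk` is left unwritten, which matches the caveat that a small amount of free space may remain untouched.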

-

Depending on the error, it might have been one that the drive's own firmware was capable of (eventually) repairing?

-

Shane reacted to an answer to a question:

Drive question.


-

Is it safe to use the cipher /w command in Windows to write zeros/random data to the free space in a pool?

-

donwieber started following SMART errors

-

I have a Windows 11 PC with StableBit Scanner on it and also an UnRaid server with Linux as the OS. In UnRaid, I got a SMART error (forgive me, but I wrote down the error and then lost the sticky note I wrote it on) on a 3-year-old hard drive, a Seagate IronWolf 8TB NAS drive. My RAID was not compromised, but I replaced the drive anyway. I put the drive into another Linux computer I have and it showed the same error. Then I put it into my Windows 11 PC, let Scanner scan it and looked at the SMART results, and it showed that there were no errors on the drive at all. I am just wondering why Linux would show an error but Scanner did not???

- Yesterday

-

I heard back from Gillware. They want $1200 AND another drive that's at least 20 TB. And they can only "guarantee" that they'll be able to recover around 80% of the disk. JFC. It's like they think the drive has been at the bottom of the sea for the past year. I was thinking $400 tops. I may never again go anywhere near diskpart. If I had known things were going to be this expensive, I would've tried using something like EaseUS first, on my own. Ughughughughughugh.

-

The thing is that the drive dropouts occurred seemingly at random after the Sabrent had been powered on for a while and the drives only reappeared after a reboot. So, something was going awry while the disks were in their steady-state. The disks do get power simultaneously...sort of. The Sabrent is a bit odd in that, after a cold boot, each drive bay has to be turned on individually by hand. There is a master power switch on the back, but it still won't power anything until you hit that specific bay's power button and only AFTER it senses USB power, which a lot of people have complained about. However, the bays will continue to be powered after warm reboots. I have no idea if it employs any smart power-up strategies, though since it's just a DAS unit at the end of the day, I sincerely doubt it. The disks I'm using are Seagate Exos and a smattering of shucked white-label Western Digitals. I'm out of the shucking game, so when the WDs go, I'll replace them with true OEMs.

-

inedibleoatmeal joined the community

-

Do the disks all power up at the same time? During spin up, the peak power consumption of a disk can be 2 to 3 times the power consumed in the steady-state condition (when the disk is spinning). The ancient SCSI bus had a facility for delaying the spin up of each disk on the bus by an amount proportional to the disk's SCSI id, thereby reducing the peak power required during system power up. Does your Sabrent enclosure do that? Hopefully the specs for each of the disks in your Sabrent will specify the peak power, as well as the steady-state power. -- from CyberSimian in the UK

-

Hi Shane, thanks for your observations, most useful.

The dual-CPU Dell T7910 has four usable full-fat PCIe x16 Gen 3 slots, and before the changes I made yesterday I had three official Dell NVME card holders in them as follows:

* 2 x Quad NVME (4 SSDs in each)
* 1 x Dual NVME (2 SSDs)

With that configuration I swear I was seeing speeds more like the 1.2GB/s (and above) as I tested and moved things around while getting ready to swap components to be better arranged and add in an 'ASUS Hyper M.2 X16 Card V2' in place of the dual Dell one so that I could utilise its 4 NVME slots (for 12 NVMEs in total instead of 10). At that point I hadn't tried DrivePool duplication; I only turned on pool duplication after adding the new card, but I get what you say about DP writing in parallel so it shouldn't slow writes/copying down. All the NVME cards support bifurcation so they fully allow independent 4 x 4 lanes for the x16 slots (which are all electrically x16).

I have done some more testing today between drives to and from the two pools that I now have. One pool is made up of 4 x 1TB drives (different brands but all on one of the Dell quad cards); that arrangement was also my initial configuration yesterday where I saw the 1.2GB/s speeds, so no difference there. The other (new) pool is made up of two identical 2TB drives (WD SN550 Blue) on the other Dell quad card, which also contains two more identical 2TB drives.

My tests involved a 60GB folder containing 18 large files ranging from 1.41GB to 8.2GB. I've done repeat tests copying to and from the non-pool and pooled drives, and all I can really observe is that the approx 675MB/s copying to either of the pools is consistent, whereas from the pools to a non-pooled SSD that speed increases to about 1GB/s, with one test seeing it as high as 1.32GB/s. Copying between the pools I saw an approx 10% speed increase. I believe these increases (copying from a pool to a non-pooled drive) are because of DrivePool's Read Striping optimising how it pulls the data, so that is to be expected.

My main system (C) and some other drives are on the ASUS card, and that NVME card has nothing to do with the pools at the moment, so I'm sort of ruling that NVME card out as the cause of the slowdown. I guess the only way I can really fathom this out is to try swapping cards/drives around again and trying different arrangements, but that's a bit of a chore to try right now.

Unlike copying the test 60GB folder to a Samsung 980 I have as my main system drive, I saw no significant increase or decrease in copying speeds for the duration of the test in the pooled WD SN550s or the disparate bunch of 1TB pooled drives. On the Samsung 980 I saw a massive spike in performance at first, up to 2GB/s (due to buffers I guess), but that dropped to around 200MB/s for most of the test, averaging 407MB/s in all. My copy speeds and timings are as reported by Directory Opus's (Dopus) copy process and are shown similarly in DrivePool.

The 12Gb/s of throughput that the Dell datasheet quotes equates to 1.5GB/s, so that does seem to indicate that my rough average of yesterday of 1.2GB/s (or variably above) before changing things was possible; I just wonder why speeds have now roughly halved?

I have not tweaked any of the settings in DrivePool; everything is as default, except I did activate 'Bypass File System Filters' before all these changes and tests because I do have Bitdefender Internet Security running and I read in the manual that AV scanners can double/triple/etc scanning times (because of the duplication).

Any more thoughts before I pull everything apart again for more tests? I haven't got around to checking the BIOS yet, but I can't recall anything in there that might help; the Dell BIOS doesn't have much in there to tweak, and in any case things were faster yesterday so it's still a puzzle.
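For reference, the bits-to-bytes conversion used above can be checked with a few lines. This is only a worked example of the arithmetic, using the speeds quoted in this post; the function name is made up.

```python
def gbit_to_gbyte(gigabits_per_second):
    """Convert Gb/s to GB/s (8 bits per byte, decimal prefixes)."""
    return gigabits_per_second / 8


controller_limit = gbit_to_gbyte(12)  # the datasheet's 12 Gb/s figure

# Observed copy speeds from this post, in GB/s.
for label, observed in [("yesterday", 1.2), ("to either pool today", 0.675)]:
    share = observed / controller_limit
    print(f"{label}: {observed} GB/s is {share:.0%} of {controller_limit} GB/s")
```

So the earlier 1.2GB/s was already most of the way to the quoted limit, while the current 675MB/s is under half of it.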

-

Shane started following Is speed of copying to a pool reduced with pool duplication set to on?

-

Real-time duplication xN writes to N drives in parallel, so while there's a little overhead I can only imagine that seeing the speed effectively halve like you're describing would have to be because of a bottleneck between the source and destination. Note the total speed you're getting is roughly what the 7910's integrated/optional storage controllers can manage, 12Gb/s per Dell's datasheet, and that while any given slot may be mechanically x16 it may be electrically less, and in any case it will not run any faster than the xN of the card plugged into it (so a x1 card will still run at x1 even in a x16 slot). So I'm suspecting something like that being the issue; what's the model of the NVME cards you've installed?

EDIT: It may also be possible to adjust the PCIe bandwidth in your BIOS/UEFI, if it supports that.
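The "slot versus card" rule can be put into numbers. The sketch below assumes PCIe Gen 3 signalling (8 GT/s per lane with 128b/130b encoding) and gives theoretical maxima per direction, before protocol overhead; the function names are illustrative only.

```python
# PCIe Gen 3: 8 GT/s per lane, 128b/130b encoding, 8 bits per byte.
GEN3_GBYTES_PER_LANE = 8 * (128 / 130) / 8  # about 0.985 GB/s per lane


def negotiated_lanes(slot_electrical_lanes, card_lanes):
    """A link runs at the narrower of what the slot wires up and what the card uses."""
    return min(slot_electrical_lanes, card_lanes)


def link_bandwidth_gbytes(slot_electrical_lanes, card_lanes):
    """Theoretical Gen 3 bandwidth in GB/s for the negotiated link width."""
    return negotiated_lanes(slot_electrical_lanes, card_lanes) * GEN3_GBYTES_PER_LANE


print(negotiated_lanes(16, 1))                 # a x1 card in a x16 slot runs at x1
print(round(link_bandwidth_gbytes(16, 4), 2))  # one x4 NVMe link, in GB/s
```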

-

Shane reacted to an answer to a question:

Drive question.


-

Hi, firstly thanks for a great set of StableBit programs, nicely designed and explained. I've just started using DrivePool properly after buying the bundle two years ago and all is working nicely with two NVME 4TB pools set up so far (I'm putting a lot of trust into DrivePool as I move all my data around finally to sort it out once and for all!).

My question relates to whether the speed of copying files to a pool is reduced due to having to copy things twice (for duplication)? I ask because I just added another 4-way NVME PCIe card and at the same time also turned on pool duplication to secure my data better. However, I noticed that my copying speeds seem to have roughly halved from an NVME drive (outside the pool) to a pool made up of NVME drives. Before setting duplication I was seeing copying speeds roughly averaging 1.2GB/s to that pool; now it's more like 600MB/s (with 2 x duplication now on).

So my thought is, either the duplication makes initial copying to the pool take twice as long, or maybe it's something to do with adding the new NVME card which may have slowed things down? (I did a search on the forum and manual but didn't spot anything that may help answer my question.) My system should cope with the extra card; it's got enough PCIe lanes and x16 PCIe slots (a Dell Precision T7910, 2 x Xeon, 128GB RAM, Win 10).

Would be grateful for your thoughts (anyone).

-

MrArtist joined the community

-

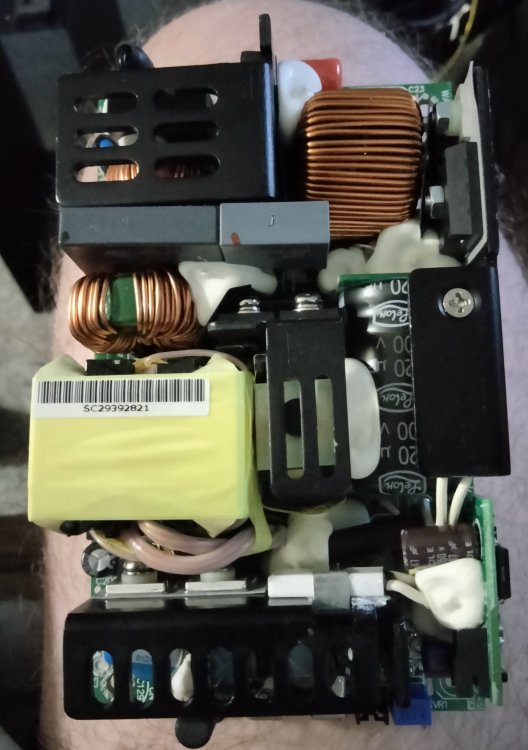

I'm still restoring the pool, which'll take another couple of days (no word yet from Gillware), but I got off my duff and took the Sabrent apart. I have to say that it's really impressive on the inside, with almost everything some manner of metal. Only the front doors and a handful of other parts are plastic. Unpopulated, Sabrent says that it weighs 17.8 lbs, so it would definitely kill you if someone dropped it on your head, even drive-less.

The PSU is a very cute 3.5" x 5" x 1.25" device that should be up to the task since it only has to have enough oomph for 10 drives along with the two 120mm "Runda" RS1225L 12L fans. As you can see from the label, it's a 399.6W "Mean Well" EPP-400-12 unit when cooled adequately. Since a normal 3.5" drive only consumes around 9.5W at load, that's only 95W needed for the drives, with the rest for the fans and the supporting PCB. So, assuming the PSU isn't outright lying, I don't think that's the issue.

I checked mine for obvious problems, like expanding or leaking caps, but didn't see anything. The caps themselves look to be of pretty decent quality and the bottom circuit board appears clean. The unit has what looks to be a rheostat, but I have no idea what it controls. If I had to take a very wild guess, I would say there's something amiss with the backplane, as that seems to be a common source of consternation with low(er)-grade server-style boxes, like the Norco. It is interesting that I only started seeing drive dropouts once I had populated all ten bays, so maybe it works fine with only 8 or 9 bays running.

So far, the two Terras are running just fine. I'm praying to all sorts of gods that it remains so.
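The power arithmetic above can be extended to spin-up. The sketch below uses the 9.5W per drive and 399.6W PSU figures from this post, and assumes the "2 to 3 times steady-state" spin-up peak mentioned elsewhere in the thread; fan and PCB draw are not included because no figures were given for them.

```python
def drive_power_budget(drive_count, steady_watts_per_drive, spinup_multiplier):
    """Return (steady_state_watts, spinup_peak_watts) for all drives together."""
    steady = drive_count * steady_watts_per_drive
    peak = steady * spinup_multiplier
    return steady, peak


PSU_WATTS = 399.6  # rating read from the PSU label

for multiplier in (2, 3):  # assumed spin-up peak relative to steady state
    steady, peak = drive_power_budget(10, 9.5, multiplier)
    print(f"x{multiplier}: steady {steady:.1f} W, spin-up peak {peak:.1f} W, "
          f"headroom {PSU_WATTS - peak:.1f} W")
```

Even at the pessimistic multiplier, ten drives spinning up together stay under the rated output on paper, which is consistent with the PSU not being the obvious culprit.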

- Last week

-

Hi

Thanks for the answer! ECC RAM - good idea - will make sure to use it. The current installation will be Windows (later down the road I might switch to Linux and ZFS or similar).

For now I will go with ECC RAM + DrivePool + StableBit Scanner + SnapRAID/md5deep (I think I will start with trying this one: https://github.com/marcopaganini/bitrot + a bit of scripting to have it run weekly on all drives).

Updated DrivePool feature wish:

* at every write, calculate and write a checksum
* have a check/scan command that can be run daily/weekly/monthly, uses the checksums and verifies data integrity

/Andreas

-

Shane started following silent bit rot protection

-

Hi Andreas,

> My question is - how do I protect against silent bitrot

The two main risks for silent bitrot are "while in RAM" and "sitting on disk". For the former, ECC RAM for your PC (and making sure your PC will actually support and use ECC RAM in the first place). For the latter, you can rely on hardware (e.g. RAID 5/6 cards, NAS boxes like Synology) and/or software (e.g. for Windows, md5deep for checking, SnapRAID or PAR2/MultiPar for checking and repairs; for Linux there are also better filesystems like ZFS or bcachefs, or Linux-based operating systems like Unraid which simplify the use of those filesystems, etc).

For use with StableBit DrivePool, my personal recommendation would be ECC RAM + StableBit Scanner + either (SnapRAID) or (md5deep + optionally PAR2/MultiPar). And if you have very large pools consider whether to use hardware RAID 5/6 instead of SnapRAID et al. Plus, of course, backups. Backups should go without saying.

> Question does drivepool secure against "silent bitrot"

Not actively. Duplication may "protect" against bitrot in the sense that you can compare the duplicates yourself - with x2 duplication you would need to eyeball inspect or rely on checksums you'd made with other tools, while with x3 duplication or more you could also decide the bad one based on the "odd one out" - but DrivePool does not have any functions that actively assist with this.

> Question: Does diskpool have a "diskpool check" command that checks all files (all duplicates) and detects corrupted files?

It does not.

> If not - sounds like a great value-add feature - ex everytime diskpool writes a file it also calculates and stores a checksum of the file

I would like to see a feature along those lines too.

> * option to enable: read verification: everytime diskpool reads a file - verifies that read file checksum is as expected

You'd see a very noticeable performance hit; regular (e.g. monthly) background scans would I think be better.
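As a rough idea of what the checksum-based checking mentioned above involves (this is not how md5deep or SnapRAID are implemented, and the function names are made up), a manifest of hashes can be built once and re-verified on a schedule:

```python
import hashlib
from pathlib import Path


def sha256_of(path, block_size=1024 * 1024):
    """Hash a file in blocks so large files are not loaded into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file's path (relative to root) to its SHA-256 hash."""
    root = Path(root)
    return {str(p.relative_to(root)): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify_manifest(root, manifest):
    """Return the relative paths that are missing or whose hash has changed."""
    root = Path(root)
    problems = []
    for rel, expected in manifest.items():
        path = root / rel
        if not path.is_file() or sha256_of(path) != expected:
            problems.append(rel)
    return problems
```

A changed hash only says the content differs from when the manifest was built; a deliberate edit looks the same as bitrot, so the manifest has to be refreshed whenever files are intentionally modified. Detection alone also does not repair anything, which is where duplicates, parity or backups come in.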

-

Andreas W joined the community

-

Hi

I am starting to build out my home storage system and learning as I go. Below is my current thinking/question.

I will use drivepool with all pool files duplicated. I will have additional backups (cloud and local). I feel OK against drive failures, against the entire system failing, etc.

My question is - how do I protect against silent bitrot

I know drivepool via the integrated scanner will find blocks that are bad. But how do I protect against a silent bit flip? I know it's very rare. But still - it would be nice to know how to protect against it.

Question does drivepool secure against "silent bitrot"

Let's say a file fileA is stored in diskpool and duplicated on two drives, and on one of the drives a bit flips, changing value from say 1 to 0. At this point in time I will have

* disk1: fileA (correct file)
* disk2: fileA (corrupt file)

Question: Does diskpool have a "diskpool check" command that checks all files (all duplicates) and detects corrupted files?

Question: Next read of fileA - will it be the fileA from disk1 or from disk2? or?

Question: Will diskpool detect that fileA is now different on disk1 vs disk2?

Essentially

* will diskpool detect an underlying bitrot like this?
* will it, in addition to detecting it, be able to fix it?

If not - sounds like a great value-add feature - ex everytime diskpool writes a file it also calculates and stores a checksum of the file

* option to enable: write verification: after write - read both duplicates and verify checksum

diskpool check command

* reads all files (all duplicates) and verifies that checksum is as expected

diskpool file read

* option to enable: read verification: everytime diskpool reads a file - verifies that read file checksum is as expected
* option to enable: read verification all duplicated: everytime diskpool reads a file - reads all duplicates and verifies that read files checksum are as expected

/Andreas
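The disk1/disk2 scenario above can at least be detected by hand by hashing each copy of the file on the underlying drives. A minimal sketch follows; it is not a DrivePool feature, the function names are hypothetical, and with only two copies it can say that they differ but not which one is bad.

```python
import hashlib
from collections import Counter


def file_hash(path, block_size=1024 * 1024):
    """SHA-256 of a file, read in blocks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def odd_ones_out(copies):
    """Compare copies of the same file; return (suspect_paths, verdict)."""
    hashes = {path: file_hash(path) for path in copies}
    counts = Counter(hashes.values())
    if len(counts) == 1:
        return [], "all copies match"
    top_hash, top_count = counts.most_common(1)[0]
    if top_count * 2 <= len(hashes):
        # No strict majority (always the case with two differing copies).
        return list(hashes), "copies differ, no majority"
    suspects = [path for path, h in hashes.items() if h != top_hash]
    return suspects, "minority copies differ from the majority"
```

With three or more copies a strict majority can point at the likely bad copy; with two, a separately stored checksum or a backup is needed to decide.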

-

Patrik_Mrkva reacted to an answer to a question:

Google drive not supported after may 2024? Why?


-

I would just like to say that this is fairly disappointing to hear. Though they added limits, a lot of people are still able to work within those limits. And the corruption issue hasn't been present for many years as far as I can tell -- the article in the announcement by Alex is from April 2019, so over 5 years ago. I get the desire to focus resources though, and I'm glad it still works in the background for now. Hopefully there are no breaking changes from Google, and I hope an individual API key is going to have reasonable limits for the drive to continue working. But yeah, switching providers is not very realistic in my case, so I guess I'll use it until it just breaks.

-

hello

yes, easy peasy. get all your data straight and organized within DrivePool. this means remeasuring/rebalancing/whatever so that you have the solid green bar at the bottom of the DP GUI. the point of this is to mitigate any inconsistency issues that could 'migrate' to unRAID. i have read that unRAID has its own peculiar issues, so i would also take note/screenshots of my DrivePool settings. you never know... and better safe than sorry.

you can 'reverse' this process: http://wiki.covecube.com/StableBit_DrivePool_Q4142489

so, basically just show hidden files and folders, Stop DP service, and navigate to the hidden PoolPart.xxxx folders on each of the underlying drives comprising your DrivePool. select all folders/files in each PoolPart folder, right-click and CUT (do not select copy), and go back up and PASTE into the root of each drive. do this for each pooled drive.

when done, reopen DP GUI and go to Manage Pool > Remeasure > Remeasure the pool. this should happen very quickly since all your previous data is now absent from the pool and it will all show as 'other.' this will also help ensure pain-free drive removal. REMOVE each drive from the pool (leave all pop-up removal options UNCHECKED); this should also happen very quickly. Voila, you are done. i would reboot here. delete the now empty unused PoolPart folders too.

a defrag of all drives concerned can only speed up any upcoming tasks with unRAID. i have never used SnapRAID or unRAID, but i imagine you could now delete/format/diskpart whatever drives you were using as parity for SnapRAID and get busy setting up unRAID.

hope this helps
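if you would rather script the cut-and-paste step than do it by hand, something like this could work. it is only a sketch with made-up function names, it assumes the DrivePool service is already stopped, and it defaults to a dry run that just lists what would be moved.

```python
import shutil
from pathlib import Path


def plan_unpool(drive_root):
    """List (source, destination) moves from PoolPart.* folders to the drive root."""
    root = Path(drive_root)
    moves = []
    for poolpart in sorted(root.glob("PoolPart.*")):
        if poolpart.is_dir():
            for item in sorted(poolpart.iterdir()):
                moves.append((item, root / item.name))
    return moves


def unpool_drive(drive_root, dry_run=True):
    """Move the contents of each PoolPart.* folder up to the drive root.
    Refuses to do anything if a destination name is already taken."""
    moves = plan_unpool(drive_root)
    destinations = [dst for _, dst in moves]
    clashes = [dst for dst in destinations if dst.exists()]
    if clashes or len(set(destinations)) != len(destinations):
        raise FileExistsError(f"name clash at drive root, sort out by hand: {clashes}")
    if not dry_run:
        for src, dst in moves:
            # On the same volume this is a rename, so it is fast (like cut/paste).
            shutil.move(str(src), str(dst))
    return moves
```

run it once per pooled drive with `dry_run=True`, check the list, then run it again with `dry_run=False`. the empty PoolPart folders are left in place for you to delete after removing the drives from the pool.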

-

Hey guys, Drivepool has served me well for over 10 years. Unfortunately, I'm moving over to Unraid. Nothing to do with Drivepool itself; it works fantastic. I just can't get Snapraid to work in conjunction with it, and I need that extra layer of backup. So my question is, I do need to evacuate off of the Drivepool one drive at a time, and I don't have enough room to empty a drive, remove it, and then go to the next drive. I was wondering if it was possible to move files directly off of a particular drive's poolpart folder instead? Will it cause Drivepool to freak out in any way?

-

SaintTDI changed their profile photo

-

tylerdotdo joined the community

-

Shane reacted to an answer to a question:

Google drive not supported after may 2024? Why?


-

Martixingwei is correct, you would need to download that entire clouddrive folder from your google drive to local storage and run the converter tool.

-

Shane reacted to an answer to a question:

Drive question.


-

Google drive not supported after may 2024? Why?

Martixingwei replied to warham28's question in Providers

I believe currently the only way is to download everything to local storage and use the converter tool that comes with CloudDrive to convert it to another mountable format.

Let's say.....Google does change something with the API and my Google Drive is no longer mountable using the StableBit Application(s), is there a way to decrypt the Chunk of Data files in the actual Google Drive folder "Stablebit Cloud Drive" if I use a different program like rclone or Google Drive Desktop for Windows?

-

Oh, hrrmmm, interesting. It looks like FreeFileSync did the job, but its UI looks like it was done by a madman, so I might try Robocopy next time. That fileclasses link is EXTREMELY helpful. A bit more on the pool reconstruction front. I really needed more than six drive bays to work with, so I bloodied a credit card and bought another Terra. FFS mirrored everything on the old pool to the three new Seagates seemingly fine. Oh, but before that, the Seagates long formatted successfully, so I'm considering them good to go. I'm currently long formatting three of the old pool drives, which'll take another 24 hours. Once that's done, I'll fire-up the second Terra with old pool drives and copy everything over from them to the drives that I'm in the process of long formatting (presuming they pass). Gillware has the drive. I stressed to my contact that the drive should be fine electronically and mechanically, so they shouldn't have to take it apart. I'm HOPING this will lower the cost of the recovery substantially. You would think restoring everything from a simple diskpart clean should be a cakewalk for a professional recovery service, but we'll see. Incidentally, I was looking over Terramaster's line of products and they are all-in on NAS devices, with their flagship product supporting 24 drives. I wish they would offer a 10- or even 8-bay DAS box, but then you're back to needing a beefy PSU. I still intend to take apart the Sabrent assuming it's not a nightmare to do so. That's currently all the news that's fit to print. More to come!

- Earlier

-

DrewDunn changed their profile photo

-

iurirolho changed their profile photo

-

Alex He changed their profile photo

-

Wonderful, thanx Drashna, and yes, I've learned and already have locations/IDs in the volume names 😄.

-

Christopher (Drashna) started following Transferring pool to new system

-

For the mount points, at any time that the drives are connected to the new system. Personally, I would recommend changing the volume labels for the drives to match their locations/IDs, so that it's easier to identify the drives. Also, if you're using StableBit Cloud, it should sync the settings for the pool in StableBit DrivePool, and should sync the scan history and settings for StableBit Scanner, on the new system.

-

IanT started following Transferring pool to new system

-

So piggy-backing on the original request, I'd like to do something very similar, but there's an added complication. On my existing system I have a pool consisting of 10 drives, each of which is mounted in a folder on the C drive. I'm comfortable with Shane's method above, but unsure where in the procedure I should handle the creation of mount points on the new system. Any ideas Shane?? Thanx in advance.