Posts: 48

Days Won: 1

Everything posted by JulesTop

-

Another question: does anyone know of another provider that is comparable to or better than gdrive in terms of costs and quotas?

-

I'm going through the process now. It started at 6:45 this morning and is currently at 2.62% complete... I think this will take some time. But I don't think it actually downloads/re-uploads the chunks, it just moves them. I have 55TB in Google Drive, BTW. Also, as far as I can tell, the drive is unusable during the process, and there is no way to pause the process to access the drive content. However, it will be able to resume from where it left off after a shutdown. Having said all that, I have to say thanks to @Alex and @Christopher (Drashna) for mobilizing quickly on this, before it becomes a really serious problem. At least right now, we can migrate on our own schedule, and until then there is a workaround with the default API keys.
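For a rough idea of "some time", here is a minimal sketch of a linear extrapolation from the progress percentage. It assumes the migration proceeds at a constant rate, which may not hold. The function name and the 6-hour elapsed figure are made up for illustration; only the 2.62% comes from the post above.

```python
def estimate_remaining_hours(elapsed_hours: float, percent_complete: float) -> float:
    """Linearly extrapolate the remaining time from progress so far.

    Assumes a constant rate of progress (an assumption, not something
    the software reports).
    """
    if not 0 < percent_complete <= 100:
        raise ValueError("percent_complete must be in (0, 100]")
    total_hours = elapsed_hours / (percent_complete / 100)
    return total_hours - elapsed_hours


# Hypothetical: 6 hours elapsed at 2.62% complete.
remaining = estimate_remaining_hours(6, 2.62)
print(f"about {remaining / 24:.1f} days remaining")
```

Plug in your own elapsed time and percentage. The estimate is only as good as the constant-rate assumption.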

-

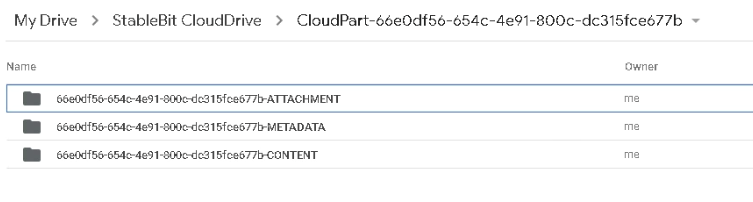

You called it. I just tried uploading a small file to the content folder directly from the Google Drive web interface and got an 'upload failure (38)', same as before. It looks like this restriction rollout is definitely happening. It would be fantastic if @Alex has a solution coming soon! But it's also good that the default keys are keeping us going for now.

-

I was on the latest stable (I think the last 4 digits were 1249) when this occurred. After a couple of days, I updated to the latest beta to try to solve it. Also, I realized that at some point I had removed my personal gdrive API credentials from the config file, and this was about the time the issue resolved. I just put back my personal API credentials and re-authorized, and the issue came back. I switched back yet again to 'null' (the default StableBit API credentials) and the issue went away again... I wonder if there are higher API limits with the StableBit credentials. Maybe @Christopher (Drashna) can shed some light.

-

So the issue has been "fixed". There must be a bug or something, but I'm not sure if it's on Google's side or StableBit's. I deleted the file that I figured it was stuck trying to upload. The file I deleted was about 20GB, and there was over 65GB in the queue to upload. As soon as I deleted the 20GB file from my computer (which was on the clouddrive), my upload queue seemed to just resume. It didn't drop by 20GB... It just resumed. Anyway, everything seems to be totally back to normal.

-

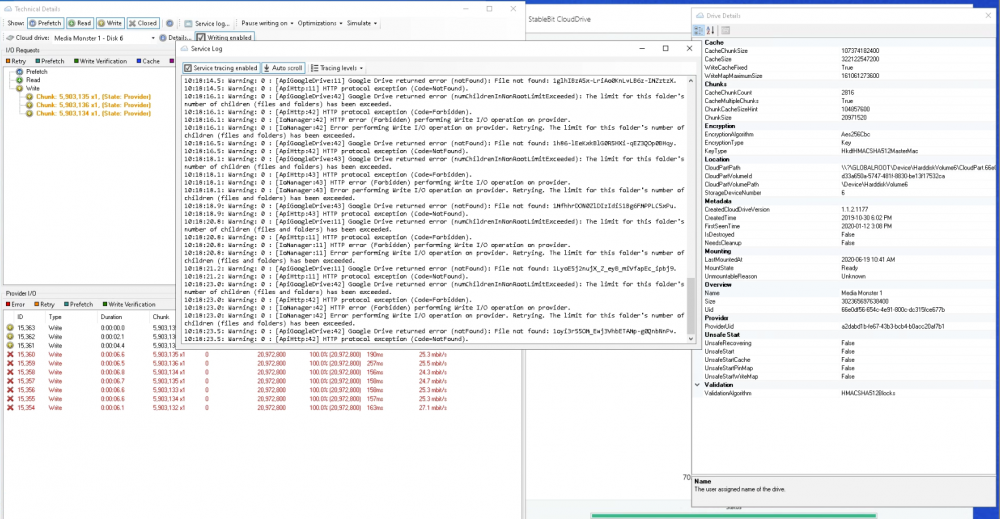

I can download from the gdrive without issue. I can also upload to the root of the gdrive manually without a problem. When I tried adding a file to the clouddrive content folder manually (via the gdrive web interface), it failed. It looks like there is an issue with the content folder... Maybe @Alex or @Christopher (Drashna) can help.

-

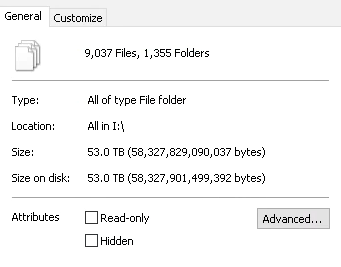

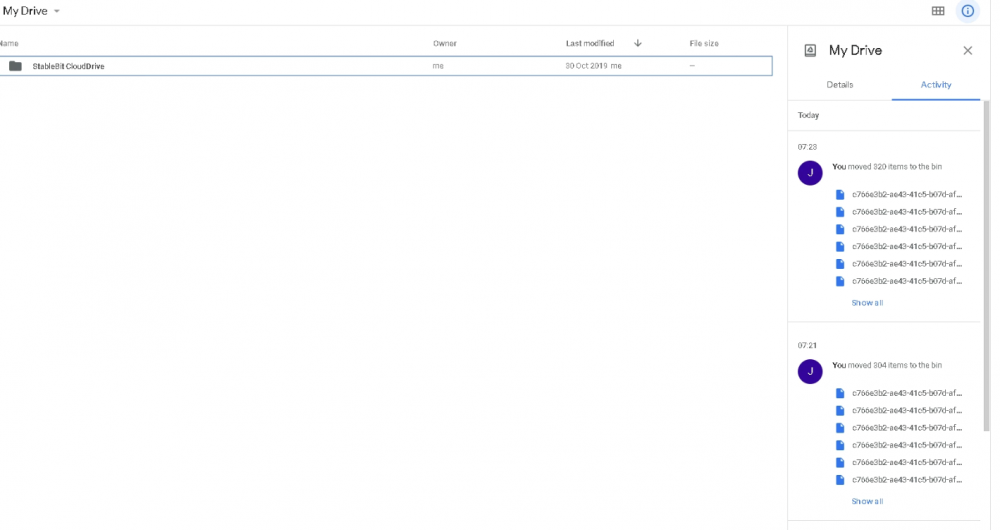

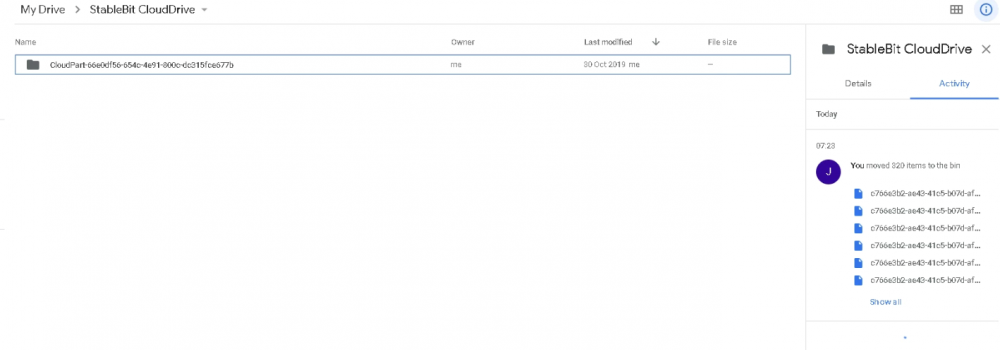

My Windows Recycle Bin? I went there and it is empty. Just in case, I changed the settings for my cloud drive so that files are deleted permanently instead of being stored in the Recycle Bin. I also went to my actual Google Drive web interface, but the bin there is empty too. I'm still having the issue... It's weird, I deleted a bunch of files and still have the issue. I have it set up with 20MB chunks... So my drive would likely have to be humongous to reach the file limit.
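To put a number on "humongous", here is a quick back-of-the-envelope sketch. The limit of 500,000 files per folder is an assumption for illustration, since the actual limit isn't stated in this thread. It also assumes decimal units and that every chunk is a full 20MB.

```python
MB = 10**6
TB = 10**12

# Assumption for illustration only: the real per-folder limit is not
# stated in this thread.
ASSUMED_FOLDER_LIMIT = 500_000


def chunk_count(stored_bytes: int, chunk_bytes: int) -> int:
    """Number of chunk files needed to hold stored_bytes (rounded up)."""
    return -(-stored_bytes // chunk_bytes)


def max_stored_bytes(file_limit: int, chunk_bytes: int) -> int:
    """Most data that fits before the folder hits file_limit chunks."""
    return file_limit * chunk_bytes


chunk_size = 20 * MB
limit_bytes = max_stored_bytes(ASSUMED_FOLDER_LIMIT, chunk_size)
print(f"{limit_bytes / TB:.1f} TB")  # 10.0 TB under the assumed limit
```

Under those assumptions the folder would fill up at about 10TB of uploaded data, so whether the limit is out of reach depends on how much is actually stored.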

-

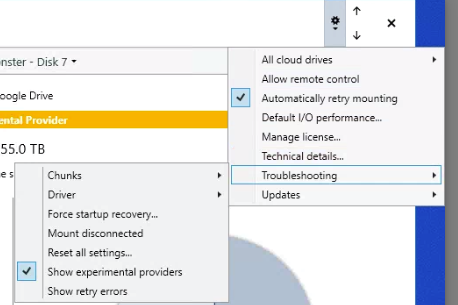

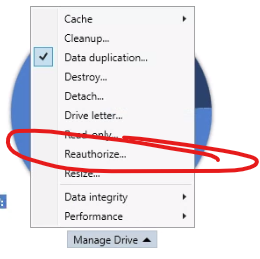

To reauthorize, you shouldn't have to look at the providers list. That said, it's not in the list because you have not enabled 'experimental providers' (see the screenshots). To reauthorize, you need to click on 'manage drive', as shown in the screenshots.

-

Hi @Historybuff, The wiki is at the following link. http://wiki.covecube.com/StableBit_CloudDrive_Q7941147 I did this a while ago, so I don't remember if there are extra steps, but it's all free and works great. Once you change the key in the json file, you just need to re-authorize the clouddrive you want to use that key.

-

Those are all awesome ideas!

-

I also just reverted to 1145. I'm sure Alex is working on it. Looks like a lot of features were added. I especially liked being able to duplicate pinned data only on existing pools... it gives them a layer of protection while I re-upload the whole drive to a fully duplicated cloud drive. I was having issues with uploading and downloading in 1165. I never noticed it when accessing the files, but there were always critical errors in the dashboard... Also, my uploads kept jumping around in speed instead of holding steady at 70Mbps... 1145 it is until the team addresses some of the issues. Thanks @Christopher (Drashna) for the tip on reverting to 1145!!