darkly

Members | Posts: 86 | Days Won: 2

Everything posted by darkly

-

Most changelogs don't go in depth into the inner workings of added functionality. They only note that the functionality now exists, or reference some bug that has been fixed. Expecting the CloudDrive changelog to explain in detail how duplication works feels about the same as expecting Plex server changelogs to detail how temporary files created during transcoding are handled. When your cache is low on space, you first get a warning that writes are being throttled. At some point you get warnings that CD is having difficulty reading data from the provider (I don't remember the exact order in which things happen or the exact wording, and I really don't care to trigger the issue to find out). That warning mentions that if the problem continues, it could cause further problems (data loss, the drive disconnecting, or something like that). I'm 99.99% sure CD does not read data directly from the cloud: if the cache is full, it cannot download the data to read it. I've had this happen multiple times on drives that were otherwise stable, and unless there's some cosmic-level coincidence happening, there's no way I'm misattributing the issue when it occurs every time the cache drive fills up and I accidentally leave it in that state for longer than I intended (such as leaving a large file transfer unattended). Considering CloudDrive itself warns you that it's having trouble reading data when the cache stays saturated for an extended period, I don't think I'm wrong. Anyway, I've personally watched my writes get throttled while my cache drive still kept filling up (albeit much more slowly) until it had effectively no free space at all, except whenever CD managed to offload another chunk to the cloud (before the drive ultimately failed anyway because it couldn't fetch data from the cloud fast enough).
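The fill behaviour described above comes down to arithmetic: throttling lowers the rate at which writes enter the cache, but if that throttled rate is still higher than the rate at which chunks leave for the cloud, free space keeps shrinking, only more slowly. The sketch below is a back-of-the-envelope model under that assumption, not how CloudDrive actually schedules throttling; the function name and the example rates are hypothetical.

```python
from typing import Optional


def hours_until_cache_full(free_gb: float, ingest_mbps: float, upload_mbps: float) -> Optional[float]:
    """Estimate how long until the cache has no free space left.

    free_gb     -- free space remaining on the cache drive (1 GB = 8000 megabits)
    ingest_mbps -- rate at which (possibly throttled) writes land in the cache
    upload_mbps -- rate at which cached chunks are uploaded and can be evicted

    Returns None when uploads keep up, i.e. the cache never fills.
    """
    net_fill_mbps = ingest_mbps - upload_mbps
    if net_fill_mbps <= 0:
        return None
    return free_gb * 8000 / net_fill_mbps / 3600


# Hypothetical numbers: 50 GB free, writes throttled to 300 Mbps, uploads at 200 Mbps.
print(hours_until_cache_full(50, 300, 200))  # about 1.11 hours
# Once a daily upload cap is hit, upload_mbps is effectively 0, so even slow writes fill the cache.
print(hours_until_cache_full(50, 50, 0))
```

In other words, throttling only buys time unless it brings the write rate below the upload rate.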
I've had this happen multiple times on multiple devices, and the drives never have any other problems until I carelessly leave a large transfer running that saturates my cache. I've had multiple CloudDrives mounted on the same system, using the same cache drive, with one drive sitting passively with no reads or writes and the other drive running a large transfer I accidentally left going. Sure enough, the busy drive dismounts and/or loses data, while the passive drive remains in peak condition with no issues whatsoever when I check it afterwards. EDIT: I'm 90% sure the error in this post is the one I was seeing (with different wording for the provider and drives, of course):

-

oh THAT'S where it is. I rarely think of the changelog as a place for technical documentation of features . . . Thanks for pointing me to that anyway. This has definitely not been my experience, except in the short term. Writes do get throttled for a while, but I've found that extended operation under this throttling can cause SERIOUS issues, as CloudDrive tries to read chunks and can't because the cache is full. I've lost entire drives to this.

-

I fully understand that the two functions are technically different, but my point is that, functionally, both can affect my data at a large scale. My question is about what the actual step-by-step result of enabling these features on an existing data set would be, not what the end result is intended to be (as it might never get there). An existing large dataset with a cache drive nowhere near its size is the key point of my scenarios. What (down to EVERY key step) happens from the MOMENT I enable duplication in CloudDrive? All of my prior experience with CloudDrive suggests that my 40TB of data would start being downloaded, read, duplicated, and re-uploaded, and that this would max out my cache drive, causing the CloudDrive to fail and either become corrupted or dismount, if hitting the 750GB daily upload cap doesn't do so first. As I stated in my first comment, there is NO documentation about how existing data is handled when features like this, which affect things at a large scope, are enabled. That's really concerning when you're familiar with the kinds of problems you can run into with CloudDrive if you're not careful with things like the upload cap, free space on the cache, and R/W bottlenecks on the cache drive. As someone who has lost many terabytes of data to this, I am understandably reluctant to touch a feature like this, which could actually help me in the long run, because I don't know what it does NOW.
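To put the 750GB daily upload cap in perspective: if enabling duplication really did mean uploading a full second copy of the existing data (that is exactly the open question here, so treat it as an assumption), the upload alone would take weeks. A minimal sketch of that arithmetic, using decimal units (1 TB = 1000 GB) and a hypothetical helper name:

```python
import math


def days_to_upload(data_tb: float, daily_cap_gb: float = 750.0) -> int:
    """Whole days needed to push data_tb terabytes through a daily upload cap."""
    return math.ceil(data_tb * 1000 / daily_cap_gb)


# Re-uploading a second copy of 40TB at 750GB/day:
print(days_to_upload(40))  # 54
```

Under that assumption, anything read into the cache for duplication but not yet uploaded would have to wait on the cache drive for the whole period, which is why the cache size relative to the dataset matters so much in this scenario.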

-

Can you shed some more light on what exactly happens when these types of features are turned on or off on existing drives that already hold large amounts of data? I'm particularly interested in the details behind a nested CloudDrive+DrivePool setup (multiple partitions on CloudDrives, pooled). Some examples:
1) 40+TB of data on a single pool consisting of a single CloudDrive split into multiple partitions, mounted with a 50GB cache on a 1TB drive. What EXACTLY happens when duplication is turned on in CD, or when file duplication is turned on in DP?
2) 40+TB on a pool which itself consists of a single pool. That inner pool consists of multiple partitions from a single CloudDrive. A second CloudDrive is partitioned and its partitions are pooled together (in yet another pool). The resulting pool is added to the first pool (the one that directly contains the data), so that it now consists of two pools. Balancers are enabled in DrivePool, so it begins trying to balance the 40TB of data between the two pools. Same question here: what EXACTLY would happen?
3) More simply, what happens on a CloudDrive if duplication is disabled?
I wish there were a bit more clarity on how these scenarios would be handled. My main concern is that suddenly my 40TB of data will be duplicated all at once (or, in the second scenario, DrivePool will rapidly begin migrating 20TB of data), instantly filling my 1TB cache drive, destabilizing my CloudDrive, and resulting in drive corruption/data loss. As far as I can tell from the documentation, there is nothing in the software to mitigate this. Am I wrong?
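For the second scenario, the amount of data in motion can be estimated if you assume the balancer aims for an equal share on each member pool. That is a simplifying assumption for illustration only; DrivePool's balancers follow their own rules, and the helper below is hypothetical.

```python
from typing import Sequence


def data_to_move_for_even_split(used_tb: Sequence[float]) -> float:
    """Total data that must leave over-full members so every member holds an equal share."""
    target = sum(used_tb) / len(used_tb)
    return sum(u - target for u in used_tb if u > target)


moved_tb = data_to_move_for_even_split([40.0, 0.0])  # existing pool vs. newly added empty pool
print(moved_tb)              # 20.0
print(moved_tb * 1000 / 50)  # 400.0, i.e. 400 times the size of a 50GB cache
```

Even if the cache only ever holds a small slice of that at once, every one of those 20TB has to pass through it in both directions (read from one CloudDrive, written to the other).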

-

I have two machines, both running CloudDrive and DrivePool, configured in a similar way. On each, one CloudDrive is split into multiple NTFS partitions, those partitions are joined by DrivePool, and the resulting pool is nested in yet another pool. Only this final pool is mounted via a drive letter. One machine runs Windows Server 2012; the other runs Windows 10 (latest). I save a large video file (30GB+) onto the drive on the Windows Server machine. That drive is shared over the network and opened on the Windows 10 machine. I then start a transfer, on the Windows 10 machine, of that video file from the network-shared drive on the Windows Server machine to the drive mounted on the Windows 10 machine. The transfer runs slower than expected for a while. Eventually, I get connection errors in CloudDrive on the Windows Server machine, and soon after that the entire CloudDrive becomes unallocated. This has happened twice now. I have a gigabit fiber connection, as well as gigabit networking throughout my LAN. I'm also using my own API keys for Google Drive (though I wasn't the first time around, so it's happened both ways). Upload verification was on the first time and off the second time. Everything else about the drive configuration was the same. Edit: to be clear, this only affects the drive I'm trying to copy from, not the drive on the Windows 10 machine I'm writing to (thank god, because that's about 40TB of data).

-

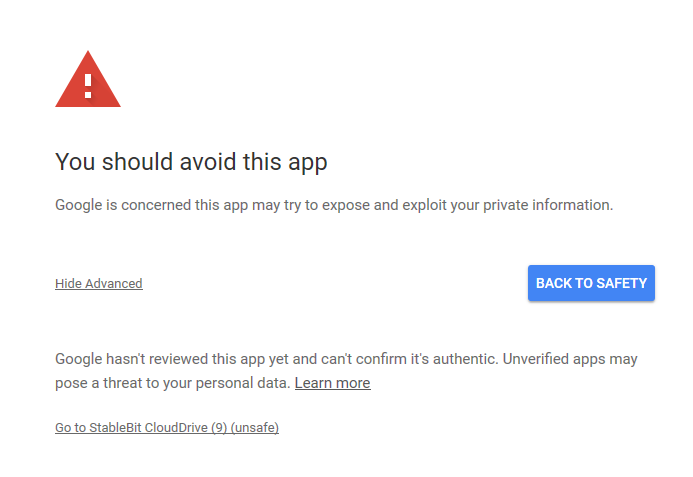

Just received this email from Google regarding my G Suite account. This won't affect CloudDrive, right? " . . . we’ll be turning off access to less secure apps (LSA) — non-Google apps that can access your Google account with only a username and password, without requiring any additional verification steps." Also worth noting: I couldn't remember how CloudDrive authenticated with Google, so I tried adding another account and received this message, which I don't remember seeing before. Looks like Google flagged CloudDrive for some reason?

-

I've been using 1165 on one of my Win10 machines for the longest time with no problems, and I mean heavy usage, hitting the 750GB daily upload cap for a week straight once. The same version ran into the "thread aborted" issue within a couple days on my Server 2012 machine, but 1171 fixed it. Need to install 1174 now to test.

-

I/O error: Thread was being aborted....Can't upload anything

darkly replied to ilikemonkeys's question in General

I was having this issue and after trying a bunch of different things, installing this version and rebooting did the trick. Thanks!

-

Oddly, I ran another chkdsk the other day and got errors on the same partition, but a chkdsk /scan followed by a chkdsk /spotfix got rid of them, and the next chkdsk passed with no errors. I hadn't noticed any issues with my data before this, though. Going forward, I'm running routine chkdsks on each partition to be sure. If the issue was indeed caused by problems with move/delete operations in DrivePool, it would appear to be separate from the first issue I encountered (when I was only using one large CloudDrive), but maybe the same as the second time I experienced it (the first time I used the 8-partition setup). I was using ReFS at the time, though, so I'm not sure. I'll try to find some time to reproduce the error. In case anyone else with some spare time wants to try, this is what I'll be doing:

1) Create a CloudDrive on Google Drive. All settings on default, except the size will be 50TB, full drive encryption will be on, and the drive will be left unformatted.
2) Create 5 NTFS partitions of 10TB each on the CloudDrive.
3) Add all 5 partitions to a DrivePool, leaving all settings on default.
4) Add the resulting pool, by itself, to one more pool.
5) Create the following directory structure on this final pool: two folders at the root (call them A and B), one folder within each of these (call them A2 and B2), and finally one folder inside A2 (A3).
6) Within A3, create 10 text files.
7) Move A3 back and forth between B2 and A2, checking the contents each time, until files go missing.

This approximates my setup at a much simpler scale and SHOULD reproduce the issue, if I'm understanding Chris correctly about what could be causing it, and if that is indeed the cause.
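Steps 5 to 7 in the list above are easy to automate, so the move can be repeated many times without babysitting it. The sketch below assumes the final pool is reachable at a path you pass in, and it uses ordinary file moves, so it exercises whatever the pool does for a same-volume move rather than calling any DrivePool API. The function name is hypothetical.

```python
import shutil
from pathlib import Path
from typing import Optional


def run_move_test(pool_root: Path, file_count: int = 10, max_rounds: int = 100) -> Optional[int]:
    """Steps 5-7: create A/A2/A3 and B/B2, put text files in A3, then move A3
    back and forth between A2 and B2, checking its contents after every move.

    Returns the round on which files went missing, or None if nothing was lost.
    """
    a2 = pool_root / "A" / "A2"
    b2 = pool_root / "B" / "B2"
    if (b2 / "A3").exists():
        raise FileExistsError(f"{b2 / 'A3'} is left over from an earlier run; remove it first")

    # Steps 5 and 6: directory structure plus the test files.
    a3 = a2 / "A3"
    a3.mkdir(parents=True, exist_ok=True)
    b2.mkdir(parents=True, exist_ok=True)
    expected = set()
    for i in range(file_count):
        name = f"file{i:02d}.txt"
        (a3 / name).write_text(f"test file {i}\n")
        expected.add(name)

    # Step 7: alternate the move direction and verify the contents each time.
    current = a3
    for round_no in range(1, max_rounds + 1):
        target_parent = b2 if current.parent == a2 else a2
        current = Path(shutil.move(str(current), str(target_parent / "A3")))
        missing = expected - {p.name for p in current.iterdir()}
        if missing:
            print(f"round {round_no}: missing {sorted(missing)}")
            return round_no
    return None
```

For example, `run_move_test(Path("P:/"), max_rounds=500)` would run it against a pool mounted as P: (a hypothetical drive letter). Only missing files count as a failure, so extra files already sitting in A3 don't trigger a false report.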
I plan on getting another set of licenses for all 3 products in the coming weeks as I transition from hosting my Plex server on my PowerEdge 2950 to my EliteBook 8770w, which has a much better CPU for decoding and encoding video streams (only one CPU vs. two, obviously, but the server CPU had 1 thread per core, and the 8770w's i7-3920XM has much better single-threaded performance for VC1 decoding). I probably won't have much time to attempt to reproduce the issue until this happens, but I'll let you know once I do. Finally, some questions: Is there any best practice to avoid triggering those DrivePool issues, or any workaround for the time being? Do you know the scope of the potential damage? Will it always be just some messy file system corruption that chkdsk can clean up, or is there potential for more serious data corruption with the current move/delete issues?

-

I feel like this kinda defeats the purpose of CloudDrive. I will say there are other tools that do this already (most obviously something like Google Drive File Stream, but there are other third-party paid options that work with other cloud providers). Are those not options for you? To address your issue directly, though: I did describe my workaround on another thread, and the first response on this thread covers part of it: network share your drive over the LAN. In addition to this, I run OpenVPN on my Asus router to access my drive away from home if need be. I also use Solid Explorer on Android to access my files from my phone (after connecting with the Android OpenVPN client).

-

I just network share the CloudDrive from the host (a 24/7 server), and then all my other systems on the local network can read/write to it. Every machine on my network is connected via gigabit Ethernet, so I don't notice much of a drop in performance doing this. I also have gigabit upload and download through my ISP. For mobile access, my home network runs on an Asus router with built-in VPN functionality, hosting an OpenVPN server. I connect to it using OpenVPN on my Android phone and use Solid Explorer to mount the network share (having gigabit upload really helps here). MX Player with the custom codec available on the XDA forums lets me directly play just about any media on my CloudDrive, bypassing Plex entirely.

-

Got some updates on this issue. I'm still not sure what caused the original corruption, but it seems to have affected only the file system, not the data. I'm not sure whether it happened for the same reasons as in the past, but I'm happy to report that I was able to fully recover from it. The chkdsk eventually went through after rebooting and remounting the drive a few times. As I suspected above, only one partition of the CloudDrive was corrupted, and in this case the multi-partition CloudDrive + DrivePool setup actually saved me quite a headache, as only the files on that partition had gone missing (because DrivePool deals with entire files at a time). I took the partition offline and ran chkdsk on it a total of 5 times (the first two being the runs I mentioned above, which stalled at a certain point; the next 3 performed after the reboot and remounting) before it finally reported no more errors. I remounted the partition and, upon checking my data, found that everything was accessible again. Just to be sure, I'm running StableBit Scanner in the background until it passes the entire cloud drive, though that's going to take a while, as it's a 256TB drive. One thing someone could maybe look into: the issue seemed to have happened during a move operation on the CloudDrive. I had moved a bunch of directories to a different directory on the same drive (in one operation), and found that some files had not been moved once my data was available again. Maybe this is somehow related to what caused the corruption to begin with, or maybe it's just coincidence.
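Rerunning chkdsk until it comes back clean, as described above, can be scripted. This sketch relies on chkdsk's documented exit codes (0 means no errors were found; other codes mean errors were found and fixed, cleanup was performed, or the disk could not be checked), passes `/f` so errors are actually repaired, and assumes the volume is already out of use, as the partition was here. The function names and the injectable `run` parameter are hypothetical; the parameter just lets the loop be tried without touching a real disk.

```python
import subprocess
from typing import Callable, List, Optional


def default_run(args: List[str]) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(args).returncode


def chkdsk_until_clean(volume: str, max_runs: int = 5,
                       run: Callable[[List[str]], int] = default_run) -> Optional[int]:
    """Run `chkdsk <volume> /f` until it exits with 0 (no errors found).

    volume can be a drive letter such as "E:" or a mount-point path.
    Returns the number of the run that came back clean, or None if none did.
    """
    for attempt in range(1, max_runs + 1):
        code = run(["chkdsk", volume, "/f"])
        print(f"run {attempt}: chkdsk exit code {code}")
        if code == 0:
            return attempt
    return None
```

chkdsk needs an elevated prompt, and a loop like this can't get past a run that hangs, like the stalls that only cleared after the reboot and remount.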

-

Ohh, I just realized you were speaking hypothetically as if CloudDrive could mount to multiple machines... I get what you're saying now lol

-

I understand the cache and upload process, but I was wondering what you meant by "situations where data corruption [ . . . ] is highly likely". As long as the data syncs correctly, there would be no issues, right? So do you just mean cases where the cached data fails to upload correctly to the cloud, or are there other issues that could present themselves even if the data appears to sync properly? I know there's the upload verification option. Are there any situations that would fall outside its scope?
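One way to reason about the scope of upload verification is to assume it works the way the name suggests: after a chunk is uploaded, it is read back and compared with what was sent. That is an assumption about CloudDrive's implementation, not something stated here. Under it, verification catches chunks that arrive damaged or incomplete at the provider, but it cannot catch data that was already wrong in the cache before upload, or chunks that never got uploaded because the cache was lost first. `FlakyStore` below is a hypothetical in-memory stand-in for the provider.

```python
import hashlib
from typing import Dict


class FlakyStore:
    """Hypothetical in-memory provider that corrupts the first few uploads it receives."""

    def __init__(self, corrupt_first_n: int = 0) -> None:
        self.blobs: Dict[str, bytes] = {}
        self.corrupt_left = corrupt_first_n

    def put(self, key: str, data: bytes) -> None:
        if self.corrupt_left > 0 and data:
            self.corrupt_left -= 1
            data = data[:-1] + bytes([data[-1] ^ 0xFF])  # flip every bit of the last byte
        self.blobs[key] = data

    def get(self, key: str) -> bytes:
        return self.blobs[key]


def upload_verified(store: FlakyStore, key: str, data: bytes, max_attempts: int = 3) -> int:
    """Upload a chunk, read it back, and retry until the stored copy matches.

    Returns the attempt number that verified; raises OSError if none did.
    """
    expected = hashlib.sha256(data).digest()
    for attempt in range(1, max_attempts + 1):
        store.put(key, data)
        if hashlib.sha256(store.get(key)).digest() == expected:
            return attempt
    raise OSError(f"chunk {key!r} failed verification after {max_attempts} attempts")
```

Note that the comparison is against `data` as it sits locally, so if those bytes were already bad, the check passes anyway.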

-

Can you explain this further?

-

Yup, it's me again, on the latest version. I completely trashed the cloud drives I was working with before and made a new one a couple of months ago. It was working fine until now, when I scanned through all my files and found that random files in random directories all over my drive had gone missing again. CloudDrive is version 1.1.0.1051 and DrivePool is 2.2.2.934, running on Windows Server 2012. My cloud drive is a 256TB drive split into 8 NTFS partitions (I ditched ReFS in case that was causing the issues before; apparently not), each mounted to an NTFS directory. Multiple levels of DrivePool then pool all 8 partitions into one drive, then pool that drive (on its own for now, but designed this way for future expansion) into another drive. All content is handled through that final drive. It seemed to be working fine for months until I looked through the files today and found that random ones (a good 5-10%) have gone missing entirely. I really need to get this figured out as soon as possible. I've wasted so much time on this since I first reported it almost a year ago. What information do you need?
Edit: No errors in either program. I even bought two SSDs for a RAID1 to use as my cache drive, so I've eliminated bandwidth issues.
Edit again: I just tried remounting the drive, and DrivePool shows "Error: (0xC0000102) - {Corrupt File}", which caused it to fail to measure a particular path within one of the 8 partitions. How does this happen? Is there a way to restore it?
Another note: I don't think it's just a DrivePool issue, because the first time I reported this, I was using one single large CloudDrive without DrivePool.
More: I tried going into the file system of that partition directly and found that one of the folders inside one of DrivePool's directories was corrupt and couldn't be accessed. I'm running chkdsk on that entire partition now and will update once it's done.
Sigh... if it ever completes, that is.
It's hanging on the second "recovering orphaned file" task it got to. I'll leave it running for however long seems reasonable... A question while I'm waiting: is there a better way to set up a large (on the order of hundreds of terabytes) cloud drive without breaking things such as the ability to grow it in the future or the ability to run chkdsk (which fails on large volumes)? I'm at my wit's end here and not sure how to move forward. Is anyone here storing large amounts of data on gdrive without running into this file deletion/corruption issue? Is it my server? I've had almost 10TB of local storage sit here just fine without ever losing any data. From searching this forum, almost no one else seems to be having the issues I've been plagued with. Where do I go from here? I'm almost ready to just send my server to you guys for monitoring and debugging (insert forced laughter as my mind slowly devolves into an insane mess here).
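On sizing a drive of hundreds of terabytes, one hard constraint on any single NTFS partition is its cluster count: Windows' NTFS implementation addresses at most 2^32 - 1 clusters per volume, so the cluster size chosen at format time caps how large a partition can be. The sketch below finds the smallest cluster size in the classic 4 KB to 64 KB range that fits a given partition (newer Windows versions also allow larger clusters, which it ignores). The helper name is hypothetical, and it says nothing about why chkdsk stalls on large volumes.

```python
from typing import Optional

TIB = 2**40
MAX_NTFS_CLUSTERS = 2**32 - 1  # per-volume cluster limit in Windows' NTFS implementation


def min_ntfs_cluster_size(partition_bytes: int) -> Optional[int]:
    """Smallest cluster size (bytes) that lets NTFS address the whole partition."""
    for cluster in (4096, 8192, 16384, 32768, 65536):
        if partition_bytes <= cluster * MAX_NTFS_CLUSTERS:
            return cluster
    return None  # needs clusters larger than 64 KB


# A 256 TiB drive split into 8 equal partitions:
per_partition = 256 * TIB // 8
print(per_partition // TIB, min_ntfs_cluster_size(per_partition))  # 32 16384
```

An exact 32 TiB partition misses the 8 KB limit by a single cluster, which is why it lands on 16 KB, and a single 256 TiB partition does not fit even at 64 KB.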

-

Here's what I'm trying to figure out. I only have one actual "cloud drive", but it's split into multiple partitions. Those are pooled together in DrivePool, and the resulting pool is pooled as well (on its own for now, for future-proofing) in yet another pool. So obviously, I'd need both CloudDrive and DrivePool on the second system to properly mount the drive. I'm confused about how this would work. First I mount the cloud drive. I'd assume my system would try to automatically assign drive letters to each partition? I then have to manually remap each partition to a directory instead, right? But either before or after I do that, does DrivePool see the existing DrivePool data on each partition and know that they belong to a pool? Or do I have to add each partition to a pool again? This is the part I'm not sure about. Basically, will the existing pool structure be recognized and rebuilt automatically? Because without the pool, the data on each partition is useless.

-

Not sure why you keep going back to DrivePool balancing. This is the CloudDrive forum after all. And as I've repeatedly stated above, I'm not talking about a DrivePool cache. I'm talking about CloudDrive's cache. As I've said, again, the only way DrivePool is being used is to pool together the multiple partitions of the CloudDrive into one drive.

-

Not sure what balancing you're talking about. I'm only using DrivePool with the partitions of my cloud drive (the configuration I described 2 posts above). The SSD would only be used as a cache for the letter-mounted drive (the top-level pool).

-

So right now, my cloud drive has 8 partitions, each mounted to a directory; all 8 are pooled together in DrivePool and mounted to another directory, and that pool is in another pool by itself. Only the highest-level pool is assigned a drive letter, and I access all the data through it alone. How would I go about properly mounting this on another system if I needed to? Does the amount of upload data queued at the time of a drive failure affect the likelihood of issues/corruption? Is there any possibility of adding more levels of protection in the future? I don't know the inner workings of CloudDrive very well, but off the top of my head, would something like writing empty placeholder files to Google first reduce the risk of damage? Or maybe there's something else you could do entirely? I wouldn't know where to start. Yeah, I probably need to do this. The only problem for me is that I'm regularly working with around a terabyte at a time, so I was hoping to use a 1TB SSD. Two of those gets $$$$$.

-

I've been running my drive with its cache on my RAID so far, but because the amount of I/O from other apps causes a bottleneck from time to time, I've been considering getting a dedicated SSD to use as a cache drive. My only concern with moving it off my RAID is: what happens when the cache drive fails? The way I see it, there are a few different scenarios here. For instance, the cache could hold only downloaded read cache at the time of failure, there could be a write cache actively uploading, or there could be an upload queue with uploads currently paused. There could be other cases I haven't considered too. What I'm interested in is what would happen if the drive failed in each of these scenarios. What protections does CloudDrive have to prevent or limit data loss/corruption in the cloud?
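The scenarios above can be framed with a generic write-back cache model: each cached block is either clean (an identical copy already exists in the cloud, like downloaded read cache or an already-uploaded write) or dirty (written locally, not yet uploaded). If the cache drive dies, only the dirty blocks are lost, and whether their upload was actively running or paused makes no difference. This is a textbook model, not a description of CloudDrive's internals, and the class below is hypothetical. One thing it does illustrate: if some blocks from a single write made it to the cloud and others did not, the cloud copy can be left inconsistent rather than simply older.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class WriteBackCache:
    """Hypothetical model of a block cache in front of cloud storage."""
    clean: Set[int] = field(default_factory=set)  # blocks with an identical copy in the cloud
    dirty: Set[int] = field(default_factory=set)  # blocks written locally, not yet uploaded

    def read_from_cloud(self, block: int) -> None:
        self.clean.add(block)

    def write(self, block: int) -> None:
        self.clean.discard(block)
        self.dirty.add(block)

    def upload(self, block: int) -> None:
        if block in self.dirty:
            self.dirty.remove(block)
            self.clean.add(block)

    def lost_if_cache_fails(self) -> Set[int]:
        """Blocks whose only current copy lives on the cache drive."""
        return set(self.dirty)


cache = WriteBackCache()
cache.read_from_cloud(1)             # scenario 1: read cache only
print(cache.lost_if_cache_fails())   # set()
cache.write(2)
cache.write(3)
cache.upload(2)                      # block 3 is still queued (uploading or paused)
print(cache.lost_if_cache_fails())   # {3}
```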

-

If DrivePool is distributing data between multiple gdrive accounts, does that also increase the potential read/write speed of the drive? My current setup is a 256TB CloudDrive partitioned into eight 32TB partitions. All 8 partitions are pooled into 1 DrivePool. That DrivePool is nested within a second DrivePool (so I can add additional 8-partition pools to it in the future). Basically: Pool(Pool(CloudDrive(part1,part2,part3,...,part8))). Hypothetically, if I did something like Pool(Pool(CloudDrive_account1(part1,part2,part3,...,part8)),Pool(CloudDrive_account2(part1,part2,part3,...,part8)),Pool(CloudDrive_account3(part1,part2,part3,...,part8))), would I see a noticeable increase in performance, OTHER than the higher upload limit?
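Setting aside anything provider-specific (per-account API throttling, CloudDrive's own thread settings), the upload side of this can be estimated: the pooled daily limit grows with the number of accounts, but everything still goes through one local uplink. The sketch assumes 750GB per account per day and that DrivePool spreads new data evenly across the accounts; both the helper and the even split are assumptions for illustration, and it says nothing about read speed.

```python
def effective_daily_upload_gb(accounts: int, link_mbps: float, cap_gb_per_account: float = 750.0) -> float:
    """Daily upload ceiling: the smaller of the pooled account caps and what the uplink can carry."""
    pooled_cap_gb = accounts * cap_gb_per_account
    link_gb_per_day = link_mbps * 86400 / 8000  # Mbps -> GB/day (1 GB = 8000 megabits)
    return min(pooled_cap_gb, link_gb_per_day)


for n in (1, 3, 14, 15):
    print(n, effective_daily_upload_gb(n, 1000))
```

On a gigabit line the uplink tops out around 10,800GB/day, so it only becomes the bottleneck beyond 14 accounts; with 3 accounts the pooled cap (2,250GB/day) is still the binding limit.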

-

Since CloudDrive doesn't use personal gdrive API keys, how does it double API calls? I figured this would just increase the upload limit. Also, some clarification: is this using distinct G Suite accounts, or can it simply be multiple users under one G Suite account, each with their own gdrive account? Does each user on the G Suite account get 750GB of upload a day?

-

So this version should fix the issue of files disappearing? I installed it a while ago, but I've been swamped by other things since. Unfortunately, at this point it's become rather difficult for me to tell whether more things get deleted, as the last cycle of deletions took out roughly half my files and I still haven't identified everything deleted in that incident.