Posts posted by Shane

-

-

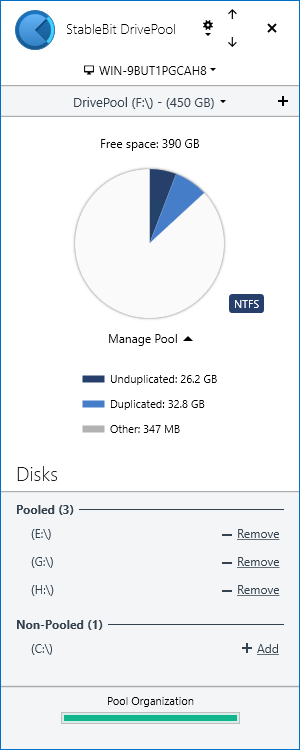

Directly under the pie graph, "Manage Pool" is clickable and it highlights when you place the mouse cursor over it; I've linked a screenshot:

-

In the GUI you should be able to click Manage Pool and then click Balancing.

-

Yes, if you want to keep the old drives together as a backup/pool, that would be the way to do it.

Once all the old drives have been disconnected and the computer restarted, you should be able to rename F: to E: straightforwardly.

Caveat: if you have shared any folders on E: then I'd expect you would need to recreate those shares on F:.

-

Hi sardonicus, the button to trigger manual rebalancing only appears if DrivePool determines the pool needs rebalancing (and will disappear once it doesn't) according to the current balancing configuration.

-

Try uninstalling DrivePool then deleting the "C:\ProgramData\StableBit DrivePool\" folder and rebooting before installing an earlier version?

While I know you mentioned WHS 2011, support for WHS 2012 was discontinued alongside the release of version 2.3.9.1654, and 2.3.8.1600 was the previous DrivePool release before that, if that helps. You can find links to download the various release versions here.

-

On 11/1/2024 at 8:47 PM, RockmanXX said:

To add more information, I have also tried directly transfer the file to the disk itself and it seems the freeze does not happen. (the transfer speed stay constantly ~150MB/s if transfer the files to disk directly)

Just checking, have you confirmed that the disk you tried transferring to directly is the disk that the pool is attempting to write to (e.g. via opening the Disk Performance section of the DrivePool GUI or using Windows Resource Monitor)?

Does the problem go away if you tick Manage Pool -> Performance -> Bypass File System Filters?

What is the product model code of the affected disk?

-

Hi, if you'd like the StableBit team to look at your specific BSOD, you'll need to open a ticket so they can provide you with instructions for supplying error data from your machine.

-

The light blue, dark blue, orange and red triangle markers on a Disk's usage bar indicate the amounts of those types of data that DrivePool has calculated should be on that particular Disk to meet certain Balancing limits set by the user, and it will attempt to accomplish that on its next balancing run. If you hover your mouse pointer over a marker you should get a tooltip that provides information about it.

https://stablebit.com/Support/DrivePool/2.X/Manual?Section=Disks List (the section titled Balancing Markers)

-

1. It might be a damaged volume. You could try running a chkdsk scan to see whether you need to run a repair; perhaps do a chkdsk /r to look for bad sectors.

2. It might be damaged file permissions. I would suggest trying these fixes (the linked wiki method and the SetACL method) to see if that solves things.

3. Another possibility is some sort of problem involving reparse points or symlinks.

If none of the above help, I'd suggest opening a support ticket.

-

As a general caution (I haven't tried kopia) be aware that some backup tools rely on fileid queries to identify files and unfortunately DrivePool's current implementation of fileid is flawed (primarily relevant is that files on a pool do not keep the same fileid between reboots and the resulting collision chance is very high). I would test thoroughly before committing.

-

Hi David,

DrivePool does not split any files across disks. Each file is stored entirely on a disk (or if duplication is enabled, each copy of that file is stored entirely on a separate disk to the others).

You could use CloudDrive on top of DrivePool to take advantage of the former's block-based storage, but note that doing so would give up one of the advantages of DrivePool (that if a drive dies the rest of the pool remains intact); if you did this I would strongly recommend using either DrivePool's duplication (which uses N times as much space to allow N-1 drives to die without losing any data from the pool, though it will be read-only until the dead drive is removed) or hardware RAID5 in arrays of three to five drives each or RAID1 in pairs or similar (which allows one drive per array/pair to die without losing any data and would also allow the pool to remain writable while you replaced the dead drive).
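As a rough back-of-the-envelope comparison of those options, here's a small Python sketch of usable space versus how many drive failures each layout is guaranteed to survive. It assumes identical drives and ignores file system overhead and the complications DrivePool faces placing copies when drive sizes differ; the function names are just for illustration.

```python
def duplication(drives: int, size_tb: float, copies: int) -> tuple[float, int]:
    """DrivePool-style duplication: every file stored `copies` times on different disks.
    Uses `copies` times the space and survives copies - 1 dead drives."""
    if copies > drives:
        raise ValueError("need at least as many drives as copies")
    return drives * size_tb / copies, copies - 1

def raid5_arrays(arrays: int, drives_per_array: int, size_tb: float) -> tuple[float, int]:
    """RAID5: one drive's worth of parity per array; one failure per array,
    so only one failure is guaranteed survivable overall (worst case)."""
    return arrays * (drives_per_array - 1) * size_tb, 1

def raid1_pairs(pairs: int, size_tb: float) -> tuple[float, int]:
    """RAID1 mirrored pairs: half the raw space; one failure per pair (worst case 1)."""
    return pairs * size_tb, 1

# Six 10 TB drives laid out different ways: (usable TB, failures guaranteed survivable)
print(duplication(6, 10, 2))    # (30.0, 1)
print(duplication(6, 10, 3))    # (20.0, 2)
print(raid5_arrays(2, 3, 10))   # (40, 1)
print(raid1_pairs(3, 10))       # (30, 1)
```

Note the RAID rows can survive more than one failure if the failures land in different arrays/pairs; the number shown is the worst case.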

-

Hi, first do a Manage Pool -> Remeasure and a Cog icon -> Troubleshooting -> Recheck Duplication just in case.

If that doesn't fix it and you want to just get rid of the duplication you can then Manage Pool -> File Protection -> Pool File Duplication -> Disable Duplication.

If you want to see which folders are duplicated first, you can either:

- use Manage Pool -> File Protection -> Folder Duplication to go through all the folders via the GUI; or

- if you have a LOT of folders and are having trouble isolating the culprits, run the following two commands from a Command Prompt run as Administrator, where T is your pool drive:

  - dpcmd check-pool-fileparts T: 3 true 0 >c:\poolcheck.txt <-- creates a text file showing the actual and expected duplication level of every file in the pool; this can take a long time, especially if you have a lot of files in the pool.

  - type c:\poolcheck.txt | find /V "/x1] t:\" >c:\pooldupes.txt <-- creates a text file from the above text file that shows only the duplicated files; this can also take a long time.
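If you'd rather post-process the results in a script, the second command's filtering can be sketched in Python. This assumes, exactly as the find /V command does, that every non-duplicated file appears on a line containing the substring /x1] t:\ (check a few lines of poolcheck.txt first, since the dpcmd output format isn't documented here). The function names are hypothetical, and the encoding is a guess: console redirection may use the OEM code page, so adjust it if file names come out garbled.

```python
from pathlib import Path

# Same substring the find /V filter uses; assumed to mark x1 (non-duplicated)
# files on pool drive t:. Change the drive letter to match your pool.
NON_DUPLICATED_MARKER = "/x1] t:\\"

def duplicated_lines(lines, marker=NON_DUPLICATED_MARKER):
    """Yield only lines that do NOT contain the marker (case-sensitive, like find /V without /I)."""
    for line in lines:
        if marker not in line:
            yield line

def filter_poolcheck(src=r"c:\poolcheck.txt", dst=r"c:\pooldupes.txt"):
    """Write the filtered lines to dst and return how many were kept."""
    text = Path(src).read_text(encoding="utf-8", errors="replace")
    kept = list(duplicated_lines(text.splitlines(keepends=True)))
    Path(dst).write_text("".join(kept), encoding="utf-8")
    return len(kept)
```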

-

Hi haoma.

The corruption isn't being caused by DrivePool's duplication feature (and while read-striping is buggy and should be turned off, that's also not the cause here). EDIT: I originally wrote that read-striping "can have some issues with some older or... I'll say "edge-case" utilities, so I usually just leave it off anyway".

The corruption risk comes in if any app relies on a file's ID number to remain unique and stable unless the file is modified or deleted, as that number is being changed by DrivePool even when the file is simply renamed or moved, or when the DrivePool machine is restarted, and in the latter case it is being re-used for completely different files.

TLDR: as far as I know, currently the most we can do is change the Override value for CoveFs_OpenByFileId from null to false (see Advanced Settings). At least as of this post's date it doesn't fix the File ID problem, but it does mean affected apps should either safely fall back to alternative methods or at least return some kind of error, so you can avoid using them with a pool drive.

-

If you have version 2.3.4.1542 or a later release of DrivePool, when you click Remove on the old IronWolf you have the option to tick "Leave pooled file copies on the removed drive (archival remove)" in the removal options dialog that pops up.

So you could just add the new 20TB, click Remove on the old 8TB and tick that option before confirming.

(if you feel anxious about it you could still copy the old 8TB to your external 14TB first)

-

Hi Austin, I would either revert to the previous release (2.6.8.4044) or update to the newer releases (2.6.10.4074 or 2.6.11.4077) of Scanner and see if the problem remains or goes away (link to releases).

Scanner's logs are written to "C:\ProgramData\StableBit Scanner\Service\Logs".

-

It's not possible to 'replace' your boot drive with a pool drive; is that what you're wanting?

-

That does sound like the app could be using FileID to do syncs; DrivePool's implementation of FileID reuses old IDs for different files after a reboot instead of keeping them until files are deleted (the latter is what real NTFS does). This confuses any apps that assume an ID will forever point to the same file (which is also naughty) but currently there's no setting in DrivePool to make it return the codes that officially tell any apps doing a FileID query to not use it for a particular volume or file. Please let us know what you find out / hear back.
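To illustrate why reusing IDs after a reboot confuses a sync tool, here's a toy Python model. It is not DrivePool's actual algorithm (which isn't documented here): a hypothetical volume hands out IDs from a counter that restarts at every "reboot", and a naive tool that keys its records only on file ID ends up matching the wrong file.

```python
from itertools import count

class ToyVolume:
    """Hypothetical volume that hands out file IDs on demand from a counter
    which restarts at every 'reboot'. A simplified model of the behaviour
    described above, not DrivePool's real implementation."""

    def __init__(self):
        self.reboot()

    def reboot(self):
        self._next_id = count(1)
        self._ids = {}

    def file_id(self, name):
        # The first query for a name after a 'reboot' gets the next free number.
        if name not in self._ids:
            self._ids[name] = next(self._next_id)
        return self._ids[name]

def index_by_id(volume, names):
    """A sync tool that (wrongly) assumes an ID always means the same file."""
    return {volume.file_id(name): name for name in names}

vol = ToyVolume()
known = index_by_id(vol, ["a.txt", "b.txt"])   # {1: 'a.txt', 2: 'b.txt'}
vol.reboot()
new_id = vol.file_id("b.txt")                   # b.txt happens to be queried first
print(new_id, known[new_id])                    # 1 a.txt  <- wrong file
```

Real NTFS avoids this by keeping a file's ID until the file is deleted, which is the behaviour such apps are (unsafely) counting on.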

-

Hi Shinji, this might be related to a file ID problem some of us have encountered with DrivePool. Try opening the advanced settings file and setting the CoveFs_OpenByFileId override to false (edit: and then restart the DrivePool machine and set up the sync again) to see if that helps?
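For anyone who wants to script that change, here's a minimal Python sketch. It assumes Settings.json is ordinary JSON whose top level holds entries shaped like { "Default": ..., "Override": ... } (the layout the DrivePool_CultureOverride entry shows) and uses the path C:\ProgramData\StableBit DrivePool\Service\Settings.json; the helper names are hypothetical. Rewriting the file this way also reformats it, so keep the backup it makes, and still restart afterwards as described above.

```python
import json
from pathlib import Path

# Path as given for the DrivePool service settings; adjust if your install differs.
SETTINGS = Path(r"C:\ProgramData\StableBit DrivePool\Service\Settings.json")

def set_override(settings: dict, key: str, value) -> dict:
    """Set settings[key]["Override"], assuming the {"Default": ..., "Override": ...}
    layout. Raises KeyError if the setting is missing rather than inventing it."""
    settings[key]["Override"] = value
    return settings

def apply_override(path: Path, key: str, value) -> None:
    # "utf-8-sig" tolerates a byte-order mark if the file has one.
    original = path.read_text(encoding="utf-8-sig")
    path.with_name(path.name + ".bak").write_text(original, encoding="utf-8")
    data = set_override(json.loads(original), key, value)
    path.write_text(json.dumps(data, indent=2), encoding="utf-8")

# Usage (as Administrator), then restart the DrivePool service or machine:
# apply_override(SETTINGS, "CoveFs_OpenByFileId", False)
```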

-

5 hours ago, JC_RFC said:

If there was no Object-ID then I agree with the overhead point. However the Drivepool developer has ALREADY gone to all the trouble and overhead of generating unique 128bit Object-ID's for every file on the pool.

This is why I feel it should be trivial to now also populate the File-ID with a unique 64bit value derived from this Object-ID. All the hard work has already been done.

My testing so far hasn't seen DrivePool automatically generating Object-ID records for files on the pool; if all the files on your pool have Object-ID records you may want to look for whatever on your system is doing that.

I suspect that what's trivial in theory ends up being a lot of work in practice. Not only do you need to populate the File-ID records in your virtual MFT from the existing Object-ID records in the underlying physical $ObjID files across all of your poolpart volumes, you also need to be able to generate new File-ID records whenever new files are created on the pool and immediately update those $ObjID files accordingly, ensure these records are correctly propagated during balancing/placement/duplication changes, add the appropriate detect/repair routines to your consistency checking, and do all of this as efficiently and safely as possible.

-

3 hours ago, JC_RFC said:

I just don’t understand why they can’t map the last 64bit of object-id to file-id. This seems like a very simple fix to me? If the 128bit object-id is unique for every file then the last 64bit are as well. Just means a limit of unique 18446744073709551615 files for the volume as per ntfs.

Object-id follows RFC 4122 so expect the last 52 bits of those last 64 bits to be the same for every file created on a given host and the first 4 of those last 64 bits to be the same for every file created on every NTFS volume. You'd want to use the 60 bits corresponding to the object-id's creation time and, hmm, I want to say the 4 least significant bits of the clock sequence section? The risk here would be if the system's clock was ever faulty (e.g. dead CMOS battery and/or faulty NTP sync) during object-id creation but that's a risk you're supposed to monitor if you're using object-id anyway.
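The bit-packing suggested above can be sketched with Python's uuid module, assuming the object ID really is a version-1 (time-based) RFC 4122 UUID: UUID.time gives the 60-bit timestamp and UUID.clock_seq the 14-bit clock sequence, so one reading of the proposal puts the timestamp in the high 60 bits and the clock sequence's 4 least significant bits in the low 4. The function name is hypothetical. If you pull the 16 raw bytes out of Windows, they're in GUID (mixed-endian) order, which uuid.UUID(bytes_le=...) accounts for.

```python
import uuid

def object_id_to_file_id(obj_id: uuid.UUID) -> int:
    """Hypothetical mapping: 60-bit timestamp in the high bits, low 4 bits of the
    clock sequence in the low bits. Only meaningful for version-1 UUIDs."""
    if obj_id.variant != uuid.RFC_4122 or obj_id.version != 1:
        raise ValueError("not a time-based (version 1) RFC 4122 UUID")
    return (obj_id.time << 4) | (obj_id.clock_seq & 0xF)

# Build a version-1 UUID from known field values to check the packing.
timestamp = 0x0123456789ABCDE          # 60-bit count of 100 ns intervals
clock_seq = 0x2A5                      # 14-bit clock sequence
node = 0x001122334455                  # 48-bit node (usually a MAC address)
example = uuid.UUID(fields=(
    timestamp & 0xFFFFFFFF,                     # time_low
    (timestamp >> 32) & 0xFFFF,                 # time_mid
    ((timestamp >> 48) & 0x0FFF) | (1 << 12),   # time_hi_version (version 1)
    ((clock_seq >> 8) & 0x3F) | 0x80,           # clock_seq_hi_variant (RFC 4122)
    clock_seq & 0xFF,                           # clock_seq_low
    node,
))
print(hex(object_id_to_file_id(example)))  # 0x123456789abcde5
```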

First catch would be the overhead. Every file in NTFS automatically gets a file-id as part of being created by the OS and it's a computationally simple process; creating an object-id (and associated birthobject-id, birthvolume-id and domain-id) is both optional and computationally more complex. That said, it is used in corporate environments (e.g. for/by Distributed Link Tracking and File Replication Service); I'd be curious as to how significant this overhead actually is.

Second catch would again be overhead. DrivePool would have to ensure that all duplicates have the same birthobject-id and birthvolume-id, with queries to the pool returning the birthobject-id as the pool's object-id, I think... which either way means another subroutine call upon creating each duplicate. Again, I don't know how significant the overhead here would be.

But together they'd certainly involve more overhead than just "hey grab file-id". How much? Dunno, I'm not a virtual file system developer.

... I'd still want to beta test (or even alpha test) it.

-

9 hours ago, MitchC said:

I don't know what would happen if FileID returned 0 or claimed not available on the system even thought it is an NTFS volume.

I should think good practice would be to respect a zero value regardless (one should always default to failing safely), but the other option would be to return maxint which means "this particular file cannot be given a unique File ID" and just do so for all files (basically a way of saying "in theory yes, in practice no").

DrivePool does have an advanced setting, CoveFs_OpenByFileId; however, currently (1) it defaults to true, and (2) when it is set to false, any query for a file name by file ID fails, but a query for a file ID by file name still returns the (broken) file ID instead of zero. I've just made a support request to ask StableBit to fix that.

Note that if any application is using File ID to assume "this is the same file I saw last whenever" (rather than "this is probably the same file I saw last whenever") for any volume that has users or other applications independently able to delete and create files, consider whether you need to start looking for a replacement application. While the odds of collision may be extremely low it's still not what File ID is for and in a mission-critical environment it's taunting Murphy.

8 hours ago, Thronic said:

Maybe they could passthrough the underlying FileID, change/unchanged attributes from the drives where the files actually are - they are on a real NTFS volume after all. Trickier with duplicated files though...

A direct passthrough has the problem that any given FileID value is only guaranteed to be unique within a single volume, while a pool is almost certainly multiple volumes. As the Microsoft 64-bit File ID (for NTFS, basically a 48-bit MFT record number plus a 16-bit sequence number) isn't that much different from DrivePool's ersatz 64-bit File ID (one incrementing QWORD, I think) in the ways that matter for this, it'd still be highly vulnerable to file collisions, and that's still bad.

... Hmm. On the other hand, if you used the most significant 8 of the 64 bits to instead identify the source poolpart within a pool, theoretically you could still uniquely represent all or at least the majority of files in a pool of up to 255 drives, so long as you returned maxint for any other files ("other files" being any query where the File ID in a poolpart set any of those 8 bits to 1, where the file only resided on the 256th or higher poolpart, or where every File ID returned by the first 255 poolparts was maxint), and still technically meet the specification for Microsoft's 64-bit File ID? I think it should at least "fail safely", which would be an improvement over the current situation?

Does that look right?
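One way to read that proposal, sketched in Python under these assumptions: poolparts are numbered 0 to 254 (so the top byte is never 0xFF and a composed ID can never equal the all-ones "maxint" value), each copy's underlying NTFS ID is used as-is, and for a duplicated file the lowest-numbered poolpart with a usable ID wins. All names are hypothetical, and this ignores the stability question: the pool-level ID would still change if balancing moved the file to a different poolpart.

```python
ALL_ONES = 0xFFFF_FFFF_FFFF_FFFF   # "maxint": no unique 64-bit ID for this file
LOW_BITS = 56                      # bits left for the underlying volume's file ID
MAX_POOLPART = 254                 # top byte 0x00-0xFE; 0xFF is never used

def pool_file_id(copies: dict[int, int]) -> int:
    """copies maps poolpart index -> that volume's 64-bit file ID for one file."""
    for index in sorted(copies):
        if index > MAX_POOLPART:
            break                                  # every remaining index is higher
        underlying = copies[index]
        if underlying == ALL_ONES or underlying >> LOW_BITS:
            continue                               # top 8 bits in use; try next copy
        return (index << LOW_BITS) | underlying
    return ALL_ONES                                # nothing representable: fail safely
```

Uniqueness comes from each (poolpart, underlying ID) pair naming one file on one physical volume; the all-ones fallback is what keeps it "failing safely" for anything that doesn't fit.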

8 hours ago, Thronic said:

Does using CloudDrive on top of DrivePool have any of these issues? Or does that indeed act as a true NTFS volume?

@Christopher (Drashna) does CloudDrive use File ID to track the underlying files on Storage Providers that are NTFS or ReFS volumes in a way that requires a File ID to remain unique to a file across reboots? I'd guess not, and that CloudDrive on top of DrivePool is fine, but...

-

19 hours ago, Thronic said:

That's kind of my point. Hoping and projecting what should be done, doesn't help anyone or anything. Correctly emulating a physical volume with exact NTFS behavior, would. I strongly want to add I mean no attitude or any kind of condescension here, but don't want to use unclear words either - just aware how it may come across online. As a programmer working with win32 api for a few years (though never virtual drive emulation) I can appreciate how big of a change it can be to change now. I assume DrivePool was originally meant only for reading and writing media files, and when a project has gotten as far as this has, I can respect that it's a major undertaking - in addition to mapping strict NTFS proprietary behavior in the first place - to get to a perfect emulation.

As I understood it, the original goal was always to aim for having the OS see DrivePool as much of a "real" NTFS volume as possible. I'm probably not impressed nearly enough that Alex got it to the point where DrivePool became forget-it's-even-there levels of reliable for basic DAS/NAS storage (or at least I personally know three businesses who've been using it without trouble for... huh, over six years now?). But as more applications exploit the fancier capabilities of NTFS (if we can consider File ID to be something fancy) I guess StableBit will have to keep up. I'd love a "DrivePool 3.0" that presents a "real" NTFS-formatted SCSI drive the way CloudDrive does, without giving up that poolpart readability/simplicity.

On that note while I have noticed StableBit has become less active in the "town square" (forums, blogs, etc) they're still prompt re support requests and development certainly hasn't stopped with beta and stable releases of DrivePool, Scanner and CloudDrive all still coming out.

Dragging this back on topic: if there are any beta updates re File ID, I'll certainly be posting my findings.

-

Hi ToooN, try also editing the section of the 'Settings.json' file as below, then close the GUI, restart the StableBit DrivePool service and re-open the GUI?

'C:\ProgramData\StableBit DrivePool\Service\Settings.json'

"DrivePool_CultureOverride": { "Default": "", "Override": null

to

"DrivePool_CultureOverride": { "Default": "", "Override": "ja"

I'm not sure if "ja" needs to be in quotes or not in the Settings.json file. Hope this helps!

If it still doesn't change you may need to open a support ticket directly so StableBit can investigate.
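On the quoting question: if Settings.json is standard JSON, as its name and the existing "Default": "" value suggest, then a string such as ja does need the quotes (only null, true, false and numbers go bare). A quick Python check, wrapping the entry in braces so it parses on its own:

```python
import json

quoted = '{"DrivePool_CultureOverride": {"Default": "", "Override": "ja"}}'
unquoted = '{"DrivePool_CultureOverride": {"Default": "", "Override": ja}}'

def parses(text: str) -> bool:
    """Return True if text is valid JSON."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False

print(parses(quoted), parses(unquoted))  # True False
```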

-

"Am I right in understanding that this entire thread mainly evolves around something that is probably only an issue when using software that monitors and takes action against files based on their FileID? Could it be that Windows apps are doing this?"

This thread is about two problems: DrivePool incorrectly sending file/folder change notifications to the OS and DrivePool incorrectly handling file ID.

From what I can determine, the Windows/Xbox App problem is not related to the above; an error code I see is 0x801f000f which - assuming I can trust the (little) Microsoft documentation I can find - is ERROR_FLT_DO_NOT_ATTACH, "Do not attach the filter to the volume at this time." I think that means it's trying to attach a minifilter driver to the volume and failing because it assumes that volume must be on a single, local, physical drive?
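For anyone who wants to check that decoding: an HRESULT packs a severity bit (bit 31), an 11-bit facility (bits 16-26) and a 16-bit code (bits 0-15). A minimal Python sketch splits 0x801f000f into severity 1 (failure), facility 0x1F and code 0x000F; as far as I know facility 31 is FACILITY_USERMODE_FILTER_MANAGER in winerror.h, which fits the filter-manager reading above.

```python
def decode_hresult(hr: int) -> tuple[int, int, int]:
    """Split a 32-bit HRESULT into (severity, facility, code)."""
    hr &= 0xFFFFFFFF                 # treat negative (signed) values as unsigned
    severity = (hr >> 31) & 0x1      # 1 = failure
    facility = (hr >> 16) & 0x7FF    # 11-bit facility field
    code = hr & 0xFFFF               # 16-bit error code
    return severity, facility, code

sev, fac, code = decode_hresult(0x801F000F)
print(sev, hex(fac), hex(code))  # 1 0x1f 0xf
```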

TLDR if you want to use problematic Windows/Xbox Apps with DrivePool, you'd have to use CloudDrive as an intermediary (because that does present itself as a local physical drive, whereas DrivePool only presents itself as a virtual drive).

"But this still worries me slightly; who's to say e.g. Plex won't suddenly start using FileID and expect consistency you get from real NTFS?"

Well, nobody except the Plex devs can say that, but if they decide to start using IDs, one hopes they'll read the documentation as to exactly when they can and can't be relied upon. As an aside, it remains my opinion that applications, especially for backup/sync, should not trust file ID as the sole identifier of (persistent) uniqueness on an NTFS volume: it is not specced for that, and that's what object ID is for. And while its handling of file ID is terrible, DrivePool does appear to be handling object ID persistently (albeit it still has some problems with birthobject ID and birthvolume ID, though it appears to use zero appropriately for the former when that happens).

P.S. "I have had server crashes lately when having heavy sonarr/nzbget activity. No memory dumps or system event logs happening" - that's usually indicative of a hardware problem, though it could be low-level drivers. When you say heavy, do you mean CPU, RAM or HDD? If the first two, make sure you have all over-clocking/over-volting disabled, then run a memtest and see if the problem persists? Also, a new stable release of DrivePool came out yesterday; you may wish to try it, as the changelog indicates it has performance improvements for high load conditions.

Only adding files to single drive in pool

DrivePool's default behaviour is to write to whichever drive* has the most free space; given two empty drives, one large and one small, it will write to the large one until its free space is less than the small one's.

Presuming that you started with an empty pool on empty drives, and the stats in your post are the end result, notice that the bytes free on the two drives are very close.

*(if you have real-time duplication enabled, drives plural)
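That behaviour can be sketched as a toy Python model, assuming a single copy per file (no real-time duplication), files that always fit, and no balancers or file placement rules in play. It illustrates "most free space wins"; it is not DrivePool's actual placement code.

```python
def place_files(free: dict[str, int], file_sizes: list[int]) -> dict[str, int]:
    """Write each file to whichever drive currently has the most free space.
    Returns the remaining free space per drive (the input dict is not modified)."""
    remaining = dict(free)
    for size in file_sizes:
        target = max(remaining, key=remaining.get)   # ties go to the first drive listed
        remaining[target] -= size
    return remaining

# One large and one small empty drive (arbitrary units, e.g. GB).
print(place_files({"large": 4000, "small": 1000}, [10] * 320))
# {'large': 900, 'small': 900}
```

The first 3000 units all land on the large drive; once both drives have equal free space, writes alternate, so the free space on the two ends up very close, which matches the stats in your post.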