Posts posted by Shane

-

-

Other registry changes to try (separately or together):

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management

PagedPoolSize (REG_DWORD, Hexadecimal) to ffffffff (that's eight 'f' characters)

DisablePagedPoolHint (REG_DWORD, Decimal) to 1

These changes should be reverted if they don't help.
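As a rough sketch, the two values above could be written out as `.reg` text for review before importing (and for keeping a note of what was changed, so it can be reverted). The helper name `render_reg` is made up for this example; the key path and value names are the ones given above, and nothing here touches the registry itself.

```python
from typing import Dict

# Key path as given in the post above.
MEMORY_MANAGEMENT_KEY = (
    r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control"
    r"\Session Manager\Memory Management"
)


def render_reg(key_path: str, dword_values: Dict[str, int]) -> str:
    """Render .reg-format text that sets REG_DWORD values under one key.

    Hypothetical helper for illustration: it only builds text and does
    not read or write the registry.
    """
    lines = ["Windows Registry Editor Version 5.00", "", f"[{key_path}]"]
    for name, value in dword_values.items():
        if not 0 <= value <= 0xFFFFFFFF:
            raise ValueError(f"{name} does not fit in a REG_DWORD: {value}")
        # .reg files store DWORDs as eight hexadecimal digits.
        lines.append(f'"{name}"=dword:{value:08x}')
    return "\n".join(lines) + "\n"


REG_TEXT = render_reg(
    MEMORY_MANAGEMENT_KEY,
    {"PagedPoolSize": 0xFFFFFFFF, "DisablePagedPoolHint": 1},
)
```

Printing `REG_TEXT` shows the lines you would expect to see in an exported `.reg` file for these two values.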

You could also try resetting security permissions on the pool (yes, that sounds weird but yes, it may fix it).

-

You can wait for it to eventually detect the excess duplication and correct it automatically, or you can use the Troubleshooting menu (from the Cog icon) to manually Recheck Duplication immediately. Either way it should reclaim the free space.

Another concern to check however is whether you made any changes to files on the pool between removing the disk and later reconnecting it? If so, you could potentially have issues (e.g. if you removed a drive, deleted a file from the pool that was on that drive, then reconnected the drive, the file would effectively be un-deleted; similarly if you moved a file that was on that drive, a copy would re-appear at the old location; etc).
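To illustrate the un-delete case, here is a toy model that assumes the pool simply shows the union of the files on whichever disks are currently connected. That is a simplification for the sake of the example, not a description of DrivePool's internals, and the function names are made up.

```python
from typing import Dict, Set


def pool_view(disks: Dict[str, Set[str]], connected: Set[str]) -> Set[str]:
    """Files visible in the pool: the union over the connected disks."""
    visible: Set[str] = set()
    for name in connected:
        visible |= disks[name]
    return visible


def delete_from_pool(disks: Dict[str, Set[str]], connected: Set[str],
                     filename: str) -> None:
    """A delete can only reach the disks that are connected right now."""
    for name in connected:
        disks[name].discard(filename)
```

In this model, if `movie.mkv` lives on both `disk1` and `disk2`, and it is deleted while only `disk1` is connected, the copy on `disk2` survives and the file shows up in the pool again once `disk2` is reconnected.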

-

5 minutes ago, Bradleyb said:

If I had duplication turned on and everything in the pool was duplicated, if a single drive died, it could be forcibly removed from the pool and no files would be lost - since everything is on at least two-drives at a time? Am I correct in thinking this?

Yes, that's correct.
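A small sanity check of that reasoning, as a sketch: given a (hypothetical) record of which drives hold each file, a file is only lost when the dead drive held its only copy.

```python
from typing import Dict, Set


def files_lost(placement: Dict[str, Set[str]], dead_drive: str) -> Set[str]:
    """Return the files whose only copy was on dead_drive.

    `placement` maps each file name to the set of drives holding a copy;
    this structure is an assumption made for the example.
    """
    return {
        name
        for name, drives in placement.items()
        if drives and drives <= {dead_drive}
    }
```

With every file on at least two drives, `files_lost` returns an empty set no matter which single drive is named.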

-

Perhaps you could work around the problem with a third-party copier (e.g. UltraCopier, FastCopy or Copy Handler) or file manager (e.g. Double Commander), as some use their own copying code (to varying degrees) rather than rely on Windows?

-

You'd need a plugin to accomplish this. You could try a feature request via the contact form?

-

If it is not already present, try adding PoolUsageMaximum (REG_DWORD, Decimal, 60) to HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\Session Manager\Memory Management in the registry.

If it is already present but higher than 60, record its value and try changing it to 60.

In both cases a restart will be required.
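The decision above can be sketched as a tiny function. Note one assumption: the post doesn't say what to do if the value is already present and at or below 60, so this sketch leaves it alone in that case. The function name is made up, and it only decides what to do; it does not touch the registry.

```python
from typing import Optional, Tuple

TARGET_POOL_USAGE_MAXIMUM = 60  # decimal, as suggested above


def plan_pool_usage_maximum(
    current: Optional[int],
) -> Tuple[Optional[int], Optional[int]]:
    """Return (value_to_write, old_value_to_record).

    `current` is None when PoolUsageMaximum is not present.
    A value_to_write of None means "leave the registry unchanged"
    (an assumption for values already at or below the target).
    """
    if current is None:
        return TARGET_POOL_USAGE_MAXIMUM, None
    if current > TARGET_POOL_USAGE_MAXIMUM:
        return TARGET_POOL_USAGE_MAXIMUM, current
    return None, None
```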

-

DrivePool has the dpcmd CLI tool, but I couldn't find any options re indicating missing disks.

You could test by copying a file to the pool - if it succeeds, there are no missing disks (since pools become read-only while disks are detected missing).
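A minimal sketch of that write test, which could be run before starting the sync. The function name is made up, and a failed write can have other causes (permissions, a full pool, a wrong path), so treat a `False` result as "don't sync yet" rather than proof of a missing disk.

```python
import os
import tempfile


def pool_accepts_writes(pool_root: str) -> bool:
    """Try to create and remove a small probe file in pool_root."""
    try:
        fd, probe_path = tempfile.mkstemp(
            prefix="pool-write-probe-", dir=pool_root
        )
    except OSError:
        # Read-only pool, missing path, no permission, etc.
        return False
    os.close(fd)
    os.remove(probe_path)
    return True
```

For example, a sync script could call `pool_accepts_writes` on the pool's drive root and exit early if it returns `False`.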

I see another problem however - if it is critical that disks not be missing, and if there is no checking during the sync operation itself, then there is no protection against the risk of a disk going missing during the sync operation?

Therefore if a disk going missing means the sync will remove files from the cloud, that indicates you are not using (full) duplication - perhaps you could sync from the individual disks that make up the pool, that way if a disk goes missing during the sync then the sync itself could stop?

-

You could use the Ordered File Placement balancer (or the corresponding sections of the All In One balancer) to tell DrivePool that it should use that drive last for both new and existing files, so that it would re-balance your files into the other drives?

-

On 2/18/2021 at 3:04 AM, RussellS said:

Thanks Shane. So just to be sure, by having 'Do not balance automatically' ticked in Balancing settings the 'evacuation of data' feature will be disabled.

No, as the evacuation feature overrides the Automatic balancing section (because if you're using the evacuation feature, you want it to get to work the moment it notices a problem). So you would also want to do at least one of the following:

- configure the StableBit Scanner entry in the Balancers tab as you prefer (for example, you could turn off everything under File Evacuation while turning on Temperature Control)

- un-tick the StableBit Scanner entry in the Balancers tab to disable it completely (if you don't want Scanner and DrivePool to interact at all)

- un-tick the "Allow balancing plug-ins to force immediate balancing" in the Settings tab if you do not want ANY balancer to be able to override the Automatic balancing section of the Settings tab.

-

I'd take a wild stab and say probably negligible difference so long as Windows is idle and behaving; checking Task Manager, my fileserver's boot SSD is currently averaging ~1% active time, ~450 KB/s transfer rate, ~1ms response time and <0.01 queue length over 60 seconds at idle, and it's a rather old SATA SSD. I think you're much more likely to run into other bottlenecks first.

But YMMV, as it depends on how many simultaneous streams you are planning for, what other background programs you have running, etc. You could test it? Open Task Manager / Resource Monitor, set it to show the drive's active time, transfer rate and queue length, then open some streams while you run an antivirus scan or create a restore point and so on?

-

Sorry, I haven't used CloudDrive nearly enough to be able to tell you whether your plan is actually sound (I use DrivePool and Scanner a lot more) though that may change in the near-ish future.

"Drive Usage set to B only having duplicate files." - Have you tested that this works with a pair of local pools? If so, it should also work with a local+cloud pool pairing.

"Is read striping needed for users of Pool C to always prioritize Pool A resources first?" - According to the manual the answer is no; regardless of whether Read Striping is checked it will not stripe reads between local and cloud pools, instead it tries to only read from the cloud pool if it can't read from the local pool. However, I have noted forum posts about issues with getting DP to prioritise correctly, and I don't know if those issues have been fixed since those posts as I haven't dug through the CloudDrive changelog recently. https://stablebit.com/Support/DrivePool/2.X/Manual?Section=Performance Options.

The rest of your plan seems sound.

-

Yes; the response is handled via a balancer in DrivePool, which you can configure or disable as you desire.

-

In simple terms, some of the methods used by copiers to improve performance push the edge of what is safe. To use a driving analogy, the issue with taking shortcuts via back roads is that sometimes you hit loose gravel during a turn or the map doesn't match the terrain. Great when it works, bad when it doesn't.

It might be worth running a memory diagnostic on your computer, though, just in case.

-

That doesn't account for the possibility of any given pool using a mix of duplication levels. Much better I think to stay with your original suggestions of not treating child pool duplication as Other (which I'd think would be doable via fetching the child pool's own measurements) and checking at interval(s) during re-balancing to see if it's satisfied the goalpost conditions.

-

On 2/15/2021 at 3:00 PM, zeroibis said:

If the above steps does not recreate the issue then something is just wrong for me.

Thanks for your patience! Following your steps recreated the issue. So... yeah, that's a bug.

I'd hazard a guess that because the ability to pool drivepool volumes was added after the ability to pool physical volumes, the pool measuring code hasn't been updated to take that new ability into account.

I've reported this issue to Stablebit in a ticket, however you may still wish to report the constantly oscillating balancing as that may be a separate issue (I wasn't able to reproduce that; I did notice some oscillation with my test data set but it gradually decreased and reached equilibrium after some iterations; your suggestion of having it check partway might be useful there too).

-

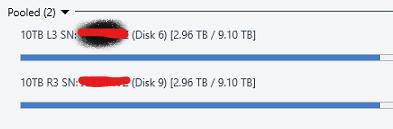

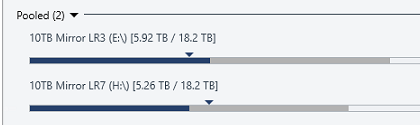

... Something's not right. I'm just going to collect all the relevant screenshots here.

.. Okay, there's no "Other" data showing up on the four physical disks.

... But it says there's "Other" data on the two respective pool volumes.

... Even though the only other folders apparently contain <1MB, which is a negligible amount when we're looking for 5.59 TB of "Other" data?

Wait, do those explorer views still have protected (system) files hidden (Explorer window, View menu, select Options, select View tab, look under Advanced settings, "Hide protected operating system files (Recommended)", defaults to ticked)? Because unless pool measuring has been borked (which would confuse the balancing), you've got 5.59 TB of "Other" data somewhere and one of the possibilities is shadow files in the System Volume Information folder, and I'm not seeing that folder in those screenshots.
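If Explorer's hiding gets in the way, a script can list how much space each top-level entry of a volume takes, hidden or not. This is only a sketch with a made-up function name; folders the script cannot read (System Volume Information normally needs elevated permissions) are silently skipped by `os.walk` and will show as 0 bytes, so a suspiciously small number there is not conclusive.

```python
import os
from typing import Dict


def top_level_sizes(root: str) -> Dict[str, int]:
    """Map each top-level entry under root to its total size in bytes."""
    sizes: Dict[str, int] = {}
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                total = 0
                # os.walk ignores directories it cannot list.
                for dirpath, _dirnames, filenames in os.walk(entry.path):
                    for filename in filenames:
                        try:
                            total += os.lstat(
                                os.path.join(dirpath, filename)
                            ).st_size
                        except OSError:
                            pass  # unreadable file: leave it out of the sum
                sizes[entry.name] = total
            else:
                try:
                    sizes[entry.name] = entry.stat(follow_symlinks=False).st_size
                except OSError:
                    sizes[entry.name] = 0
    return sizes
```

Anything large that is not inside a `PoolPart.*` folder would be a candidate for the "Other" data.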

On that note, just in case it is borked, can you please open the DrivePool GUI and for each of pools E, H and Z - in that order and waiting for each to finish before starting the next - select Manage Pool -> Re-measure...

-

Okay, I think I can see what you're trying to accomplish and why it's having issues with balancing. The default balancer settings expect that a pool is not competing for its parts' free space with external applications. Thus, if a user is placing data into each of the pools A, B and C, but C also happens to use pools A and B as parts, then unless the balancer settings used for C are adjusted to take this into account you can end up with the oscillation you're seeing - because from the point of view of pool C, data being added directly to pool A or B outside of C's own purview/control is the action of an external application, or "Other".

Your options are pretty much:

- Continue to micro-manage C's balancing yourself.

- Turn off automatic balancing for C, or have it balance only every so often.

- Adjust balancing for C until it can tolerate A and B being used independently.

- Place data into A or B via C instead of directly, by making use of the File Placement feature of balancing.

Personally I'd pick #4, but your use-case may vary.

-

It's possible that the disconnects are the result of the provider (Dropbox in this case). You might have run afoul of their firewalls for any of a number of innocent reasons. You could try throttling your CloudDrive upload/download speed/threads, etc.

Otherwise you might need to contact Dropbox to see whether you can get them to investigate.

If you feel it's on CloudDrive's end (or Dropbox goes "nope, not us"), you could open a support ticket with StableBit and they could work with you to examine the connection logs in detail to see where exactly the ball is being dropped.

Alternatively, if you can still manually download the Stablebit folders from your provider to a local disk, it should be possible to create a local instance from which to extract your backup... @Christopher (Drashna) I've spent some time looking, but I was unable to find anything official in the user manual or wiki or forums about how to manually copy/setup a clouddrive as an instance on a local disk in the event a cloud provider becomes unreliable, only comments that it is indeed doable. It seems like the sort of issue that should have its process formally described somewhere easily found? Or have I just flubbed my search roll?

-

7 hours ago, zeroibis said:

There is no data that is not within the pool. All data is within the pool or within a poll that is part of this pool. You can see this indicated in the images below.

Incorrect. There may be no data that is not within _a_ pool, but data that is within a pool is not necessarily also within _the_ pool made using that pool.

For example:

You have disks A, B, C and D. You have pools E (A+B) and F (C+D), and pool G (E+F).

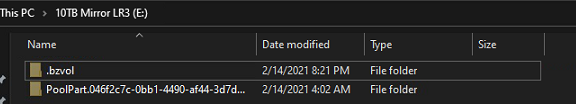

A and B will each contain a root hidden folder PoolPart.guidstring (together containing the contents of Pool E).

C and D will each contain a root hidden folder PoolPart.guidstring (together containing the contents of Pool F).

E and F will each contain a root hidden folder PoolPart.guidstring (together containing the contents of Pool G).

(Note that "guidstring" is a variable that may differ between poolparts.)

Thus you will have a hidden folder A:\PoolPart.guidstring\PoolPart.guidstring, where data placed in A:\PoolPart.guidstring outside of A:\PoolPart.guidstring\PoolPart.guidstring will show up as Data in E and Other in G, while data placed in A:\ outside of A:\PoolPart.guidstring will show up as Other in both E and G.
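The nesting rule for disk A in the E/G example can be sketched by counting how many PoolPart folders a path sits inside. The function names and the description strings are made up for illustration; how the innermost level shows up in E isn't covered above, so the sketch only says it holds G's contents.

```python
from pathlib import PureWindowsPath


def poolpart_depth(path: str) -> int:
    """Count the leading PoolPart.* folders directly under the drive root."""
    parsed = PureWindowsPath(path)
    # Skip the drive root (e.g. 'A:\\') if the path has one.
    parts = parsed.parts[1:] if parsed.anchor else parsed.parts
    depth = 0
    for part in parts:
        if not part.lower().startswith("poolpart."):
            break
        depth += 1
    return depth


def describe(path: str) -> str:
    """Describe where data at this path on disk A counts, per the example."""
    depth = poolpart_depth(path)
    if depth == 0:
        return "Other in E, Other in G"
    if depth == 1:
        return "Data in E, Other in G"
    return "contents of G"
```

For instance, `describe(r"A:\PoolPart.aaa\photos\cat.jpg")` falls into the "Data in E, Other in G" case.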

Can you provide more information on your balancer/placement settings? I would suggest it's possible that your chosen rules and your specific data sets have created a situation where DrivePool can only fulfill a triggered requirement in a way that sets up a different requirement to be triggered on the next pass that will in its own fulfillment in turn trigger the previous requirement.

Alternatively, if you want DrivePool's developer to inspect your situation personally (well, remotely) to see if it is due to a bug and/or whether your suggested fix would be feasible, you could use https://stablebit.com/contact to open a support ticket.

-

Your pooled files should still be on the physical disks (in hidden PoolPart.* folders) even if something has removed the virtual pool "drive".

If the pool drive (S:\) is still in Disk Management but just missing its drive letter, you could try right-clicking it and Change Drive Letter to Add a new letter.

If not, you could try uninstalling then reinstalling DrivePool.

If that doesn't work either, you could try clicking the Cog icon in the DrivePool GUI, then selecting Troubleshooting, then Reset all settings...

-

"Other" data in a pool usually indicates non-pool data taking up space in one of the volumes that make up the pool.

E.g. if you had a pool that had 1 TB of data in it, and you added that pool as a "disk" for another pool, then that 1 TB would show up as "Other" in the latter pool.

I don't know why that would be affecting the balance, however.

-

I imagine you'd need at least the Cove file system drivers, yes. I don't know what else you'd need. Perhaps you could request a PE build (something like how the trial becomes read-only after expiring) via the Stablebit contact form?

-

A1) Once you've selected "Fill up the disks in the order specified below", you can then tick the Prioritize checkbox in the Unduplicated and/or Duplicated tabs to select the desired order (note: if you tick it in the former tab first, it will also tick the corresponding checkbox in the latter tab for some reason, but if you're not actually using duplication then the latter tab is irrelevant).

A2) On the triangles for each drive in the pool (as indicated by the tool tips when the mouse cursor is resting on them):

- Dark blue downwards triangle indicates balancing target for existing un-duplicated files

- Light blue downwards triangle indicates balancing target for existing duplicated files

- Dark red upwards triangle indicates placement limit for new un-duplicated files

- Light red upwards triangle indicates placement limit for new duplicated files

where "balancing target" is the amount of existing files DrivePool calculated should (under ideal conditions) be on that drive based on your settings, and "placement limit" is the amount of new files DrivePool will put on the drive before it tries to put them somewhere else based on your settings. It can't always achieve these goals due to files having different sizes.

-

Welcome to DrivePool!

If "Or this much free space" is ticked, it does override the percentage slider.

Prevent Drive Overfill is different to Drive Usage Limiter. The first is "what to do if a drive gets too full"; the second is "where should files go". If the former has a higher priority (is higher in the list), it will override the latter.

If you're using the All In One plugin, then both the Drive Usage Limiter and Ordered File Placement balancers should be unticked as they are redundant in such case.

The StableBit Scanner plugin balancer for DrivePool gets its data from StableBit Scanner, and is available because you've installed the latter software.

It sounds like you might want only StableBit Scanner and All In One ticked.

Will duplication repair corrupt files?

in General

Posted

DrivePool's duplication is primarily intended to help with losing a drive; if individual files are being corrupted it may or may not be able to help depending on the situation.

In this case where you know the corruption is limited to files on a single drive, if you're using pool-level duplication then the safest thing to do is let DrivePool re-duplicate the pulled drive using the rest of the drives: "Remove" the missing drive and it should proceed to re-duplicate (if not, you can use Cog icon -> Troubleshooting -> Recheck Duplication). Once you've got the pool fully duplicated again, you can format the corrupted drive (or at least delete/rename the hidden poolpart) and then re-connect it (in that order, as current versions of DrivePool will automatically attempt to re-add it to the pool and in this case you don't want that).

However, if you've got insufficient free space on the remaining disks to re-duplicate the pool, or you're certain that only the 0 KB files have been corrupted (that the remaining files on that drive are bit-for-bit intact), then you could just delete the 0 KB files, re-connect the drive and tell DrivePool to Recheck Duplication. This will certainly be quicker since the pool will have fewer files that need re-duplicating.

Short answer is it can't know. It checks each file's attributes and if they don't match then it runs a check of the content. If that also doesn't match then it alerts the user and offers them the choice of fixing it themselves or (if applicable) letting DrivePool replace the "older" instances.
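The "attributes first, content only if needed" order can be sketched like this. It is not DrivePool's actual code: which attributes it compares isn't stated here, so size and modification time are assumptions, as are the function names and the SHA-256 content check.

```python
import hashlib
import os


def file_digest(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file's content in chunks so large files don't fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_copies(path_a: str, path_b: str) -> str:
    """Compare two copies of a file: cheap attribute check, then content."""
    stat_a, stat_b = os.stat(path_a), os.stat(path_b)
    # Assumed attributes: size and modification time.
    if (stat_a.st_size, stat_a.st_mtime_ns) == (stat_b.st_size, stat_b.st_mtime_ns):
        return "attributes match"
    if file_digest(path_a) == file_digest(path_b):
        return "attributes differ, content matches"
    return "content differs"
```

Note what this order implies: if the attributes match, the content is never read, which is why a check like this can't know about corruption that leaves size and timestamp untouched.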