
Posts posted by Shane

-

You'll have to disable/uninstall DrivePool (or at least turn off all balancing) and run Recuva or whatever other tool you use on the individual drives. Don't forget to restore any recovered files to a completely different physical non-pool drive (i.e. not a drive that still has any deleted-but-needs-to-be-recovered data on it).

Then once you're satisfied you've got the files back, re-enable/install DrivePool.

-

Correct, it doesn't support software RAID (because Windows insists on Dynamic disks for that). Hardware RAID is fine.

All that matters to DrivePool itself is that it can see the NTFS (or ReFS) volumes on Basic (not Dynamic) disks that are locally (not network) attached to the system.

Doesn't matter whether that's via motherboard or expansion card, PCIE/SATA/USB/Thunderbolt/otherwise, in any combination.
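The requirements above can be restated as a small predicate. This is only a sketch: `Volume` and `can_be_pooled` are made-up names for illustration, not anything DrivePool exposes, and a hardware RAID array simply appears here as one ordinary Basic volume.

```python
from dataclasses import dataclass

# Hypothetical description of a volume, for illustration only.
@dataclass
class Volume:
    filesystem: str          # e.g. "NTFS", "ReFS", "exFAT"
    disk_type: str           # "Basic" or "Dynamic"
    locally_attached: bool   # False for network shares / mapped network drives
    bus: str = "SATA"        # SATA, USB, PCIe, Thunderbolt...: irrelevant to eligibility

SUPPORTED_FILESYSTEMS = {"NTFS", "REFS"}

def can_be_pooled(vol: Volume) -> bool:
    """Restates the requirements above; the bus/controller is deliberately ignored."""
    return (
        vol.filesystem.upper() in SUPPORTED_FILESYSTEMS
        and vol.disk_type == "Basic"
        and vol.locally_attached
    )
```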

-

Hmm. You've got the "respect real-time file placement" option ticked, and I was under the impression that the OFP plugin was real-time for new files. Try a Remeasure.

But if it's not real-time, then you'd need to choose an Automatic balancing setting other than "Do not balance automatically" so it can be triggered.

Edit: I've made a feature request to have the DrivePool GUI explicitly indicate which balancers are "real-time" and/or can force immediate balancing.

-

Check that the Ordered File Placement plugin isn't being out-prioritised by another plugin that would prefer to write to the older HDD. Also check the Balancing options (Manage Pool -> Balancing -> Settings), particularly the "Plug-in settings" and "File placement settings" sections.

-

Ouch. What are the settings on your install of Scanner, particularly Throttling?

-

Unfortunately DrivePool doesn't support Dynamic disks (if I recall correctly it could be added but would require an overhaul of the balancing engine and further work on the duplication code), so it's not compatible with Windows' software RAID implementation.

If your motherboard has even barebones RAID 0 support, that would suffice for DrivePool to "mirror" the pairs.

-

Hi vervolk. You should still attempt to activate the license on the new system anyway. If that does not automatically transfer the license, contact Support via the contact form (in the "What do you want to contact us about?" field select "An issue with licensing").

-

As you've discovered, you can manually move files at the individual drive level without immediate issues so long as the directory path stays the same. The only potential issue is that DrivePool may then not have correct measurements of the pool's state for balancing; you can remedy this by manually forcing a re-measure (Manage Pool -> Remeasure) after you're done.
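For illustration, a manual move of this kind could be scripted as below. It assumes each pooled drive keeps its pool content in a hidden `PoolPart.*` folder at the drive root and that you pass in those folder paths yourself; `move_between_poolparts` is a hypothetical helper, not part of DrivePool, and you would still want to Remeasure afterwards.

```python
import os
import shutil

def move_between_poolparts(src_poolpart: str, dst_poolpart: str, rel_path: str) -> str:
    """Move one file from one drive's poolpart folder to another's,
    keeping the same relative directory path inside the pool."""
    # Strip leading separators so os.path.join can't discard the poolpart prefix.
    rel_path = rel_path.lstrip("\\/")
    src = os.path.join(src_poolpart, rel_path)
    dst = os.path.join(dst_poolpart, rel_path)
    if os.path.exists(dst):
        # Refuse rather than overwrite: a same-named file there may be a duplicate copy.
        raise FileExistsError(dst)
    # Recreate the identical folder structure on the destination drive.
    os.makedirs(os.path.dirname(dst), exist_ok=True)
    shutil.move(src, dst)
    return dst
```

Usage would look like `move_between_poolparts(r"E:\PoolPart.xxxx", r"N:\PoolPart.yyyy", r"tmp\today's project\notes.txt")`, where the drive letters and poolpart names are placeholders for your own.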

Alternatively you could use Manage Pool -> Balancing -> File Placement to set rules for folders and/or files (e.g. "\tmp\today's project\*" or whatever) that you wish DrivePool to move to/from the NVMe drive; you could just (un)tick drives in the rule and then manually start a balancing pass to have it move files accordingly before and after your work.
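To get a feel for which files a wildcard rule such as the one above would catch, Python's `fnmatch` can serve as a stand-in. This is an assumption, not DrivePool's actual matching logic: in `fnmatch`, `*` also matches across folder separators, which DrivePool's rules may or may not do.

```python
from fnmatch import fnmatchcase
from ntpath import normcase

def rule_matches(pattern: str, pool_path: str) -> bool:
    """Rough stand-in for a File Placement wildcard rule (illustrative only)."""
    # Windows paths are case-insensitive, so lower-case and normalise
    # separators on both sides before comparing.
    return fnmatchcase(normcase(pool_path), normcase(pattern))
```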

-

Hi! DrivePool only works with NTFS or ReFS formatted drives (there's also an advanced setting to allow both in the same pool but it's not recommended outside of migrating between the two formats); exFAT is not supported.

-

If you're getting ongoing file/directory corruption, that could indicate physical problems with your hardware. You might wish to make sure you have intact backups, as well as use StableBit Scanner or another SMART/diagnostic tool to check the disks in your pool.

-

To clarify: when I say recommendations, I'm not asking for a spiel, just an "X is what I'm using" with an implied "it hasn't bitten me (yet)", like how you mentioned which defragger you use.

Also, thank you for highlighting the impact of sector scans on drive workload limits, and welcome to the forums!

-

I'd be interested in more details on the "sector regenerator", e.g. software recommendations.

-

I'd suggest using the contact form to let StableBit know about the problem.

-

Unless the card does something very very weird, just make sure it's going to treat them as individual drives rather than an actual RAID array and DrivePool should find and recognise them.

-

Weird. Your account had been flagged, but I can't see any reason for it. Should be fixed now.

-

If you uninstall DrivePool, any existing pools will retain their content and be automatically re-detected when you reinstall DrivePool. Their duplication levels should be kept, but you may need to recreate any custom balancing/rules you had for them.

-

No need to worry: DrivePool is not affected by changing which bay any of its drives is in. So long as it can see the drives locally, you're good.

-

Nice catch. I'm guessing the large difference in the example screenshots is because you have NTFS compression enabled?

-

Generally well-developed. Scanner will also still report - as you found - that the drive itself is flagging a problem even if Scanner can't recognise exactly what the problem is.

-

The problem is that SMART is not a strictly formalised standard; every manufacturer has their own implementation (sometimes differing even between models) using different scales, ranges and formats. Manufacturer A might use values between 100 and 199 at location 235 to measure an aspect of their drives, while Manufacturer B might use values between 0 and 65535 at location 482 for that same aspect; and those values may not even be in the same scale (e.g. Celsius vs kelvin vs Fahrenheit for temperature). And so on ad nauseam.

This means that if the particular SMART software you're using doesn't have a good enough translation of whatever proprietary implementation it encounters to know that A=B means "bad" (and also to track things like "not bad yet, but getting worse"), it has to rely entirely on whether (and when) the drive itself flags the results as "bad".
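A toy illustration of that translation problem, using the hypothetical Manufacturer A/B temperature example above. The vendor names, attribute locations, scales and the 60 °C limit are all invented; the point is the fallback branch, where software without a translation can only repeat what the drive itself reports.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical per-vendor knowledge: where the temperature lives and how to
# convert the raw value to degrees Celsius. All values here are made up.
@dataclass
class TempMapping:
    location: int
    to_celsius: Callable[[int], float]

VENDOR_TABLE = {
    "VendorA": TempMapping(235, lambda raw: raw - 100),           # stored as 100..199
    "VendorB": TempMapping(482, lambda raw: raw / 100 - 273.15),  # hundredths of a kelvin
}

def assess_temperature(vendor: str, attributes: dict[int, int],
                       drive_says_failing: bool, limit_c: float = 60.0) -> str:
    mapping = VENDOR_TABLE.get(vendor)
    if mapping is None or mapping.location not in attributes:
        # No translation available: all we can do is trust the drive's own flag.
        return "bad (drive-reported)" if drive_says_failing else "unknown (no translation)"
    celsius = mapping.to_celsius(attributes[mapping.location])
    if drive_says_failing or celsius > limit_c:
        return "bad"
    return "ok"
```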

-

Hmm. Tricky. I've done some testing: the SSD Optimizer balancer conflicts with the requirement to keep certain files on the SSDs, because the Optimizer wants to flush the cache disks despite the File Placement rules (even if it doesn't do so on the first balancing run, it will on a subsequent run), regardless of the File placement settings in the Settings tab. I'm not sure whether that's a bug or working as intended... though I suspect the former.

I'll play around with it some more and see what I can come up with.

EDIT: @Christopher (Drashna) is it possible to get the SSD Optimizer balancer to play nice with the File Placement rules? I've tried the various permutations possible in the Manage Pool -> Balancing -> Settings -> File placement settings section, but even when it respects them on the first subsequent balancing pass, it fails to do so on later balancing passes, i.e.:

1. I set up a pool with the SSDs marked as SSDs in the SSD Optimizer balancing plug-in.

2. I create an "SSD" folder and set up a File Placement rule that any file in that folder should be kept on the SSDs.

3. In File placement settings I tick "Balancing plug-ins respect the file placement rules".

4. I copy files to the pool; they go onto the SSDs per the real-time requirement of the SSD Optimizer.

5. I start a balancing pass; the File Placement rules are respected and the matching files stay on the SSDs while the rest are moved to the HDDs.

6. I start another balancing pass; the File Placement rules are ignored and the SSD Optimizer flushes all files on the SSDs to the HDDs.

Step 6 shouldn't be happening, should it? It doesn't seem to matter whether the other File placement settings are ticked or unticked.
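The expected behaviour in the steps above can be written as a toy model. These are hypothetical functions, not how the SSD Optimizer is actually implemented: a flush that skips files pinned by a File Placement rule, so a second pass run straight after the first moves nothing.

```python
from fnmatch import fnmatchcase

def flush_plan(files_on_ssd: set[str], pinned_patterns: list[str]) -> set[str]:
    """Files a cache flush should move to the HDDs: everything not pinned by a rule."""
    return {
        f for f in files_on_ssd
        if not any(fnmatchcase(f, p) for p in pinned_patterns)
    }

def balancing_pass(ssd: set[str], hdd: set[str], pinned_patterns: list[str]) -> None:
    """Move unpinned files from the SSD set to the HDD set, in place."""
    moving = flush_plan(ssd, pinned_patterns)
    ssd.difference_update(moving)
    hdd.update(moving)
```

In this model a pass is idempotent: once the unpinned files are gone, `flush_plan` returns an empty set, which is what step 6 should look like.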

-

Something like "If user rate limit exceeded, defer uploads for ____ minutes" (and another for downloads) ?
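A minimal sketch of how such an option might behave, with one instance each for uploads and downloads. `RateLimitBackoff` is a hypothetical class, not part of CloudDrive; the deferral length is left as a parameter, just as the minutes are left blank in the suggestion.

```python
from typing import Optional

class RateLimitBackoff:
    """After a 'user rate limit exceeded' error, hold off one direction
    (uploads or downloads) for a user-chosen number of minutes."""

    def __init__(self, defer_minutes: float):
        self.defer_seconds = defer_minutes * 60
        self.resume_at: Optional[float] = None  # None = not currently deferred

    def on_rate_limited(self, now: float) -> None:
        # Restart the deferral window from the moment of the error.
        self.resume_at = now + self.defer_seconds

    def may_transfer(self, now: float) -> bool:
        return self.resume_at is None or now >= self.resume_at
```

In a transfer loop you would call `on_rate_limited(time.monotonic())` when the provider returns the rate-limit error, and check `may_transfer(time.monotonic())` before starting each transfer.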

-

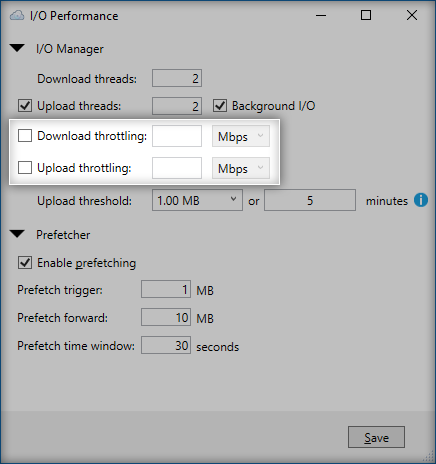

Perhaps submit a feature request for a daily upload limit option in CloudDrive, and in the meantime maybe set the upload throttling speed to about 80 Mbps to avoid hitting the provider's daily quota limit?
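The throttle figure is simple arithmetic: a daily quota spread over the seconds in a day. The sketch below assumes decimal units (1 GB = 10^9 bytes, 1 Mbps = 10^6 bits/s) and uses illustrative function names; plug in your provider's actual quota, since none is assumed here. For comparison, a sustained 80 Mbps works out to 864 GB per day.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def max_sustained_mbps(daily_quota_gb: float) -> float:
    """Highest constant upload speed (Mbps) that stays within a daily quota (GB)."""
    bits_per_day = daily_quota_gb * 1e9 * 8
    return bits_per_day / SECONDS_PER_DAY / 1e6

def daily_upload_gb(mbps: float) -> float:
    """Data uploaded in 24 hours at a constant speed, in GB."""
    return mbps * 1e6 / 8 * SECONDS_PER_DAY / 1e9
```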

-

I'm on the latest stable release (2.2.5.1237) for my backup machine and the latest beta release (2.3.0.1244) for my main server; haven't encountered any problems with either.

Possible to remove drive including its files from pool?


In order:

Based on my testing, "dpcmd ignore-poolpart" does not wait for open file handles to be closed. The results are as per bullet point four above.

Renaming a poolpart will also 'disconnect' that drive (volume) from the pool, but poolparts cannot be renamed while they contain open file handles, so this may be a partial workaround (partial, because the pool will still become read-only until you formally remove the 'missing' drive via the GUI or via dpcmd).

Can you pause the copying at all? Alternatively, can you at least throttle the copying speed from the source? Then you could have the source filling the pool while you empty the pool to destination drives that you swap out as they fill. In other words, the drives in the pool act as a buffer, so the source never needs to stop copying so long as you can empty the pool faster than the source can fill it.
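Whether the buffer approach holds up comes down to comparing two rates. A rough sketch, assuming constant rates (the function name and units are illustrative): if the pool drains at least as fast as it fills, the source never has to stop; otherwise the pool's free space determines how long you have.

```python
import math

def hours_until_pool_full(free_gb: float, fill_gb_per_h: float,
                          drain_gb_per_h: float) -> float:
    """Hours before the pool runs out of space, given the source's write rate
    into the pool and the rate you copy out of it to the swap-out drives."""
    net = fill_gb_per_h - drain_gb_per_h
    if net <= 0:
        return math.inf  # draining keeps up, so the source never has to stop
    return free_gb / net
```

Use the average drain rate including the time spent swapping destination drives, since nothing is copied out of the pool during a swap.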

Edit: A third option might be to make a feature request for StableBit to add an advanced setting (advanced because it would be a 'use at own risk' setting) to DrivePool that enables forcing pools to remain writable even when a drive is missing. I don't know how feasible that would be.