Posts posted by Tell

-

-

I just submitted a support request using the link you provided @srcrist, thanks.

I can see that my version of the file is signed by the developers, so it seems highly likely that it’s a false positive, but it’s very worrying that you’re all getting different results with your versions of what should be the same file.

-

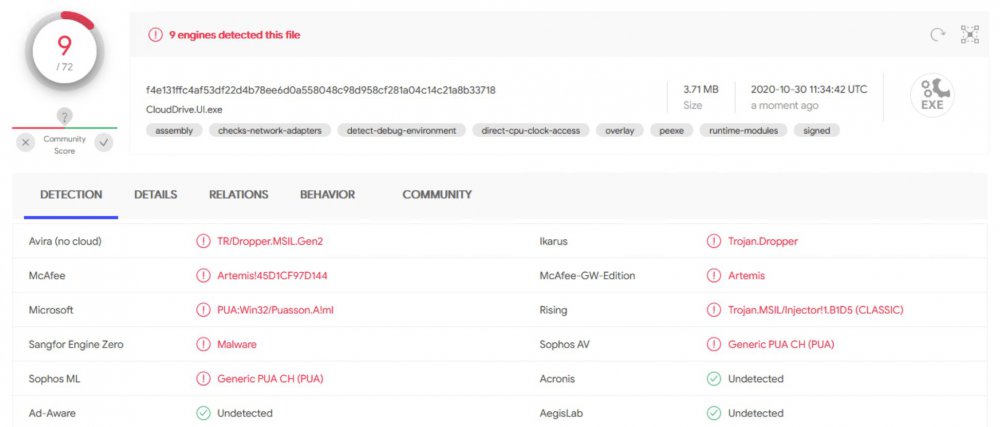

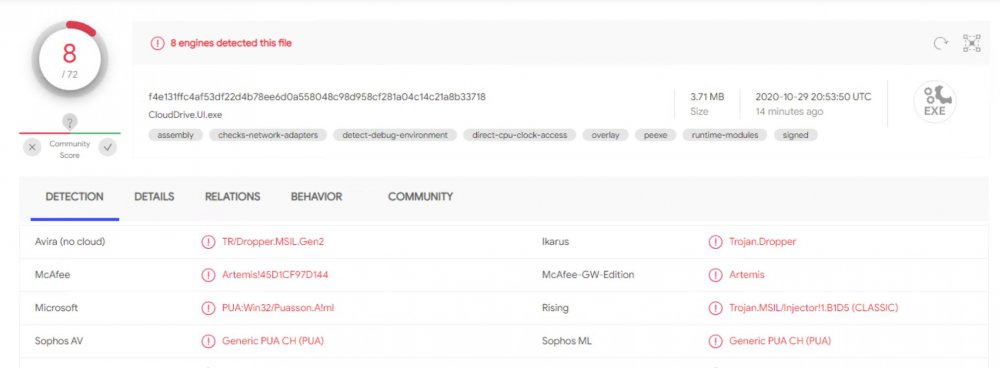

One more engine on VirusTotal is now detecting this as malware, bringing the total to 9 engines.

I’ll reach out to CoveCube to bring this to their attention (or do we have @Christopher (Drashna) here now?)

-

So, the latest release of CloudDrive.UI.exe is being detected by multiple AV engines as various forms of malware or potentially unwanted application. Anybody else seeing this?

Have a look at the VirusTotal report of CloudDrive.UI.exe. I was alerted by Windows Defender.

I’d welcome other members of the community to submit their own CloudDrive.UI.exe to VirusTotal to see if I’m the only one getting this.

-

-

16 hours ago, RetroGamer said:

They also say "unlimited storage" But if you read the fine text in norwegian they say that anything over 10tb is what they call abusive and you will not be allowed to upload more than that.

Just a heads up

Other than that, its great.

Well, they reserve the right to do so, but they haven't capped me, and I'm well in excess of 10 TB! :-)

-

Well, sort of, but not really. rclone did it using the jottaCLI. Any possibility that there might be something here for CloudDrive?

-

I’d like to suggest that Covecube revisits Jottacloud as a possible provider for StableBit CloudDrive. Jottacloud is a Norwegian cloud sync/cloud backup provider with an unlimited storage plan for individuals and a very strong focus on privacy and data protection.

The provider has been evaluated before, but I’m not sure what came of it (other than that support wasn’t added).

Jottacloud has no officially documented API, but they have stated officially that they don’t oppose direct API access by end users or applications, as long as the user is aware that it isn’t supported. There is also a fairly successful implementation in jottalib, which I believe also has FUSE support.

-

Actually, there isn't. Their unlimited plan is limited at 10 TB. See https://www.jottacloud.com/terms-and-conditions/ section 15, second paragraph.

I’d like to chip in that this is no longer the case. Jottacloud now limits storage and bandwidth usage to “normal individual usage”. Specifically:

Users with an Individual account may use the Service for normal individual usage. Although Jotta does not limit bandwidth or storage on Unlimited subscription plans, we reserve the right to limit excessive use and abuse of the Service. If a user’s total storage and network usage greatly exceeds the normal usage of an average Jotta user, and/or indicates that the Service is being uses for other than normal individual use, this may in some cases be deemed as abuse of the Service.

In these abovementioned cases, we reserve the right to offer an alternative Jotta subscription, or in some cases delete the user account and/or user data. The user will be contacted by us with a suggested solution or notice of action in such events.

-

Not sure if this is related but maybe it will be helpful to someone

I had an issue where my cache drive was filling up and it ended up being because the ACD software was running and going crazy trying to sync on it's own and had made a boat load of its own files that filled the drive. I exited the amazon software (In fact i ended up uninstalling it) deleted all of the random files it made and havent had an issue since.

Thank you for your input! Unfortunately, the ACD software is not related to the issue being discussed in this thread. This thread details a problem that appears when an amount of data greater than the size of the drive the cache resides on is written (copied) to the CloudDrive drive.

-

After some testing, I can confirm that the .777 build does NOT resolve this issue.

I understand that the issue is caused by how NTFS handles sparse files. Why is this issue so "rare"? Is it only a small subset of users that experience this bug? Does not everybody see this happen when they copy more data to cloud drive than the size of the cache?

For me, this makes CloudDrive incredibly difficult to use – I'm trying to upload about 8 TB of data, and given a 480 GB SSD as a cache drive, you can do the math on how many reboots I have to do, how many times I have to re-start the copy and how often I need to check the entire CloudDrive for data consistency (as CloudDrive crashes when the cache drive is full).

The reserved space is released when the CloudDrive service is restarted. This indicates that the deallocated blocks are freed when the file handles are closed from CloudDrive. To me, it seems like a piece of cake to just make CloudDrive release and re-attach cache file handles on a regular basis – for example, for every 25% of cache size writes and/or when the cache drive nears full or writes are beginning to get throttled.
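To make the suggestion above concrete, here is a minimal sketch of the trigger logic I have in mind. Everything in it is hypothetical: `HandleRecyclePolicy`, its fields, and the 10% free-space threshold are names and values I made up for illustration, and I have no idea how CloudDrive manages its cache handles internally. Only the "every 25% of cache size" figure comes from my post.

```python
from dataclasses import dataclass


@dataclass
class HandleRecyclePolicy:
    """Decides when cache file handles should be closed and reopened.

    Hypothetical helper for illustration only; not a CloudDrive API.
    """
    cache_size_bytes: int
    write_fraction: float = 0.25      # recycle after this share of the cache size has been written
    min_free_fraction: float = 0.10   # assumed threshold for "cache drive nears full"
    written_since_recycle: int = 0

    def record_write(self, nbytes: int) -> None:
        """Account for bytes written to the cache since the last recycle."""
        self.written_since_recycle += nbytes

    def should_recycle(self, free_bytes: int, total_bytes: int,
                       throttled: bool = False) -> bool:
        """True if any of the three triggers from the post fires."""
        wrote_enough = (self.written_since_recycle
                        >= self.write_fraction * self.cache_size_bytes)
        nearly_full = free_bytes < self.min_free_fraction * total_bytes
        return wrote_enough or nearly_full or throttled

    def mark_recycled(self) -> None:
        """Reset the write counter after handles were released and reopened."""
        self.written_since_recycle = 0
```

The caller would feed in write sizes and the cache drive's free/total bytes, and release and reattach the handles whenever `should_recycle` returns true.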

-

Actually, there is! Jottacloud a Norwegian cloud provider have unlimited speed, space and they are even a bit cheaper than ACD.

Actually, there isn't. Their unlimited plan is limited at 10 TB. See https://www.jottacloud.com/terms-and-conditions/ section 15, second paragraph.

-

I'm currently testing to see if the .777 build has resolved this issue.

-

I doubt it, because once the service starts up, it would open the handle back up. And the reserved space will decrease over time, as well.

Turns out running…

    net stop CloudDriveService
    net start CloudDriveService

… does actually resolve the issue and release the reserved space on the drive (in effect accomplishing the same thing as a reboot), allowing me to continue adding data. It is clear to me that the issue is resolved when CloudDrive releases/flushes these sparse files. The issue does not reappear until after I have added data again. While the problem might be NTFS-related, I would claim that it would be relatively simple to mitigate by having CloudDrive release these file handles from time to time so that the file system can catch up on how much space is occupied by the sparse files. It makes sense to me that Windows, to improve performance, might not compute new free space from sparse files until after the file handles are released. After all, free disk space is a metric that mostly needs to be accurate to prevent overfill, not in the other direction.

TL;DR: The problem is solved by restarting CloudDriveService, which flushes something to disk. CloudDrive should do this on its own.
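Until that happens, the workaround can be scripted. Below is a rough sketch, not a tested tool: the service name `CloudDriveService` is the one from the commands above, while the function names, the 10% threshold, and the idea of polling free space are my own assumptions. It would need to run from an elevated prompt, since stopping and starting services requires administrator rights.

```python
import shutil
import subprocess

SERVICE_NAME = "CloudDriveService"  # service name as used in the post above


def restart_commands(service: str = SERVICE_NAME) -> list[list[str]]:
    """The two commands from the post, as argument lists."""
    return [["net", "stop", service], ["net", "start", service]]


def restart_if_low(cache_root: str, min_free_fraction: float = 0.10,
                   run=subprocess.run, usage=shutil.disk_usage) -> bool:
    """Restart the service when the cache drive's free share drops too low.

    `min_free_fraction` is an assumed threshold. `run` and `usage` are
    injectable so the logic can be tried without touching a real service.
    Returns True if a restart was issued.
    """
    stats = usage(cache_root)
    if stats.free / stats.total >= min_free_fraction:
        return False
    for cmd in restart_commands():
        run(cmd, check=True)  # raises CalledProcessError if a command fails
    return True
```

Calling `restart_if_low("S:\\")` from a scheduled task would be one way to use it, with `S:` being the cache drive in my setup.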

-

Drashna and friends,

I'm still experiencing the issue, which is preventing me from uploading more than the size of my cache drive (480 GB) before I have to reboot the computer. For the cache drive, which hosts nothing but the CloudDrive cache, fsutil gives:

    C:\Windows\system32>fsutil fsinfo ntfsinfo s:
    NTFS Volume Serial Number :       0x________________
    NTFS Version :                    3.1
    LFS Version :                     2.0
    Number Sectors :                  0x0000000037e027ff
    Total Clusters :                  0x0000000006fc04ff
    Free Clusters :                   0x0000000006fb70c3
    Total Reserved :                  0x0000000006e6d810
    Bytes Per Sector :                512
    Bytes Per Physical Sector :       512
    Bytes Per Cluster :               4096
    Bytes Per FileRecord Segment :    1024
    Clusters Per FileRecord Segment : 0
    Mft Valid Data Length :           0x0000000000240000
    Mft Start Lcn :                   0x00000000000c0000
    Mft2 Start Lcn :                  0x0000000000000002
    Mft Zone Start :                  0x00000000000c00c0
    Mft Zone End :                    0x00000000000cc8c0
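For anyone who doesn't read hex cluster counts fluently, here is a small sketch that converts the numbers above into sizes. It assumes that "Total Reserved" is counted in clusters like the neighbouring fields; the variable names are mine.

```python
# Values copied from the fsutil output above.
BYTES_PER_CLUSTER = 4096
total_clusters = int("0x0000000006fc04ff", 16)
free_clusters = int("0x0000000006fb70c3", 16)
reserved_clusters = int("0x0000000006e6d810", 16)  # assumed to be in clusters

GIB = 1024 ** 3


def to_gib(clusters: int) -> float:
    """Convert a cluster count to GiB using the volume's cluster size."""
    return clusters * BYTES_PER_CLUSTER / GIB


# Free clusters that are not covered by the reservation.
unreserved_free = free_clusters - reserved_clusters

print(f"Volume size:        {to_gib(total_clusters):8.1f} GiB")
print(f"Reported free:      {to_gib(free_clusters):8.1f} GiB")
print(f"Reserved:           {to_gib(reserved_clusters):8.1f} GiB")
print(f"Free minus reserve: {to_gib(unreserved_free):8.1f} GiB")
print(f"Reserved share of free clusters: {reserved_clusters / free_clusters:.1%}")
```

In other words, almost the whole volume counts as free, yet nearly all of that free space is reserved, which fits the "drive is full" behaviour.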

Since a reboot solves the issue, could it be that CloudDrive needs to release the write handles on the (sparse) files so that the drive manager will let go of the reservation? Did https://stablebit.com/Admin/IssueAnalysis/27122 uncover anything?

Best,

Tell

-

-

To clarify, all of the space is freed up when unmounting the CloudDrive disk?

"Used space" on the physical drive is returned to near-zero when I "Detach" the drive from CloudDrive. This also clears the red warning CloudDrive throws. I can then "Attach" the drive again and resume uploading, until the physical cache drive again is full.

-

Drashna,

Thank you for your feedback. Yes, I suspect this is somehow related to the handling of sparse files, as I wrote.

VSS is totally disabled on the server in question (the service is not running). On Windows Server, Shadow Copies appear as part of the "Shared Folders" MMC Snap-In (under All Tasks). I've attached a screenshot confirming that the service is disabled in general and on this drive in particular.

I also intuitively suspected VSS of doing this (since "Used space" on disk ≠ "Size on disk" of the folder). However, as far as I can reason, if VSS were the culprit, detaching the CloudDrive disk should not immediately free all the space again. So VSS seems to be ruled out.

Any other ideas?

-

Hi all,

I've run into a situation where the local cache drive is getting filled up despite having a fixed local cache. Configuration is:

- StableBit CloudDrive v 1.0.0.634 BETA

- 4 TB drive created on Amazon Cloud Drive with 30 MB chunk size, custom security profile

- Local cache set to 6 GB FIXED on a 120 GB SSD (the SSD is exclusive to CloudDrive - there's absolutely nothing else on this drive)

- Lots of data to upload

When the local cache is filled up (6 GB) CD starts throttling write requests, as it should be doing (hooray for this feature, by the way). However, when the total amount of data uploaded is nearing the size of the cache drive, CloudDrive starts slowing down until it completely stops accepting writes and throws a red warning message saying that the local cache drive is full.

This is the CloudPart-folder after a session of having uploaded approx 30 GB of data.

This is the local cache disk at the same time as the screenshot above. Remember, there is absolutely nothing on this drive other than the CloudPart-folder.

Selecting "Performance --> Clear local cache" does nothing. Detaching and re-attaching the drive clears and empties the local drive, reducing the "Used space" to almost nothing, and I can again start filling the cloud drive with data until the cache drive runs full again.

As is obvious, a discrepancy exists between the amount of data reported as "Used space" on the SSD and the "Size on disk" of the CloudPart folder. My guess is that this is some sort of bug related to the handling of NTFS sparse files. Any ideas?
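If anyone wants to check for the same discrepancy on their own system, something along these lines should show it. This is a sketch under assumptions: the function names are made up, the Windows branch relies on the Win32 call `GetCompressedFileSizeW` to get the allocated (sparse-aware) size of each file, and I have only reasoned through that branch rather than run it against a real CloudPart folder.

```python
import os
import shutil


def allocated_bytes(path: str) -> int:
    """On-disk allocation of one file, sparse-aware where the OS reports it."""
    st = os.stat(path)
    if hasattr(st, "st_blocks"):            # POSIX: st_blocks is in 512-byte units
        return st.st_blocks * 512
    import ctypes                           # Windows: ask the Win32 API instead
    from ctypes import wintypes
    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)
    get_size = kernel32.GetCompressedFileSizeW
    get_size.argtypes = [wintypes.LPCWSTR, ctypes.POINTER(wintypes.DWORD)]
    get_size.restype = wintypes.DWORD
    high = wintypes.DWORD(0)
    low = get_size(path, ctypes.byref(high))
    if low == 0xFFFFFFFF and ctypes.get_last_error() != 0:
        raise ctypes.WinError(ctypes.get_last_error())
    return (high.value << 32) | low


def folder_report(folder: str) -> dict:
    """Compare a folder's logical and allocated size with the volume's used space."""
    logical = allocated = 0
    for root, _dirs, files in os.walk(folder):
        for name in files:
            path = os.path.join(root, name)
            logical += os.path.getsize(path)
            allocated += allocated_bytes(path)
    return {
        "logical": logical,                               # "Size"
        "allocated": allocated,                           # "Size on disk"
        "volume_used": shutil.disk_usage(folder).used,    # "Used space" of the drive
    }
```

On a drive that holds nothing but the cache folder, `volume_used` being far larger than `allocated` is the discrepancy described above.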

-

I created a test with a 200 GB disk. I created the 200 GB disk on Amazon Cloud Drive, with a local cache size of 1 GB and the other options set at their defaults, except that I did not format it or assign a drive letter.

I then formatted it as a block device with VeraCrypt (one full pass of ciphertext) and filled the disk with cleartext data (a second almost-complete pass of ciphertext). StableBit CloudDrive began uploading, and the Amazon Cloud Drive web interface confirms that I have so far uploaded about 90 GB of chunks. This gave the same behaviour in the CloudDrive UI as seen previously - take a look at http://imgur.com/Zqhlo7H

The screenshot is taken after about 24 hours of uploading since the last time I unmounted the VeraCrypt volume.

I will destroy the drive, enable logging and recreate the problem.

-

I hate to ask, but could you see if this behavior occurs on a disk that isn't protected by VeraCrypt or the like?

I'll test that.

If it doesn't, then it may be an interaction between the two that is causing the odd behavior.

I'll be seeing about replicating this issue, as well, but on the chance that it's hardware/ISP specific...

I created a new 500 GB disk without formatting or assigning a drive letter, then whole-disk-encrypted it with VeraCrypt again. I did not fill the encrypted volume with data. This means that the entire CloudDrive has had ciphertext (of cleartext 0) written to it exactly once. It began uploading immediately, and the StableBit CloudDrive UI reports that over 91 GB has been uploaded since my previous post.

To isolate the problem further, I will destroy this drive and then re-create and fill it with data (so that it is written to twice). In the interest of time I think I will do it with a 250 GB disk.

And if it is still showing the "to upload" and no cloud content, then something is clearly going wrong here.

Could you enable logging and let it run for a bit?

http://wiki.covecube.com/StableBit_CloudDrive_Log_Collection

I will do that after conducting the 250 GB filled cleartext test.

-

Yes. By default, it's treated as a block storage solution. However, the software is file system aware (at least for NTFS) and attempts to pin the MFT and other NTFS data (including directory entries).

However, using VeraCrypt may interfere with this, depending on exactly what is going on.

I'd say it may be an interaction, but if the drive has been unmounted (in VeraCrypt) and essentially untouched, it should be uploading, even slowly.

The VeraCrypt volume should still resemble an NTFS volume in layout, so if CloudDrive pins the area (beginning?) of the block device that is normally used for the MFT and other metadata it should give the sought-after performance improvement. The VeraCrypt documentation states that encryption offsets the cleartext drive blocks by 131072 bytes in the ciphertext. There is also some trim from the end of the volume. Anyway.

You have said that the drive itself is 1TB and that the cache size is 100GB, right? I'm pretty sure that's the case (as listed in the first post).

Correct.

The size on disk is right, if it's not really uploading, or has only uploaded very small amount, as it will be kept on disk until it's able to be uploaded.

The Amazon Cloud Drive web interface reports that I have uploaded 340 GB now (my Amazon Cloud Drive is empty save for this CloudDrive disk). This has increased from the original 80 GB I reported in the first post. Clearly, CloudDrive has spent a lot of time and bytes uploading something, but apparently it's not reported in the UI or acknowledged by reducing the "size on disk" of the CloudPart.xxx-folder.

The StableBit CloudDrive UI still shows no data in the cloud (right pie chart is all-local) and the left pie chart shows "To upload: 1 TB".

Though, a good question here, is what is your upload speed?

80 Mbit/s from the ISP. The upload speed to Amazon in the StableBit CloudDrive UI varies between 2 and 15 Mbit/s.

At this point I will destroy this disk and re-create it to test if there is some improvement from a second attempt. Maybe I will test with a smaller disk to see if there is any change.
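For a sense of scale on the upload speeds mentioned above, here is a quick back-of-the-envelope sketch. It assumes 1 TB means 10^12 bytes and ignores protocol overhead, throttling and retries, so real uploads will take longer; `upload_days` is just a name I picked.

```python
def upload_days(size_bytes: int, mbit_per_s: float) -> float:
    """Days needed to push size_bytes at a sustained rate given in Mbit/s."""
    seconds = size_bytes * 8 / (mbit_per_s * 1_000_000)
    return seconds / 86_400


ONE_TB = 10 ** 12  # assumed decimal terabyte

# 2 and 15 Mbit/s are the observed range in the UI, 80 Mbit/s is the ISP line speed.
for speed in (2, 15, 80):
    print(f"{speed:>3} Mbit/s: {upload_days(ONE_TB, speed):5.1f} days for 1 TB")
```

At the rates the UI shows, a full 1 TB pass is a matter of days to weeks, so a large "To upload" figure is expected for a while. What is not expected is the cloud pie chart staying empty while hundreds of GB have already arrived at Amazon.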

-

Hi Drashna!

Thanks for getting back to me.

I actually didn't make CloudDrive format it and assign a drive letter; I had the volume full-disk-encrypted with VeraCrypt which I then loaded with some test data. I take it that this means that absolutely every bit on the physical drive has been touched at least once in addition to the data I later wrote to it. The volume has been unmounted (untouched) for the last 24 hours. Is CloudDrive filesystem-aware, or is the drive treated as a block storage device?

The hidden "CloudPart.xxx-"-folder on one of my drives shows that both "Size" and "Size on disk" are the same and equal the drive size at 1 TB.

CloudDrive has been running continuously since my previous post. It has shown some "blue" and some "orange" notifications, but as they are infrequent I assume that these are the result of normal network bottlenecks and the like. The right-hand pie chart still shows all data as "Local". The Amazon Cloud Drive web interface shows that I have uploaded about another 80 GB of data for a total of 160 GB used space in the cloud.

I'm running version 1.0.0.463 BETA.

Thanks a lot!

-

Hi all!

First-time user of CloudDrive, still in the trial. Considering going for the bundle but want to make sure that it works before I purchase.

I'm wondering whether CloudDrive is actually moving any data or not. I'm trying to get this to work with Amazon Cloud Drive (yes, experimental and throttled, I know). I've created a 1 TB drive with 20 MB chunk size and a 100 GB local cache. After the drive was created I almost filled it up, then deleted most of that data, and then filled the drive again to about half capacity (the point here is that I estimate that I've totalled more than 1 TB of writes to it). The last writes to the drive finished about five hours ago.

CloudDrive has been showing various upload speeds between 1 Mbit/s and 10 Mbit/s since I started writing to the drive. The cloud provider's web interface confirms that I've so far managed to upload about 80 GB of chunks. The right-hand pie chart in CloudDrive is still showing all of my data as "Local" and nothing as stored in the cloud. The left-hand pie chart shows "To upload: 1 TB". I've tried turning "Background I/O" both on and off, but I haven't seen any difference (not entirely sure what this toggle does anyway). Should I not see about 80 GB of data starting to appear in the cloud here?

Thank you guys for any feedback.

CloudDrive.UI.exe detected as malware by multiple antivirus engines

in General

Posted

@Tetradi Your file is triggering with the same pattern as mine ("PUA:Win32/Puasson.A!ml"). Let's hope CoveCube can sort this out soon.

Paging @Christopher (Drashna) and @Alex :-)