steffenmand

Posts: 418

Days Won: 20

Posts posted by steffenmand

-

2 hours ago, CDEvans said:

Disabled and re-enabled the main Google Drive API on the GSuite admin portal bud.

https://console.developers.google.com/apis

https://console.developers.google.com/apis/library?supportedpurview=project

I don't use my own API key. I use StableBit's.

-

2 hours ago, CDEvans said:

As a 'Have you tried turning it off and on again'. I disabled Google Drive API in the admin panel, then turned it back on.

Now it works again.... Not sure why that would fix it but back to basics.

What did you disable and re-enable, and where?

-

I should note that downloads and reads work just fine. Only writes fail.

-

1 hour ago, srcrist said:

I'm not really going to get bogged down with another technical discussion with you. I'm sorry.

I can only tell you why this change was originally implemented and that the circumstances really had nothing to do with bandwidth. If you'd like to make a more formal feature request, the feedback submission form on the site is probably the best way to do so. They add feature requests to the tracker alongside bugs, as far as I know.

I know the reason. I was here as well, you know.

That was back in the days when the system was single-threaded and only used 1 MB chunks.

I also never said the issue was bandwidth. I just said that internet speeds have increased and that more people could potentially download bigger chunks at a time. EDIT: the chunks are the bottleneck for me, as my HDDs hit their max IOPS. 100 MB chunks could therefore result in less I/O and thus higher speeds.

I completely agree that partial reads are a no-go, which is why I mentioned increasing the minimum download size (this could be set to the chunk size, you know).

So I agree that there is no reason to discuss it, as we both know why and when it happened. I'm just arguing that bigger chunks could now be put to good use.

P.S. I do use the support channel; however, sometimes it is good to bring things into public discussion to get other people's opinions as well.

I will be trying a 3D XPoint drive as a cache drive soon to see if that can give some more "juice" on the speed.

-

6 minutes ago, srcrist said:

No, I think this is sort of missing the point of the problem.

If you have, say, 1 GB of data, and you divide that data up into 100MB chunks, each of those chunks will necessarily be accessed more than, say, a bunch of 10MB chunks, no matter how small or large the minimum download size, proportional to the number of requested reads. The problem was that CloudDrive was running up against Google's limits on the number of times any given file can be accessed, and the minimum download size wouldn't change that because the data still lives in the same chunk no matter what portion of it you download at a time. Though a larger minimum download will help in cases where a single contiguous read pass might have to read the same file more often, it wouldn't help in cases wherein any arbitrary number of reads has to access the same chunk file more often--and my understanding was that it was the latter that was the problem. File system data, in particular, is an area where I see this being an issue no matter how large the minimum download.

In any case, they obviously could add the ability for users to work around this. My point was just that it had nothing to do with bandwidth limitations, so an increase in available user-end bandwidth wouldn't be likely to impact the problem.

I can't see how it's different for 20 MB vs 100 MB. The content needed is usually within a single chunk download anyway. Your problem arises when you do PARTIAL reads of a chunk, in which case you end up with too many actions on a file. However, I always download full chunks. So I agree that partial downloads shouldn't be possible at such a chunk size, but if I can finish 100 MB in 1 sec anyway, then it doesn't really matter for me.

-

I actually think this might be a bug!

[IoManager:214] HTTP error (InternalServerError) performing Write I/O operation on provider.

20:02:40.7: Warning: 0 : [IoManager:214] Error performing Write I/O operation on provider. Failed. Internal Error

20:02:40.8: Warning: 0 : [ApiGoogleDrive:244] Google Drive returned error (internalError): Internal Error

20:02:40.8: Warning: 0 : [ApiHttp:244] HTTP protocol exception (Code=InternalServerError).

20:02:40.9: Warning: 0 : [IoManager:244] HTTP error (InternalServerError) performing Write I/O operation on provider.

20:02:40.9: Warning: 0 : [IoManager:244] Error performing Write I/O operation on provider. Failed. Internal Error

20:02:40.9: Warning: 0 : [IoManager:214] [W] Error writing range: Offset=2.789.212.160. Length=20.971.520. Internal Error

20:02:41.1: Warning: 0 : [IoManager:244] [W] Error writing range: Offset=11.533.601.996.800. Length=20.971.520. Internal Error

The offset seems ridiculously high, which is most likely why Google is throwing an internal error, since we are way out of bounds!
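As a sanity check on the offsets in the log above, here is a minimal Python sketch that converts them to chunk numbers. It assumes that chunks are laid out back to back, so that the chunk number is simply offset divided by chunk size, and that the Length value (20.971.520 bytes, i.e. 20 MB) is the chunk size. The function names are made up for illustration and are not part of CloudDrive.

```python
def parse_dotted(value: str) -> int:
    """Parse a number that uses '.' as a thousands separator, as in the log."""
    return int(value.replace(".", ""))


def chunk_index(offset: int, chunk_size: int) -> int:
    """Chunk number for a byte offset, assuming back-to-back fixed-size chunks."""
    return offset // chunk_size


# Length from the log lines: 20 * 1024 * 1024 bytes
chunk_size = parse_dotted("20.971.520")

for raw in ("2.789.212.160", "11.533.601.996.800"):
    offset = parse_dotted(raw)
    print(f"{raw} -> chunk {chunk_index(offset, chunk_size)} ({offset / 1e9:.1f} GB into the drive)")
```

Under these assumptions the first offset lands in chunk 133 and the second in chunk 549,965, roughly 11.5 TB into the drive.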

-

Another option could be to expose it in the settings file. Then only tech-savvy people will know what to do, and they will also be aware of the requirements for the change!

-

20:09:24.7: Warning: 0 : [ReadModifyWriteRecoveryImplementation:371] [W] Failed write (Chunk:133, Offset:0x00000000 Length:0x01400500). Internal Error

20:09:24.9: Warning: 0 : [TemporaryWritesImplementation:371] Error performing read-modify-write, marking as failed (Chunk=133, Offset=0x00000000, Length=0x01400500). Internal Error

20:09:24.9: Warning: 0 : [WholeChunkIoImplementation:371] Error on write when performing master partial write. Internal Error

20:09:24.9: Warning: 0 : [WholeChunkIoImplementation:371] Error when performing master partial write. Internal Error

20:09:24.9: Warning: 0 : [IoManager:371] HTTP error (InternalServerError) performing Write I/O operation on provider.

20:09:24.9: Warning: 0 : [IoManager:371] Error performing read-modify-write I/O operation on provider. Retrying. Internal Error

This is what I'm seeing non-stop right now!

Is the Internal Error message from Google or a status from StableBit? It would be great if the logging made clear which is which.

-

1 hour ago, srcrist said:

I believe the 20MB limit was because larger chunks were causing problems with Google's per-file access limitations (as a result of successive reads), not a matter of bandwidth requirements. The larger chunk sizes were being accessed more frequently to retrieve any given set of data, and it was causing data to be locked on Google's end. I don't know if those API limitations have changed.

But this would be avoided by having a large minimum required download size. The purpose is to download 100 MB at a time instead of 20 MB.

-

As internet speeds have increased over the last couple of years, would it be possible to increase the maximum chunk size to 100 MB again?

With a 10 Gbit line on my server, my bottleneck is pretty much the 20 MB chunks, which are slowing down speeds. An increase to 100 MB could really make an impact here speed-wise, especially because the current size builds up the disk queue, which is what causes the bottleneck.

I'm fully aware that this isn't for the average user, but it could be added as an "advanced" feature with a warning that high speeds are required!

Besides that, I still love the product as always :)
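To illustrate the argument, here is a rough Python sketch of per-thread throughput when every chunk request pays a fixed overhead before data flows. The 0.5 s overhead is purely an assumed number for illustration, not a measurement of Google Drive or CloudDrive, and the model ignores disk I/O and API rate limits.

```python
def effective_mb_per_s(chunk_mb: float, line_gbit: float, overhead_s: float) -> float:
    """Throughput of one thread fetching chunks back to back.

    Each request costs a fixed overhead (latency, API handling) plus the
    transfer time of the chunk at full line rate.
    """
    line_mb_per_s = line_gbit * 1000 / 8  # 10 Gbit/s -> 1250 MB/s
    transfer_s = chunk_mb / line_mb_per_s
    return chunk_mb / (overhead_s + transfer_s)


for chunk_mb in (20, 100):
    # overhead_s=0.5 is an assumption, see the note above
    rate = effective_mb_per_s(chunk_mb, line_gbit=10, overhead_s=0.5)
    print(f"{chunk_mb} MB chunks: {rate:.1f} MB/s per thread")
```

With those assumed numbers, 20 MB chunks give roughly 39 MB/s per thread and 100 MB chunks roughly 172 MB/s, because the fixed overhead is amortized over more data per request.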

-

On 11/22/2019 at 1:32 PM, zhup said:

Hello,

Is it possible to use one google drive account and create two CloudDrives on it? Each folder encrypted with a different key.

Yes, it just creates a separate folder to save to.

-

You can always follow the changes in the beta here:

http://dl.covecube.com/CloudDriveWindows/beta/download/changes.txt

I always use it to check whether I need to update.

Betas can of course be found at http://dl.covecube.com/CloudDriveWindows/beta/download/

-

1 hour ago, davidkain said:

Hi there,

I recently set up CloudDrive and CloudPool via Google Drive, and it was working beautifully until about a week ago.

I started seeing this error, and while attempting to migrate or upload files I'd see a good initial transfer speed drop down to almost nothing before timing out.

I've seen something similar to this reported in the forums for specific beta versions of CloudDrive. Here's my current version: 1.1.1.1165

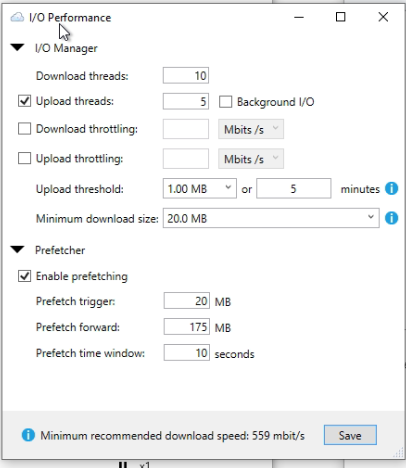

Here are my I/O Performance settings (I hadn't been hitting any sort of throttling previously, but maybe there's something I need to set here to help avoid that?):

Appreciate your help!

Try the newest version:

http://dl.covecube.com/CloudDriveWindows/beta/download/StableBit.CloudDrive_1.1.2.1174_x64_BETA.exe

It should fix the issues

-

15 hours ago, bequbed said:

When I originally created the CloudDrive I didn't realise that I can enter a value for CloudDrive size larger than 10TB. Hence I selected this option

Now that I am nearing this limit, I want to resize the CloudDrive size to larger than 10TB. I was able to increase the size as you can see in the screenshot but as suspected, the CloudDrive in Windows is still shows 10TB. I am guessing that is because the drive was selected as NTFS at the time of creation which resulted in 10TB as size.

Guess my question is that if I have increased my CloudDrive limit to 200TB, will I be able to go past 10TB?

Depending on the sector size chosen when the drive was created, you should be able to expand with no issues.

Try going into the disk partition settings in Windows and extending the volume there. Maybe the new space is just sitting as an unallocated part of the drive and the volume needs to be extended to the current size.

You will never be able to go above your sector size limit, though.

I recommend never going above 50 TB, so that chkdsk will always work. Above that it will fail.

In that case it's better to create two separate drives.
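For reference, here is a small Python sketch of how the size ceiling scales. It assumes that "sector size" above refers to the NTFS allocation unit (cluster) size and that a volume can address at most 2^32 - 1 clusters, which is the commonly documented NTFS implementation limit. CloudDrive's own limits are not modeled here.

```python
MAX_CLUSTERS = 2**32 - 1  # assumed NTFS implementation limit on clusters per volume


def max_volume_tib(cluster_size_bytes: int) -> float:
    """Largest NTFS volume, in TiB, for a given cluster size under the assumed limit."""
    return MAX_CLUSTERS * cluster_size_bytes / 2**40


for kib in (4, 8, 16, 32, 64):
    print(f"{kib:>2} KiB clusters: about {max_volume_tib(kib * 1024):.0f} TiB")
```

Under that assumption a volume formatted with 4 KiB clusters tops out at about 16 TiB and one with 64 KiB clusters at about 256 TiB, so the cluster size chosen at creation decides how far the volume can later be extended.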

-

18 hours ago, Christopher (Drashna) said:

Yeah, it would, and it is something that we've talked about internally. But "complicated" is a bit of an understatement, and it would eat up a lot more bandwidth, etc.

We should have a release for it out Very Soon™

Maybe this could be a slow background process handling at most 100 GB per day or so (perhaps a limit you set yourself, with an estimate that it would take ~X days to finish), along with a progress overview. With maybe 50 TB, migrating manually would be a huge pain, while I would be OK with just waiting months for it to finish properly, of course knowing the risk that data could be lost in the meantime as it's not duplicated yet.

It would also make it possible to switch to duplication later on instead of having to choose early on.

Google Drive limits the upload per day, so it could be nicer to upload first and then duplicate later, when you are not uploading anymore.

Another good feature would be an overview of how much you have uploaded to and downloaded from a drive in a single day. That would be great for monitoring how close you are to the upload limit on Google Drive drives.
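The "~X days to finish" estimate could be as simple as the following Python sketch. The function name and the decimal units (1 TB = 1000 GB) are assumptions for illustration; nothing here reflects how CloudDrive actually schedules work.

```python
import math


def days_to_finish(total_gb: float, daily_limit_gb: float) -> int:
    """Whole days needed to process total_gb at a self-imposed daily cap."""
    if daily_limit_gb <= 0:
        raise ValueError("daily limit must be positive")
    return math.ceil(total_gb / daily_limit_gb)


# 50 TB duplicated at 100 GB per day
print(days_to_finish(50_000, 100))
```

For the 50 TB example at 100 GB per day that comes to 500 days, so a higher self-set limit would be needed to finish within months.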

-

15 hours ago, ychro said:

Trying to use Cloud Drive on a Server Core install the gui appears when launching the executable, but if I try to create a drive on Google drive I am having an issue.

When I go to set up an encryption key a different window pops up, in order to save my configuration it appears I need to print my password and save it to a removable drive. I have a copy of the key but since I cant print on a Server Core install it wont let me hit the save button. Is there a work around for this?

Save as PDF and just cancel

-

On 5/16/2019 at 3:57 PM, JulesTop said:

Hi,

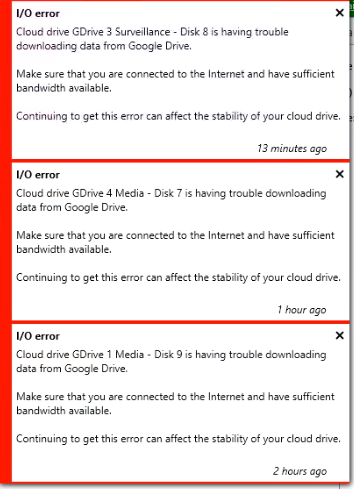

I get this error frequently... I'm not sure if it's because my cloud drive has been uploading for about 1.5 weeks so far and I am also downloading from it periodically for use with Plex... but I do get this error frequently enough. It seems to happen with all my cloud drives... I've detached and re-attached, but that doesn't seem to work... I suspect it might be my cache (SSD shared for all 4 cloud drives which is also being used for Windows OS), but I would like to have a way of making sure it's the SSD before I go out and by another one.

Thanks!

I have a dedicated SSD for my cache; maybe that is why I have never encountered it.

Maybe your SSD is hitting its max IOPS because the OS and other things are also running on it?

-

On 7/18/2019 at 10:09 PM, neoark said:

Yeh, it just exits after that error.

you could try testdrive

-

42 minutes ago, neoark said:

That the last error.

Usually it would continue or throw an actual error. I always see the one you have in the middle.

-

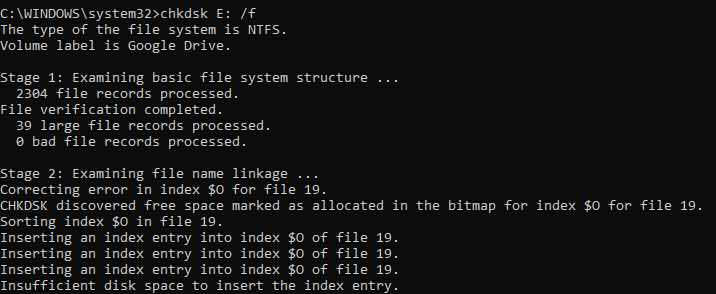

Try chkdsk e: /f instead.

/r is for locating bad sectors and recovering readable data.

-

On 7/11/2019 at 10:56 AM, schudtweb said:

3 CloudDrives in 2 GDrive accounts. At the moment its nearly unusable for me... At the moment it is working with disabled prefetching and only 1 connection. But it happens again, if i eg. download one file and upload other files.

I have 19 drives on 5 accounts and no issues at all. I'm at 10 download threads, 12 upload threads, and 20 MB chunks with limited throttling.

My guess is something in your system is causing it

-

9 hours ago, Christopher (Drashna) said:

Yes and no. It's not raid, it's a pool. So, you won't get better speeds, really.

As for the throttling, this is done per account. So if it's the same account, it won't matter if it's separate drives. And it may also be per IP address.

No, but by using multiple accounts you could in theory increase speeds, while also ensuring stability by using multiple providers.

-

3 hours ago, MisterCrease said:

I've replaced my computer and moved Cloud Drive to my new laptop.

I am able to unlock my cloud drive storage using the decryption key, however, the disk does not display with a drive letter when I have done so.

In disk management, it shows as Healthy GPT Protective Partition and online. My only options in the right-click menu are to convert to a dynamic disk.

Unfortunately, I have no longer got access to my old laptop.

Can someone please help, I hope I've not lost all of my data?

Kind regards,

Chris

Could it be because the disk is marked as Offline?

Did CloudDrive give a notice that it couldn't assign the drive letter?

Google Drive: The limit for this folder's number of children (files and folders) has been exceeded

Maybe this is related to the Internal Error issues we have been seeing. If the drive is already above the 10 TB mark, writes fail when CloudDrive tries to create new chunks.
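A quick Python check of that idea, assuming one file per 20 MB chunk, all chunk files stored in a single folder, and a limit of 500,000 children per folder. The 500,000 figure is an assumption about Google Drive's limit; it is not stated in this thread.

```python
CHUNK_SIZE = 20 * 1024 * 1024   # 20 MB chunks, as in the logs
FOLDER_LIMIT = 500_000          # assumed maximum number of children per folder


def bytes_until_folder_full(chunk_size: int, folder_limit: int) -> int:
    """Drive data that fits before the folder holds folder_limit chunk files."""
    return chunk_size * folder_limit


capacity = bytes_until_folder_full(CHUNK_SIZE, FOLDER_LIMIT)
print(f"{capacity / 1e12:.2f} TB ({capacity / 2**40:.2f} TiB)")
```

Under those assumptions the folder fills up at roughly 10.49 TB of data, which lines up with the 10 TB mark.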