Chris Downs

Posts: 67

Days Won: 7

Posts posted by Chris Downs

-

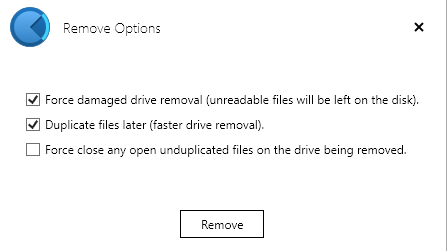

These two options leave your data on the removed drive:

-

19 hours ago, Umfriend said:

That ain't easy.

Huh? You just tick "fast removal", and it leaves the data on the disk alone.

-

2 hours ago, CoveVid said:

Thanks for this thread. I ran into this issue with Plex and Drivepool and had a hunch it was due to Drivepool.

It's unfortunate that this was never put into the FAQ and I had to find out the hard way after spending 12+ hours moving my Plex media over.

Just configure the metadata to be stored elsewhere - it should really be on an SSD anyway, for speed. By default it should be using the system drive (C:\) for metadata. Personally, I use a small, cheap dedicated SSD (a 120GB SanDisk Plus) for mine.

-

13 hours ago, billis777 said:

I remember 10 years ago it was possible with some programs to use all space of hdd. Just got a 16tb and it has actual size 14.5, is it possible to unlock the extra size with drivepool or other programs?

Disk manufacturers use the definition of a kilobyte as 1000 bytes vs 1024 bytes on the computer side. That's where your "missing" space is. There is no way to unlock more space, nor has there ever been a way.
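To put numbers on that, here is a minimal sketch of the conversion. It assumes the drive is sold in decimal terabytes (10^12 bytes) and that the OS reports binary units (2^40 bytes) while still labelling them "TB"; the function name is just for illustration.

```python
def advertised_tb_to_reported(advertised_tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to binary units (2**40 bytes)."""
    return advertised_tb * 10**12 / 2**40


# A "16TB" drive, as it shows up on the computer side:
print(f"{advertised_tb_to_reported(16):.2f}")  # prints 14.55
```

So the 14.5 you are seeing is the whole drive, just counted in bigger units.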

-

On 7/13/2020 at 3:45 AM, DaveBetTech said:

Thanks Chris for the reply. That's good to know about the drives being visible / accessible. I haven't added the drives to the DrivePool yet, so I haven't seen this. I assume there is still a 'drive' that shows the cumulative space across the drives (e.g. 10 tb if you have five 4tb drives in the pool w/duplicate on everything).

I'm now curious how it would use the bit rot protection in ReFs. There's a CRC that's calculated originally and recalculated each time it reads the file & compared to the original. If it's bad on a future read or scan, then it copies over the 'good' copy over the 'bad' copy. I'm now wondering if DrivePool works with ReFs in this manner. Or perhaps there's another method DrivePool & Scanner uses?

Yes, Drivepool creates a new drive for the pool. It's auto-assigned a drive letter but you can change it using the usual Windows method. It appears as a (mostly) normal drive, though disk management might only show it as "2048GB", as it has to report a value, and since the pool can grow and shrink, it supplies a fixed value. You still see the correct values in anything that isn't a partition manager though. The physical drives can have their letters removed if you want, or mounted to folders instead - I use C:\DP\ to mount all mine in case I want to access them (unlikely). Keeps the drive letter clutter down.

I have no idea about ReFS, so I will tag in @Christopher (Drashna) for that part. Or maybe someone else can answer.

-

On 7/12/2020 at 3:41 PM, johnjay829 said:

I deleted them after i moved the data. The drives are fine they are in the pic E F and I. My pool drive was letter G which is not showing

Ah OK, and are the 3 drives that were in the pool now completely empty (with both hidden and system files set to visible)?

-

1 hour ago, DaveBetTech said:

- I think you can see where each copy of a file is located on which physical disk. Can't do that w/Storage Spaces AFAIK.

- All your drives show up in My PC with DrivePool *PLUS* your JBOD virtualized drive vs Storage Spaces which just shows the virtualized one

- I think it handles bit rot by using Scanner to periodically scan your files which should trigger ReFs recalculation of the CRC. Storage Spaces does this automatically, so this is just a similar feature, not different.

- Scanner seems to have a better GUI for evaluating health of drives. I have some old suckers in my array. I use CrystalDiskInfo and HWinfo to monitor usually.

- Scanner can eject drives with issues from the pool. Holy crapballs this seems awesome.

QUESTIONS FOR THE BIG BRAINS / THOSE FAMILIAR:

- Is my above statement correct about showing where each copy of a file is located when you enable redundancy?

- Is my above statement correct regarding how BitRot is handled with ReFs & Scanner? If not, how would I be able to protect against this using DrivePool &/or Scanner?

GENERALLY I'M LOOKING FOR SOMETHING THAT FITS THESE CRITERIA:

- Ease of administration. I want to avoid using Linux if I can. I'm between a novice and an intermediate Linux user.

- Has to be on Windows or Ubuntu because of GPU acceleration in Plex. I don't want to buy a separate NAS.

- Has some level of redundancy and protection against Bit Rot. I want to "set it and forget it"

- Would be 'nice' to see which physical disk houses each copy of a file.

I do not think you can tell which disks contain a certain file from within DrivePool, but they are stored as normal files and visible in Windows Explorer (if you mount the individual drives to a folder, or give them drive letters) - so using Search would tell you.

I am not certain that Scanner helps with BitRot - that is why many people use SnapRAID in combination with Drivepool. It's a free software tool that allows you to add parity to your DrivePools. I don't use it myself though.
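If you would rather script the search than use Explorer, something like the rough sketch below could work. It assumes each pooled disk is mounted to a folder or drive letter, and that the pooled files live in a hidden folder in the root of each disk whose name starts with "PoolPart.". The function name and the example paths are made up for illustration; this is not a DrivePool API.

```python
from pathlib import Path


def find_copies(disk_roots, relative_path):
    """Return every on-disk copy of a pool file.

    disk_roots: mount points of the individual pooled disks (hypothetical).
    relative_path: path of the file as seen inside the pool.
    """
    hits = []
    for root in disk_roots:
        # Each pooled disk is assumed to have a "PoolPart.<id>" folder in its root.
        for pool_part in Path(root).glob("PoolPart.*"):
            candidate = pool_part / relative_path
            if pool_part.is_dir() and candidate.exists():
                hits.append(candidate)
    return hits


# Example call (hypothetical mount folders):
# find_copies([r"C:\DP\Disk1", r"C:\DP\Disk2"], r"Photos\2019\beach.jpg")
```

With 2x duplication enabled you would expect two hits, one per disk holding a copy.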

-

On 6/29/2020 at 3:12 AM, mtaffe said:

I was reinstalling windows like I have done many times. I accidentally deleted one of my drives that is part of a drive pool. When I got windows up and running that drive was no longer available under windows explorer. I went into disk manager where the only option was to "make a new drive" now that drive shows up as empty. I reinstalled stable bit, upon reconstruction of the pool I only have 3 drives in the pool. The forth drive now has no files on it. I am looking for a way to get the pool back. There are 10+ years of pics on this drive so formatting is not an option.

When you say "deleted" the drive, what do you mean exactly? Deleted how, and where?

-

15 minutes ago, billis777 said:

Thank you Chris, i'm kinda still confused, i hope it doesnt come to it lol.

I was thinking copy all files from old hdds to the new pool and skip existing files, is this a good idea?

What do you mean by skip existing? Surely they are empty if they are new?

-

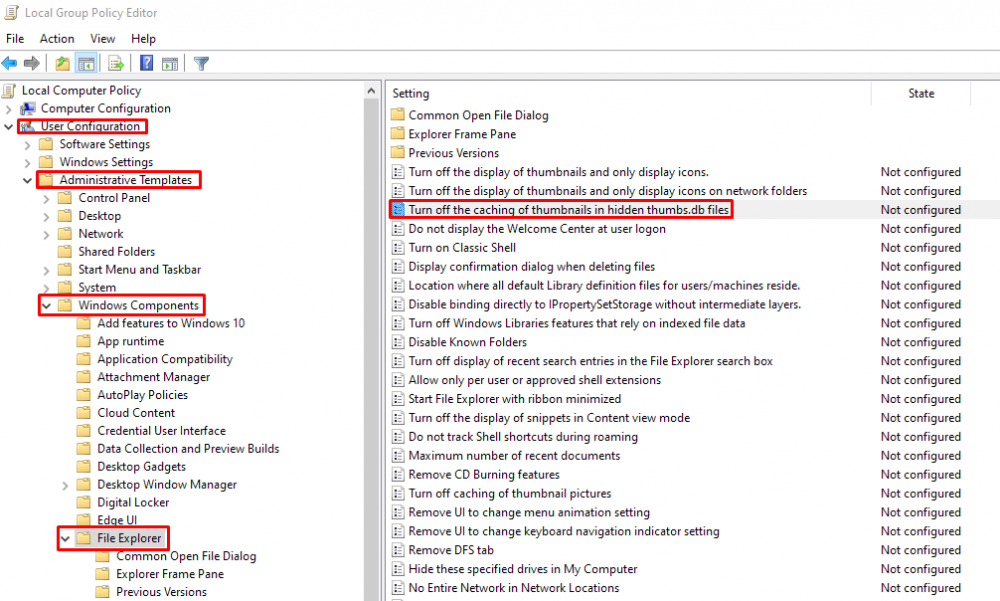

Windows 10 can be prevented from creating thumbs.db on network drives using gpedit.msc - but on local ones, it should not be creating them, as W10 uses a central thumbnail and icon cache located at C:\Users\[USERNAME]\AppData\Local\Microsoft\Windows\Explorer

So no need to turn off caching, just fire up gpedit.msc (from [WinKey]+R ) and change the following entry to "Enabled".

-

On 6/21/2020 at 3:25 AM, ClayE said:

Hello all,

Had a question about the "To Upload" cache on a local disk provider. I'm not familiar with how it works, but is it normal to have it hang around for a bit? I figured it would be a direct write through and not hit the caching system.

I'm seeing 40GBs after writing 140GBs (rough numbers).

Thank you

Clay

Where are you uploading to? Is there a daily/monthly bandwidth cap? E.g., Google Drive has 750GB/day IIRC.

-

On 6/3/2020 at 8:54 AM, TomP said:

Is there a user guide for SSD Optimizer so I can set it up correctly ?

It's a bit too simple for a guide... just remember that you need more than one SSD if your pool has duplication. 2x dupe needs 2x SSD, 3x needs 3 etc. Also, keep in mind that the size of the SSDs determines the maximum filesize you can move onto the pool. I once had a pool with some 64GB SSDs as landing drives, and spent a while scratching my head when I couldn't copy across some large disk-image backups despite the pool having plenty of space.
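Those two rules of thumb can be written down as a quick sanity check. This is only a sketch of the reasoning above, not how the SSD Optimizer actually decides anything, and the function name is made up.

```python
def landing_ssd_problems(ssd_sizes_gb, duplication, file_size_gb):
    """List the reasons a file could not land on the SSDs (empty list = fine)."""
    problems = []
    # Rule 1: one landing SSD per copy of the file.
    if len(ssd_sizes_gb) < duplication:
        problems.append(
            f"{duplication}x duplication needs {duplication} SSDs, "
            f"only {len(ssd_sizes_gb)} present"
        )
    # Rule 2: each copy has to fit on a single SSD.
    big_enough = [size for size in ssd_sizes_gb if size >= file_size_gb]
    if len(big_enough) < duplication:
        problems.append(
            f"a {file_size_gb}GB file does not fit on {duplication} of the SSDs"
        )
    return problems


# Two 64GB landing SSDs, 2x duplication, a 100GB disk image:
print(landing_ssd_problems([64, 64], 2, 100))
```

That second case is exactly the head-scratcher I ran into with the 64GB drives.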

-

On 6/4/2020 at 4:29 PM, jaydash said:

Sorry to revive an older post, but I came across this one after a quick search... also need an 8 port sata card for my server, I'm using Windows 10. I've been running a Highpoint card I wound up with for several years, but it appears to be throwing some errors and probably time to replace it...

Same as OP, I also just need a simple JBOD solution, no RAID necessary. Just need it to be reliable (of course, right? haha), handle large (10+ TB discs), and have decent/recent driver support.

Any suggestions? Is the Supermicro card recommended a few years ago still a reasonable bet?

Dell Perc H310 or one of the variants are generally very cheap now - I use three of them, flashed to IT-Mode. They are essentially transparent interfaces now.

I got them from ebay, and one from AliExpress IIRC. All under £30 delivered. You will need some of these cables: https://www.amazon.co.uk/Cable-Matters-Internal-SFF-8087-Breakout/dp/B07QFSLP6F

The cards have 2 SFF-8087 ports, with each one having 4x 6Gbps SATA lanes for 8 drives at full 6Gbps speed. However, that is not the limit on drives. Each card can handle up to 256 physical disks using SAS expanders - so adding more later on doesn't need HBA replacement.

The H310 comes in another version, with external SFF-8088 connections instead, to allow for an external JBOD drive enclosure to be connected.

Definitely avoid the 8-port SATA PCIe cards on ebay and Amazon that have 8 actual SATA ports, but only a x1 PCIe interface. There are *some* with x4, but very few. I have one with 6 ports and x4 PCIe, and that works nicely.

-

On 7/9/2020 at 4:50 AM, billis777 said:

Turns out my problem was windows fast startup. It's all good for now. But if anyone knows how to activate staggered spin up on the highpoint rocket 750 card please let me know, will come handy eventually.

A really hacky/homebrew way to achieve this might be with some sort of Arduino setup that controls power to the HDD power connectors. You'd have to make a custom power cable loom... but the Arduino could be set to stagger 16 relays (or maybe 4 or 8 to bring the disks up in groups) when it's powered up? It's something I've considered myself, as I have a VM box with 12 spinning disks, and the peak power draw when they all spin up at once is... yikes. Had to carefully distribute them over the power rails. I'm using an older 1000W PSU that has 6 +12v rails.

However, the thought of the wiring loom I'd have to make kind of kills the idea for me, as my old arthritic hands are not up to it anymore.

-

On 6/20/2020 at 5:09 PM, undead9786 said:

Is it possible to disable scanning on cloud mounts automatically? Last thing I need is for the scan to cause me to hit the daily 750GB upload limit because I missed disabling "Never scan file system automatically" on a new cloud mount.

I don't think Scanner knows/cares about where the drive is, so probably not. Scanner just sees a local drive and has no way of knowing that it's a Cloud Drive (as far as I know). In theory, it shouldn't be too tricky on the Cloud Drive side to add a flag of some sort (maybe a simple text file?) for Scanner to pick up on, but then Scanner would need more significant changes to be able to check for and read said flag/file.

-

On 7/9/2020 at 4:56 AM, billis777 said:

Thanks i found out the 10tb elements hdd is using the 3.3v pin to spin it down and when connected internally to a computer the computer doesnt use that signal so it cannot spin down.

Why wd makes things complicated, i tried so hard to find me a10tb air hdd and now i was forced to buy a helium so my computer doesnt sound like jet engines.

SAS disks use that 3.3v pin too for power control. You could try the second solution suggested here: https://www.instructables.com/id/How-to-Fix-the-33V-Pin-Issue-in-White-Label-Disks-/

It is likely that while your PSU doesn't make use of the 3.3v signalling, it may still be supplying it, preventing spin-down? Try the molex-sata adapter suggestion in the link, and see if that helps? If it does, you could go for the more fiddly pin-tape option in the first solution.

-

On 7/10/2020 at 1:36 AM, Christopher (Drashna) said:

Well, StableBit DrivePool can use 100x drives, without any issues.

As for snapRAID, I'm not too familiar with it, but you may want to take a look at this link:

http://www.snapraid.it/faq.html#howmanypar

basically, more == better, but they've only had reports of 4 disks failing at a time. So, you may be able to get away with 5-6 disks for parity.

But again, I'm not too familiar with it.

As a general rule, I use the following:

Consumer disks (including NAS drives such as WD Red and Seagate IronWolf, but not the "Pro" versions) - assume a 10% failure rate in the first 3-5 years of their life. This % will increase over time of course. So for 100 consumer-grade disks, I'd go for 10 parity disks if I was doing it.

Pro/Enterprise disks - you could likely get away with a 5% failure rate assumption over the same period, obviously assuming you stay under the yearly workload specs. So the suggestion of 5-6 parity disks is pretty spot on if these disks are in use.
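As arithmetic, the rule of thumb looks like the sketch below. The 10% and 5% figures are just my own working assumptions from above, not anything from SnapRAID, and the function name is made up.

```python
def parity_disks_needed(disk_count: int, failure_percent: int) -> int:
    """Parity disks to cover the assumed failures, rounded up to a whole disk."""
    # Integer ceiling division, so a fraction of a disk still counts as one.
    return -(-disk_count * failure_percent // 100)


print(parity_disks_needed(100, 10))  # consumer disks: prints 10
print(parity_disks_needed(100, 5))   # Pro/Enterprise disks: prints 5
```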

-

On 6/29/2020 at 7:27 PM, RobbieH said:

What I am seeing is that I copy files to the pool, and the SSD eventually fills to 95% as is set in the maximum use settings in the SSD Optimizer. The files never shed off onto hard disks. In the File Placement tab, should the SSD be removed from the File Placement options?

Make sure the SSDs are unticked in the "Archive" column in the optimizer settings?

-

If you have the ability to run the new disks at the same time as the old ones, you could just make a new pool with the new drives, and copy the data across without involving Drivepool directly? Then when you're done, just shutdown the PC and remove the old drives physically (don't remove them in Drivepool). In the event that the new disks fail, you then have a complete physical copy of the pool to fall back to.

The new pool doesn't even have to be made on the same machine - use a 30-day trial install of Drivepool on a second PC to make the new pool, and copy the files over the network? Once it finishes, all you have to do is remove all the old disks on the first PC and move the new ones in place. Since Drivepool doesn't care about the physical disks, and uses the data on the disks, it should immediately work. You can then store the old disks away as a fall-back option, as they will still be "in a pool", making access really simple.

-

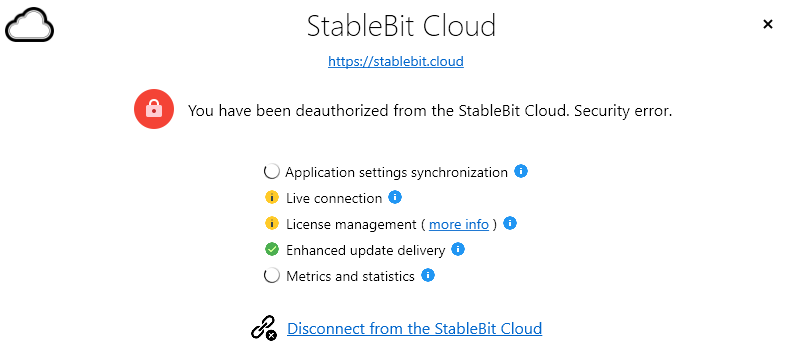

I love the Stablebit Cloud, for remote access to Pool and Scanner info, as well as seeing how many products I have for each activation ID etc. However, could you add the ability to deactivate a specific installation too? I've been running a few VM testing scenarios with new hardware, and I thought I was done testing... but I managed to forget to deactivate Drivepool (again!), though I did remember Scanner. Now the only way to fix this is with a support request. While that is "ok", surely it makes sense to be able to deactivate an installation from the cloud interface for cases where failed hardware makes it impossible on the actual machine, or if you're just forgetful like me? ;-(

There is an ominous "Delete License" button in red, but there is no solid information about what it will actually do - is it actually what I need (deactivate all installs on that key), or will it really delete it and the key can never be used again?

@Christopher (Drashna) tagged in case the delete is deactivate and I don't need another support request... ;-)

-

On 2/7/2020 at 1:21 AM, Christopher (Drashna) said:

Also, having the drive connected directly is always going to get better performance than over the network, because there are fewer moving parts.

Now I have an image of you having some sort of steampunk mechanical networking setup...

-

Duplication questions (posted in General)

It literally duplicates the data, so a 30TB pool has space for 15TB of data if you select 2x, 10TB if you select 3x, etc. It will still show as 30TB in Explorer though. So yes, if you have 30TB of actual data, you need 60TB of disks for 2x duplication.
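The same sums as a quick sketch, assuming everything in the pool is duplicated at the same level (the function names are made up for illustration):

```python
def usable_space_tb(pool_size_tb: float, duplication: int) -> float:
    """Space left for unique data when every file is stored `duplication` times."""
    return pool_size_tb / duplication


def disks_needed_tb(data_tb: float, duplication: int) -> float:
    """Raw disk space needed to hold `data_tb` of data at this duplication level."""
    return data_tb * duplication


print(usable_space_tb(30, 2))  # prints 15.0
print(usable_space_tb(30, 3))  # prints 10.0
print(disks_needed_tb(30, 2))  # prints 60
```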

The files in the pool are stored in a hidden folder in the root of each drive. Change your Explorer options to show hidden files, and navigate into the long-named folder starting with "PoolPart." and you will see all your files and a ".covefs" folder at the top. Simply move everything except the ".covefs" folder out to another location. Where did it used to belong?

If the old home of the data is still available, you can copy the content of each PoolPart folder to the same place, and it will all merge in the usual Windows Explorer manner - ie, with that popup to confirm merge/overwrite.

If the old home is not available, just copy the files to the root of each disk. When you are done with that part, you can delete any remaining hidden folders.

Hopefully that's clear?