Sonicmojo

Members-

Posts

54 -

Joined

-

Last visited

-

Days Won

2

Sonicmojo last won the day on November 13 2018

Sonicmojo had the most liked content!

Sonicmojo's Achievements

Advanced Member (3/3)

2

Reputation

-

Sounds good. Thanks for the update! Cheers Sonic.

-

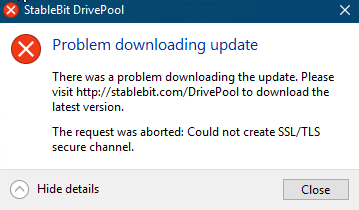

Understood - but this error is a result of updating to the latest? Or do I need to manually update and then this will go away for the future? Sonic.

-

Yesterday Scanner tells me we have an impeding drive failure so I evacuated the drive and swapped a new one into the pool. But even after forcing a Rebalance (using the Disk Space EQ plugin) - Drivepool has been sitting here for over an hour "building bucket lists" and not moving anything. What is the deal with this plugin - does it actually do anything or is it conflicting with the other 5 plugins I have installed - which seemingly never seem to balance anything either? I remember a few year back - I would swap in a new drive, add it to the pool and almost immediately Drive Pool would start balancing and moving files to ensure each drive was nicely filled - now it seems no matter what I throw at the problem - it does not want to do anything unless forced? Sonic.

-

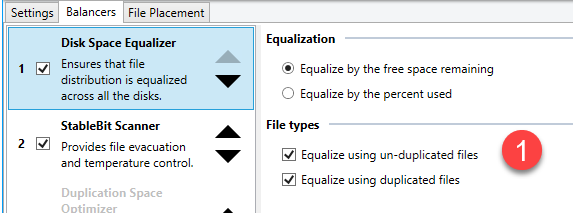

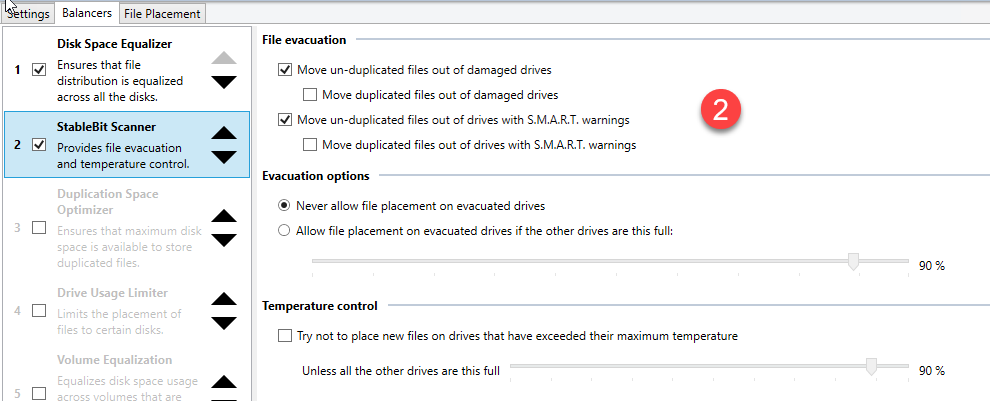

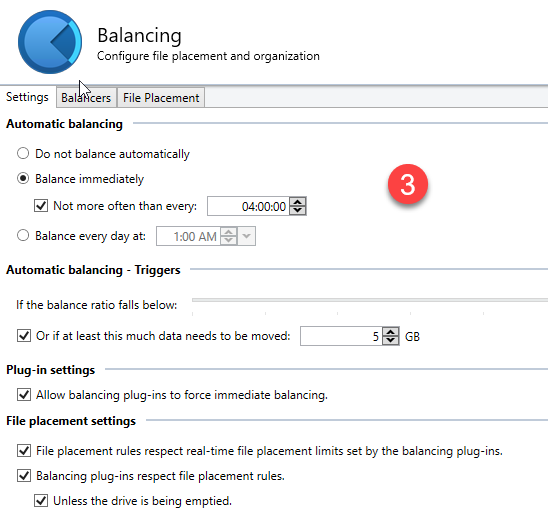

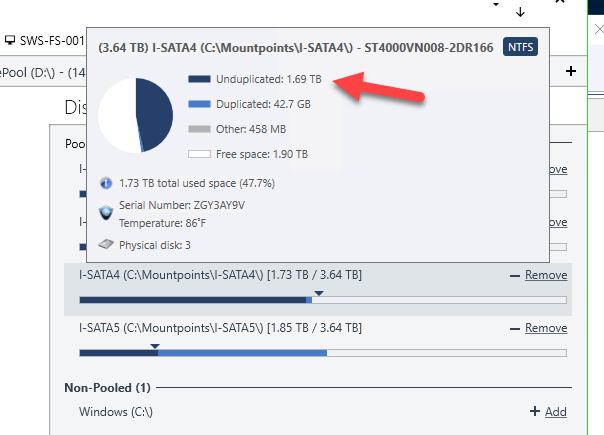

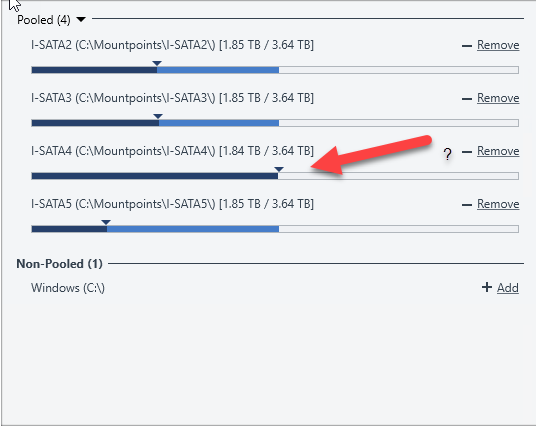

Chris The disk checks out perfectly in Scanner. The only issue presented was this massively excessive "head parking" which looks like was being caused BY Scanner. All other SMART data is fine and I cannot see any evidence of anything else wrong with it. Regarding the "evacuation" by Drivepool/Scanner - I did have a number of the built in balancers activated for a long time but have since cut it back to just two: Disk Space EQ And StableBit Scanner - with a specific focus on ensuring my "unduplicated" files are being targeted - if there's a problem with a drive. And here are my "balancing" settings: What is most concerning to me is that Scanner is NOT honoring the logic of moving "unduplicated" files away for "possible" problematic drives. In this case - here is the drive that exhibited the excessive head parking (But nothing more) and now Scanner/Drivepool have filled this drive to max with unduped files - leaving me in a nasty state if something does happen here: Ideally - I need to see this drive looking more like the rest of the drives with a balance leaning more to "duplicated" files rather than not duplicated. Any ideas with the balancers or balance settings that might make this even out a bit better. Also - this drive is less than a year old - and while I am certainly not saying that problems are not possible - Scanner is not indicating any issues that are obvious. I have also checked the drive extensively with Seagate tools and nothing negative is coming back from that toolset either. As of right now - this drive appears to be as normal as the other three - except for the ridiculous head parking count - which as of now looks to be driven by Scanner itself. I have another server - running a pair of smaller 2TB Seagate drives (from the same series as the server above)- which have been up and running for a year and 4 months - and each drive has a load cycle count of 109 and 110 respectively. The 4x4TB drives in the server above have been running for just over a year and each drive has load cycle counts in 490000 range. The one drive that Scanner started reporting SMART issues with had a load cycle count of over 600000 before I shut it off. Even Seagate themselves were shocked to see that load cycle number so high - indicating that all of these NAS drives should really never exceed 1000-2000 for the life of the drive. So I am not sure exactly what SCANNER is doing to one set of my drives and not the other - but it's a problem just the same. As I mentioned - my File Server (4x4TB) does not see a lot of action daily and anything it does see should be handled with ease by the OS. It is unfortunate that there is some sort of issue with the Advanced Power Management settings on Scanner for the File Server but even with APM enabled on the other server - there appears to be no issues with the Load Cycle or any other issue. Appreciate any additional tips. Cheers Sonic.

-

That may be true - but it looks like the beta action stopped around Mar 12-15. Would not surprise me if this is COVID related but it would be nice to at least get a one liner status update. Sonic

-

Just wondering what is happening in here lately? I have asked a couple questions in the last week to 10 days and usually get an answer after a day or two? Is Covecube support no longer coming round or is the actual company not active? I realize the world is in a crap state right now but it would be nice to know if anyone is home? Sonic.

-

Updated Data I did some reading on the forum here and found this tidbit from a few years back: In theory, the "Disk Control" option in StableBit Scanner is capable of doing this as well, and persistent after reboot. To do so: Right click on the disk in question Select "Disk Control" Uncheck "Advanced Power Management" Hit "Set". I have enabled this on all my drives to see if the "parking" will settle down But then I noticed that DrivePool was acting strange last night as well. It had begun an "Evacuation" of all the files from this specific drive - acting as if there was some sort of data emergency. When I checked into the server around dinner time last night - DP was displaying a message saying 63.5% Building data buckets (Or something similar) - it seems like it was hanging there forever. So I hit Reset in Drivepool and let it run the inspection routines etc to confirm the pools and ensure the data was sound (it was). But when I went into server again this morning - I saw that my Balancers had been altered. The "disk space" balancer (at the top of the stack) had been disabled - leaving the Stablebit Scanner balancer next in line. I believe it's parameters took priority which some lead to the emergency evacuation action. I re-enabled the Disk Space Balancer and now ended up[ with this: Any ideas what is going on? Sonic

-

For the first time ever - I received this message from Scanner on a SEAGATE Ironwolf NAS drive that is about a year old: ST4000VN008-2DR166 - 1 warnings The head of this hard drive has parked 600,001 times. After 600,000 parking cycles the drive may be in danger of developing problems. Drives normally park their head when they are powered down and activate their head when they are powered back up. Excessive head parking can be caused by overzealous power management settings either in the Operating System or in the hard drive's firmware. This drive (and 3 other identical ones) are part of my Windows Server 2016 file server which really sees very little action on a daily basis. What is the story with this message and why would this drive be "parking" Itself so frequently - considering the server is never powered down (only rebooted one per month following standard maintenance). I have not had a chance to look into the other 3 drives yet - but this is concerning just the same. Is there a possibility that DrivePool or Scanner are the cause of this message? Appreciate any info on this. Cheers Sonic.

-

Well - I am not about to remove a drive to test this version but it's nice to know something new is available. Also glad I am not the only one experiencing this issue. S

-

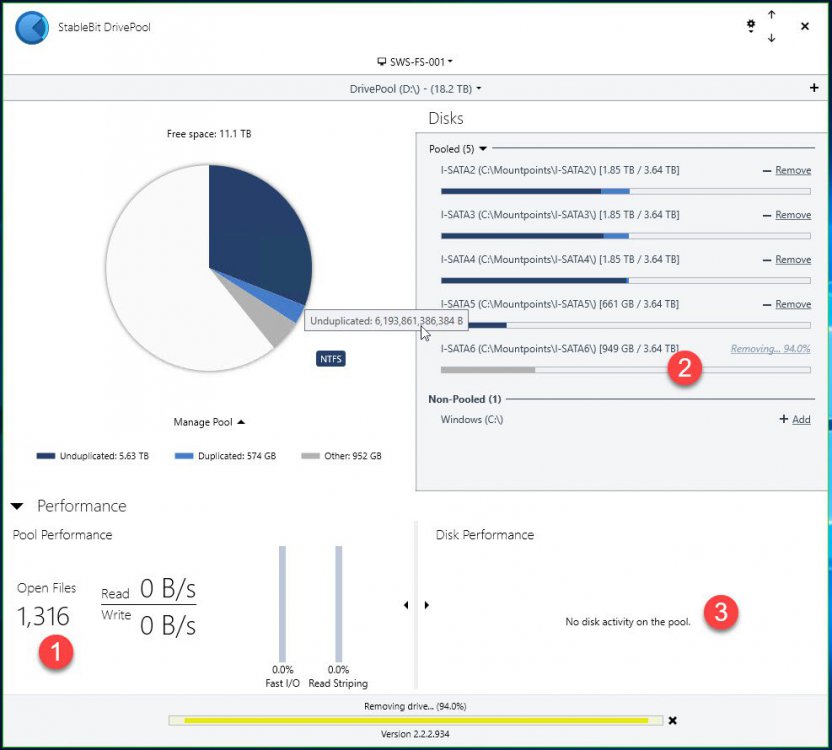

This makes complete sense - but how come the actual "Remove drive" process never completed? Is it not supposed to go to 100% (while leaving the dupe files on the drive) and conclude in a correct fashion? In my case - DP simply stopped doing anything at 94% and sat there for hours and hours and hours. This feels very uncomfortable on many levels - yet I had no choice but to kill the process. Luckily - there was no residual damage to my hard stop as all that was left on the drive where dupes. But this experience does not make me feel very trustworthy toward this app if a process cannot wrap up gracefully and correctly - especially when user data is being manipulated. S

-

Well - the drive is gone now so enabling logging will not help. And as far as unduplicated data - I used the same process for 4 consecutive drive removals. The first three went like clockwork...the "remove" drive process (whatever that entails) went to 100% and then DP did a consistency check and duplication AFTER the drive was removed completely. This last drive "removal" not go to 100% - it simply sat at 94% for like 18 hours. For me there is long and then there is REALLY long. So I eventually got fed up - cancelled the removal and pulled the drive. My concern is that this "remove" process did not go to 100%. There was zero file activity on the pools for hours and hours - so if DP was doing something - it should have been communicated. Oddly - the only files left on this drive (after I killed it at 94% for 18 hours) - oddly - were just the duplicates. So I do not understand what the right conclusion to this process should be. I am assuming that if I choose to "process duplicates later" the removal process should be successful and go to 100%. Yes? No? In this case it seems like it was set up to sit at 94% forever. Something was not right with this removal - the seemingly non-existent communication of the software (telling me exactly nothing for 18 straight hours) - should be looked at. S

-

Update. After I returned home from work - the drive targeted for removal STILL said 94.1% so I killed the process and restarted the machine. Once it came back up - I assigned this drive it's own drive letter and examined the files in the Poolpart folder - seems all the files in there were the files I had set to duplicate. So while that makes a bit of sense (I did tell DP to duplicate later) - I still do not understand why the removal stalled out and would not complete. I could have left this drive in this state for days with no change. Seems like a bug or something here. After the drive was forcefully removed - DP went ahead and did a consistency check and then started a duplication run that took another couple of hours. Looks like everything is good now. All my drives have been replaced so I will not need to do this exercise again for a while but I would still like to know why this drive was not removed cleanly. S

-

A Over the past few months I have been slowly upgrading the drives in my Pool. It's a 16TB pool with 4x4TB drives. Last night around 7:00pm - I added the last new drive to the pool and targeted the last drive I wanted to replace. I checked Duplicate later and close all open files and let the process begin - as per usual - things moved along nicely and around 9:30 - I closed my RDP session to the server and let DP do it's thing. When I checked back in this morning - I see the following on screen (see attached): 1. I see a ton of open files 2. The gauge has been at 94.0% like for hours 3. There is no file activity that I can see. The only thing that DP is telling me is that - if I hover my cursor over the "Removing drive ...(94.0%) indicator near the bottom of the screen (where the progress bar is pulsating) I see a tooltip that say "removing pool part" What the heck is going on and why is this taking hours upon hours? When I replaced my third drive a few days ago - the removal process completed cleanly, the drive I wanted to remove was 100% empty in about 5 hours and the UI was silent. The drive I am removing here shows 949GB of "other" on it and this is causing me concern. This drive should have nothing on it if the removal process is working correctly. Would love to know what is going on here and if I should just let it go, stop it or what? I do hear drive activity and drive LED's are flashing - but what is it doing? S Additional: Here are the last 20 lines of the Service log - anything here that looks suspect? 0:00:33.1: Information: 0 : [FsControl] Set overall pool mode: PoolModeNormal (lastKey=CoveFsPool, pool=c2cea73a-6516-4ed7-906e-864291ed7d8f) 0:03:22.7: Information: 0 : [Disks] Got MountPoint_Change (volume ID: dd88cf22-51f8-4ba9-a676-d0bb8b20430b)... 0:03:23.7: Information: 0 : [Disks] Updating disks / volumes... 0:03:30.1: Information: 0 : [Disks] Got MountPoint_Change (volume ID: dd88cf22-51f8-4ba9-a676-d0bb8b20430b)... 0:03:31.1: Information: 0 : [Disks] Updating disks / volumes... 0:03:43.1: Information: 0 : [Disks] Updating disks / volumes... 0:04:20.6: Information: 0 : [Disks] Got Pack_Arrive (pack ID: 0db29038-5dbc-4cdd-a784-5748d8b2f063)... 0:04:22.2: Information: 0 : [Disks] Updating disks / volumes... 0:04:50.5: Information: 0 : [Disks] Got Volume_Arrive (volume ID: f28ef3b5-4c91-44cb-9f35-7c76d4f97ef5, plex ID: 00000000-0000-0000-0000-000000000000, %: 0)... 0:04:51.6: Information: 0 : [Disks] Updating disks / volumes... 0:04:57.0: Information: 0 : [Disks] Got MountPoint_Change (volume ID: f28ef3b5-4c91-44cb-9f35-7c76d4f97ef5)... 0:04:58.0: Information: 0 : [Disks] Updating disks / volumes... 0:05:16.8: Information: 0 : [PoolPartUpdates] Found new pool part C6C614F2-7102-411D-B9C9-4D2F05B68ABB (isCloudDrive=False, isOtherPool=False) 0:05:27.3: Information: 0 : [FsControl] Set overall pool mode: PoolModeOverrideAllowCreateDirectories (lastKey=DrivePoolService.Pool.Tasks.RemoveDriveFromPool, pool=c2cea73a-6516-4ed7-906e-864291ed7d8f) 0:05:27.3: Information: 0 : [FsControl] Set overall pool mode: PoolModeNoReportIncomplete, PoolModeOverrideAllowCreateDirectories (lastKey=DrivePoolService.Pool.Tasks.RemoveDriveFromPool, pool=c2cea73a-6516-4ed7-906e-864291ed7d8f) 0:05:27.3: Information: 0 : [FsControl] Set overall pool mode: PoolModeNoReportIncomplete, PoolModeOverrideAllowCreateDirectories, PoolModeNoMeasure (lastKey=DrivePoolService.Pool.Tasks.RemoveDriveFromPool, pool=c2cea73a-6516-4ed7-906e-864291ed7d8f) 0:05:27.3: Information: 0 : [FsControl] Set overall pool mode: PoolModeNoReportIncomplete, PoolModeOverrideAllowCreateDirectories, PoolModeNoMeasure, PoolModeNoReparse (lastKey=DrivePoolService.Pool.Tasks.RemoveDriveFromPool, pool=c2cea73a-6516-4ed7-906e-864291ed7d8f)

-

All, After a instant drive failure on a secondary server last week - I am now being proactive with my primary server and replacing old disk in biweekly stages. This server has 4x4TB lineup and I have identified disk 3 as being the first one to be removed. The new disk arrived yesterday but - I do not have all folders in this pool duplicated. What are the correct steps to get this drive out of the pool, out of the box and then swap in it's replacement without jeopardizing any files that could be on drive 3 as single files? Assuming the Remove function in the UI should empty the drive (?) but I want to be sure before I start making any moves. And I assume Balancing would be my next step after the new drive is in place? Appreciate any tips from the field. Cheers Sonic.