KiLLeRRaT


Posts posted by KiLLeRRaT

-

-

Hi All,

I've recently noticed that my drive has 40GB to be uploaded, and saw there are errors in the top left. Clicking that showed:

Quote: The limit for this folder's number of children (files and folders) has been exceeded

What's the deal here, and is this going to be an ongoing issue with growing drives?

My drive is a 15TB drive, with 6TB free (so only using 9TB).

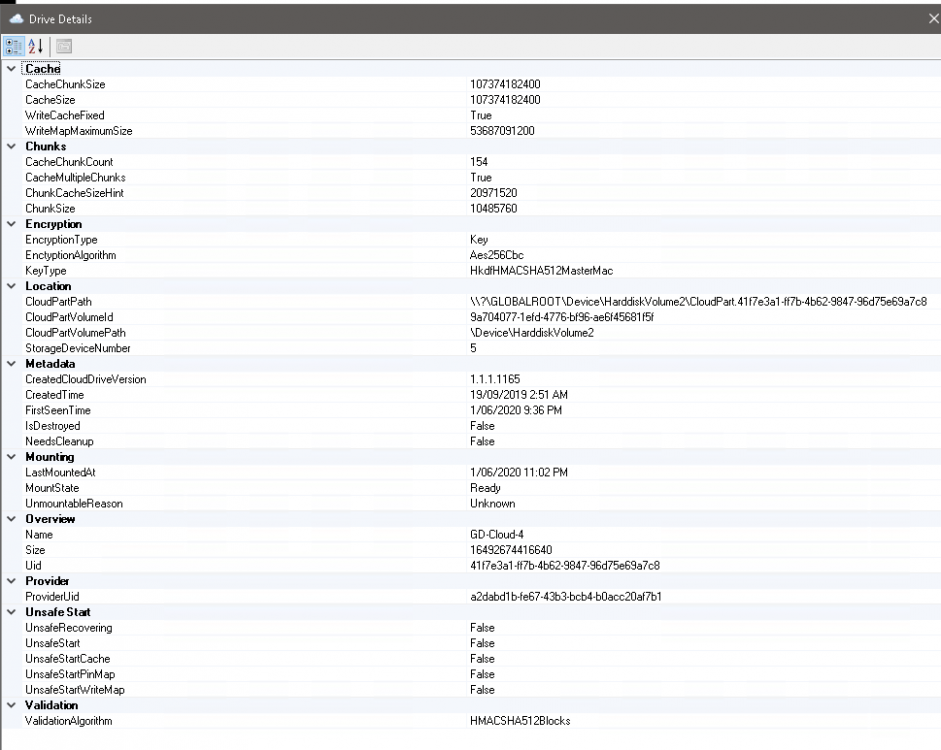

Attached is my drive's info screen.

EDIT: Cloud Drive Version: 1.1.5.1249 on Windows Server 2016.

Cheers,

-

I still think it's a good idea to have auto reconnect.

The way this would work is to keep the same setting for failures in the config, and to let it disconnect as normal. We then want it to essentially click the retry button, maybe an hour or two after it disconnected by itself... I don't see how that would cause a system lockup any more than me manually clicking the retry button would.

What's everyone's thoughts on this?

Edit: Also, I think recommending that people up the CloudFsDisk_MaximumConsecutiveIoFailures WILL make their system more flaky, because there will be guys that put unrealistic values in there to try and get around the disconnect, where they actually just need an "auto reconnect in x hours" setting.
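
As a rough sketch of the idea (not CloudDrive's actual code; the class and method names here are hypothetical), the drive still disconnects as normal, and a single retry is scheduled a fixed delay later:

```python
from datetime import datetime, timedelta
from typing import Optional


class AutoReconnect:
    """Hypothetical helper: schedules one retry a fixed delay after a disconnect."""

    def __init__(self, delay: timedelta) -> None:
        self.delay = delay
        self.retry_at: Optional[datetime] = None

    def on_disconnect(self, now: datetime) -> None:
        # The drive disconnected itself after too many consecutive I/O failures.
        self.retry_at = now + self.delay

    def should_retry(self, now: datetime) -> bool:
        # True once the delay has elapsed; clears the schedule so we retry only once.
        if self.retry_at is not None and now >= self.retry_at:
            self.retry_at = None
            return True
        return False
```

Because the failure threshold itself is untouched, this would behave the same as someone clicking retry by hand a couple of hours later.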

-

Ouch, I wish it was simpler. I will test it using a small dummy disk, clone it, delete the old disk, and get the new disk in place...

I guess I will leave this as the 'last resort' if something really bad happens...

Right now I just need to know if it will be reliable. I guess testing this will give me the answers.

Thanks

-

Hi, just a quick question, can I make a copy of the drive contents in my Google Drive folder, and attach it as a new drive? Do I need to create a new Guid for it somewhere other than renaming the Google Drive folder?

Reason I'm asking is, rclone supports server side copying, so I can make a duplicate copy of my entire drive once a month and mount it later on in case of any corruption on my main drive like what happened with a few of us at the start of the month.

Thanks!

-

Hey guys,

Good to see that the releases are getting more and more solid.

I just had a thought while looking at my transfers/prefetching.

What if you had the prefetch forward value automatically scale based on the hit rate of the prefetch? Perhaps you can provide a range, e.g. between 1 MB and 500 MB based on hit rate.

For streaming movies, a large prefetch works well (e.g. 150 MB, or even more). The hit rate will be good, thus growing the prefetch size more and more.

If I later start looking through photos that are only 3 MB each, the hit rate will get pretty terrible, and the prefetch would then scale back to, say, 1 MB again.

Just thought this could improve the general bandwidth use, and ultimately performance.
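
A minimal sketch of what that scaling could look like, assuming the hit rate is measured as a fraction between 0 and 1. The function name, the thresholds (0.8 and 0.4), and the doubling/halving step are all made up for illustration; only the 1 MB to 500 MB range comes from the suggestion above.

```python
def next_prefetch_mb(current_mb: float, hit_rate: float,
                     min_mb: float = 1.0, max_mb: float = 500.0,
                     grow_above: float = 0.8, shrink_below: float = 0.4) -> float:
    """Return the next prefetch size in MB, clamped to [min_mb, max_mb]."""
    if hit_rate >= grow_above:
        current_mb *= 2   # prefetched data is being used: fetch further ahead
    elif hit_rate <= shrink_below:
        current_mb /= 2   # prefetched data is mostly wasted: back off
    # Hit rates between the two thresholds leave the size unchanged.
    return max(min_mb, min(max_mb, current_mb))
```

Starting at 20 MB with a steady 95% hit rate (streaming a movie), this gives 40, 80, 160, 320, then caps at 500 MB. A run of 10% hit rates (browsing photos) halves it back down to the 1 MB floor.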

-

Hey,

Would it be useful to have a sticky on here, where it's posted when a new beta version comes out, along with what people run into and find in that version? Basically a copy of the changes.txt, but people can then post comments on it etc...

E.g. the issue we had where drives became corrupt... That way it's quick to realize that you probably shouldn't update to that version until it's resolved.

Just a thought!

-

Hey Chris,

Yeah, I haven't lost anything important. I have quite a number of backups of my important stuff on multiple services, and also on multiple physical drives in separate locations.

I was just hopeful since I had just sorted out my TV show library, which took a while to get working the way I wanted, so I was hoping I wouldn't have to do it over again, heh.

Thanks for the help and so on though, loving the product so far!

-

Heh still stays RAW... I guess I better dust off the old local disks and plug in the array controller :\ See what I can recover here.

I'm just thinking of a way to prevent this in the future without having local disks around... Any suggestions other than the rclone suggestion by Akame?

-

I guess you could use another utility to copy the chunks to a backup folder once a month. It will not take any client-side bandwidth, as Google Drive supports server-side moving. One utility that can be used together with DrivePool is rclone, as it supports server-side moving.

Ah that's a good idea, I will check that out

-

Apparently the new beta has the ability to combine chunks into one, did that one fix it for you?

I think my drive is too far poked. I tried it but it remained RAW.

I was part way through a backup to another cloud drive. That one recovered fine, but I'm now missing a load of stuff that wasn't backed up.

I wonder if it's a good idea to create a full backup of your drive and detach it, then once in a while connect it and sync it. Like a backup, although it will be on the same provider... But it'd mitigate these issues.

-

Is there a way to have the application automatically discard the duplicate chunks that were made after a certain date? Or automatically keep/use the oldest file if it finds duplicates? That may fix my problem, then I can just restore my Google drive to say the 28th of Jan or something, and voila?

-

Any update on this yet? I'm still stuck without my files

-

I can say I've managed to restore my stuff. Apparently the new update, or maybe one from two updates back, added something called authoritative chunk IDs, so there is only a metadata folder and nothing in the chunkid folder. So I used the Google business restore, which allows you to restore permanently deleted items. data-chunkIdStorage is where the chunks were saved before it migrated over; luckily I had one from the 31st, the day the migration and the corruption started. Cache is at 0 B, but I'm afraid of a reboot at this point, as moving PC made it unallocated. Moving and opening files works. Adding works as well, I think, but I put it to read-only.

1. Updating to 819 made some folders corrupt as I could not access them.

2. I ran chkdsk and it detected errors

3. I ran chkdsk /r and it deleted index and fixed errors so cloud usage showed 6 GB at this point.

4. After several troubleshooting steps, including contacting Google support, they told me that if you go to the Google Suite admin center and go to the user, you can open the three-dot menu and press restore, but you can only specify a date and not which files to restore, so you may end up with multiple chunks. As most probably know, API deletes from CloudDrive delete a file permanently, which left our files impossible to restore without going to the Google Suite admin center. I think most have the unlimited plan.

5. After restoring my fourth chunkid storage file from the 31st, it migrated into Metadata. As we know, the API does not manage versions when StableBit is editing them, which proved to be a problem if I wanted the whole drive restored, but when I was on my fifth file it managed to mount, though still with corruption errors. With the random file I tried, CloudDrive deleted the chunkid file for the migration process, and my CloudDrive was 12.1 TB. Even though some chunkid messed with my CONTENT folder, I may have multiple chunks that are different, and I believe I may have corruption due to the steps involved, I took the risk.

At this point my drive was no longer unallocated and I was able to access any file and folder. I calculated I may have around 80 MB of corruption, so I transferred over the most important stuff and am transferring the less important stuff now, and it seems stable. Remind me not to update versions as soon as they release.

I have been up all night and am pretty tired writing this; admin can edit this to make it more readable if they want. But with that said, StableBit CloudDrive is a good product which is in beta, and I've had no issues with DrivePool. Also, you may wanna wait on @Drashna before you do what I did, due to the consequences involved.

That sounds pretty painful! Let's hope they can give us a quick easy fix for this via an update!

-

When trying to enter some folders for me it says that it's corrupt. Also getting corruption errors when I try to robocopy stuff from my cloud drive across to my machine.

-

Excellent, thanks, I will give this a shot and let you know how it goes!

-

Hi Chris, I have just uploaded the drive trace. I'm having an issue where my download is BARELY getting 3 Mbps using 8 download threads.

I have a 130 Mbit connection.

Let me know if that helps.

-

Subscribe, I have the same issue at times.

-

Hi,

I have a drive pool with a local, and a cloud drive in it. I have duplication set to 2x, so it's basically a mirrored setup.

Is there a way I can set it so that ALL reading will be done off the local disk? I want to do this, because reading off of the cloud drive, while I have all the data locally doesn't make sense, and even though it's striping, it's still slower than just reading it all off the local disk...

Any thoughts about this?

Thanks

-

I'm having the same issue as @wid_sbdp. Mine is actually running 2 threads, and after 8-10 hours or so, I still have ~150GB to upload but my transfer speed is stuck at 0....

After a reboot it starts back up again.

I'm on BETA 749.

-

After a reboot, I'm also getting this, but on my entire drive.

Looking at the disk in Disk Management, it's showing my disk as RAW.

It's a ReFS partition, so how do I go about sorting this out? (Can't run CHKDSK on ReFS)

This is actually the 2nd time that this has happened to me, the first time I just recreated the disk and uploaded all the data.

But now that it has happened to me again, I'm starting to be wary about using the product even...

Any tips for this corruption? Can it be fixed without having to recreate the disk?

-

Hey Guys,

I got slow transfers with Google Drive, and set the threads to 12 up and 12 down. This worked for a while and everything was a bit faster.

For the last two days, I have been getting countless Rate Limit Exceeded exceptions, even running 1 up and 1 down thread.

I checked the Google Drive API guides online and found a section about exponential backoff.

So a few questions/thoughts:

- Is exponential backoff implemented for the Google Drive provider?

- If I set the provider to use, say, 12 up and 12 down threads, do they all get blasted out using multiple requests at the same time (causing rate limit exceptions later on)?

- Would it work to have something like a 'request gatekeeper' where you can set your own rate limits client side so that no matter how many threads you run, it always obeys that limit you set, and so that there is a 'master exponential backoff' in place?

Is there any possibility of looking at the provider implementation code? Or is this fully baked into the product? It'd be good if there was an API to allow anyone to build their own providers.

Thanks for a good product!

EDIT: Also, how will all the rate limiting work if you added say 5 Google Drives, each with 12 threads up and down? Quite quickly you will be making a TON of requests...
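
To illustrate the 'request gatekeeper' idea, here is a hedged sketch of a limiter that every upload and download thread (across all drives) would share. It is not how CloudDrive works internally; the class, its method names, and the default delays are hypothetical. It caps the request rate client side and applies one exponential backoff, with random jitter, to all threads at once.

```python
import random
import threading
import time


class RequestGatekeeper:
    """Hypothetical client-side limiter shared by all upload/download threads."""

    def __init__(self, max_per_second: float, base_backoff: float = 1.0,
                 max_backoff: float = 64.0, clock=time.monotonic) -> None:
        self._interval = 1.0 / max_per_second
        self._base = base_backoff
        self._max = max_backoff
        self._clock = clock
        self._lock = threading.Lock()
        self._next_slot = clock()   # earliest time the next request may start
        self._failures = 0          # consecutive rate-limit errors

    def reserve(self) -> float:
        """Book the next request slot; returns how many seconds to wait first."""
        with self._lock:
            now = self._clock()
            start = max(now, self._next_slot)
            self._next_slot = start + self._interval
            return start - now

    def on_rate_limited(self) -> None:
        """Push everyone's next slot back by 1s, 2s, 4s, ... plus up to 1s of jitter."""
        with self._lock:
            delay = min(self._max, self._base * 2 ** self._failures)
            self._failures += 1
            delay += random.uniform(0, 1)
            self._next_slot = max(self._next_slot, self._clock() + delay)

    def on_success(self) -> None:
        """Reset the backoff once a request goes through."""
        with self._lock:
            self._failures = 0
```

Each worker would call `time.sleep(gate.reserve())` before sending a request, `gate.on_rate_limited()` when the provider answers with a rate limit error, and `gate.on_success()` otherwise. With one gatekeeper shared by every drive, adding more threads or more drives would not raise the total request rate.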

-

^ Same here. It occurs no matter how many threads I have set.

I only have 1 up and 1 down thread set too.

-

I'm also getting a lot of Rate Limit Exceeded exceptions... Google Drive provider.

-

Hi, similar issue for me too. Computer had an unexpected shutdown (power outage).

Now it's back, but my Google Drives are 'Performing Recovery'.

I have a 600GB local cache on one, and a 500GB local cache on the other.

Any way to get these drives mounted quicker? Or is it now going to try and upload/download all 1.1TB worth of data to verify the local cache? Is there a way to dump this cache, since it's just a cache?

Thanks,

Albert

Google Drive: The limit for this folder's number of children (files and folders) has been exceeded (posted in General)

I also upgraded to 1315 and it looks like everything is working the way that it should. I have not had the issues that @darkly has reported, with the insufficient bandwidth errors.

My disk upgrade went instantly, even though I was getting the API limit error before. I did revert back to standard API keys (not using my own) before upgrading.

I haven't tried 1316 yet.

Cheers,