Posts posted by mcrommert

-

-

-

6 hours ago, Christopher (Drashna) said:

Just keep in mind that you may want to leave this running for a few hours, at minimum.

Also, USB drives are notoriously finicky.

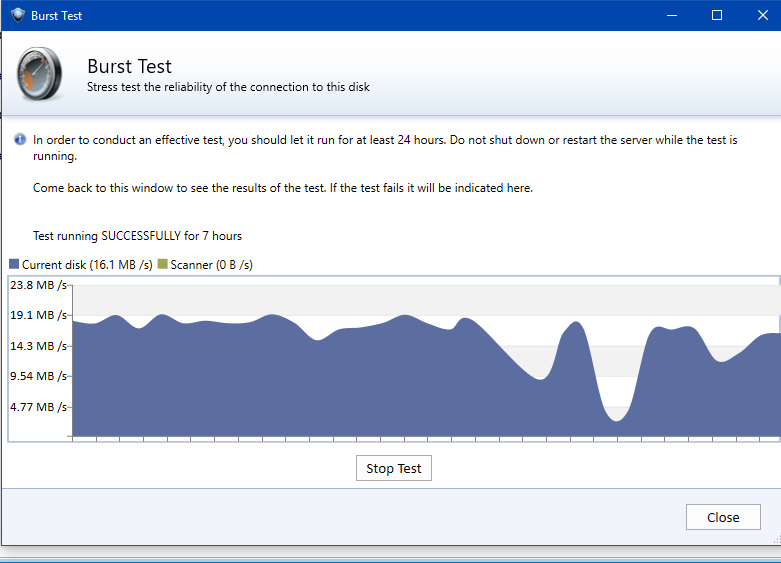

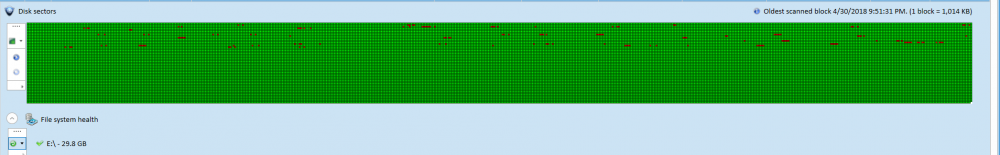

Okay, here are the results after 7 hours

-

20 minutes ago, Christopher (Drashna) said:

Just keep in mind that you may want to leave this running for a few hours, at minimum.

Also, USB drives are notoriously finicky.

Will do.

-

12 minutes ago, Christopher (Drashna) said:

^

Right click on the drive, and run the "Burst Test".

If this comes back with issues, then it's the connection to the drive that is flaky, and would be why you're seeing inconsistency with the surface scan.

Running...will report back

EDIT: may only run it for a few hours...i don't think this tiny usb drive could handle a constant 24 hour run

-

So i have read

and

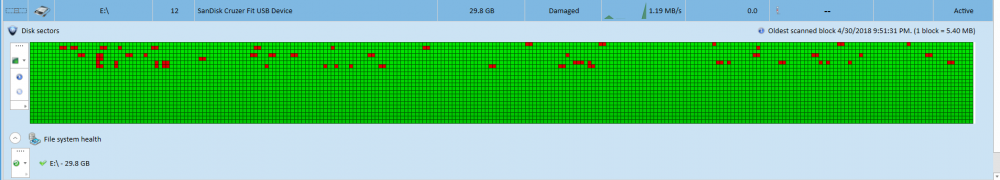

on this issue but I still don't know why I'm running into this problem. I am on Beta 2.5.2.3128, and while none of the spinning drives have problems, an older tiny USB flash drive is pulling errors.

I then ran a chkdsk /f on the drive and no errors were found or fixed after a very long run. After moving to the beta release of Scanner (I had been on stable), I cleared the drive's status (both readable and unreadable) and ran the scan again. I have the exact same errors. Just want to know how to proceed, as I had planned to continue using this drive but am now unsure whether there is any issue. I am transferring files off the drive and did have one not move correctly.

EDIT: Also it's inconsistent...this was the first scan

And this is the final - the blocks don't match up

-

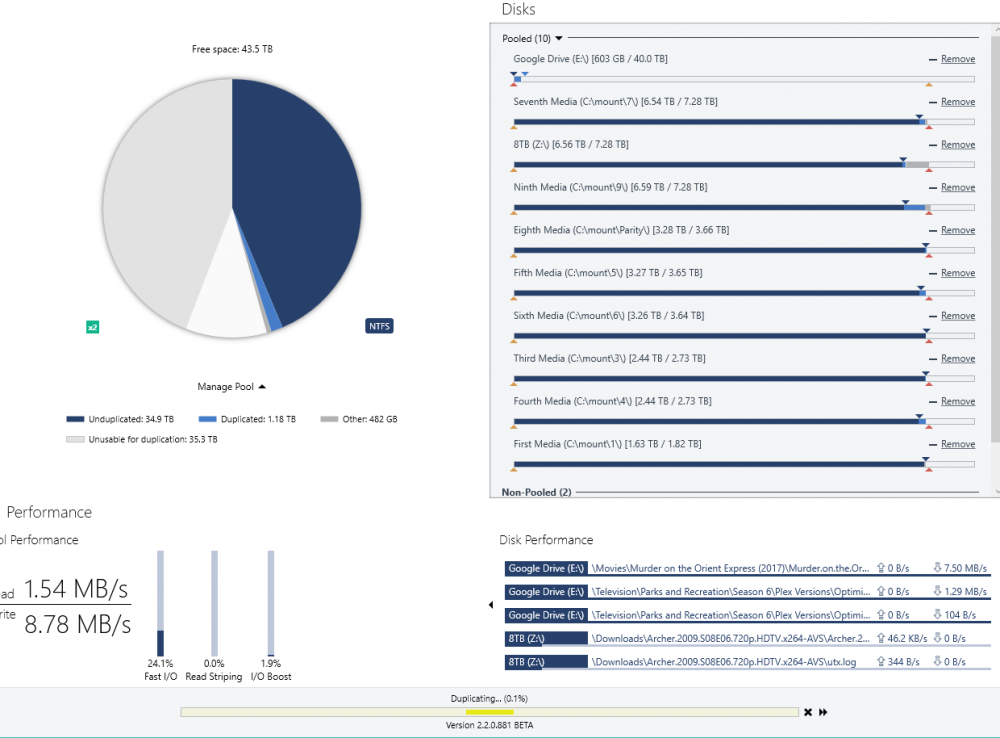

Thanks for the info - also realizing while it isn't showing the writes going to the pool when they first happen on the new pool...if i go to the old pool it is showing the writes there

With the ssd option...what is meant by "need a number of drives equal to the highest duplication level"? Do you mean i would need 40 tb of ssd's or ssd designated drives? Sorry I'm missing this.

Thanks for the help...seems to be working very well now and noticing no issues at all in reads and writes in terms of speed

And i have moved to the RC release - only complaint is inability to see disk and pool perf at the same time

-

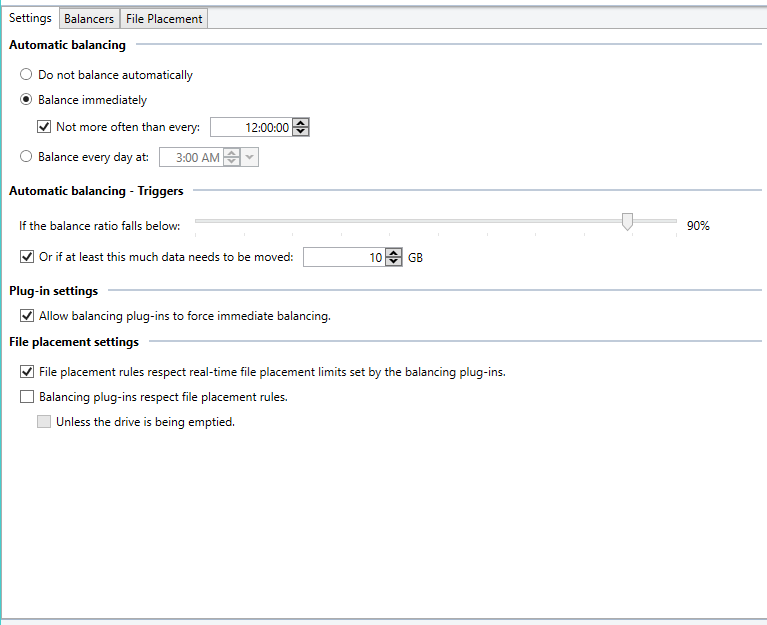

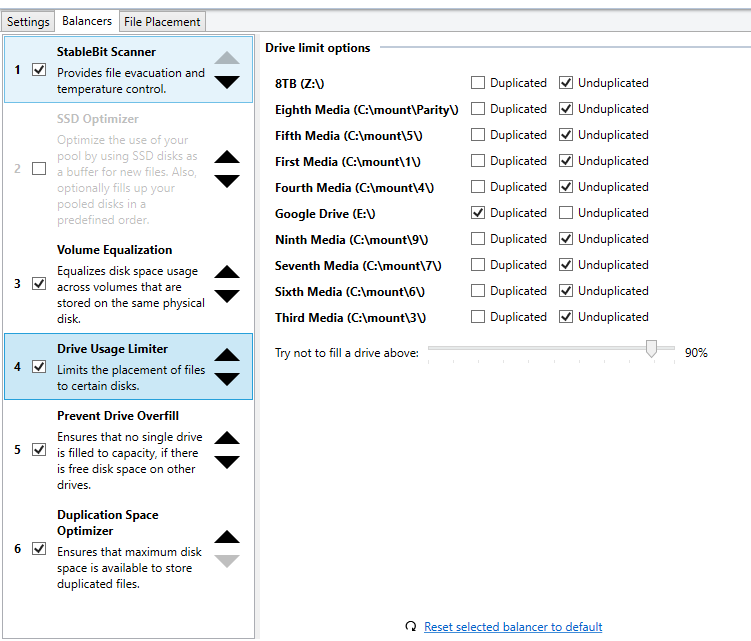

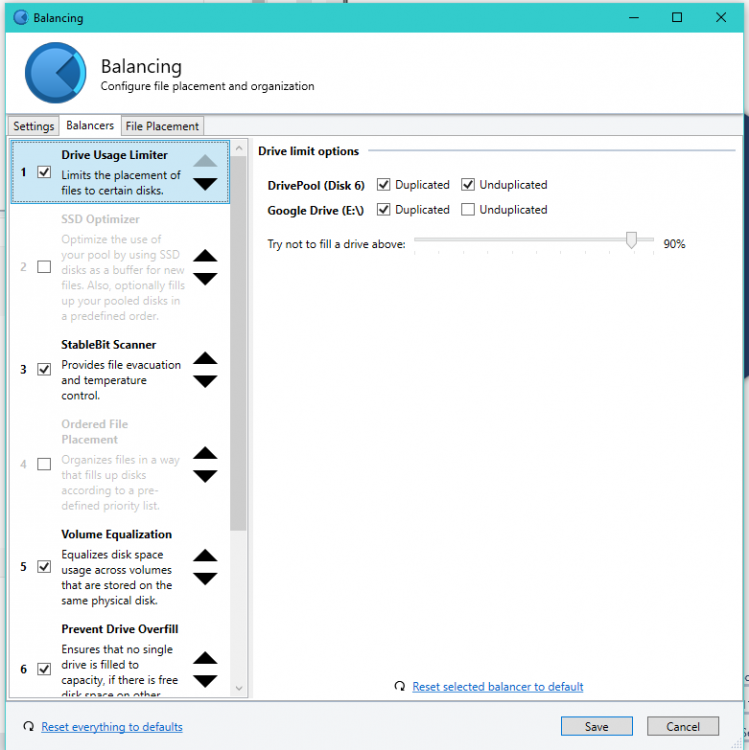

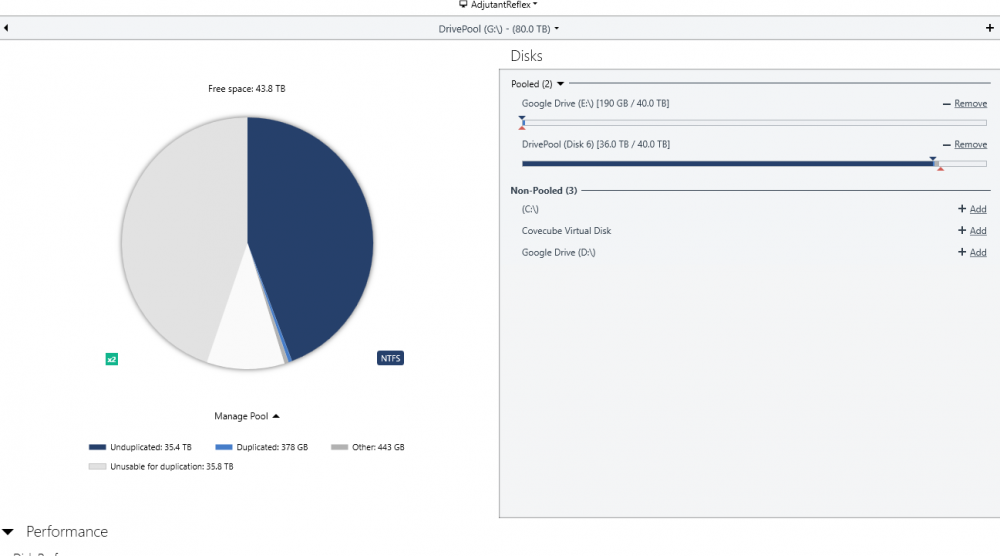

Apologies for being so confusing. I have a 40 TB pool that I have used for a long time, and I now want to duplicate its data to a 40 TB Google cloud drive. After the initial post, I realized some of my errors by reading through some of your older responses to other users. What I need is for the local pool to be prioritized for writes and reads, with everything duplicated to the cloud drive for restore if a drive fails. I have set it up again after reversing my old mistake, and I wonder if there is anything I may still have gotten wrong. From what you have said, I do need to turn off unduplicated content for the CloudDrive, which may explain why the drive thinks it's 80 TB instead of 40. Even after making these changes, though, my two big concerns are that it still shows 80 TB as the size of the drive (instead of the 40 TB that are really available) and that writes still seem to go to the cloud drive first. I have gigabit internet so that's not that big of a deal, but any advice on my setup would be very welcome.
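On the 80 TB vs 40 TB figure, here is a rough sketch of the arithmetic. It assumes (my assumption, not something confirmed in this thread) that the pool size shown is simply the raw sum of its members, and that with 2x duplication every file takes space twice. The function name is made up for illustration.

```python
def usable_capacity_tb(member_sizes_tb, duplication_level):
    """Rough usable capacity when every file is stored duplication_level times.

    Assumes the pool size is the plain sum of its members and ignores
    filesystem overhead; this is an illustration, not DrivePool's own logic.
    """
    if duplication_level < 1:
        raise ValueError("duplication_level must be at least 1")
    return sum(member_sizes_tb) / duplication_level


# Hypothetical layout from the post: a 40 TB local pool plus a 40 TB cloud drive.
members_tb = [40, 40]
raw_tb = sum(members_tb)
usable_tb = usable_capacity_tb(members_tb, duplication_level=2)
print(f"raw size: {raw_tb} TB, usable with 2x duplication: {usable_tb} TB")
```

Under those assumptions an 80 TB reported size and 40 TB of real room for files are the same setup seen from two angles.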

-

Okay, I followed the directions in those posts, but my main issue now is that even with the file placement balancer I cannot get the hard drives to take the files first. It always shows my DVR recordings being saved to my cloud drive as they are being recorded

-

Started a duplication of my 40 tb drivepool and read this answer which confused me and seems to contradict how i'm going about this

I made a 40 tb clouddrive and thought that if i selected the original drives as unduplicated and the cloud drive as duplicated and selected duplication for the entire array it would put a copy of every file in the original pool on the cloud drive. If a drive failed i could easily rebuild from the cloud drive. Here are some settings

In that other example you told the user to make another pool with the original pool and the cloud drive and set duplicated and unduplicated on both. I am missing the (probably easily apparent) issue with my setup. Also if i do need to do that what is the most efficient way to start that work without losing anything or having wasted time uploading to cloud drive

-

16 tb just left as a single partition as built

-

Yeah i checked but the folder was never made - might be from the insufficient space message on the chkdsk

Any understanding why this could happen? I now also see there is 101 MB that won't upload...but I'm uploading so much to reform the drive that I'm getting all sorts of rate limiting and so forth. I have also moved to the RC for those features

-

Okay will continue to update this

- Built new drive and transferring old contents over plus downloaded backups to rebuild...Still mostly concerned on what brought on this behaviour and what i can do to prevent it in the future. Since the initial file disappearance there may have been more files also but probably more to do with me not seeing the extent of the files missing


-

-

Okay did some checking...the drive mounted to my computer gives the size of the used space as 4.36 tb...this is similar to what the backblaze program reports. When I do a simple file size calculation though it is around 3.99 tb. Running Windirstat finds 398 gb of unknown taken space. This gets stranger and stranger

Also realizing it's not just folders after p that have the issues...some of the folders still exist but everything inside of them is gone
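For anyone wanting to repeat the size comparison above (used space reported by the drive vs the sum of the files you can actually see), here is a minimal Python sketch. The function names are mine, and it only counts logical file sizes, so cluster rounding and filesystem metadata will always leave some gap. With the numbers from this post the gap is 4.36 TB - 3.99 TB = roughly 0.37 TB, which is in the same ballpark as the 398 GB WinDirStat calls unknown.

```python
import os
import shutil
import sys


def total_file_bytes(root):
    """Sum the logical sizes of all files that can be seen under root."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                total += os.path.getsize(os.path.join(dirpath, name))
            except OSError:
                # File vanished or is unreadable; skip it rather than abort.
                pass
    return total


def unaccounted_bytes(root):
    """Used space on the volume holding root, minus the visible file total."""
    return shutil.disk_usage(root).used - total_file_bytes(root)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Pass the mounted drive's root as the first argument.
    gap = unaccounted_bytes(sys.argv[1])
    print(f"unaccounted space: {gap / 1e9:.1f} GB")
```

The result only makes sense when the path given is the root of the volume, since the used-space figure covers the whole volume.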

-

I did upload about 75 gb worth of files to that folder earlier today...don't know if that could be related

-

So i have been using Google drive on Cloud Drive for months and months and all of a sudden today realized when i ran a clean on my plex server that loads of files are missing. Since i run this daily they were available yesterday and earlier today. In one video folder every folder with a name that is after p in the alphabet is missing. In another folder inside that same folder half the files are missing. It only seems to affect one folder and its subfolders on the drive and i have no explanation for its disappearance. Having done a reboot earlier today it may have happened after that reboot with the mounting of the drive, but none of the missing files are in the recycling bin and unmounting and remounting has no effect. I have a few questions.

- Is there anything I could have done wrong, and how do I keep this from happening in the future?

- Is there a way to rescan the drive (??) in order to see if the file deletion is permanent?

- I have all the files backed up on backblaze so thats not a big deal but should i build another drive instead of putting the files back on this drive?

Thanks for the help.
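One way to catch this kind of silent disappearance earlier is to keep a daily listing of the drive and compare it against the previous one. This is only a sketch under my own assumptions (the function names are invented, and it compares file paths only, not contents), not anything built into CloudDrive.

```python
import os


def snapshot(root):
    """Return the set of file paths under root, relative to root."""
    found = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            found.add(os.path.relpath(full, root))
    return found


def save_manifest(paths, manifest_file):
    """Write one relative path per line so snapshots can be compared later."""
    with open(manifest_file, "w", encoding="utf-8") as f:
        for path in sorted(paths):
            f.write(path + "\n")


def load_manifest(manifest_file):
    """Read a manifest written by save_manifest back into a set."""
    with open(manifest_file, encoding="utf-8") as f:
        return {line.rstrip("\n") for line in f if line.strip()}


def missing_since(previous, current):
    """Paths that were present in the previous snapshot but are gone now."""
    return sorted(previous - current)
```

Run `snapshot` on the mounted drive before a scheduled job such as the Plex clean, save it, and the next day `missing_since(load_manifest(...), snapshot(...))` lists anything that vanished in between.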

-

Ignore the error.

Open the balancing settings.

I'd be willing to bet that the balancer is already there.

Yep you got it...seemed to not install anything hence my confusion

-

Quick question - The current download version doesn't seem to want to install with the current 2.2 beta version of drivepool. Am i missing something?

-

Okay so today the drive reattaches...but there is 8 gb of material to upload (according to the app) and it is not doing so

EDIT: So somehow in I/O i unchecked upload threads...why would you ever turn off upload? Sorry for the bother on these questions

-

-

So wanted to get the temperature on this issue. I have a 10 TB mount on Google Drive that just keeps unmounting...I even reauthorized it. It will quickly and easily mount, but if I try to pull or upload any data it will open 1-8 threads and no data will flow. It always says 0 bps despite trying to pin data. After a few seconds of not pulling data it unmounts with the error "The drive was forced to unmount because it couldn't read data from the provider". I tried to detach the drive, but apparently I have 7.8 GB of data waiting to upload. Before it unmounts it does pop "The download quota for this file has been exceeded" on both upload and download.

So should i continue to work on this or have i somehow gotten softbanned from Google Drive...I haven't really done anything abnormal today and it has been working for a few months no problem.

-

Yeah i should have also written i tested with Junction links too...same issues

Good resource on why you should use one over the other.

But yes it was working before and something in the new version or something i changed or the update broke it

-

Okay first i'm the same person as mcrommert2

finally got my correct account found

So turning off BitLocker on the cloud drive fixed my issue. Apologies for not getting back sooner to report my changes. It seems to no longer do the constant checks on all 8 of the drives in my DrivePool

Thank you so much for the quick responses...have used many pieces of software from smaller vendors but have never gotten this level of customer service on forums. Thank you so much and keep making great products

-

I purchased drivepool because even though i like flexraid, they are slow to catch up and i need win 10 pooling right now. Tested a few months ago and it didn't work, but read here that it was now working. Because i already used the trial i couldn't test so i bought drivepool. It is still not working for me...the drive shows up but there seem to be no files in it. Any recommendations?

Missing Drive/Failed Drive behavior


Okay, just a question on how i should expect Drivepool to react in my current setup when one of my drives eventually fails and has to be pulled

So this is my current drivepool...it is made up of a 40 tb drivepool and a 40 tb clouddrive...i am almost done with the initial seeding of the cloud drive

So my question is mainly...if a drive fails on my initial drivepool will the clouddrive be queried for all the missing files or do i need to manually do something? My goal would then be to add another harddrive and let it restore from the clouddrive. I have real backups also on backblaze...but i set this up for nearly zero restore time in the case of hardware failure. Thanks for any help/advice.