klepp0906

Members · Posts: 120 · Days Won: 5

Everything posted by klepp0906

-

So before I bother setting these cloud drives up again, I need to know whether options exist to make this less of an issue. Last night I did some testing that I knew could end badly (a lot of registry work), and instead of keeping track of my changes, my plan was simply to "reset" by restoring an image I'd taken beforehand. Well, I did just that and was immediately hammered with I/O errors in the CloudDrive UI. I tried clearing caches, re-authorizing, reconnecting, and every other option it gave me short of destroying the drives, and in the end the errors from the providers could not be stymied. Fortunately I'm just getting started with CloudDrive, so nothing of value was lost, but I'm curious: is this to be expected, and would it happen any time I restore an image? It's not like I rolled back through several versions of CloudDrive or weeks of time. The image was from earlier that same day, and nothing was added to the cloud drive(s) in the interim.

-

Okay, what am I doing wrong? I edited bitlocker+poolpartunlockdetect in the JSON from true to false and saved it. DrivePool immediately reverted the change. I stopped the service and changed it, but upon restarting the service, DrivePool immediately set it back to true.
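A minimal sketch of making that edit with the service stopped, assuming (not confirmed in this thread) that each entry in the settings JSON is an object with separate `Default` and `Override` fields, and that a change only sticks when it goes into `Override` rather than `Default`. The file path and the key spelling `BitLocker_PoolPartUnlockDetect` in the usage comment are assumptions; check both against the actual file before running anything.

```python
import json
from pathlib import Path


def set_override(settings_path: Path, key: str, value) -> None:
    """Write `value` into the Override field of one setting, leaving Default alone.

    ASSUMPTION: each setting is stored as {"Default": ..., "Override": ...}.
    Stop the DrivePool service before calling this, then start it again.
    """
    # utf-8-sig tolerates a byte-order mark, which Windows tools sometimes add.
    data = json.loads(settings_path.read_text(encoding="utf-8-sig"))
    entry = data[key]  # raises KeyError if the setting name is misspelled
    entry["Override"] = value
    settings_path.write_text(json.dumps(data, indent=2), encoding="utf-8")


# Hypothetical usage (path and key spelling are assumptions, not confirmed here):
# set_override(Path(r"C:\ProgramData\StableBit DrivePool\Service\Settings.json"),
#              "BitLocker_PoolPartUnlockDetect", False)
```

The point of the design is that `Default` is left untouched, so if the service rewrites defaults on startup, the override (under the assumption above) is what it should honor.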

-

So 99% of the package is user experience or some such, right? I believe someone once told me that's what's taught in dev school or something. Anyway, shameless request: we all like polish just as much as we like things that do what they say and say what they do, hassle-free. It would be great to be able to somehow hide or clean up the list of providers. For a whole slew of us (dare I say the majority), the enterprise options will never be used or useful, and it would be great to weed them out to keep the list of potential providers concise and organized, whether through a hide/unhide system, a categorization system, or blanket checkboxes to show enterprise, personal, server/hosted/local, etc. Just a thought. Also, since I'm incredibly aware of how many questions I've had lately, and these forums are pretty much the only outlet for them (there's no Discord or subreddit I'm aware of), I'm going to condense. Is there any reason Scanner uses conventional Windows frames while CloudDrive and DrivePool use something different/custom? Are they older than Scanner and just haven't been brought in line yet, or is there another reason?

-

So, using the example(s) from my other posts as I figure this thing out: let's say I have 1TB of files in my Dropbox cloud drive. I want to expand the drive, whether via cluster size, chunk size, or whatever. It's become apparent that I can only change these when the drive is created. How do I "sync up" the data from the cloud drive I'd be destroying to the new one I'd be creating? Is it something like: 1) create a new cloud drive with the preferred chunk/sector size, 2) move the old files to the new cloud-xxx-xxx-xxx folder on the new drive, 3) destroy the old drive?
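If it's the files themselves that need to move, one way to handle step 2 is to copy through the two mounted drive letters (the provider-side folders presumably hold the drive's chunked, encrypted data rather than plain files) and compare everything before destroying the old drive. A minimal Python sketch, assuming both cloud drives are mounted at once; the `E:\Backups` and `F:\Backups` paths in the usage comment are hypothetical:

```python
import filecmp
import shutil
from pathlib import Path


def copy_and_verify(src: Path, dst: Path) -> list[str]:
    """Copy the tree under src into dst, then list files that are missing or differ.

    An empty result means every file under src exists in dst with identical bytes.
    """
    shutil.copytree(src, dst, dirs_exist_ok=True)  # needs Python 3.8+
    mismatches = []
    for f in src.rglob("*"):
        if f.is_file():
            rel = f.relative_to(src)
            target = dst / rel
            # shallow=False compares contents, not just size and timestamps.
            if not target.is_file() or not filecmp.cmp(f, target, shallow=False):
                mismatches.append(rel.as_posix())
    return sorted(mismatches)


# Hypothetical usage: E: is the old cloud drive, F: the new one.
# problems = copy_and_verify(Path("E:/Backups"), Path("F:/Backups"))
# Only destroy the old drive once `problems` is empty.
```

Note that the byte-for-byte check reads every file from both drives, so on a 1TB cloud drive it will pull a lot of data back through the cache; a shallow compare is faster but only checks size and timestamps.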

-

Thanks for that; it appears I skimmed too fast. I knew I could have several connections to the same provider, but I was unsure whether that was possible from the same account. That answers that. As for the other part, I did know you couldn't mount the same drive on multiple computers, but I was unsure exactly what "same" meant, hence the confusion. It would be a different drive, probably with the same letter, but created on another computer using the same cloud provider and account. I just wanted to know whether I could access the data put there by the other one if need be, in a disaster scenario like being unable to access the other PC.

-

Yep, I'm in the process of pulling down/changing my cloud drives as we speak. Sometimes we learn the hard way.

-

It appears you can update almost everything on a cloud drive. The only thing you cannot change is the chunk size? I should have done my research before creating all my cloud drives; now it looks like I'm going to be redoing them all.

-

Let me explain. I use two PCs in perpetuity. When I rebuild the first, it becomes the secondary, and the old secondary goes to my kids or the spare parts closet, etc. I take the image from the "current" primary and restore it to the "new current" one I build, and at that point the "new secondary" does its own thing and needs its own backups. I just set up CloudDrive on my main PC as a means to ship Macrium backups to the cloud, since Macrium cannot back up to Dropbox directly (at least not when Dropbox is on the system drive being backed up). I want to be able to do this on the secondary as well. If something were to happen to the secondary and I needed access to its cloud backup, could I get to it from my main PC? If both had CloudDrive installed, each with its own license, and assuming both can connect to the same Dropbox account, could one PC see the other's data in Dropbox and pull it down/decrypt it for use? If the answer is yes, how does that appear in the Dropbox folder? Would I see, on the other PC, a second apps/stablebit clouddrive/cloudpart-xxx-xxx-xxx-xx folder in my Dropbox folder?
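To check whether a second drive shows up as its own folder, a small script can list the data folders in the app folder. A sketch assuming, as guessed in the post, that each cloud drive gets its own `CloudPart-…`-style folder under `Apps/StableBit CloudDrive` in the synced Dropbox folder; the function name and the example path are hypothetical:

```python
from pathlib import Path


def list_cloud_parts(app_folder: Path) -> list[str]:
    """Return the names of subfolders starting with "CloudPart-" (case-insensitive), sorted."""
    return sorted(
        p.name
        for p in app_folder.iterdir()
        if p.is_dir() and p.name.lower().startswith("cloudpart-")
    )


# Hypothetical usage:
# list_cloud_parts(Path.home() / "Dropbox" / "Apps" / "StableBit CloudDrive")
# Under the assumption above, drives created on two PCs would appear as two names.
```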

-

I know how they like to lock things down, and I'm unsure how much that comes into play. Figured I'd ask, as I'd love to see it added.

-

I do have it somewhat sorted now. It's on the back burner until I get the DrivePool + SnapRAID configuration sorted out for the long haul, though. I was able to ascertain that the limit is per cache task, so that was a win. As for PrimoCache vs. the SSD Optimizer, the latter is just a pseudo write cache of sorts. It's preferable to using PrimoCache as a write cache, since with PrimoCache there's a pretty reasonable risk of data loss if you have a computer crash, power outage, or something similar. The SSD Optimizer won't help with read speeds, though; that's where PrimoCache shines, and that's why it's what I'll be using. Read speeds and latency off spinners matter more to me than quicker writes. Gotta be honest, though: the decision is closer than I thought, due to having some crappy SMR drives mixed in until I get around to upgrading. That won't be soon, given current hard drive prices.

-

So the data in the cloud would be unaffected; that's what my concern was about. I guess unless it was mid-upload or something, but the likelihood of that is probably minuscule, to be fair.

-

So my primary use case for CloudDrive is to shuttle backups to my Dropbox. Dropbox is on my system drive, and you can't back up images to a destination on the same partition you're backing up. I can't/won't move it to another disk for a plethora of reasons beyond the scope of this post. I realize my backup will be encrypted and that I'll need CloudDrive installed to access it, but that's the least of my worries if I ever need to use it, lol. Anyway, what happens if the drive holding the cloud drive's local cache fails? Nothing, presumably? Is the data still safe in the cloud?

-

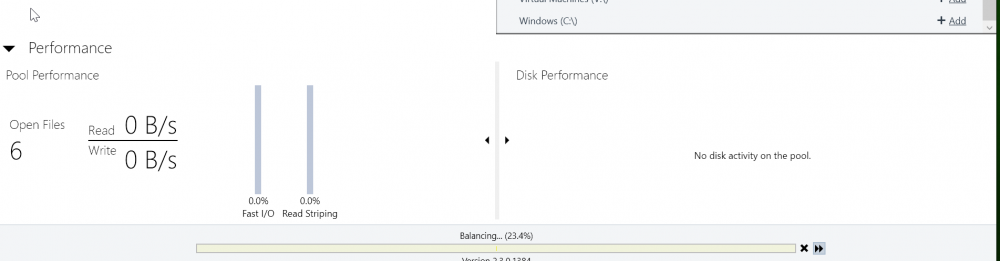

Ah, makes sense. So if I disable automatic balancing and the pool becomes "not" balanced, the option to initiate a balancing pass will appear in that location?

-

So I'm trying to understand the settings a bit better to determine how far I need to deviate from the defaults, if at all. This is especially important to me because balancing can severely impact the viability of SnapRAID, so I'm postponing my first sync until I have it sorted and fully understand the implications of my DrivePool settings. Many of the plugins offer the option to not fill a drive past xxx%. It's pretty universally accepted not to fill drives beyond 90%, and I believe that's the default anyway. 90% applies to 16TB disks, and it applies to 8TB disks: keep 10% free for optimal functioning/health, etc. DrivePool itself gives an example around these settings that I'm failing to grasp. "Try not to fill a drive above 90%" is easy and ideal. The wrench gets thrown in right below it: "Or this much free space", which is set to 100GB by default. On the overwhelming majority of disks these days, 100GB is going to be well under 10%. Even the example ("setting 90% full / 100GB free on a 2TB drive will prevent it from being filled beyond 1.9TB (100GB free)") leaves only 5% free, well under the 10% set above. What am I failing to understand? It's evident that "Or this much free space" takes priority, since 10% of a 2TB disk would be leaving 200GB free. Why is having this checked the default, though? That would be filling disks to the absolute brim in most cases. I assume most people would want this option unchecked, or? I'm just trying to better understand the pros/cons/implications.
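The two limits in that example can be compared side by side. A small Python sketch that computes the cap each rule implies on its own, assuming 1 TB = 1000 GB; which rule DrivePool actually applies when both are set is the open question in the post, so the code deliberately does not pick one. The function and key names are illustrative only.

```python
def fill_caps_gb(disk_gb: float, max_fill_pct: float, min_free_gb: float) -> dict[str, float]:
    """Compare the two 'don't fill past' rules for one disk, each on its own."""
    return {
        # Most data the percentage rule allows on the disk.
        "percent_rule_cap_gb": disk_gb * max_fill_pct / 100,
        # Most data the free-space rule allows on the disk.
        "free_space_rule_cap_gb": float(disk_gb - min_free_gb),
        # How much of the disk the free-space rule keeps empty, as a percentage.
        "free_space_rule_pct_free": min_free_gb * 100 / disk_gb,
    }


for size_gb in (2_000, 8_000, 16_000):
    print(size_gb, fill_caps_gb(size_gb, 90, 100))
```

For the 2TB example this gives 1800 GB under the 90% rule and 1900 GB under the 100GB rule, which matches the 1.9TB in the quoted example; on a 16TB disk, 100GB keeps only 0.625% of the disk free.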

-

The manual seems to indicate I should have an up arrow that lets me manually run a balancing pass if I choose. I disabled automatic balancing, and the bar is still just a bar.

-

I asked a few days ago whether, if I had them and the iOS app both installed/enabled, one would override the other or I'd get both. You answered both. That wasn't the case. Today I disabled all notifications except SMS, and removed and re-added my number. I did not get the confirmation it suggested I should, but I figured that may be because I had already linked it (I can't remember if I got one initially). Either way, I tried sending the test notification instead. Still radio silence. I'm not sure whether it's not functioning, I declined something by accident, or it's some kind of conflict, hence my post.

-

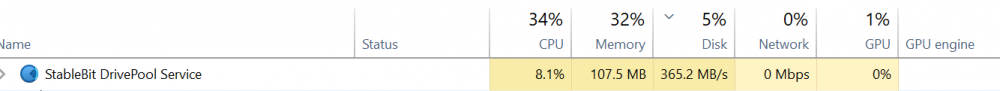

My Performance tab is dead empty even though I certainly have activity going on. Is this because they're not internal drives and are connected via an HBA or something? EDIT: Never mind. It appears that when other things are using the pool, activity does show up (the Plex scanner is showing up now). I guess DrivePool's own operations just don't show activity.

-

And with any kind of power loss before the data got shipped off (or completely shipped off), DrivePool would still see it there and resume/re-send if need be? Sounds like PrimoCache is my solution if I want a read cache, and DrivePool is the solution if I want a write cache.

-

Yes, I believe it does use journaling. As for the context menu, yeah: if you right-click on a file or directory, you could choose a StableBit-specific entry that takes you to the underlying disk/path in the pool, wherever it's mounted, etc. It would be super convenient and oft used, I'm sure.

-

Yeah, I went with the bay number of the chassis.

-

So I’m trying to plan and look ahead a bit now that I’ve made the leap. Migration to another PC is in my near future. I back up my system with Macrium, and my intent is to restore that image to the new PC. Obviously the hardware will change, but the image will be from my current PC. Will I be fine, or will I need to deactivate here first and then create the image to restore, or… what would my process be to carry on uninterrupted? (I definitely don’t want my settings to be lost, etc.)

-

Ah, maybe I'll look into another solution (storage provider-wise) purely for the Macrium images, then. While that seems like a pain (and more overhead, running several cloud providers in tandem), it also seems cumbersome to, say, lose my local images (perhaps my PC dies and I build a new one) and have to go to my in-laws' house -> deactivate my DrivePool license (from the dead PC) -> install on their PC -> download the image -> deactivate the license -> uninstall from their PC, etc. I guess in a catastrophe that's all small potatoes, though. I'm just trying to find the most convenient, sensible, non-cumbersome way to do cloud backups. I just finished my first SnapRAID sync and was forced to exclude my Macrium images directory due to its ever-changing nature with consolidations and purges. I couldn't risk it torching data on 24 other drives should one go down after Macrium decides to purge 1TB worth of old backup sets but before a sync (since Macrium has no option to purge to a directory or recycle bin, etc.). Anyhow, I'm digressing. I appreciate the reply!