sfg

-

I don't think so. I have the cache balance to the other disks about once a week, well before it fills up.

-

Interesting, I didn't know this. Does that apply even on Windows Server editions? Can you elaborate at all on torrents in the pool?

-

Hi, I have DrivePool 2.2.5.1237 installed with ~11 drives, with no duplication or file placement rules. The only balancing plugin enabled is the SSD Optimizer, for an SSD I use as a 'cache' drive for the pool. I have ~3,600 torrents seeding, all of which reside in the pool (B:\) on one of the 11 spinning drives. In the past, qBittorrent would occasionally log "error: the device is not ready". According to Scanner, this coincided with unreadable sectors on one of my drives, and after I removed that drive, everything was fine. I am now getting the same error in qBittorrent, but Scanner reports all drives as healthy. Could some sort of DrivePool driver error be causing this? Any help would be appreciated. Thanks

-

Sorry to resurrect this, but did you resolve it? I'm having the exact same issue. I can barely even open File Explorer while the pool is being measured (and measuring takes a very, very long time). Is a drive perhaps failing? I have no SMART errors.

-

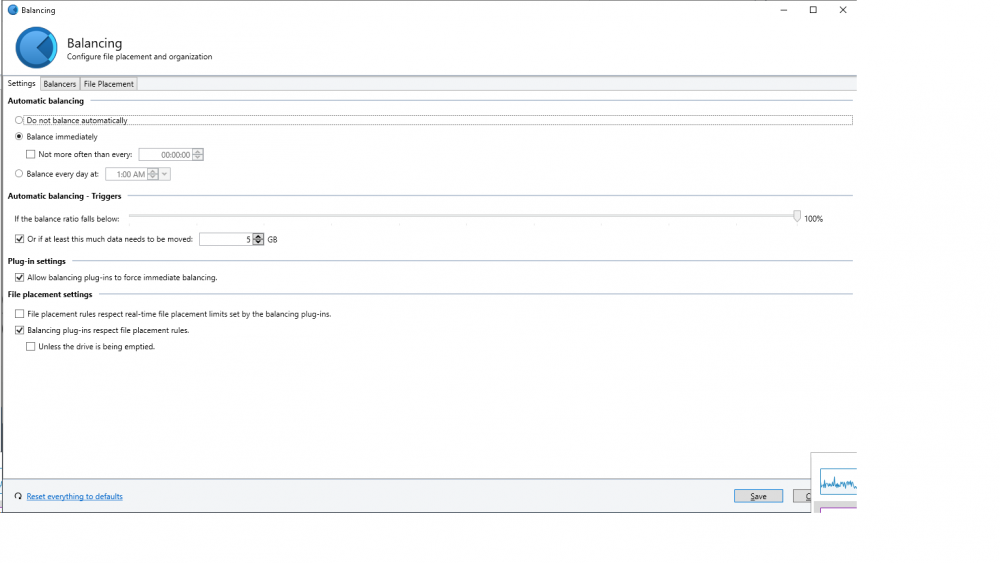

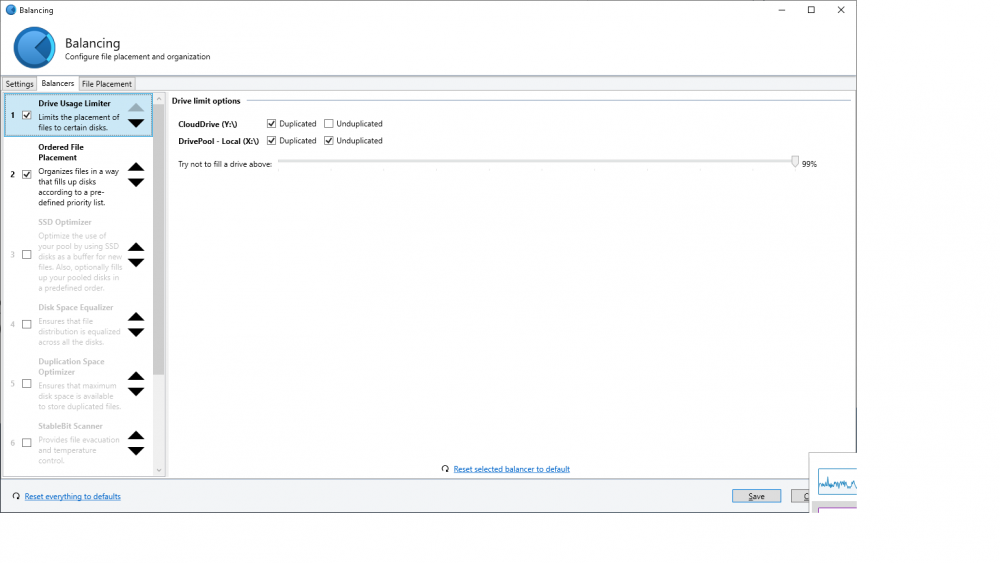

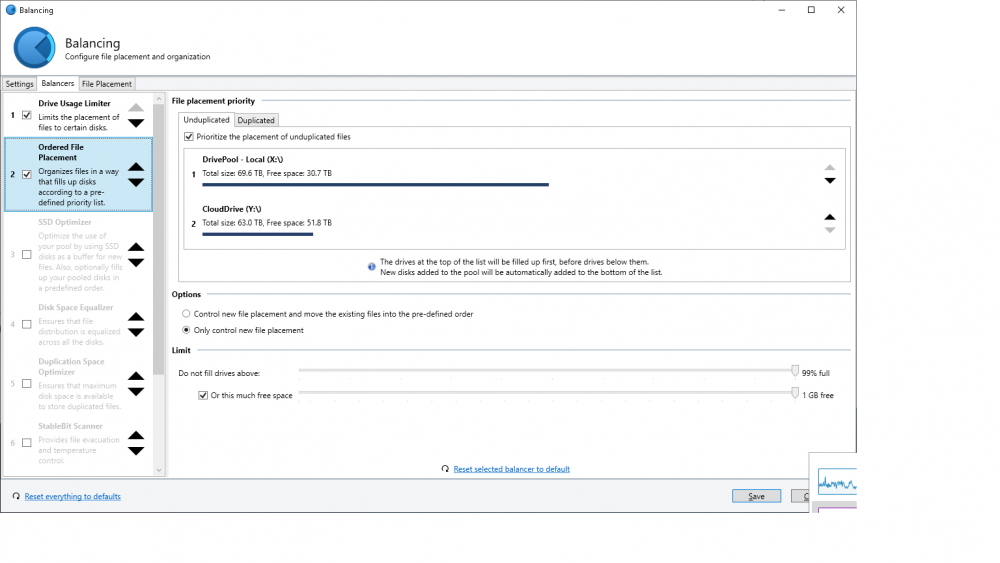

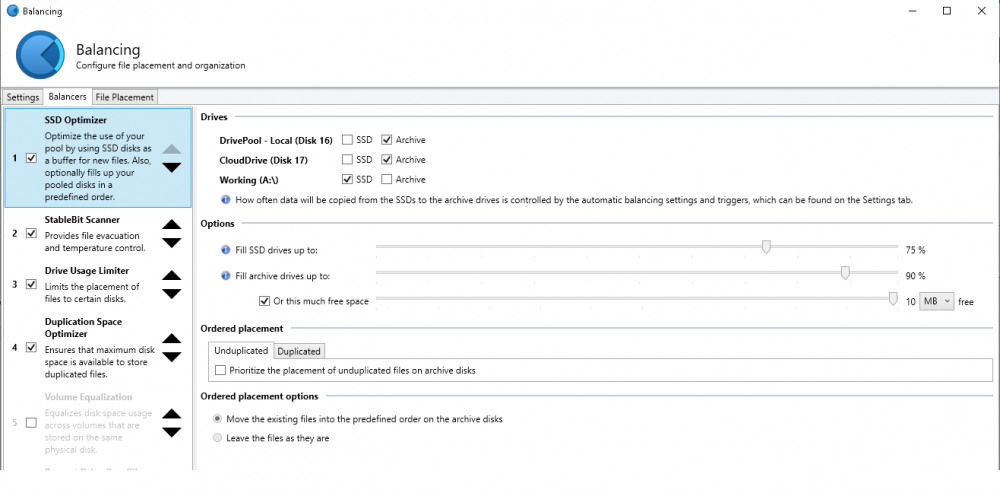

Hmm, maybe I don't have it working after all... The only two balancers I have enabled now are "ordered file placement" (with my local pool listed first) and "drive usage limiter" ('duplicated' enabled on the cloud drive, and both 'duplicated' and 'unduplicated' enabled on my local pool). Every time I copy files to my pool, they go to the cloud drive first, which copies very slowly. I'd like all new files to be placed on the local pool first and then duplicated to the cloud drive overnight. How can I get this to work properly?

-

I think I've got it. No worries, everyone. Thanks!

-

Hi, I know that once a partition has been created (e.g. on Google Drive), its cluster size cannot be changed. I'm wondering whether it is possible to create a new partition with a larger cluster size and migrate the data I have already uploaded to it server-side. I would like to have partitions larger than 64 TB in Windows, but I realized this too late, after I had already uploaded almost 50 TB. Thanks!
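For reference on the 64 TB figure: NTFS can address at most 2^32 - 1 clusters per volume, so the largest volume is roughly the cluster size multiplied by 2^32. The sketch below assumes NTFS and binary units (Windows reports TiB as "TB") and only shows that arithmetic. It says nothing about CloudDrive's own limits or about whether a server-side migration is possible.

```python
KIB = 2**10
TIB = 2**40
MAX_CLUSTERS = 2**32 - 1  # assumed NTFS limit on clusters per volume


def max_volume_tib(cluster_bytes: int) -> float:
    """Approximate largest NTFS volume, in TiB, for a given cluster size."""
    return cluster_bytes * MAX_CLUSTERS / TIB


# Prints roughly 16, 32, 64, 128 and 256 TiB for 4 to 64 KiB clusters.
for kib in (4, 8, 16, 32, 64):
    print(f"{kib:>2} KiB clusters -> ~{max_volume_tib(kib * KIB):.0f} TiB")
```

Under this assumption, a volume that tops out at 64 TB points to 16 KiB clusters, and 64 KiB clusters would raise the ceiling to about 256 TB.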

-

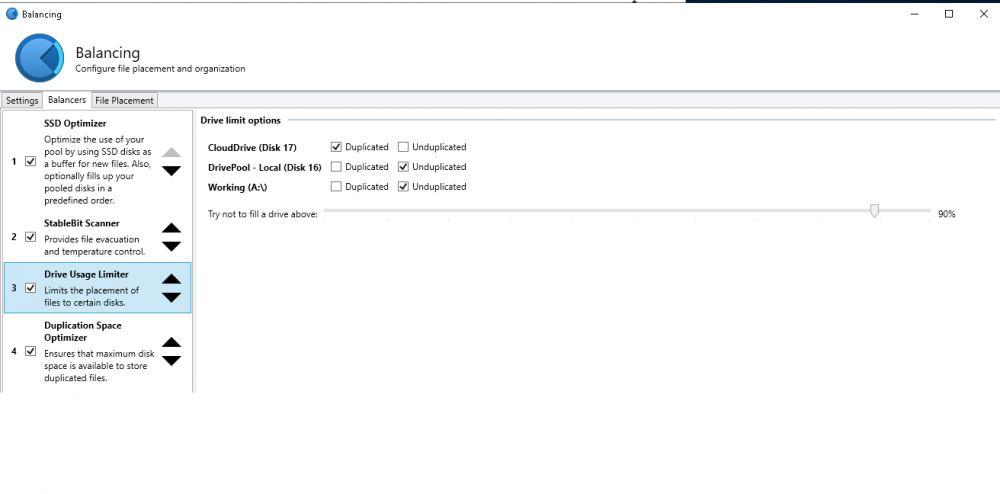

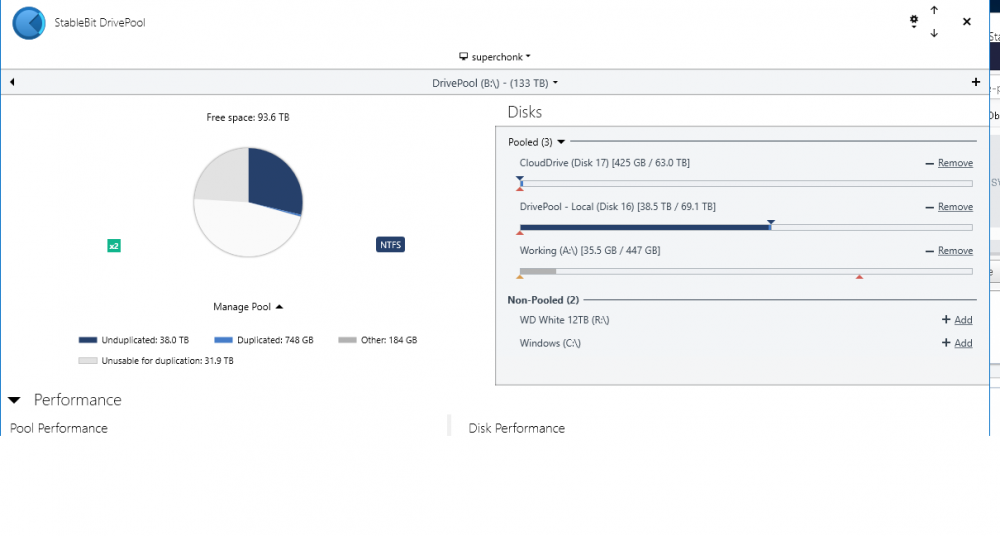

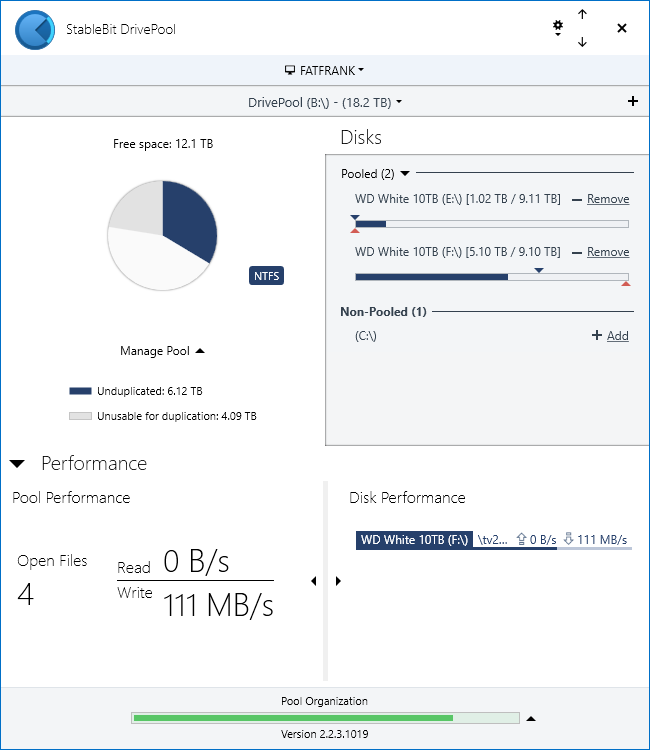

Ok, I think I have some things figured out. I created a CloudDrive, then created a new pool containing my old pool and the CloudDrive. I only allowed unduplicated data on my local pool, and duplicated data on the cloud drive. It appears to be syncing now, so we'll see whether this is all a waste of bandwidth or is doing what I want...

This brought up a few more questions, though. I have an NVMe drive that I used before as the landing spot for all new files, which were then transferred to the spinning-disk pool. Is it possible to use it with my new pool? I want it to work like this: new data -> NVMe -> local spinning-disk pool -> duplicated to the cloud drive.

1. I added the NVMe drive to my new pool (with 'old pool' and the CloudDrive) and enabled the SSD Optimizer. Will this work as I intend?
2. I've attached my drive usage limiter and SSD Optimizer settings. Do these look right?
3. Why is such a large portion of my pool 'unusable for duplication'? If I add up unused space, used space, and unusable-for-duplication space, the total is much larger than my pool plus the CloudDrive. Is this correct?

Thanks!
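On the 'unusable for duplication' question: with 2x duplication, the two copies of each file have to live on two different pool members. Space on the largest member that cannot be paired with free space elsewhere therefore cannot hold duplicated data. The sketch below is a simplified model under that assumption (data can be split freely, and every byte needs exactly two copies on distinct members). It is not how DrivePool computes the figure it displays, and the sizes in the example are hypothetical.

```python
def max_duplicated(capacities: list[float]) -> float:
    """Largest amount of data that fits with two copies on distinct members.

    Simplified model: data can be split freely, and each byte needs one copy
    on each of two different members. The limit is then the smaller of half
    the total space and the space outside the largest member.
    """
    total = sum(capacities)
    return min(total / 2, total - max(capacities))


def unusable_for_duplication(capacities: list[float]) -> float:
    """Raw space left over once max_duplicated() worth of data is stored twice."""
    return sum(capacities) - 2 * max_duplicated(capacities)


# Hypothetical sizes in TB: a 60 TB local pool and a 50 TB cloud drive.
print(max_duplicated([60, 50]))            # 50
print(unusable_for_duplication([60, 50]))  # 10
```

In this model, a 60 TB local pool paired with a 50 TB cloud drive can hold only 50 TB of duplicated data. The remaining 10 TB on the local side has no partner to pair with.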

-

Hi, I know this has been covered about a million times, but I'd like to clarify a few things about DrivePool and CloudDrive (Google Drive) before I rearrange my existing pool configuration and upload a large amount of data. I would like to duplicate this entire pool to my Google Drive, and I'm trying to decide whether it would be best to use CloudDrive or rclone at the disk level. I would prefer CloudDrive if possible, as it seems the most automated and the easiest to use.

I have an existing local pool of ~14 drives with ~42 TB used (all unduplicated), mounted as B:\. I created a Google Drive cloud drive large enough to back all of this up, although I did not mount it as a lettered drive in Windows (something about being larger than 63 TB causing problems?).

To back up or duplicate this entire pool to Google Drive, here are the steps I have figured out so far:

1. Create a new pool with just the Google Drive cloud drive (pool 2). It does not need a drive letter.
2. Create a new pool with Google Drive AND my existing pool (pool 3). Should this new one become B:\? I want the existing file structure to remain the same (i.e. B:\movies, B:\tv, etc.).
3. ? Do I now need to move files from their existing PoolPart folders into new PoolPart folders? I'm a little confused here.
4. Enable duplication on pool 3.

Could someone confirm whether my understanding here is correct? Thanks!

Additionally, once I get this all going, I have a few questions about the setup:

1. Can I limit the amount of data uploaded per day, or set a bandwidth limit (~75 Mbit/s)?
2. In the future, can I increase the size of the cloud drive to match my physical pool?
3. If a drive fails in my physical pool (pool 1), will DrivePool automatically restore all the missing data from pool 2? The only whole-drive data recovery I have done has been with SnapRAID; I have never used DrivePool for this.

I believe that's all I have for now. I just want to get everything clarified before I mess with existing data and upload so much, only to realize I did it incorrectly and have to do it all again. Thanks!
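When planning the ~75 Mbit/s cap, it helps to convert it into a daily volume and to estimate how long the ~42 TB initial upload would take. This is a back-of-the-envelope sketch that assumes decimal units, a link saturated at the cap around the clock, and no protocol overhead. It does not show how to configure a limit in CloudDrive.

```python
SECONDS_PER_DAY = 86_400


def gb_per_day(mbit_per_s: float) -> float:
    """Data uploaded in one day at a constant rate, in decimal GB."""
    bytes_per_second = mbit_per_s * 1e6 / 8  # megabits -> bytes
    return bytes_per_second * SECONDS_PER_DAY / 1e9


def days_to_upload(terabytes: float, mbit_per_s: float) -> float:
    """Days needed to upload `terabytes` (decimal TB) at a constant rate."""
    return terabytes * 1e3 / gb_per_day(mbit_per_s)


print(f"{gb_per_day(75):.0f} GB/day")        # 810 GB/day
print(f"{days_to_upload(42, 75):.1f} days")  # 51.9 days
```

So at ~75 Mbit/s, the initial 42 TB would take roughly seven and a half weeks of continuous uploading.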

-

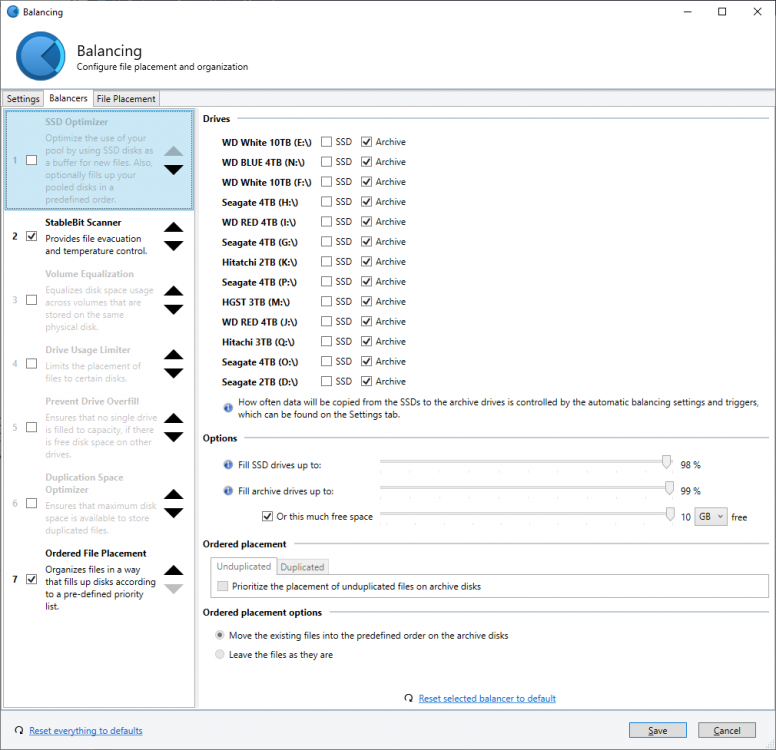

Thanks! What is "the predefined order on the archive disks", though? Is that just the order they are listed in the 'Drives' section? Essentially, I want DP to fill my drives sequentially (as per the ordered file placement balancer), but to use an SSD as temporary storage for newly created files, i.e.: new file in pool (copied from the network, etc.) ---> SSD ---> archive disks, filled in a set order.
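The flow described above (new file -> SSD -> archive disks filled in a set order) amounts to first-fit placement over an ordered disk list, with an SSD as a staging area. The sketch below is a toy model under that assumption, using hypothetical disk names and sizes. It is not DrivePool's balancer code, and it assumes "predefined order" simply means the order of the archive disk list.

```python
from dataclasses import dataclass, field


@dataclass
class Disk:
    """A hypothetical pool member with a capacity and the files it holds (sizes in GB)."""
    name: str
    capacity: int
    files: dict[str, int] = field(default_factory=dict)

    @property
    def free(self) -> int:
        return self.capacity - sum(self.files.values())


def write_new_file(ssd: Disk, name: str, size: int) -> None:
    """New files always land on the SSD 'cache' first."""
    if size > ssd.free:
        raise OSError(f"not enough space on {ssd.name} for {name}")
    ssd.files[name] = size


def balance(ssd: Disk, archive: list[Disk]) -> None:
    """Move each file off the SSD to the first archive disk, in list order, with room for it."""
    for name, size in list(ssd.files.items()):
        target = next((d for d in archive if d.free >= size), None)
        if target is not None:
            target.files[name] = ssd.files.pop(name)
        # Otherwise the file stays on the SSD until space frees up.


# Hypothetical setup: disk1 is almost full, disk2 is empty.
ssd = Disk("ssd", 100)
archive = [Disk("disk1", 10, {"old.mkv": 9}), Disk("disk2", 10)]

write_new_file(ssd, "new.mkv", 3)
balance(ssd, archive)
print({d.name: sorted(d.files) for d in archive})
# {'disk1': ['old.mkv'], 'disk2': ['new.mkv']}
```

In this model, a disk that is already nearly full is simply skipped, and files already on it are never moved. Only files sitting on the SSD are relocated.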

-

Thank you for the reply! I would like to try this, but I don't see "ordered placement" in the SSD Optimizer... am I just overlooking it? I've pulled the SSD for now, which is why it doesn't show up in the list at the moment. Thanks again!

-

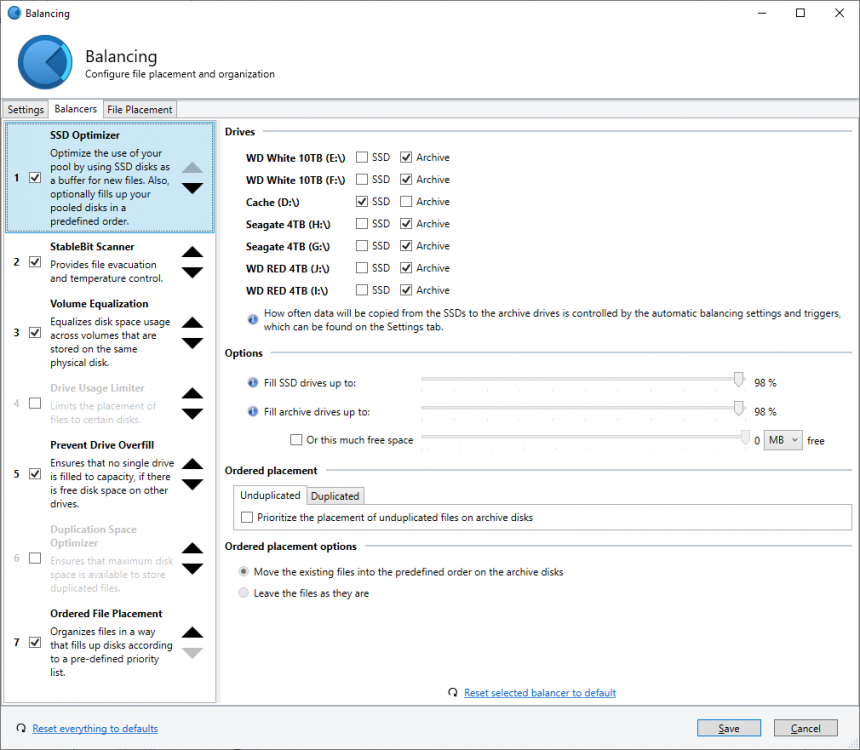

Thank you for the reply! That all makes sense. So far, so good! I also have an SSD I would like to use as a cache drive. I added it to my pool and installed the SSD Optimizer (at the top of the balancers). However, the pool doesn't seem to use the SSD when I copy files to it. Is that because 'ordered file placement' is taking priority? What I want is for new files to go to the SSD and then be moved to the appropriate drive in the background, when balancing occurs. Is that not how this plugin is supposed to work? Thanks!

-

Additionally, I'm not sure if this is related, but what is "unusable for duplication"? It seems to take up a lot of the pool, and I have no files or folders flagged for duplication (that I know of).

-

Hi, I just purchased DrivePool and have just started using it. So far I have 2x 10 TB drives in my array, and I'm copying data from my 16 TB QNAP (ext4) to the pool. I have about 40 TB of additional hard drives to add, all of which are full, essentially at 100%. I'm using the "ordered file placement" plugin because I'd rather have files placed sequentially on each drive, so that if anything fails it's easier to replace the data on that one drive. My question is: how do I add my full drives so that DrivePool doesn't move files around on that drive or in the pool, and just leaves them where they are? Do I just move each new "full" drive to the top of the "ordered file placement" plugin? I'm still getting used to the software and I'm not entirely sure how to configure everything properly. Thanks! ** Oops, wrong forum... can this be moved to the DrivePool forum?