cichy45

Members · 13 posts · Achievements: Member (2/3) · Reputation: 0

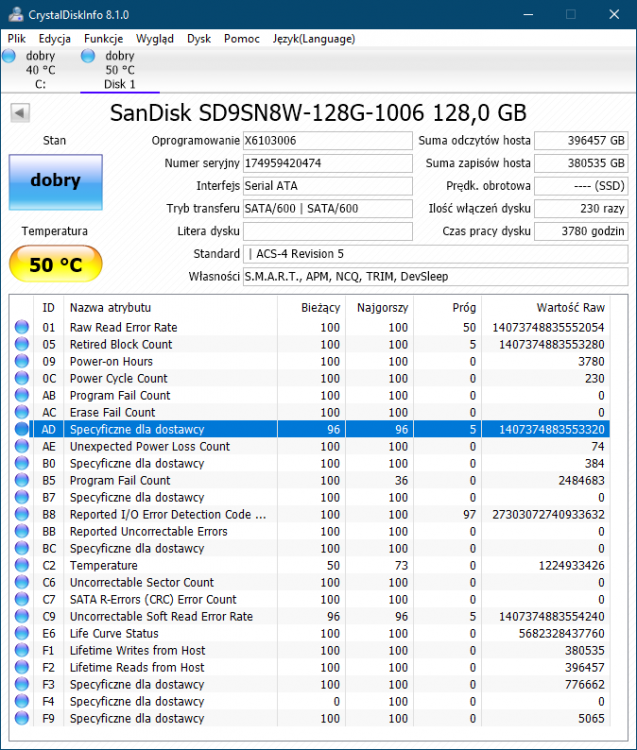

Hello. I believe there is no such option in Scanner, but it could be useful for some users (at least for me). I would like to request an option to dump SMART attributes to log files (ideally one file per physical disk), including all parameters (even unrecognized ones), on a schedule set in the options, for example every 5 minutes. A CSV file would be ideal, but plain TXT would also do. The layout could be one column per parameter and one row per dump, holding the dump time and the values, so it would be easy to plot charts from it. I ask because I have a small 128GB SanDisk SSD that shows remaining life and data written to the SSD as "unrecognized parameters - manufacturer specific" (AD and F9), but as clean decimal numbers (96 means 96% of life remaining and 5065 means 5065GB written by the host). This SSD serves as a cache for my system HDD, and it would be great to be able to chart the decreasing life attribute and the increasing data written (the disk is under a constant but fairly uniform load).
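The requested layout (one file per disk, one row per dump, one column per attribute) can be sketched in Python as below. This only illustrates the file format and is not a Scanner feature: `append_smart_row` is a hypothetical helper, and it assumes something else supplies the attribute values as a dict keyed by hex attribute ID (e.g. `AD`, `F9`).

```python
import csv
import os
from datetime import datetime, timezone


def append_smart_row(csv_path, attrs, when=None):
    """Append one SMART dump as a row of a per-disk CSV file (hypothetical helper).

    attrs maps a hex attribute ID (e.g. "AD", "F9") to its raw decimal value,
    including vendor-specific attributes that show up as unrecognized.
    """
    when = when or datetime.now(timezone.utc)
    is_new = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
    if is_new:
        # The first dump defines the columns: time, then attribute IDs in sorted order.
        header = ["time"] + sorted(attrs)
    else:
        # Later dumps reuse the existing header so the columns never shift.
        with open(csv_path, newline="") as f:
            header = next(csv.reader(f))
    row = {"time": when.isoformat(timespec="seconds"), **attrs}
    with open(csv_path, "a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=header, restval="", extrasaction="ignore")
        if is_new:
            writer.writeheader()
        writer.writerow(row)
```

Calling it on a 5-minute schedule, e.g. `append_smart_row("sandisk_128gb.csv", {"AD": 96, "F9": 5065})`, builds a file with a `time,AD,F9` header that spreadsheet tools can chart directly. The columns are fixed by the first dump, so attributes that appear later are ignored rather than shifting existing columns.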

-

@gd2246 I asked a similar question some time ago. No, there is no way to force an equal "%" used on every disk in real time (for example, 10% used on 1000GB and 500GB disks = 100GB and 50GB). I am also hoping such a feature will be introduced.
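The arithmetic behind "equal % used" is a split proportional to capacity. A minimal Python sketch (the function name is hypothetical, not a DrivePool setting):

```python
def split_for_equal_percent(total_data_gb, capacities_gb):
    """Share `total_data_gb` across disks in proportion to their capacity.

    Every disk then ends up with the same fraction of its capacity in use.
    """
    total_capacity = sum(capacities_gb)
    return [total_data_gb * cap / total_capacity for cap in capacities_gb]


shares = split_for_equal_percent(150, [1000, 500])
print(shares)  # [100.0, 50.0], i.e. 10% of each disk
```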

-

Hello. I think I might have encountered a bug in DrivePool's behavior when using torrents, shown here on a 5x1TB pool. When a new file is created on a disk that is part of the pool, and that file reserves X amount of space in the MFT but does not preallocate it, DrivePool adds that X amount to the total space available on this disk. DrivePool reports the correct HDD capacity (931GB) but a wrong volume size (larger than is possible on that particular HDD). To be clear, the file is not created "outside" the pool and then moved onto it; it is created on the virtual disk (in my case E:\Torrent\... ) on whichever HDD DrivePool decides to put it. The reported capacity goes back to normal after deleting that file:
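The symptom is a per-disk figure that cannot be physically true. As a hedged sketch of a sanity check, the Python below compares a reported volume size with the disk's capacity and reads the operating system's own view through `shutil.disk_usage`. The function names and the 1GB tolerance are assumptions for illustration. Note that 931GB is simply 1TB (10^12 bytes) expressed in binary GiB, so the capacity figure itself is correct.

```python
import shutil

GIB = 1024 ** 3  # Windows labels GiB as "GB"


def volume_size_is_plausible(reported_volume_gb, capacity_gb, tolerance_gb=1.0):
    """A volume can never be larger than the disk that holds it.

    `tolerance_gb` absorbs rounding in the displayed figures.
    """
    return reported_volume_gb <= capacity_gb + tolerance_gb


def os_view_gb(path):
    """Total, used and free space of the volume holding `path`, in GiB."""
    usage = shutil.disk_usage(path)
    return usage.total / GIB, usage.used / GIB, usage.free / GIB


print(round(10**12 / GIB))                  # 931: a 1TB drive shown in "GB"
print(volume_size_is_plausible(931, 931))   # True
print(volume_size_is_plausible(1100, 931))  # False (1100 is a made-up example value)
```

Running `os_view_gb` against a path on the pooled disk (a drive letter or mount folder, assuming it has one) while the torrent file exists would help separate the two cases: if Windows reports sane numbers while DrivePool does not, that would suggest the problem lies in DrivePool's accounting rather than the filesystem.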

-

SnapRAID computes parity on a per-file basis (on top of the filesystem), so you can defrag your HDD or delete/add files and it will calculate parity only for those changes. You can also force it to recalculate parity for all files if you want. For me, on a 5x 1TB pool, it runs at 300-500MB/s. That is not very fast, as each drive can do 120-180MB/s, but my NTFS volumes are compressed, which adds CPU load (and I have a lot of small files, so the HDDs can't reach their maximum transfer speed). Some people reach 1500MB/s when calculating parity and 2500MB/s when checking hashes on pools with many HDDs.
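For readers unfamiliar with parity, the idea can be shown with single XOR parity, the RAID-5-style scheme. This is a simplified Python sketch, not SnapRAID's code: SnapRAID works on fixed-size blocks and supports more than one parity level using more elaborate math. It does show why all data disks are read together: one parity block needs the matching block from every data disk.

```python
from functools import reduce


def xor_parity(blocks):
    """Byte-wise XOR of equally sized blocks, one block per data disk."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks, parity):
    """XOR of the parity with every surviving block recovers the lost block."""
    return xor_parity(list(surviving_blocks) + [parity])


# One stripe across three data disks (toy 2-byte blocks).
disks = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = xor_parity(disks)
print(parity.hex())  # ee22
```

Because those reads can happen on all disks in parallel, the ideal throughput for 5 drives at 120-180MB/s each would be roughly 600-900MB/s; compression, CPU load and small files may explain why real numbers land lower.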

-

@Umfriend I have a random collection of hard drives, from 80 to 2000GB, and I would like to put them all into one pool. First, SnapRAID is faster with more drives, as it calculates parity from all physical HDDs at once. Second, if data is distributed across more disks, less of it will be lost if something goes wrong. Third, I just "feel better" (yes, that is totally personal preference) when all HDDs in the pool are used instead of spinning for nothing. Default file placement is great if all of your HDDs are the same size. It is great for my 5x 1TB pool, but it starts to get messy when I add 2TB, 320GB, 500GB, 1.5TB, etc. drives. Yes, I could pool two 500GB drives into one 1TB pool, and add that pool to the main pool to get an equal % of disk space used (10% of the 1TB pool = 10% of each 500GB), but... you can see where this goes with even more random disks like 750GB, 640GB, etc. That is why I would like to have that option built into DrivePool.

-

But is there no way I could change from absolute to %? I guess the only option is to wait for the developers to add it.

-

Thanks for your answer, @Christopher (Drashna). Would it be possible for the developers to create a new file placement strategy that replicates the behavior of Disk Space Equalizer, but in real time? Users could then switch between "place on the HDD with the most free space" and "use an equal % of space on every HDD".
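The difference between the two proposed strategies comes down to which quantity is compared when a new file arrives. A hypothetical Python sketch (`Disk`, `most_free_space`, `lowest_percent_used` and `place` are illustrative names, not DrivePool APIs):

```python
from dataclasses import dataclass


@dataclass
class Disk:
    name: str
    capacity_gb: float
    used_gb: float

    @property
    def free_gb(self):
        return self.capacity_gb - self.used_gb

    @property
    def percent_used(self):
        return 100 * self.used_gb / self.capacity_gb


def most_free_space(disks):
    """'Place on the HDD with the most free space': absolute free space decides."""
    return max(disks, key=lambda d: d.free_gb)


def lowest_percent_used(disks):
    """'Use an equal % on every HDD': the relatively emptiest disk wins."""
    return min(disks, key=lambda d: d.percent_used)


def place(file_gb, disks, strategy):
    """Put one file on the disk chosen by `strategy`, among disks it fits on."""
    candidates = [d for d in disks if d.free_gb >= file_gb]
    target = strategy(candidates)  # raises ValueError if no disk has room
    target.used_gb += file_gb
    return target.name


disks = [Disk("2TB", 2000, 600), Disk("500GB", 500, 50)]
print(most_free_space(disks).name)      # 2TB (1400GB free vs 450GB)
print(lowest_percent_used(disks).name)  # 500GB (10% used vs 30%)
```

With mixed capacities the two rules disagree: the first keeps favoring the big disk, while the second sends files to the smaller disk until both are equally full in relative terms.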

-

cichy45 reacted to an answer to a question:

Not balancing onto new drives

-

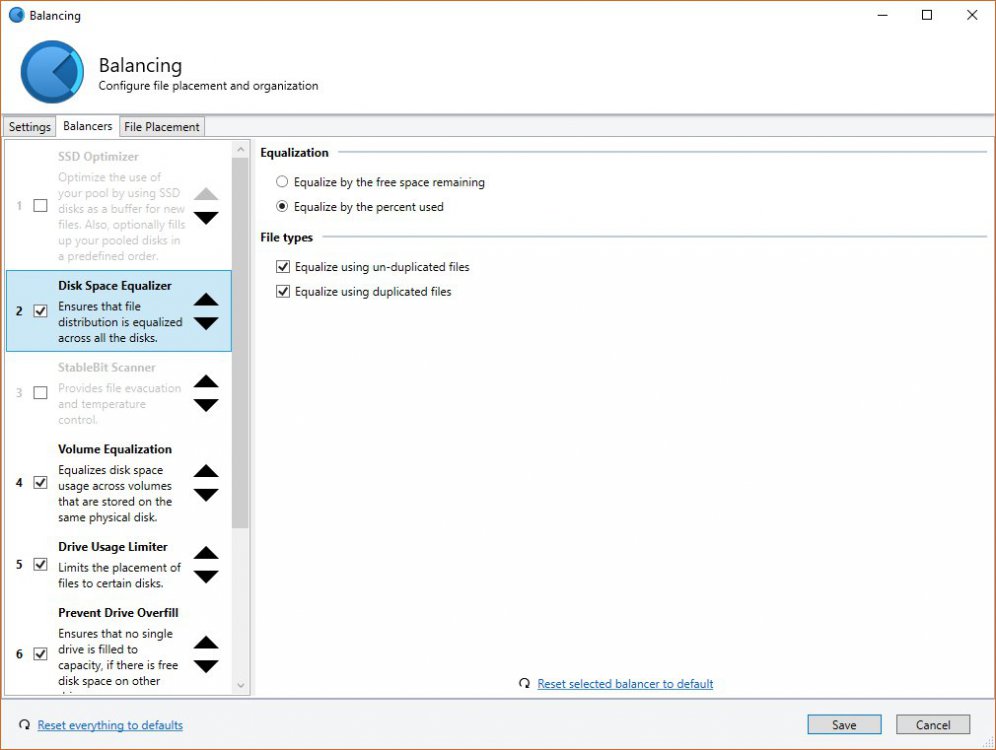

Maybe I am simply misunderstanding this plugin, and it is not able to equalize disks in real time, only after being triggered (manually or automatically). If that is the case, I would be a bit disappointed. I hope @Christopher (Drashna) will be able to confirm or deny this ability of the Disk Space Equalizer plugin. If it cannot do it in real time, maybe it would be a nice feature to consider?

Basically, I would like DrivePool to fill all my pooled disks to the same % of space used. If new disks are added, all new data copied into the pool would go onto these new HDDs until they are at the same % used as the old HDDs in the pool. All of this would happen on the fly while new files are written into the pool, instead of manually or automatically triggering balancing. I have a lot of HDDs of various capacities, from 80 to 2000GB, so this behavior would be a great feature for me: I would be able to add new disks without triggering balancing, and new files would be used to balance the pool instead of moving files from the old HDDs to the newly added ones.

For example: 3 disks, 2TB, 2TB and 1TB, are 10% used, so they hold 200GB, 200GB and 100GB respectively. I add one new 3TB disk, and all new data is written only to that new 3TB disk until it is 10% used (holding 300GB of data). After it has been filled to 300GB, all new files should be distributed on the fly between these 4 HDDs so that they keep a constant X% of space used.
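The 2TB/2TB/1TB + 3TB example can be checked with a "water-filling" style calculation: raise the fill level of the relatively emptiest disks first, and once every disk is at the same %, split new data by capacity. This is a hypothetical Python sketch of the requested behavior, not how Disk Space Equalizer is implemented, and it assumes the incoming data fits (the fill level stays at or below 100%).

```python
def water_fill(capacities, used, incoming):
    """Split `incoming` GB so the emptiest disks (by % used) fill first.

    Disks below the common fill level are topped up to that level; once all
    disks share the same % used, new data is split in proportion to capacity.
    Returns the GB each disk should receive, in input order.
    """
    n = len(capacities)
    # Visit disks from the lowest to the highest fraction used.
    order = sorted(range(n), key=lambda i: used[i] / capacities[i])
    cap_sum = used_sum = 0.0
    for k, i in enumerate(order):
        cap_sum += capacities[i]
        used_sum += used[i]
        # Common fraction reached if only disks order[:k+1] receive data.
        level = (used_sum + incoming) / cap_sum
        nxt = order[k + 1] if k + 1 < n else None
        # Stop once the level no longer reaches the next disk's current fraction.
        if nxt is None or level <= used[nxt] / capacities[nxt]:
            break
    active = set(order[: k + 1])
    return [level * capacities[i] - used[i] if i in active else 0.0 for i in range(n)]


caps = [2000, 2000, 1000, 3000]
print(water_fill(caps, [200, 200, 100, 0], 300))     # ~[0, 0, 0, 300]: only the new 3TB disk
print(water_fill(caps, [200, 200, 100, 300], 1000))  # ~[250, 250, 125, 375]: proportional
```

The first call reproduces the "fill the new 3TB disk until it reaches 10%" phase; the second shows that, once all four disks are at 10%, each further gigabyte is split in proportion to capacity, so all disks rise together (to 22.5% here).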

-

Yeah, I think that's what Disk Space Equalizer was made for: equalizing space between HDDs of different capacities. I suspect that this plugin might be a bit outdated and thus doesn't work as expected.

-

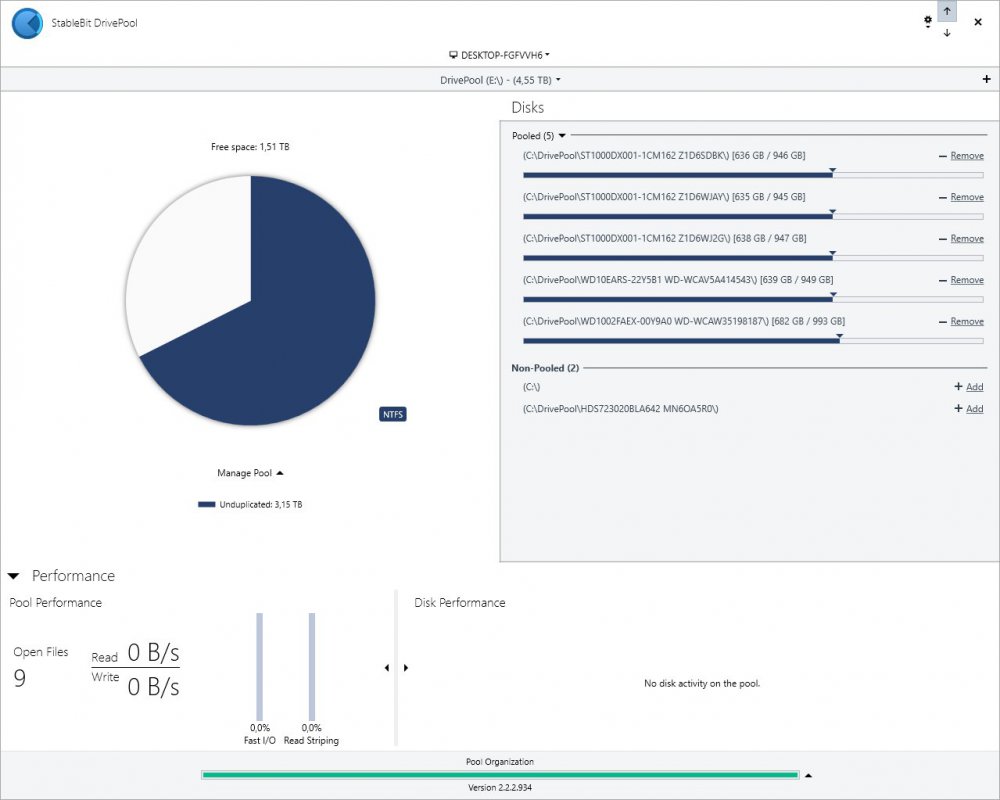

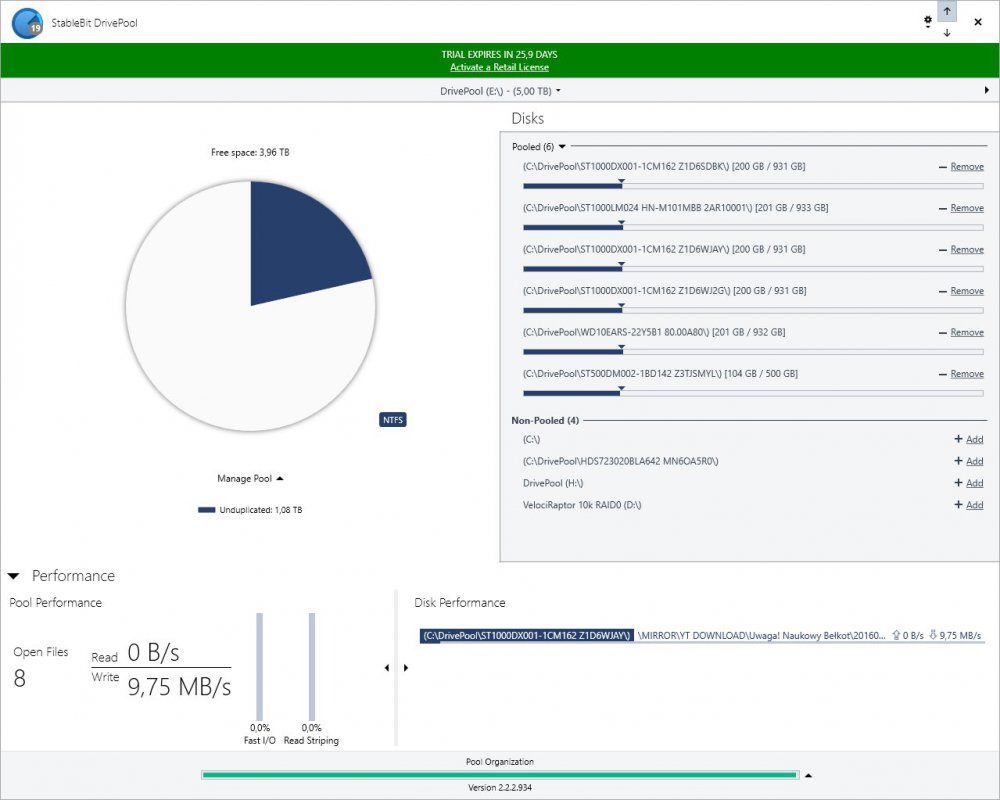

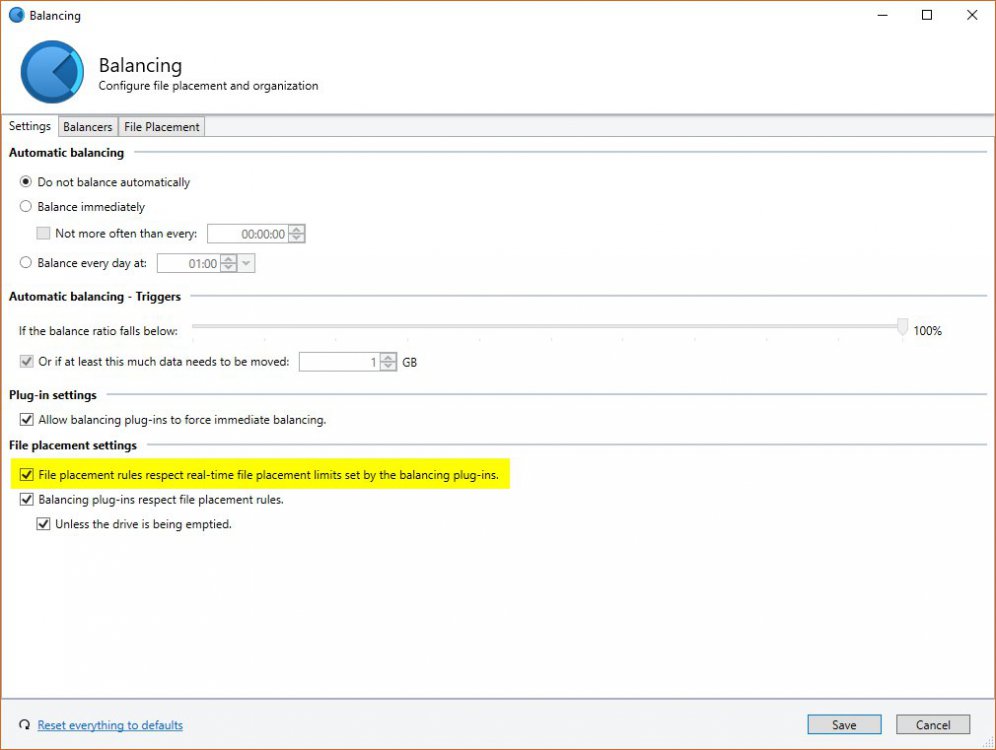

@Jaga I tried that settings reset, but it didn't work. As you can see (copying from D: to DrivePool), it only puts data on those five 1TB disks. The only difference is that the 500GB HDD is attached via a SAS PERC H310 flashed to IT mode, but that shouldn't make any difference. (Don't mind that HDS723020BLA642 MN6OA5R0; it's a SnapRAID parity drive for the pool, so it is only used when syncing.) I will wait; maybe one of the developers/administrators will be able to provide more information on this error. I really like DrivePool and it would be perfect for my needs, but the lack of this feature (real-time disk equalization for added disks) is almost a deal-breaker. It would be great if they could make it core functionality (like the default ordered file placement) instead of a plug-in that could become outdated and incompatible after some DrivePool release.

-

@Jaga No, it doesn't, and that is the problem. I can use manual balancing to equalize all disks (old and new), but if I start copying new data into the pool, the new drives won't receive any of it. All data will be distributed only between the old disks. So, to make it clear:

1. I create a pool with 5 drives.
2. I copy some data to the pool; the Disk Space Equalizer plugin (DSEP) equalizes data placement between all 5 disks in real time.
3. I add a new disk to this existing pool.
4. I start copying new data again, but DSEP still places it only on the 5 disks that were used to create the pool.
5. I run a manual balancing pass; now the 5 old disks and the 1 new disk are balanced correctly.
6. DSEP still does not put any data on the new disk when I copy something into the pool after balancing manually.

Interesting fact: even when I disable all plugins, DrivePool still places data only on the original 5 HDDs. Remeasuring the pool doesn't change anything. Only manual balancing seems to be able to put any data onto the new disk. It looks like DSEP/DrivePool is able to distribute data between the old disks in real time, but it will fill new disks only after the old ones are full.

-

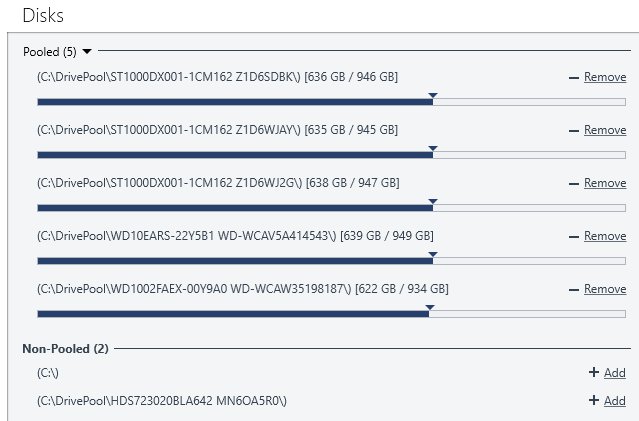

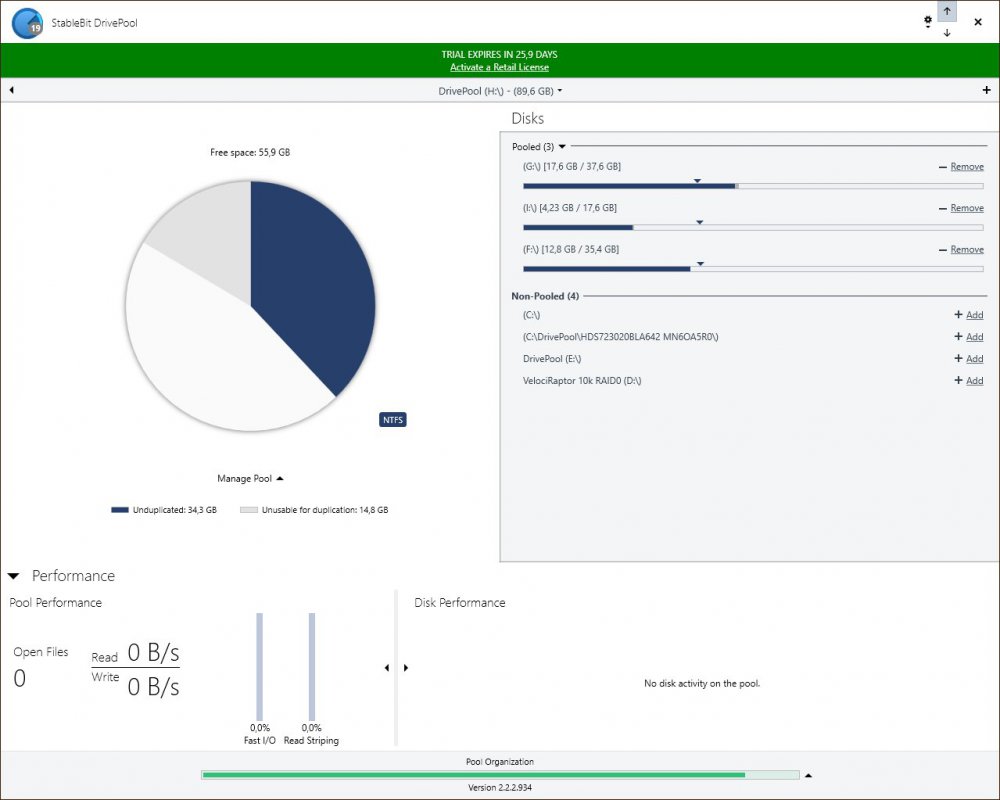

@Jaga I do not have any file placement rules, as I don't mind which HDD files are placed on. When I disable all plugins except Disk Space Equalizer, nothing changes. I made a small experiment with small partitions. I started another pool with 2 partitions (G & F) and filled them with some data; everything was balanced more or less OK. Then I added another small partition (I), and it was not "noticed" by the equalizer plugin either before or after manual balancing. Manual balancing works with Disk Space Equalizer; however, it does not work correctly in real time with disks/partitions added after the pool has been filled with some data. Screenshot of the test pool: Here is a screenshot of my main pool (the 320GB disk has already been removed): The balancing plugin seems to "know" (judging by that "balance to" arrow) that it should fill the 500GB HDD, as it is lagging behind, but it is not doing so.

-

cichy45 started following Not balancing onto new drives

-

Hello! I will post here instead of creating a new topic, as I am experiencing a similar issue. I use the Disk Space Equalizer plugin with the "Equalize by percent used" setting. I had a 5x 1TB pool, and it was distributing new files equally onto those 5 HDDs on the fly. However, after adding one new 500GB HDD and manually balancing the pool, all new files are still placed onto those five 1TB HDDs and nothing goes onto the 500GB HDD, so I have to balance it manually. No settings were changed; it simply stopped equalizing files properly after I added that new 500GB HDD. One workaround is to use automatic balancing with "If the balance ratio falls below 100%", but it's not an elegant solution: it keeps files moving around the pool almost continuously instead of placing them on the right HDD right away. It simply looks like drives added after the pool was created are not taken into consideration by the balancing plugin. Now I have added another empty 320GB HDD, and it's the same behavior. No files copied into the pool are written onto those 500GB & 320GB HDDs, only onto the five 1TB drives that were used to create the original pool.