jik0n

Posts posted by jik0n

-

9 hours ago, srcrist said:

Your post is missing a few bits of important information to help determine if there's actually a problem.

Notably, you never mention what you actually have your cache set to. What sort of cache mode are you using, and what size? CloudDrive will *always* fill the drive to *at least* the size that you specify when you mount the drive. So if you're using a 6TB drive and you've set up a 6TB cache size, it'll store 6TB of information and never any less. It will cache the most beneficial data, as determined by the algorithm, until it reaches the size you've set. If you've set your cache to something smaller, like a few hundred GB, and you've selected the "expandable" cache mode that is recommended for most use cases, it will fill up to whatever amount you've set and then continue to fill the drive only while it has additional content that needs to be uploaded to the cloud. As an example, if you have a 250GB expandable cache, you'll always see the cache as *at least* 250GB--but if there is an additional 100GB that needs to be uploaded to the cloud, the cache will grow to 350GB and decrease as that additional data is uploaded until it reaches its 250GB minimum again.
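The arithmetic in the 250GB example above can be written as a tiny model. This is a hypothetical sketch, not CloudDrive's actual caching algorithm. It assumes an expandable cache holds the configured size plus whatever is still queued for upload, and the function name `expandable_cache_gb` is made up for illustration.

```python
def expandable_cache_gb(configured_gb: float, pending_upload_gb: float) -> float:
    """Hypothetical model of an expandable cache's on-disk footprint.

    Assumption: the cache keeps `configured_gb` of the most useful data
    and grows only by the amount still waiting to be uploaded.
    """
    if configured_gb < 0 or pending_upload_gb < 0:
        raise ValueError("sizes must be non-negative")
    return configured_gb + pending_upload_gb


# The example from the post: a 250GB expandable cache with 100GB queued,
# shrinking back toward its 250GB minimum as uploads finish.
for pending in (100, 50, 0):
    size = expandable_cache_gb(250, pending)
    print(f"to upload: {pending:>3}GB -> cache: {size:.0f}GB")
```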

So if the following information doesn't help, share those details.

Now, that being said, if you *are* using a 6TB drive with a 6TB cache size--that's excessive and counter-productive. I'd say a 500GB cache or so, at the most, is all you would ever need for even the most heavy uses (video streaming from your drive or something similar). That means that CloudDrive will store the 500GB of the most accessed/most useful data locally, and move the rest to the cloud for later access.

But here is the good news: the detail that matters for whether you can disconnect the drive right away isn't the cache size at all. It's the "To upload:" number displayed right below the pie chart in the UI (if you have any data to upload). That number represents the data that is in your cache but *not* in your cloud storage yet--and it is the only number that will prevent CloudDrive from letting you detach the drive and attach it to another system. As long as you don't have any data "to upload," you can detach the drive in less than a minute and your entire cache--whether it's 6GB or 6TB--will instantly poof. It's all just duplicated data from your cloud storage. If you actually have several TB of data *to upload*, then you're almost certainly running into other problems, like daily upload limits set by your cloud provider, and there isn't much CloudDrive can do about that. You'll just have to stop putting data on the drive and wait until it's done.
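The detach rule described above boils down to a single check. The sketch below is hypothetical: `DriveStatus` and its fields stand in for the numbers shown in the CloudDrive UI, not for any real CloudDrive API, and the daily cap passed to `days_to_drain` is an illustrative value you would replace with your provider's actual limit.

```python
import math
from dataclasses import dataclass


@dataclass
class DriveStatus:
    """Hypothetical snapshot of the figures shown in the CloudDrive UI."""
    cache_used_gb: float  # local data, most of it duplicated in the cloud
    to_upload_gb: float   # data in the cache that is NOT in the cloud yet


def can_detach_safely(status: DriveStatus) -> bool:
    # Only un-uploaded data blocks a clean detach; the rest of the cache is
    # a copy of cloud data and can be discarded, whatever its size.
    return status.to_upload_gb == 0


def days_to_drain(to_upload_gb: float, daily_cap_gb: float) -> int:
    # Minimum number of days a provider-side daily upload cap stretches the
    # upload, ignoring bandwidth and any other limits.
    if daily_cap_gb <= 0:
        raise ValueError("daily_cap_gb must be positive")
    return math.ceil(to_upload_gb / daily_cap_gb)


print(can_detach_safely(DriveStatus(cache_used_gb=6000, to_upload_gb=0)))
print(can_detach_safely(DriveStatus(cache_used_gb=200, to_upload_gb=12.5)))
print(days_to_drain(3000, daily_cap_gb=750))  # cap value is illustrative only
```

The point of separating the two fields is that a huge `cache_used_gb` on its own never blocks a detach; only `to_upload_gb` does.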

Does that make sense?

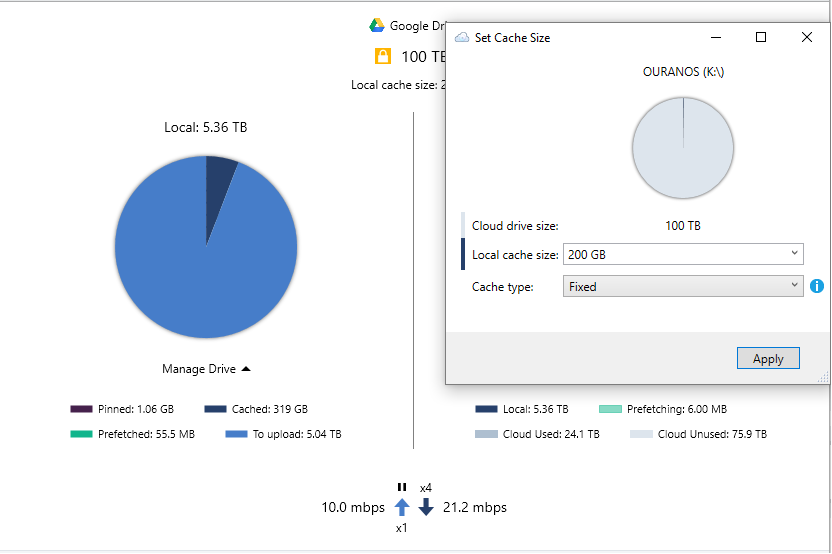

I'm attaching a screenshot. Fixed cache, 200GB: it's exceeding that and will not clear. Latest stable release.

-

Hello,

I have a 6TB cache drive that is almost full. For some reason, no matter what I do, the CloudDrive cache exceeds the maximum cache size I have set, so my 6TB drive never empties. Even telling CloudDrive to empty the cache does nothing. Rebooting did not solve it, and updating did not solve it. So I want to move my setup to a different computer entirely. Is there a way to just junk the 6TB I have in cache and have StableBit remove any unfinished transfers, or will I end up with half-transferred items if I just pull the drive, wipe the cache drive, and set the drive up again on a different computer?

-

Ah, okay. So it's possible that what's causing this issue for me is something completely different, then.

-

On 4/25/2018 at 8:07 PM, auzi68 said:

Christopher,

I seem to have narrowed it down to a few possibilities, and maybe others can chime in about when it's happening to them. Last night it happened to me three times in a row:

The drive is connected to another machine in "read only" state and I changed the max upload speed. All three times this broke the UI function.

The drive in question is a "pre release drive" but hopefully that has nothing to do with it.

The fourth time, after resetting, I changed only the threads and not the max upload speed, and it didn't break.

Hopefully that helps,

Austin

What do you mean by "read only state"? Do you mean you don't have write permissions to the drive StableBit is installed on, or to the drive that holds the cache?

Edit: If you're talking about the read-only option inside the StableBit program settings, I've never set my drive to that myself. Is it doing that on its own for some reason?

-

On 4/23/2018 at 8:26 PM, Christopher (Drashna) said:

I was hoping it would. If it's not, then just the normal "All" option.

You joke, but sadly, that may help. That or a remote support session. So, you know, you wouldn't need to ship it.

If it comes to that, I'm sure one of us having the issue wouldn't mind assisting, though I'm unsure how that would work.

-

On 4/16/2018 at 3:20 PM, Christopher (Drashna) said:

We are aware of the issue, and we are looking into it.

Unfortunately, reproducing the issue is difficult (at least, it has been for us), which makes troubleshooting it difficult.

Guess one of us will just have to ship you our system lol

-

+1, I'm having this as well, but I've already opened a contact request.

In the meantime, is there anything we can do file-wise to edit the settings?

-

OK, yeah. I think that's probably your best bet.

My main point, in any case, is that the application doesn't have those problems for most people. So, network issue or not, it's probably something to tweak for your specific setup. I'm sure a ticket can help to troubleshoot that though.

Something interesting to note, and I don't know if I can mention it here: another program similar to StableBit (mainly used on Linux, I think) has been getting "403: rate limit exceeded" errors while trying to pull from gcloud / G Suite. I don't know if Google is doing something on their end, but it may come up at some point with StableBit, since the two programs work the same way.

-

CloudDrive doesn't typically do this. So a fix isn't really something that is needed. It's likely a network issue. But I would open a ticket.

In any case, if you're changing that setting to anything over 10 or so and still seeing disconnects that's a much larger problem.

A disconnect has only happened once since I changed it. I'm not convinced it's a network issue, since when I'm not running CloudDrive I have zero issues. I can transfer terabytes of files over the network or download / upload tons of files via P2P and never have issues.

I'll try doing the ticket route via the troubleshooter.

-

35 is waaaaaaay too high. I wouldn't go over 10.

It worked up until this point, and it still hasn't locked up, but it just disconnected again. Sigh... Is there any fix for this currently? Is there a way to tell StableBit to download files into the cache without trying to access them? Or, for video files: if I play one for just 5 seconds and then close it, will it download the whole thing?

-

When I try to copy files from my CloudDrive over the local network to another computer, CloudDrive starts the download on its end and begins a transfer, but after a few minutes it disconnects and errors out, forcing it to re-mount. Is there a fix for this? I tried googling and checking around the forums and couldn't find much.

Thanks,

Edit:

I tried the fix posted in:

http://community.covecube.com/index.php?/topic/2997-automatically-re-attach-after-drive-error/

I set the threshold to about 35.

So far this is working. I will transfer more files over the next few hours / days and report back.

Edit: Okay, so it's been about a day, and what I'm finding is that it still stalls, but this time it doesn't disconnect. A pending transfer will stop / pause and ask me to retry, but StableBit won't disconnect. I'm going to find transfer software that retries automatically.
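Since the drive now stalls instead of disconnecting, a copy that retries on its own can work around the pause. This is a minimal Python sketch, not a recommendation of any particular tool. It assumes a stalled read from the mounted drive eventually surfaces as an `OSError` rather than hanging forever, and `copy_with_retries` with its `attempts` and `base_delay` parameters is a hypothetical helper.

```python
import shutil
import time
from pathlib import Path


def copy_with_retries(src: Path, dst: Path, attempts: int = 5,
                      base_delay: float = 2.0) -> Path:
    """Copy file `src` to file path `dst`, retrying on I/O errors.

    Hypothetical helper: assumes a stalled read from the cloud drive
    eventually raises OSError instead of hanging indefinitely.
    Waits base_delay, then 2x, 4x, ... that long between attempts
    (exponential backoff).
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return Path(shutil.copy2(src, dst))
        except OSError:
            dst.unlink(missing_ok=True)  # drop any partially written copy
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error
            time.sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")
```

For example, `copy_with_retries(Path(r"X:\Videos\example.mkv"), Path(r"\\other-pc\share\example.mkv"))`, where both paths are placeholders. Backing off between attempts gives the drive time to recover instead of hammering it with immediate retries.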

-

I did not alter the cache size recently, and Windows does show the drive as full. I did open a support ticket; I'm just waiting for a response.