clonea1

Members · 36 posts

Everything posted by clonea1

-

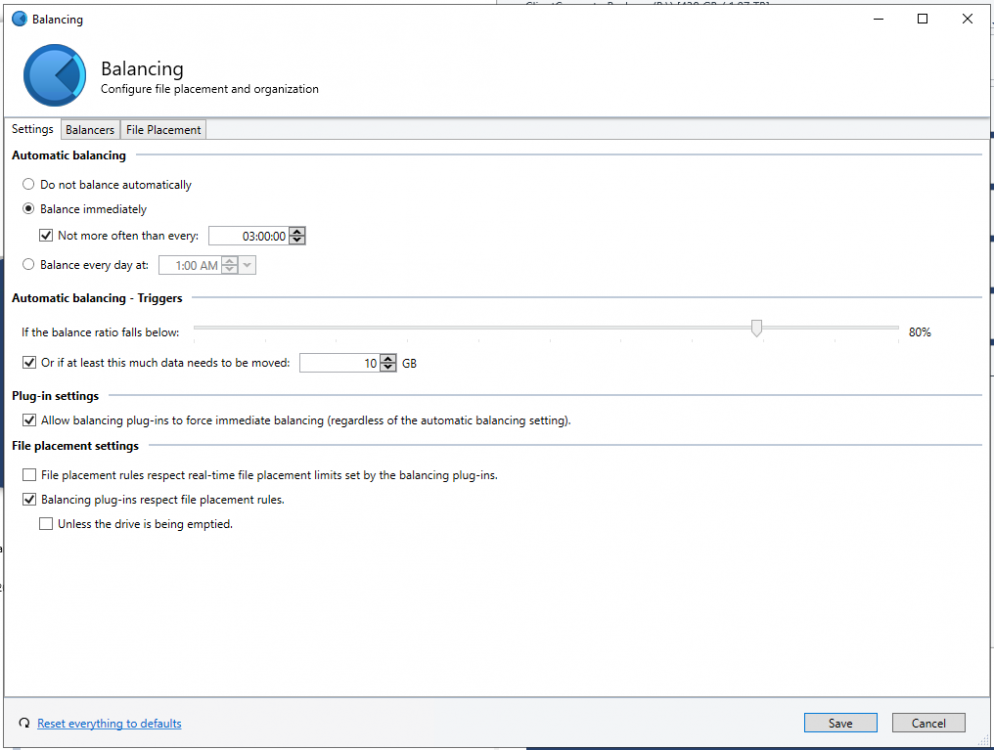

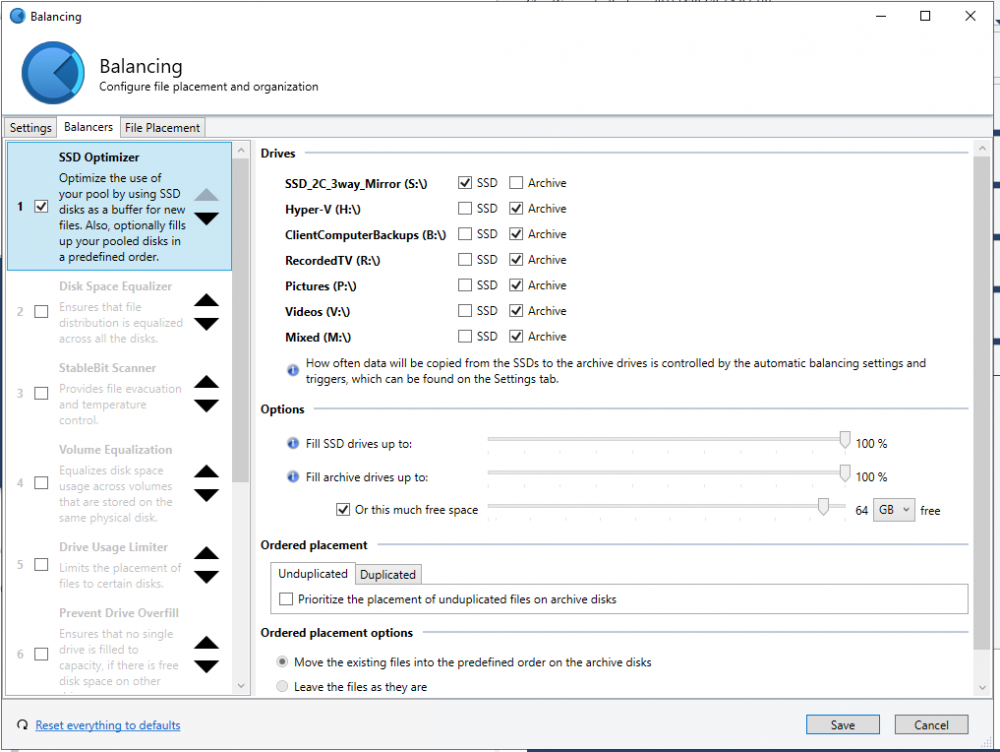

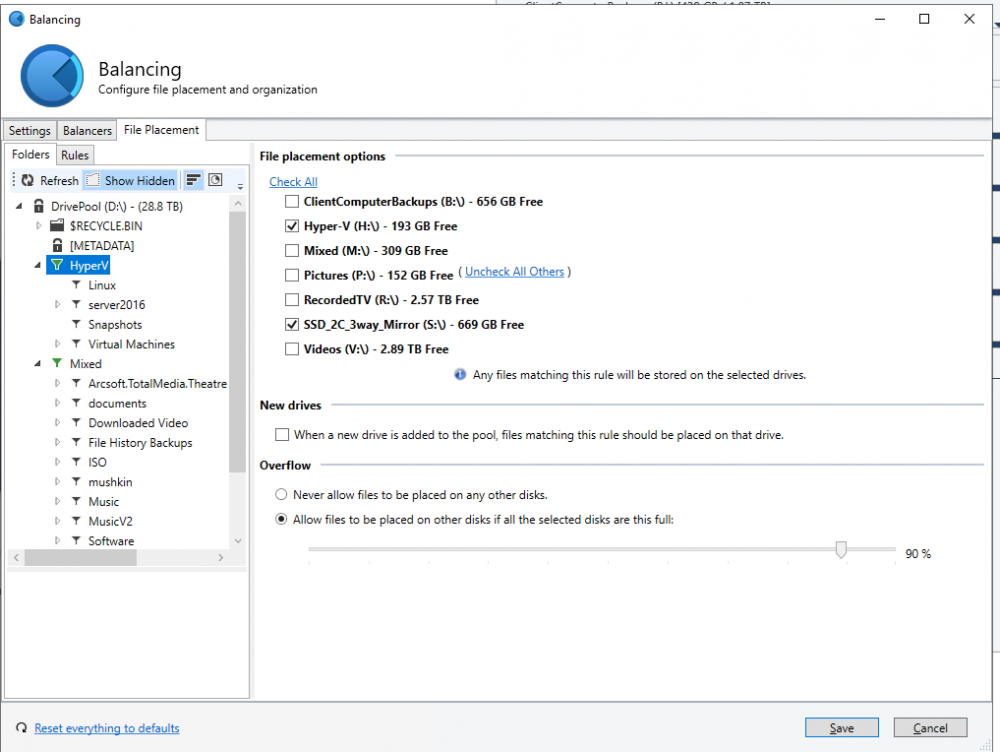

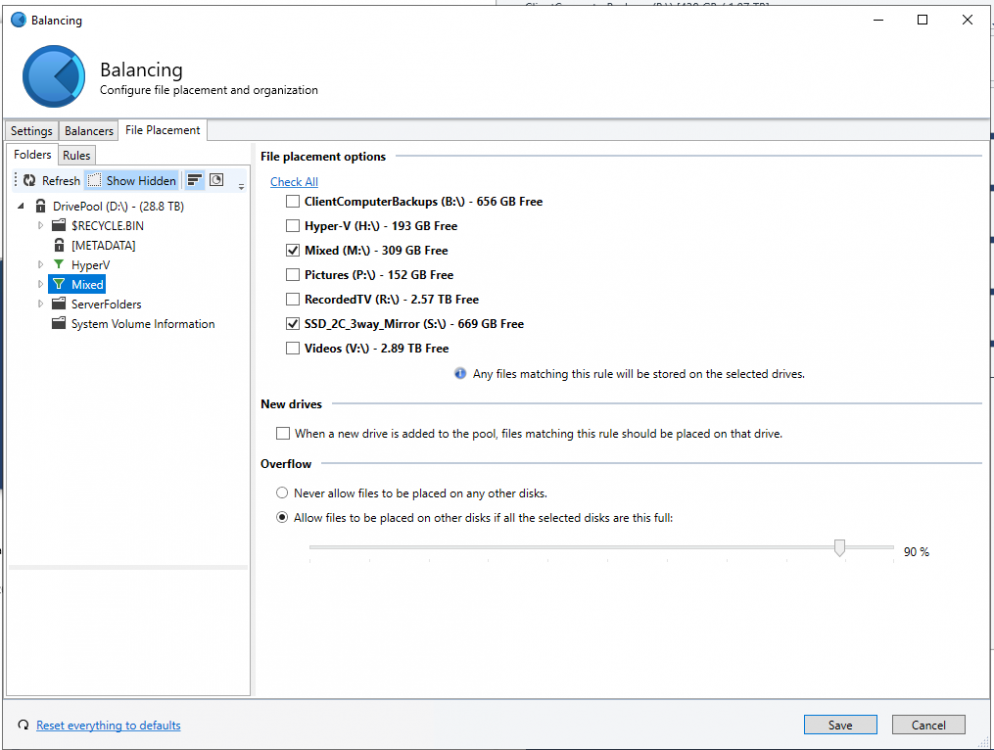

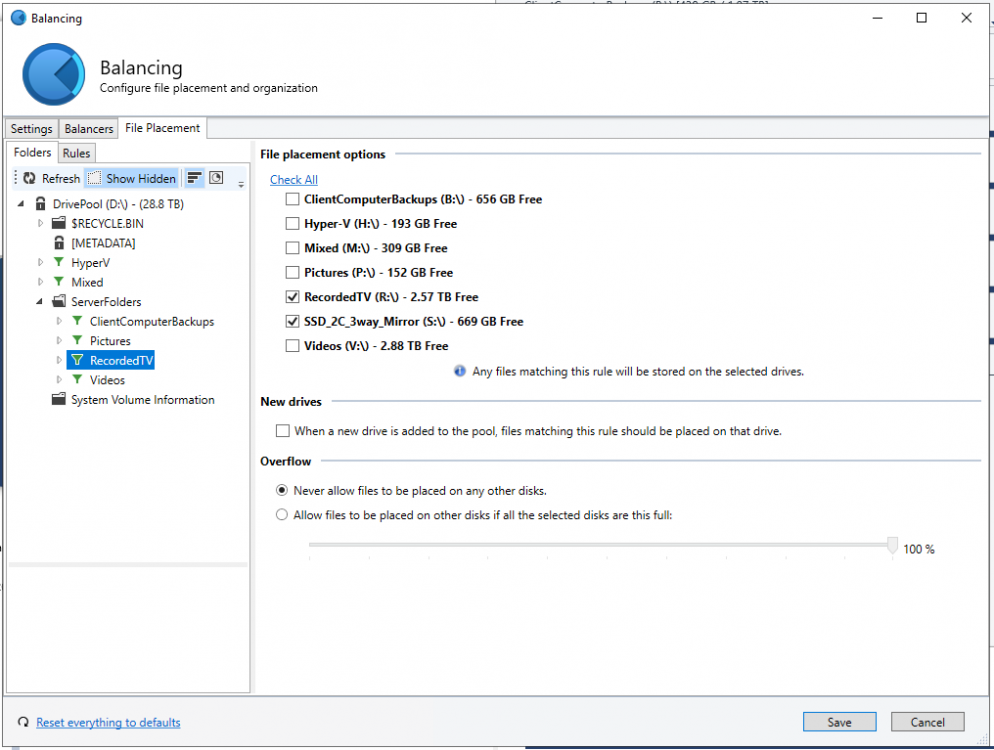

It appears that my SSD drive is being completely bypassed by new files, which go directly to the spindle storage. I am using dedicated drives for certain purposes and organization: videos go to V:, recorded TV from MCE goes to R:, backups go to B:, etc. For the placement rules I have selected the archive disk plus the cache disk (S: for SSD). What I am seeing is that files for R: skip S: and go directly to R:, videos go directly to V:, and so on.

The problem is that these are all Storage Spaces back-end drives with dual parity, so their write speed is insanely slow. I want new data to land on the three-way mirror of SSDs, which is incredibly fast, sit there for a little while, and then drain to the parity back-end drives. What is happening instead is that all new content on the server is written at the insanely slow speeds.

Just to test that the S: drive would drain, I manually put content directly into the s:\pool..... location and did a remeasure, and it then drained to the expected locations. But if I just write to D:, the DrivePool disk, it skips the cache. I don't think I need to include every screenshot?

-

Interesting: even though the disable command in PowerShell errored, the drive now has a low number and the emulated disk driver is present.

-

Oh, I also upgraded to the most recent beta build and have the same issue.

-

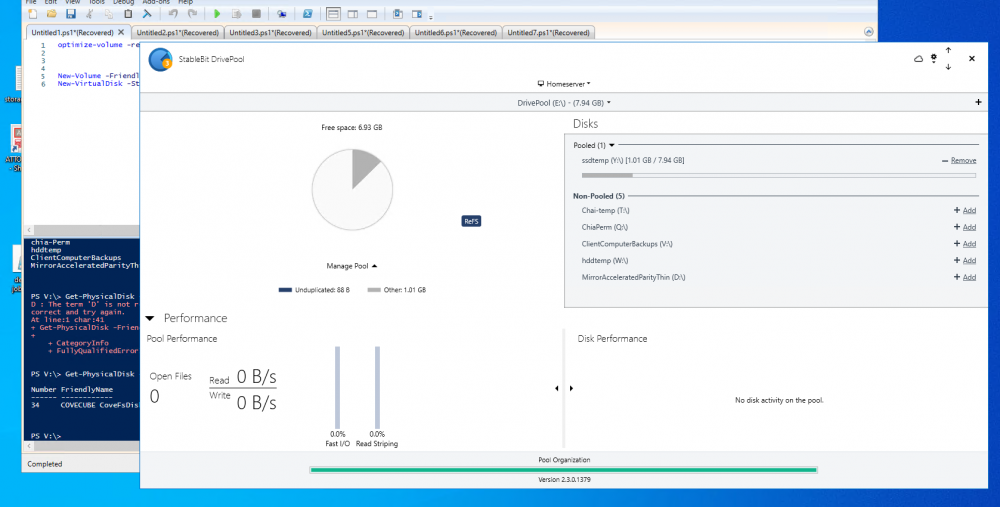

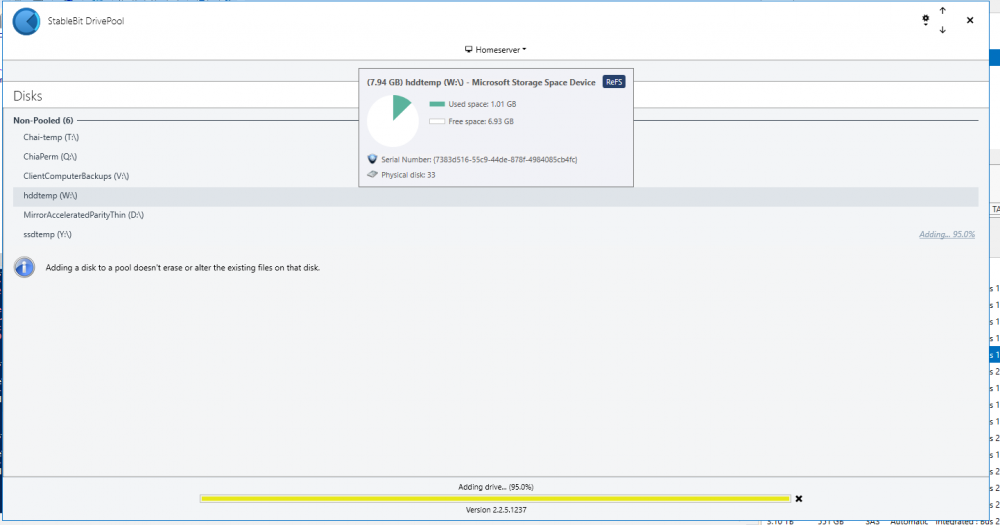

OK, some more details. If I run Get-PhysicalDisk, the disk has a number in the 500s, which means Windows thinks it is a storage bus cache participant, either target or cache:

    PS V:\> Get-PhysicalDisk -FriendlyName *cove*

    Number FriendlyName             SerialNumber                           MediaType   CanPool OperationalStatus HealthStatus Usage       Size
    ------ ------------             ------------                           ---------   ------- ----------------- ------------ -----       ----
    534    COVECUBE CoveFsDisk_____ {1030eff7-2ab2-4627-aef3-0da89a10773c} Unspecified False   OK                Healthy      Auto-Select 2 TB

When I try to disable the disk to make it ineligible for storage bus, I get an error saying it is the wrong type of disk to be eligible in the first place:

    PS V:\> Get-PhysicalDisk -FriendlyName *cove* | Disable-StorageBusDisk
    Exception setting "BusType": "Cannot convert value "File Backed Virtual" to type
    "Microsoft.Windows.Storage.Core.BusType". Error: "Unable to match the identifier name File Backed Virtual to a
    valid enumerator name. Specify one of the following enumerator names and try again:
    Unknown, Scsi, Atapi, Ata, IEEE1394, Ssa, Fibre, Usb, RAID, iScsi, Sas, Sata, Sd, Mmc, Virtual,
    FileBackedVirtual, Spaces, Nvme, SCM, Ufs, Max""
    At C:\WINDOWS\system32\WindowsPowerShell\v1.0\Modules\StorageBusCache\StorageBusCache.psm1:1233 char:13
    +             $devices[$deviceGuid].BusType = $phyDisk.BusType
    +             ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        + CategoryInfo          : NotSpecified: (:) [], SetValueInvocationException
        + FullyQualifiedErrorId : ExceptionWhenSetting

You won't be able to repro this in Hyper-V unless you do full disk mounting, as a virtual disk file (VHDX) in Hyper-V is also not eligible. So you may need to test on physical hardware, or mark a bunch of drives as offline on the Hyper-V host and then pass them through directly to the virtual machine. After that it is as simple as bringing those disks into Server 2022 as fresh, blank disks and running Enable-StorageBusCache. It will enumerate eligible disks, create a new Storage Bus Cache storage pool, and bind your SSDs to HDDs, so your test case will need to have both. Then you can create some virtual disks and try to add them to DrivePool:

    PS V:\> New-Volume -StoragePoolFriendlyName storagebus -MediaType ssd -FriendlyName ssdtemp -Size 1gb -ProvisioningType Fixed -DriveLetter y -FileSystem ReFS

    DriveLetter FriendlyName FileSystemType DriveType HealthStatus OperationalStatus SizeRemaining    Size
    ----------- ------------ -------------- --------- ------------ ----------------- -------------    ----
    Y           ssdtemp      ReFS           Fixed     Healthy      OK                      6.93 GB 7.94 GB

    PS V:\> New-Volume -StoragePoolFriendlyName storagebus -MediaType hdd -FriendlyName hddtemp -Size 1gb -ProvisioningType Fixed -DriveLetter w -FileSystem ReFS

    DriveLetter FriendlyName FileSystemType DriveType HealthStatus OperationalStatus SizeRemaining    Size
    ----------- ------------ -------------- --------- ------------ ----------------- -------------    ----
    W           hddtemp      ReFS           Fixed     Healthy      OK                      6.93 GB 7.94 GB
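For anyone trying to read the Disable-StorageBusDisk error above: it looks like a plain name mismatch, where the disk reports its bus type as the display string "File Backed Virtual" while the enumeration only knows the name `FileBackedVirtual`. Here is a minimal Python sketch of that kind of failure. The enumerator names are copied from the error message; the numeric values, `parse_bus_type`, and `parse_bus_type_lenient` are made up for illustration and are not what StorageBusCache.psm1 actually does.

```python
from enum import Enum

# Enumerator names copied from the error message; the numeric values that
# Enum assigns here are arbitrary and NOT the real Windows values.
BusType = Enum(
    "BusType",
    "Unknown Scsi Atapi Ata IEEE1394 Ssa Fibre Usb RAID iScsi Sas Sata Sd Mmc "
    "Virtual FileBackedVirtual Spaces Nvme SCM Ufs Max",
)


def parse_bus_type(display_name: str) -> BusType:
    """Strict lookup by enumerator name; raises KeyError on any mismatch."""
    return BusType[display_name]


def parse_bus_type_lenient(display_name: str) -> BusType:
    """Hypothetical workaround: drop spaces so the display string matches."""
    return BusType[display_name.replace(" ", "")]


if __name__ == "__main__":
    try:
        parse_bus_type("File Backed Virtual")
    except KeyError as exc:
        print("strict lookup failed for", exc)
    print("lenient lookup gives", parse_bus_type_lenient("File Backed Virtual").name)
```

If that reading is right, the cmdlet trips over the spaced display string before it ever gets to decide whether the disk is eligible, which would explain why the error talks about enumerator names rather than eligibility.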

-

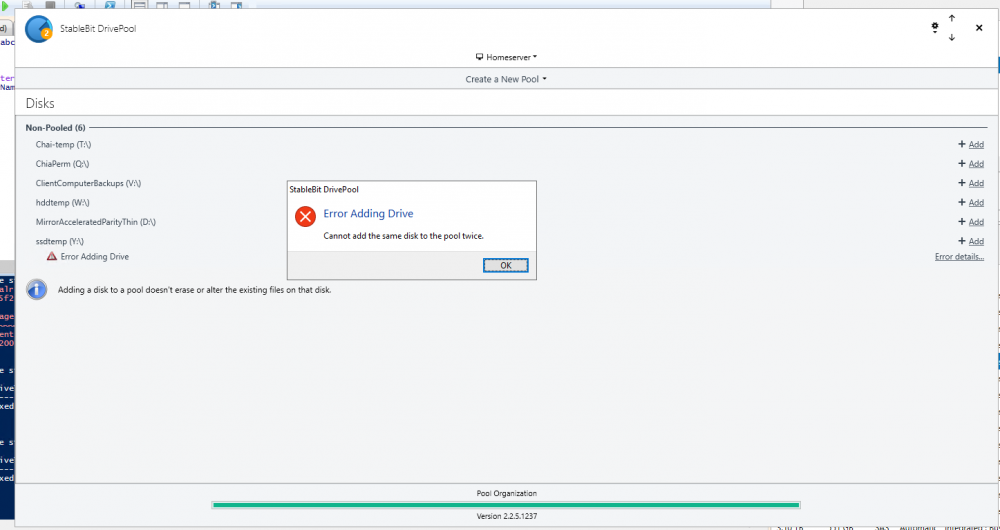

OK, here is the repro. The add bar eventually goes green, but the pool doesn't get created, and the ssdtemp volume that I was trying to add gets its Add button back. If you click Add again, it gives an error saying it can't be added to the same pool again.

Attachments: DrivePool.Service-2022-02-23.log, DrivePool.CoveFs.etl, ErrorReport_2022_02_23-11_47_48.0.saencryptedreport, ErrorReport_2022_02_23-11_59_15.3.saencryptedreport

-

This could have been my issue. When I try to just build a new pool, the add just hangs. This is with the current production release, the current version of Server 2022, and storage bus enabled.

-

Oh, I think I found old logs from that time frame. At the end of the day, maybe whatever updates you needed for Server 2022 were all that was required. I was also having the same issue with Drive Bender when I downloaded a trial of it.

Attachment: DrivePool.Service-2021-09-16.zip

-

Oh boy, and the only way I can reproduce it for you is to nuke 60 TB of storage...

It is software defined: on Server 2022 you can layer flash devices as a cache and bind them to spindles. So if you have, say, 16 spindles and 4 SSDs, each of those 4 SSDs will bind to 4 HDDs, and they will serve as a write buffer and/or read cache. The problem I had when I tried to configure this was that when I went to add a drive to a pool, it would 'add' but not really add, and just hang. I wish I had taken some logs back then, darn it. I may also be misremembering specific details.

Unfortunately, building the test case is a destructive process. I do have all my bindings set up currently. Maybe I can generate some vdisks, but I don't think that was the scenario I was doing originally.
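To make the 16-spindle / 4-SSD ratio concrete, here is a small Python sketch that spreads HDDs across SSDs round-robin. This only illustrates the arithmetic; I don't know the order in which Windows actually picks bindings, and `bind_cache` and the disk names are made up for the example.

```python
def bind_cache(hdds, ssds):
    """Assign each HDD to one SSD, round-robin; returns {ssd: [hdd, ...]}.

    Illustration only: the real binding order used by Storage Bus Cache
    is an unknown here, only the resulting ratio is of interest.
    """
    if not ssds:
        raise ValueError("at least one cache device is required")
    bindings = {ssd: [] for ssd in ssds}
    for index, hdd in enumerate(hdds):
        bindings[ssds[index % len(ssds)]].append(hdd)
    return bindings


if __name__ == "__main__":
    hdds = [f"HDD{n}" for n in range(16)]  # hypothetical disk names
    ssds = [f"SSD{n}" for n in range(4)]
    for ssd, bound in bind_cache(hdds, ssds).items():
        print(ssd, "caches", len(bound), "spindles:", ", ".join(bound))
```

With 16 HDDs and 4 SSDs every SSD ends up fronting 4 spindles, which is the layout described above.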

-

A couple of months ago I tried to use DrivePool on a server that had Storage Bus Cache enabled, and DrivePool would not work with the drives that were backed by an SSD. Do you know if this has been sorted out?

-

Hello, fellow people. I have gone and lost my activation key. Does anyone know if there is a place to find it online, or should I wait for my support ticket?

-

Robocopy is now working as I would expect it to. Now to figure out the best set of options for doing a differential sync instead of full copies.
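For what a "sync diff" boils down to, here is a rough Python sketch of the usual rule: copy a file only when the destination is missing or differs in size or modification time. This is the general idea, not robocopy's exact comparison logic. `needs_copy`, `plan_sync`, and the 2-second timestamp tolerance are assumptions for the example.

```python
import os


def needs_copy(src, dst, tolerance=2.0):
    """True if dst is missing, or differs from src in size or mtime.

    The tolerance (in seconds) is an assumed allowance for file systems
    that store coarse timestamps.
    """
    if not os.path.exists(dst):
        return True
    src_stat, dst_stat = os.stat(src), os.stat(dst)
    if src_stat.st_size != dst_stat.st_size:
        return True
    return abs(src_stat.st_mtime - dst_stat.st_mtime) > tolerance


def plan_sync(src_root, dst_root):
    """Yield relative paths under src_root that a delta sync would copy."""
    for dirpath, _dirnames, filenames in os.walk(src_root):
        for name in filenames:
            src = os.path.join(dirpath, name)
            rel = os.path.relpath(src, src_root)
            if needs_copy(src, os.path.join(dst_root, rel)):
                yield rel
```

Running `plan_sync` before a copy gives a list of what would actually move, which is a cheap way to check that a second pass is much smaller than the first full one.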

-

I'm getting a feeling I should avoid the beta build? Or should I be fine, as this is for my desktop and not my WHS?

-

Install failed; see the attached log: StableBit_DrivePool_(64_bit)_20150324193827.log

-

I will give this a try when I get home. Beta in name only? Is this the only change, or are there other items in the build?

-

Glad you could repro it. I look forward to a fix. It's not critical, as I have duplication.

-

Wasn't able to get this to work out that well. What I had done was set the SSDs (3 of various vintages) as feeder drives, but I also assigned the Blizzard folder to the SSDs so that the game would load from SSD instead of spindle. However, in game, when pulling bosses, performance dropped to zero FPS and it was unplayable. I moved the Blizzard folder out of the pool, but still onto a member disk, and gameplay is smooth as glass. So it could be that read striping is not fast enough, or is not evaluating driver performance fast enough, for real-time interaction. I have taken a drive out of the pool and will keep the games on it alone and just have WHS back it up.

-

Purged the old logs and did a repro, so that is DrivePool.Service-2015-03-07 - Copy.log

-

Switched to Chrome for copy/paste.

This fails, using the pool letter as the source:

    C:\>robocopy d:\ \\vailserver2\desktoppool /mt:8 /r:1 /w:1 /zb /e

    -------------------------------------------------------------------------------
       ROBOCOPY     ::     Robust File Copy for Windows
    -------------------------------------------------------------------------------

      Started : Saturday, March 7, 2015 9:07:22 PM
       Source : d:\
         Dest : \\vailserver2\desktoppool\

        Files : *.*

      Options : *.* /S /E /DCOPY:DA /COPY:DAT /ZB /MT:8 /R:1 /W:1

    ------------------------------------------------------------------------------

    2015/03/07 21:07:22 ERROR 123 (0x0000007B) Accessing Destination Directory d:\
    The filename, directory name, or volume label syntax is incorrect.

    ------------------------------------------------------------------------------

                   Total    Copied   Skipped  Mismatch    FAILED    Extras
        Dirs :         1         1         0         0         0         0
       Files :         0         0         0         0         0         0
       Bytes :         0         0         0         0         0         0
       Times :   0:00:00   0:00:00                       0:00:00   0:00:00

       Ended : Saturday, March 7, 2015 9:07:22 PM

    C:\>

This works, with the same command but a different source, this being a mount point for a member drive:

    C:\>robocopy c:\mount\Green\PoolPart.2408d1d0-b227-4436-a15c-e51c3742e9bb \\vailserver2\desktoppool /mt:8 /r:1 /w:1 /zb /e

This works, using the drive letter of a member drive:

    C:\>robocopy f:\ \\vailserver2\desktoppool /mt:8 /r:1 /w:1 /zb /e

I'll work on logs in a bit; the robocopy of a member only finished recently.

-

Log on which side? I have a DrivePool on the WHS and a DrivePool on my desktop. Right now I'm doing a robocopy of a member drive to the WHS pool. When that finishes, I will try the logging for pool to pool. I should probably just enable logging on each side.

-

Same deal with xcopy. If I run xcopy d:\ \\vailserver2\desktopdbackup, it fails; if I change d to f (one of the member drives), it works. Same with robocopy: d fails but f works fine. D is the DrivePool. Sigh.

-

Am I being stupid, or can robocopy not enumerate a DrivePool from the root? A standard copy command does work from the root. If I robocopy a specific folder it appears to work, but that would be a pain in the butt for backups, since backing up each member drive will produce an extreme amount of data overlap. I would copy and paste the errors, but there is no copy/paste on this forum.
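To put a number on that overlap, here is a rough Python sketch that walks each member drive's PoolPart folder and compares the raw bytes across all members with the bytes of unique relative paths. It assumes duplicated files sit at the same relative path on each member that holds a copy. `pool_overlap` and the example paths are made up for illustration.

```python
import os


def pool_overlap(member_roots):
    """Return (raw_bytes, unique_bytes) across the given PoolPart folders.

    raw_bytes counts every copy on every member; unique_bytes counts each
    relative path once. Assumes duplicates share the same relative path.
    """
    raw_bytes = 0
    unique_sizes = {}
    for root in member_roots:
        for dirpath, _dirnames, filenames in os.walk(root):
            for name in filenames:
                full = os.path.join(dirpath, name)
                # normcase folds case on Windows so D:\A.txt == d:\a.txt
                rel = os.path.normcase(os.path.relpath(full, root))
                size = os.path.getsize(full)
                raw_bytes += size
                unique_sizes.setdefault(rel, size)
    return raw_bytes, sum(unique_sizes.values())


if __name__ == "__main__":
    # Hypothetical member paths; substitute the real PoolPart.* folders.
    members = [r"F:\PoolPart.example-1", r"G:\PoolPart.example-2"]
    raw, unique = pool_overlap(members)
    print(f"raw: {raw} bytes, unique: {unique} bytes")
```

With duplication enabled, the raw figure is roughly what backing up every member drive separately would store, and the unique figure is what a backup of the pool itself would need.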

-

It's an Epson 1080UB. It's an older model, but there's no reason to replace it.

-

Guess not. Robocopy with delta it is, I guess.

-

Yeah, the downside to doing a backup of the pool disks is that because these are drives with SMART errors, I have duplication set to 3x, so that is a LOT of wasted backup space on the server. I think I can run wbadmin without needing VSS, but I'm done for the night.