GoreMaker

Everything posted by GoreMaker

-

I'm not inclined to go through the process again just for the sake of testing. I don't have the spare hardware or the time. I can create a ticket, but I won't follow up on it afterwards. Here's a description of what I experienced, which is what I'll include in my ticket, but I feel it should be publicly viewable.

I was using a read-striped duplicated pool (as outlined in my post here) to host a vast quantity of DNG photo files and Adobe Lightroom catalogs: thousands upon thousands of photos from countless events spanning many years, distributed across dozens of catalogs. I was editing hundreds of photos from a recent event, which involved first flagging all the keepers and then "Enhancing" those raw files for noise reduction. "Enhanced Denoise" is a VERY resource-intensive AI feature in current versions of Lightroom Classic that creates brand-new raw files from existing ones, typically keeping the GPU at 100% the entire time. It also generates a ton of disk I/O, since the source raw files are large and the newly generated ones are even larger. I run these in large batches, typically dozens at a time, then walk away and find something else to do for a few minutes, because the computer becomes very slow to respond during the process, even with a Core Ultra 7, 64GB of fast DDR5 RAM, an RTX 4070 Super and very fast NVMe SSDs.

The result was that at least 10% of the newly created raw files were corrupt, completely at random. No error message was ever generated; Lightroom simply completed the process "successfully". But when I tried to edit the photos, Lightroom refused to load the corrupt ones, displaying only the embedded thumbnail, and gave me an error message along the lines of "This file is corrupt" (not the exact wording).
Initially I thought Lightroom was at fault, so I spent a lot of time finding the corrupt files (they aren't identified until I try to edit them), deleting the corrupt versions, and re-enhancing the originals. Again, I got about a 90% success rate, with the remaining 10% corrupt. It never occurred to me that DrivePool might be at fault; I assumed either my GPU or Lightroom was messing up. I had a deadline for these photos, so I just powered through it and got my work done.

When I read on this forum about this potential corruption issue, I turned off read striping in DrivePool and restored a recent backup of all my files to overwrite everything on the pool. Then I tried "Enhancing" a batch of 500 photos. There was zero corruption, and every file was generated successfully. Everything else was identical: hardware, software versions, drivers, etc. It was the exact same Windows 11 installation, just with read striping disabled.

Of course, all my hours of work on the recent photo event were lost, since I needed to restore a backup that preceded the use of DrivePool. Luckily I haven't needed to create new prints of those photos since the project was completed.

I've since reconfigured my system and no longer use DrivePool at all. The file corruption left a sour taste and I no longer have faith in the product. It wasted many hours of my time and made the job unnecessarily stressful. It's entirely possible that there were other corruption issues elsewhere in the filesystem that I wasn't aware of, and I can't take that risk with my data. I now run an Intel RST array with RealTimeSync to a separate volume instead. I'm not pursuing a refund, as it's not worth my time and effort, but I have no plans to use the product again.
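Since the corrupt files weren't flagged until they were opened in Lightroom, one way to hunt for silent corruption after moving or restoring files is to compare checksums against a known-good backup. Below is a minimal Python sketch of that idea. The script name, the example paths and the `*.dng` filter are hypothetical, and it can only check files that also exist in the backup, so freshly generated files (like newly Enhanced DNGs) have nothing to be compared against.

```python
import hashlib
import sys
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large raw files never need to fit in RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_mismatches(pool_root: Path, backup_root: Path, pattern: str = "*.dng") -> list[Path]:
    """Return relative paths whose contents differ between the pool and the backup.

    Files present in the backup but missing from the pool are skipped here.
    """
    mismatches = []
    for backup_file in sorted(backup_root.rglob(pattern)):
        rel = backup_file.relative_to(backup_root)
        pool_file = pool_root / rel
        if pool_file.is_file() and sha256_of(pool_file) != sha256_of(backup_file):
            mismatches.append(rel)
    return mismatches


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python compare_dng.py N:\Photos E:\Backup\Photos
    pool, backup = Path(sys.argv[1]), Path(sys.argv[2])
    for rel in find_mismatches(pool, backup):
        print(f"MISMATCH: {rel}")
```

Hashing reads every byte of both copies, so it is slow on a large photo library, but it catches corruption that a size or timestamp comparison would miss.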

-

100%. Once I disabled it, all my data corruption issues vanished. I haven't turned it back on since. It was a nightmare restoring all my backups and redoing days of work from scratch.

-

This explains why thousands of my photos in Adobe Lightroom Classic suddenly started becoming corrupted over the last couple of days, ever since I enabled read striping and then moved catalogs around between devices en masse.

-

Yup, can confirm this is definitely an issue. It corrupted thousands of my photos. Luckily, I have excellent backups of everything.

-

I don't really need those tools, because I also set up a drive hosted on my home server via SMB using StableBit CloudDrive, and that drive is now part of the M:SATA pool for lazy (not real-time) duplication of the files hosted on the SATA disk. That means I have a current copy of all my pool's files on a different computer. I also run a nightly historical backup of the directories hosted on the N: pool using Duplicati, stored off-site. I haven't come across any issues with Duplicati and DrivePool so far, and the resulting backups restore without problems.

-

That worked perfectly. I chose a variant of the first option you suggested:

- a 4x 2TB NVMe pool at L: with...
  - Duplication Space Optimizer plugin enabled
  - Disk Space Equalizer plugin enabled
  - duplication enabled
  - real-time duplication disabled
  - read striping enabled
- a 1x 4TB SATA pool at M: with...
  - Disk Space Equalizer plugin enabled (for when I add disks to this pool in the future)
  - duplication disabled
  - read striping enabled (for when I add disks to this pool in the future)
- a pool at N: made up of pools L: and M: with...
  - the Ordered File Placement plugin enabled, prioritizing the L:NVME pool for writing new files
  - duplication enabled
  - real-time duplication disabled
  - read striping disabled (I don't want to include the slow M:SATA disks in a read stripe)

With these settings:

- sequential writes to pool N: run at the max speed of one NVMe disk (7,000+ MB/s)
- sequential reads of unduplicated files run at the max speed of one NVMe disk (about 7,000 MB/s)
- sequential reads of duplicated files run at almost twice the max speed of one NVMe disk (12,000+ MB/s)
- my usable NVMe pool capacity is 3.65TB (half of 7.3TB formatted)
- all files are hosted and duplicated on the L:NVME pool
- a 3rd copy of all files exists on the M:SATA pool
- everything can be expanded or repaired later by adding or replacing disks as needed
- this all happens seamlessly through a single volume at N:

I'm particularly impressed that the M:SATA pool intelligently keeps only 1 copy of every unique file from the L:NVME pool instead of duplicating the duplicates. It just lists the duplicated files on L: as "Other". I'm using this pool to host all my Windows user directory's libraries (Documents, Pictures, Videos, Music, etc., with the sole exception of AppData). So far, this is almost as good as ZFS in some ways, and better in others.
It blows Intel RST, Microsoft Storage Spaces and Windows Disk Management arrays right out of the water. I'm annoyed I didn't discover DrivePool sooner.
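The capacity and copy-count figures in the setup above follow from simple arithmetic: a pool that keeps every file twice can only use half its formatted space, and a parent pool that puts one copy on each child pool ends up with as many physical copies as the children keep between them. A small Python sketch of that model, assuming N: places exactly one copy on each of L: and M:. The class and function names are hypothetical, and so is the formatted size used for the 4TB SATA disk, which isn't stated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pool:
    name: str
    formatted_tb: float  # combined formatted capacity of the member disks
    copies: int          # copies of each file this pool keeps on its own disks

    def usable_tb(self) -> float:
        # Every file is stored `copies` times, so usable space is formatted / copies.
        return self.formatted_tb / self.copies


def physical_copies(children: list[Pool]) -> int:
    """Physical copies of one file when a parent pool puts one copy on each child.

    Assumes the parent's duplication count equals len(children), as with N: over L: and M:.
    """
    return sum(child.copies for child in children)


nvme = Pool("L:", formatted_tb=7.3, copies=2)
sata = Pool("M:", formatted_tb=3.6, copies=1)  # hypothetical formatted size

print(f"{nvme.name} usable: {nvme.usable_tb():.2f} TB")  # 3.65 TB
print(f"copies per file stored through N: {physical_copies([nvme, sata])}")  # 3
```

Nesting is what makes the 3rd copy "free" in terms of configuration: N: only ever asks for 2 copies, and L:'s own duplication turns its one copy into two.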

-

Thank you! That sounds like exactly what I was looking for. I didn't realize I could include pools within pools, that's fantastic.

-

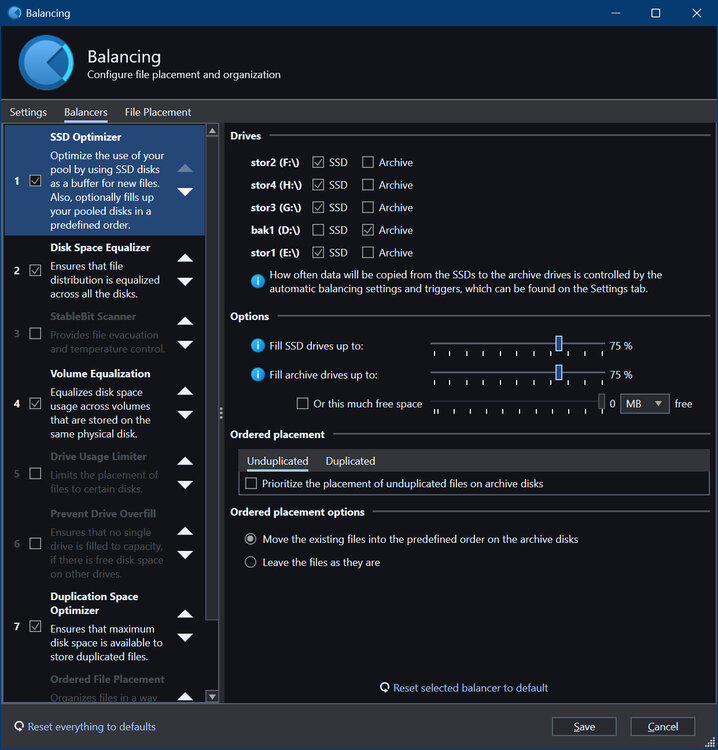

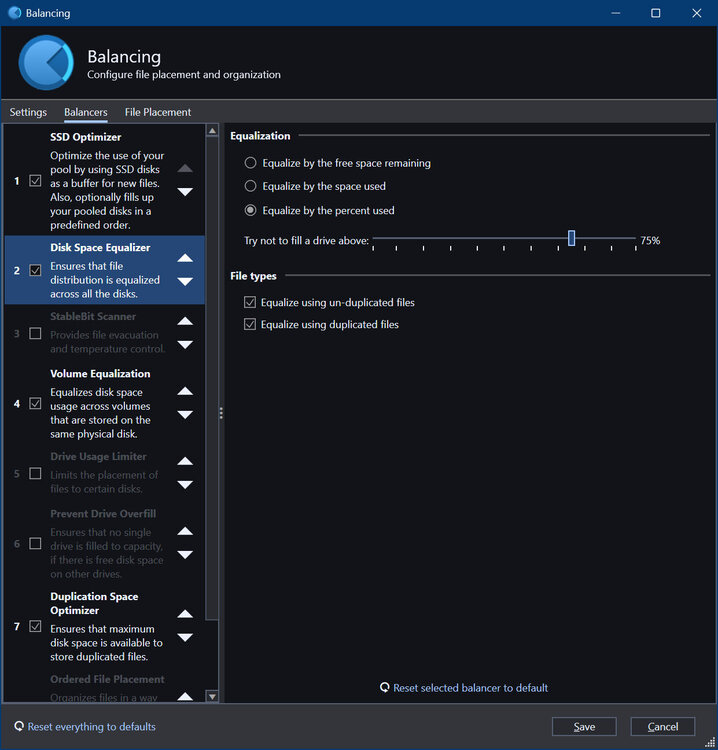

I have 4x 2TB nvme SSDs and 1x 4TB SATA SSD in one pool. Ideally, I'd like all my files to be balanced evenly across the 4x nvme disks, and the duplicates stored on the SATA SSD. Eventually, I plan to add another 1x 4TB SATA SSD to equal the total volume of the 4x nvme disks, but for now that's not necessary for the amount of storage my files are taking up. I've got it mostly working as intended, but for some reason one of the nvme disks is holding an inordinately large amount of data and I can't figure out why or how to get the files to balance out more evenly: I've got my balancing plugins setup as so: Can anyone provide advice on how I can achieve what I'm hoping to do? Thank you!
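For illustration only, here's what "balanced evenly" means in the simplest terms: a toy greedy placement in Python that always puts the next file on the disk currently holding the least data. This is not how DrivePool's balancers (such as the Disk Space Equalizer plugin) are actually implemented, and the function name, disk names and file sizes are made up.

```python
import heapq


def place_files(file_sizes_gb: list[float], disk_names: list[str]) -> dict[str, float]:
    """Toy model: put each file on whichever disk currently holds the least data.

    Hypothetical illustration, not DrivePool's actual balancing algorithm.
    """
    # Min-heap of (used_gb, disk_name); the least-used disk is always on top.
    heap = [(0.0, name) for name in disk_names]
    heapq.heapify(heap)
    # Placing the largest files first tends to tighten the final balance.
    for size in sorted(file_sizes_gb, reverse=True):
        used, name = heapq.heappop(heap)
        heapq.heappush(heap, (used + size, name))
    return {name: used for used, name in heap}


usage = place_files([120, 80, 60, 40, 40, 30, 20, 10], ["nvme1", "nvme2", "nvme3", "nvme4"])
print(usage)
```

With this greedy rule, the gap between the fullest and emptiest disk never exceeds the size of the single largest file, so in a toy model like this a few very large files can still leave one disk noticeably fuller than the others.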