xliv

Members, 7 posts

-

The more I think about it, the more I think I should have pointed SnapRAID at the PoolPart folders rather than at the drive roots. That way, moving the content of one drive to a new one would have left the parity untouched. Of course, it protects only the content of the pool (nothing outside the PoolPart folder is protected), but in my case I don't mind, as my drives are used purely by the pool. Would there be a problem with that? For example, the snapraid.content files are at the root of each drive, so outside the PoolPart folder, and therefore not part of the area protected by parity. Are those files skipped anyway when computing parity? I would assume so, since they change while the parity is being computed during the sync command.
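For illustration, here is a minimal Python sketch of the config change being considered: rewriting one `data` line of a SnapRAID config so that it points at a PoolPart folder instead of the drive root. The drive letters, the `PoolPart.EXAMPLE` folder name and the `repoint_data_disk` helper are all made up for the example. It only edits text, and says nothing about how SnapRAID treats the content files.

```python
def repoint_data_disk(conf_text, disk_name, new_dir):
    """Return conf_text with the `data <disk_name> ...` line pointing at new_dir.

    Assumes the usual `data NAME DIR` line format of a SnapRAID config.
    Every other line (parity, content, comments) is left untouched.
    """
    out = []
    replaced = False
    for line in conf_text.splitlines():
        parts = line.split(None, 2)  # directive, disk name, rest of the line
        if len(parts) == 3 and parts[0] == "data" and parts[1] == disk_name:
            out.append(f"data {disk_name} {new_dir}")
            replaced = True
        else:
            out.append(line)
    if not replaced:
        raise ValueError(f"no 'data {disk_name}' line found")
    return "\n".join(out) + "\n"


# Hypothetical config: drive letters and file locations are placeholders.
example_conf = "\n".join([
    "parity P:\\snapraid.parity",
    "content D:\\snapraid.content",
    "content E:\\snapraid.content",
    "data d1 D:\\",
    "data d2 E:\\",
])

if __name__ == "__main__":
    print(repoint_data_disk(example_conf, "d2", "E:\\PoolPart.EXAMPLE"))
```

Keeping the disk name (`d2`) and changing only the directory is the point of the sketch: the name is what ties the disk to the existing parity data.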

-

I tried the following:

1. Add the new drive.
2. Robocopy the data from the PoolPart folder of the old drive to the new drive.
3. Insert the drive in DrivePool, then stop the service to move the files to the new PoolPart folder, restart the service and remeasure the pool.
4. Edit the SnapRAID config to replace the old drive with the new one.
5. Run the diff command => massive "copy" and "remove" of all files.
6. Run sync (with the --force-empty option, as otherwise sync does not work) => takes 20h for 5TB.

There's got to be a better way... I was thinking about putting the PoolPart folder instead of the root of the drives in the SnapRAID config. Would that work?

There is a trace: "WARNING! UUID is changed for disks: 'd2'. Not using inodes to detect move operations." Maybe I should use the --force-uuid option instead of, or together with, --force-empty. I'll try next time.

I know this is not the SnapRAID forum, but I would have thought more people used both at the same time (they complement each other very well).
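The copy, diff and sync steps from this post can be written down as a small Python sketch that only builds the command lines, without running anything. The paths are placeholders and the `replacement_plan` helper is hypothetical. The robocopy switches (`/E`, `/COPY:DAT`, `/DCOPY:T`) are my assumption of a reasonable choice for keeping file and directory timestamps. Whether `--force-uuid` actually avoids the long sync is untested, so it is only an optional flag here.

```python
def replacement_plan(old_poolpart, new_poolpart, force_empty=True, force_uuid=False):
    """Build (but do not run) the commands for a robocopy + SnapRAID drive swap.

    Returns a list of argument lists, in the order they would be executed.
    """
    robocopy = [
        "robocopy", old_poolpart, new_poolpart,
        "/E",         # copy subdirectories, including empty ones
        "/COPY:DAT",  # copy data, attributes and timestamps of files
        "/DCOPY:T",   # copy directory timestamps as well
    ]
    diff = ["snapraid", "diff"]  # review what SnapRAID thinks has changed
    sync = ["snapraid", "sync"]
    if force_empty:
        sync.append("--force-empty")  # the old disk now looks empty to SnapRAID
    if force_uuid:
        sync.append("--force-uuid")   # untested idea from the post above
    return [robocopy, diff, sync]


if __name__ == "__main__":
    # Placeholder paths; real PoolPart folder names differ per drive.
    for cmd in replacement_plan("E:\\PoolPart.OLD", "F:\\PoolPart.NEW", force_uuid=True):
        print(" ".join(cmd))
```

The SnapRAID config edit (step 4) sits between the robocopy and the diff, and is deliberately left out of the plan because it is a manual edit.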

-

Many thanks! OK, I'll do this next time, and will report back here on whether (and how much) the fact that all paths have changed (PoolPartA to PoolPartB) impacts SnapRAID. It's actually probably very similar to what I've done, except that instead of using robocopy, I let DrivePool handle the move, since my config was set to make sure that the data would be moved only to the new drive. But maybe robocopy does a better job of keeping the file attributes the same.

-

Hi, I was looking for a specific topic or wiki page with tips & tricks on how best to use DrivePool + SnapRAID together. Today, my question is: how do I replace a drive with a larger one?

"DrivePool way": you simply add the new drive and remove the old drive from the pool; the rest is done automatically. The problem with SnapRAID is that if, by chance (or with the right balancing config), the content of the removed drive ends up on the new drive, the paths to each file will have changed, so SnapRAID will report a massive deletion / creation of files, and the sync command may take 12-24h to run...

"Snapraid way": copy (with robocopy) the content of the old drive to the new one, change the config to use the new drive, run a diff and fix potential "changes", and once everything seems OK, run a sync (which should be immediate). The problem here is that you'll end up with the old PoolPart folder, which I guess DrivePool will not cope with.

Is there a preferred way to do this? Right now I did it the "DrivePool way", and the sync command reports 12h (I replaced a 4TB drive with a 12TB one, so moved approx. 3.5TB of data).

What I would loooooooove would be a deep integration with SnapRAID, and potentially (why not) a SnapRAID UI in DrivePool...

-

Hi, I've just finished setting up my pool with a 500GB SSD as a cache, and I have to say, it's pretty awesome! I was wondering, though, about the best configuration: there are many parameters, and it's not obvious how each one works or how they interact with each other. Apologies if this has already been answered somewhere, or seems obvious in the user manual; I'm still a DrivePool newbie. I want the balancer to work only for the SSD cache, moving the files out as quickly as possible without impacting other activities on the pool. However, I do not want the balancer to move files from one archive disk to another (my data is pretty static, and I don't use duplication). What's the best way to configure that?

-

So I had my first issue with DrivePool. I noticed this morning that one of my HDDs had a SMART status of 53% in Hard Disk Sentinel (StableBit Scanner did not detect it since the drive is connected through a SAS controller and, as I understand it, Scanner cannot get SMART data through SAS). So I did what I thought was recommended: remove the drive from the pool.

Attempt 1: I started the process, ticking all 3 options because I urgently needed it to complete without blocking on the first error (btw, that should be the default I guess?). It started, but after a few minutes I decided to empty the recycle bin, to make sure that deleted files were not moved over. The process was interrupted with an error. The pool started re-measuring. I rebooted. I don't know if the interruption was caused by the fact that I emptied the recycle bin.

Attempt 2: The drive was now showing 52% in HD Sentinel. I launched the process again. But as it started and got to 1.5% after 10-15 min, I opened HD Sentinel and clicked on "Repeat Test". The process was again interrupted with an error. The pool started re-measuring again, but suddenly indicated that the drive was missing. No idea if the SMART status test interrupted the process.

Attempt 3: The drive was now showing 48% in HD Sentinel. I was then determined to run the process without any other interaction. I clicked on remove again, but after 30 min the status was showing 0.0%, and "Write to pool" was at 0MB/s. I interrupted and rebooted.

Attempt 4: I decided to do it manually, as I don't trust the process anymore. So I did the reverse of the seeding guide: closed the DrivePool interface, stopped the service (stopped Scanner as well), and went to the failing drive and into its PoolPart folder. I'm now moving files out of the pool, and so far it works at a speed of 100MB/s. The status shows 43%, so I hope that I'll be able to move the files out before the disk burns. I'll then disconnect the drive, reboot and indicate to DrivePool that the disk is gone. Moving the files back to the pool will be kind of painful, as I'll have to merge the directory structure.

So I guess my questions are:

1. Is what I faced normal?
2. Is there a recommendation when doing a "remove drive" (shut down all other services, shut down access to the pool)?
3. Any reason why on my third attempt the process did not even start?
4. Why is SAS not supported? It really means that the Scanner piece is pretty useless for me. I guess a lot of us are using SAS expander cards, so this is pretty key to making Scanner a good alternative to other, more specialised (but not integrated with DrivePool) solutions.
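The "merge directory structure" step mentioned in attempt 4 could be scripted rather than done by hand. Below is a minimal Python sketch of a merge-move. It recreates the source folder tree under the destination, moves files that do not exist there yet, and leaves conflicting files where they are for manual review. The `merge_move` and `demo` helpers and all folder names are hypothetical, and nothing here talks to DrivePool itself.

```python
import os
import shutil
import tempfile


def merge_move(src_root, dst_root):
    """Move every file under src_root into the same relative path under dst_root.

    Existing destination files are never overwritten; their sources are
    returned in `skipped` so they can be compared by hand.
    """
    moved, skipped = [], []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel = os.path.relpath(dirpath, src_root)
        target_dir = os.path.normpath(os.path.join(dst_root, rel))
        os.makedirs(target_dir, exist_ok=True)
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.join(target_dir, name)
            if os.path.exists(dst):
                skipped.append(src)  # conflict: keep both copies in place
            else:
                shutil.move(src, dst)
                moved.append(dst)
    return moved, skipped


def demo():
    """Build two throwaway trees and merge one into the other."""
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "rescued")  # files saved from the failing drive
        dst = os.path.join(tmp, "pool")     # stand-in for the pool drive
        os.makedirs(os.path.join(src, "Movies"))
        os.makedirs(os.path.join(dst, "Movies"))
        for path in (os.path.join(src, "Movies", "a.mkv"),
                     os.path.join(src, "Movies", "b.mkv"),
                     os.path.join(dst, "Movies", "b.mkv")):
            with open(path, "w") as fh:
                fh.write(path)
        moved, skipped = merge_move(src, dst)
        return ([os.path.relpath(p, dst) for p in moved],
                [os.path.relpath(p, src) for p in skipped])


if __name__ == "__main__":
    print(demo())
```

Skipping conflicts instead of overwriting is a deliberate choice: on a failing disk, a file that already exists in the pool may be the healthier copy.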

-

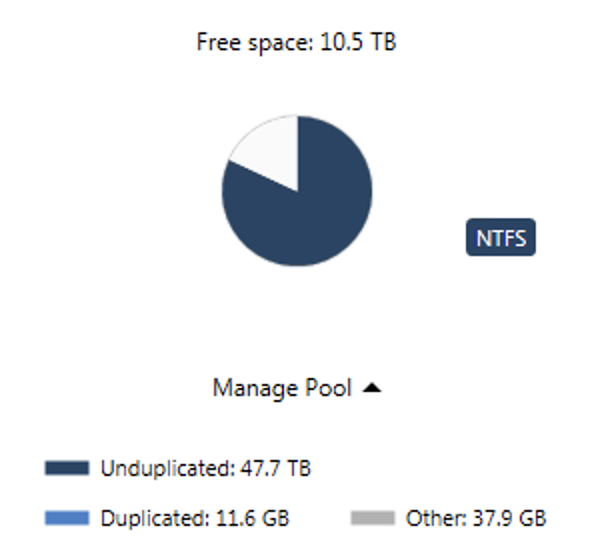

Hi, following my tests, I went ahead and acquired a license. I followed the seeding guide to migrate data from my FlexRAID pool, and got it running without much trouble.

I have a question on file duplication. I did not enable any protection on my pool, but initially had warnings about duplicated files which were different. What happened is that, while I was running FlexRAID, a drive went missing, and one of my applications re-created some of the missing files (media description files coming from automated scraping). When I reconnected the missing drive, there were suddenly 2 copies of those files, and some did differ. I did not notice this, as FlexRAID apparently did not care about it. I ticked the box to let DrivePool automatically resolve this conflict by choosing the newest copy of the file, and have no more warnings. However, my pool is now reporting:

1. 11.6GB of duplicated files
2. 15.8GB of "other"

Is there a way to understand what those are?

-

xliv joined the community

-

Hi all, I'm new here, currently testing DrivePool as an alternative to FlexRAID, which unfortunately is not supported anymore. I was about to ask the most basic question about why my pool was not showing the data that was already on the drives, but found the answer here. So I guess I'll follow the recommendation about seeding the pool.

I'm moving off FlexRAID with a pool of 58TB, made of 10 local hard drives (3 to 10TB), 48TB already full. I was not using FlexRAID parity, but would like to do that at some point. I have a couple of questions:

1. The drive letter for the pool seems to be automatically assigned by Windows. Is there a way to change it within DrivePool, or should I do it in Windows?
2. Are there any other recommendations from users who have migrated their pool from FlexRAID to DrivePool? Should I clean up anything on the drives before using them in DrivePool?
3. About having a small SSD as a landing drive: is there a recommendation about its size? I have a lot of Blu-ray rips, so quite often I move 50GB+ files to the pool.
4. My pool is almost exclusively dedicated to exposing network shares. Do I create those shares directly in Windows, or is there a place in the DrivePool GUI that I should use to do so?
5. Do you recommend activating the Network I/O boost?
6. When my PC reboots, will the pool be started before the shared drives reconnect?
7. I won't be using duplication. Any recommendation about a good parity redundancy software? SnapRAID looks fine, but it is snapshot only; I hoped to find a real-time parity platform.

Many thanks in advance