Malgos

Posts posted by Malgos

Since my workaround still has a lot of overhead, I'm switching to another strategy.

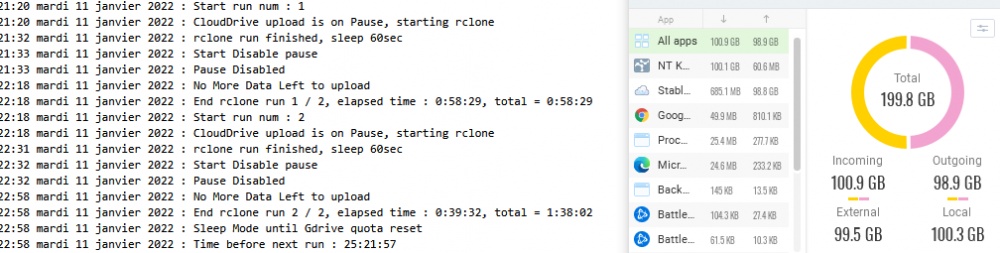

It's crucial for me to reduce upload overhead so I can maximize the amount of data I upload to Google Drive per day. I have a 50 TB NAS to back up. With my first method it would take around 440 days, and with my workaround around 105 days. To go below that, I have to do it another way. So I made an AHK script (not fully tested yet) that pauses CloudDrive, feeds it 50 GB of data, unpauses it, waits for the upload to finish, and repeats until 700 GB have been sent. Then it waits 26 hours and starts again.

```autohotkey
; Upload budget per 26-hour cycle and size of each batch fed to CloudDrive (GB)
max_gb_transfert_day := 700
gb_buffer_size := 50
nb_run := Floor(max_gb_transfert_day / gb_buffer_size)

command = rclone.exe copy "\\MyNas\MyData" "D:\MyData" --max-transfer %gb_buffer_size%G -P
rclonedir = C:\rclone-v1.57.0-windows-amd64\

WinGet, pc_wID, ID, ahk_exe CloudDrive.UI.exe
; Without this check the pause loops below would spin forever
if (!pc_wID) {
    MsgBox, CloudDrive.UI.exe window not found.
    ExitApp
}

while(1){
    StartTime := A_TickCount
    NextDay := StartTime + (26*60*60*1000)
    current_run := 1
    while(current_run <= nb_run){
        StartRunTime := A_TickCount
        Debug("Start run num : " . current_run)
        ; Enable pause
        While(is_pause(pc_wID) == false){
            clickpause(pc_wID)
            Sleep, 5000
        }
        Debug("CloudDrive upload is on Pause, starting rclone")
        RunWait, %command%, %rclonedir%
        Sleep, 300000  ; wait 5 minutes after rclone exits
        Debug("rclone run finished")
        ; Disable pause
        While(is_pause(pc_wID)){
            clickpause(pc_wID)
            Sleep, 5000
        }
        Debug("Pause Disabled")
        ; Wait until there is no data left to upload, resuming if the upload got paused again
        While(is_datatoupload(pc_wID)){
            While(is_pause(pc_wID)){
                clickpause(pc_wID)
                Sleep, 5000
            }
            Sleep, 5000
        }
        Debug("No More Data Left to upload")
        Sleep, 2000
        Debug("End rclone run " . current_run . ", elapsed time : " . FormatSeconds(Floor((A_TickCount - StartRunTime) / 1000)))
        current_run := current_run + 1
    }
    Debug("Sleep Mode until Gdrive quota reset")
    while(A_TickCount < NextDay){
        Debug("Time before next run : " . FormatSeconds(Floor((NextDay - A_TickCount) / 1000)))
        ; Sleep 1 h if more than 90 min remain, 5 min if more than 20 min remain, else 20 s
        TimeSleep := 20*1000
        if( ((NextDay - A_TickCount)/1000) > 20*60){
            TimeSleep := 5*60*1000
        }
        if( ((NextDay - A_TickCount)/1000) > 90*60){
            TimeSleep := 60*60*1000
        }
        Sleep, TimeSleep
    }
}

; Print a timestamped line to stdout
Debug(str){
    FormatTime, TimeString
    FileAppend %TimeString% : %str%`n, *
}

FormatSeconds(NumberOfSeconds)  ; Convert the specified number of seconds to hh:mm:ss format.
{
    time := 19990101  ; *Midnight* of an arbitrary date.
    time += NumberOfSeconds, seconds
    FormatTime, mmss, %time%, mm:ss
    return NumberOfSeconds//3600 ":" mmss
}

; Click the Pause button in CloudDrive.UI to pause/resume
clickpause(pc_wID){
    wTitle = ahk_id %pc_wID%
    If WinExist(wTitle) {
        WinActivate
        MouseMove, 319, 624
        Click
        MouseMove, 100, 100
    }
}

; Check whether Pause is ON in CloudDrive.UI
is_pause(pc_wID){
    colorpause := PixelColorSimple(pc_wID, 319, 624)
    if(colorpause == 0xC8D2DD){
        return true
    }
    return false
}

; Check whether there is data left to upload in CloudDrive.UI
is_datatoupload(pc_wID){
    colordata := PixelColorSimple(pc_wID, 47, 546)
    if(colordata == 0x467DC9){
        return true
    }
    colordata := PixelColorSimple(pc_wID, 184, 546)
    if(colordata == 0x467DC9){
        return true
    }
    colordata := PixelColorSimple(pc_wID, 59, 569)
    if(colordata == 0x467DC9){
        return true
    }
    colordata := PixelColorSimple(pc_wID, 184, 569)
    if(colordata == 0x467DC9){
        return true
    }
    return false
}

; Coordinates are relative to the window's client area
PixelColorSimple(pc_wID, pc_x, pc_y)
{
    if (pc_wID) {
        ; "Ptr" keeps window/DC handles intact on 64-bit AutoHotkey
        pc_hDC := DllCall("GetDC", "Ptr", pc_wID, "Ptr")
        pc_fmtI := A_FormatInteger
        SetFormat, IntegerFast, Hex
        pc_c := DllCall("GetPixel", "Ptr", pc_hDC, "Int", pc_x, "Int", pc_y, "UInt")
        ; GetPixel returns 0xBBGGRR; swap to 0xRRGGBB
        pc_c := pc_c >> 16 & 0xff | pc_c & 0xff00 | (pc_c & 0xff) << 16
        pc_c .= ""
        SetFormat, IntegerFast, %pc_fmtI%
        DllCall("ReleaseDC", "Ptr", pc_wID, "Ptr", pc_hDC)
        return pc_c
    }
}
```
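To sanity-check the schedule the script implements, here is a small Python sketch of its arithmetic. It mirrors `nb_run` and the adaptive wait loop, and estimates how long 50 TB would take. It assumes decimal units (1 TB = 1000 GB), zero upload overhead, and that each batch of runs finishes inside the 26-hour window, so the day count is a lower bound rather than a prediction. The function names are illustrative, not part of the script.

```python
import math

# Values taken from the AHK script; decimal units are an assumption.
MAX_GB_PER_CYCLE = 700
BUFFER_GB = 50
CYCLE_HOURS = 26
NAS_TB = 50


def runs_per_cycle(max_gb: int, buffer_gb: int) -> int:
    """Equivalent of nb_run := Floor(max_gb_transfert_day / gb_buffer_size)."""
    return max_gb // buffer_gb


def days_to_back_up(total_gb: float, gb_per_cycle: float, cycle_hours: float) -> float:
    """Days needed if every cycle uploads gb_per_cycle with no overhead."""
    cycles = math.ceil(total_gb / gb_per_cycle)
    return cycles * cycle_hours / 24


def sleep_interval_ms(remaining_ms: int) -> int:
    """Same rule as the script's wait loop: coarse sleeps far from the reset, short ones near it."""
    remaining_s = remaining_ms / 1000
    if remaining_s > 90 * 60:
        return 60 * 60 * 1000
    if remaining_s > 20 * 60:
        return 5 * 60 * 1000
    return 20 * 1000


if __name__ == "__main__":
    print(runs_per_cycle(MAX_GB_PER_CYCLE, BUFFER_GB))                   # 14
    print(days_to_back_up(NAS_TB * 1000, MAX_GB_PER_CYCLE, CYCLE_HOURS))  # 78.0
```

The 26-hour cycle (rather than 24) costs about two hours per cycle, which is why 72 cycles come out to 78 days instead of 72.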

I'm still testing it. I've just run it with 100 GB of data (using a 50 GB buffer) and got no upload overhead at all.

Still, it sucks to have to resort to this kind of dirty trick when there should be a built-in option for it.

What is the point of wasting upload bandwidth on files that are still incomplete, especially when one of the most widely used cloud hosts has daily limits?

-

After some thought, I found a workaround, but it's nasty.

Since the first batch of data I need to transfer is simply on a NAS shared over SMB, I can replace rclone with rsync (rsync can be installed on Windows on top of Git). Instead of:

```
rclone copy "\\MyNas\MyData" "D:\MyData" --bwlimit 7M --rc --rc-no-auth -P
```

I run:

```
rsync -a --progress --size-only --bwlimit=7M --temp-dir=/c/temp "/w/MyData/" /d/MyData
```

That way, data is first written to a temp directory. But this approach has drawbacks: rsync doesn't support multi-threaded uploads; you can't use the `--rc` flag as with rclone to change the bandwidth on the fly (I had an external script that reduced the bandwidth to 10K whenever the free space left on the device dropped below a certain amount); and finally, this strategy will hit a wall when I want to upload another drive that is not on a local SMB network but stored on a Google Drive account (maybe by using an rclone mount before running rsync, but that's so dirty...).
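The external bandwidth script mentioned above isn't shown, so here is a hypothetical reconstruction in Python of that kind of watchdog. It relies on rclone's remote-control `core/bwlimit` command, which is reachable only because the rclone command runs with `--rc --rc-no-auth` (default address `localhost:5572`). The drive letter, the 50 GiB threshold, and all function names are assumptions for illustration, not the author's values.

```python
import json
import shutil
import urllib.request

# Hypothetical settings: adjust to your own setup.
CACHE_DRIVE = "D:\\"               # drive holding the CloudDrive local cache (assumption)
MIN_FREE_BYTES = 50 * 1024**3      # throttle below 50 GiB free (assumption)
NORMAL_RATE = "7M"                 # same value as --bwlimit in the rclone command
THROTTLED_RATE = "10K"             # the near-stop rate described in the post
RC_URL = "http://localhost:5572/core/bwlimit"  # rclone's default --rc address


def choose_rate(free_bytes: int, min_free_bytes: int = MIN_FREE_BYTES) -> str:
    """Pick the rclone bandwidth limit for the current amount of free space."""
    return THROTTLED_RATE if free_bytes < min_free_bytes else NORMAL_RATE


def build_bwlimit_request(rate: str, url: str = RC_URL) -> urllib.request.Request:
    """Build a JSON POST for rclone's core/bwlimit remote-control command."""
    body = json.dumps({"rate": rate}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def apply_limit(drive: str = CACHE_DRIVE) -> str:
    """Check free space on the drive and push the matching limit to the running rclone."""
    free = shutil.disk_usage(drive).free
    rate = choose_rate(free)
    with urllib.request.urlopen(build_bwlimit_request(rate), timeout=10) as resp:
        resp.read()
    return rate
```

Calling `apply_limit()` every minute or so (from a loop or Windows Task Scheduler) would approximate the behavior described. rsync has no equivalent control channel, so its `--bwlimit` is fixed for the whole run.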

Edit: Even with this strategy, for 14 GB of data pulled from my NAS, CloudDrive still uploads around 20 GB (with the upload threshold at its maximum value of 10 MB).

-

I have a few TB to transfer from an external source to CloudDrive, and I have little free space available on my local hard drive.

So I used `rclone copy` from my external server to my Google Drive-backed CloudDrive disk, with a bandwidth limit of around 7 MB/s to avoid a quota ban from Google.
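For context on the 7 MB/s figure: rclone's `--bwlimit 7M` means 7 MiB/s, and at that rate a full day moves roughly 634 GB, below Google Drive's 750 GB per-user daily upload limit. The sketch below is plain arithmetic; the 750 GB constant is Google's published limit and the function names are illustrative. It suggests that rclone's own rate alone should not trip the quota, so any extra traffic CloudDrive generates is what eats the margin.

```python
GDRIVE_DAILY_UPLOAD_GB = 750  # Google Drive per-user daily upload limit, decimal GB
SECONDS_PER_DAY = 86_400


def daily_volume_gb(rate_mib_per_s: float) -> float:
    """Decimal GB transferred in 24 h at a constant rate given in MiB/s."""
    return rate_mib_per_s * 1024**2 * SECONDS_PER_DAY / 1000**3


def max_rate_mib_per_s(daily_gb: float = GDRIVE_DAILY_UPLOAD_GB) -> float:
    """Highest constant rate (MiB/s) that stays within daily_gb over 24 h."""
    return daily_gb * 1000**3 / SECONDS_PER_DAY / 1024**2


if __name__ == "__main__":
    print(f"7 MiB/s for 24 h: {daily_volume_gb(7):.1f} GB")
    print(f"max rate for {GDRIVE_DAILY_UPLOAD_GB} GB/day: {max_rate_mib_per_s():.2f} MiB/s")
```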

My upload speed is fast enough for CloudDrive to keep my local buffer at an acceptable level. But I was still getting banned, so I ran a test:

I transferred a 1 GB test file (1Gb.file) to my CloudDrive at full speed while monitoring my bandwidth, and saw that CloudDrive uploaded around 1.2 GB.

Next, I transferred 1Gb.file to my CloudDrive again, but with `--bwlimit 5M`. And that's the issue: CloudDrive uploaded around 6 GB.

Is there an option or something to avoid this useless upload and wait for the file to be complete before sending it to Google Drive?
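Putting the measurements reported in these posts side by side makes the cost concrete. This Python sketch computes the upload overhead for each test and, assuming a 700 GB daily budget (the figure the AHK script uses) and 24-hour days, how long 50 TB would take at each overhead factor. The results (about 429 and 102 days) land in the same range as the ~440 and ~105 days quoted in the first post; the function names are illustrative.

```python
def overhead_pct(written_gb: float, uploaded_gb: float) -> float:
    """Extra upload traffic as a percentage of the data actually written."""
    return (uploaded_gb - written_gb) / written_gb * 100


def days_needed(total_gb: float, daily_budget_gb: float,
                written_gb: float, uploaded_gb: float) -> float:
    """Days to push total_gb of real data when each written GB costs uploaded/written GB."""
    useful_per_day = daily_budget_gb * written_gb / uploaded_gb
    return total_gb / useful_per_day


# (written, uploaded) in GB, as reported in the posts
measurements = {
    "1 GB at full speed": (1.0, 1.2),
    "1 GB with --bwlimit 5M": (1.0, 6.0),
    "14 GB via rsync, 10 MB threshold": (14.0, 20.0),
}

if __name__ == "__main__":
    for label, (written, uploaded) in measurements.items():
        pct = overhead_pct(written, uploaded)
        days = days_needed(50_000, 700, written, uploaded)
        print(f"{label}: {pct:.0f}% overhead, ~{days:.0f} days for 50 TB")
```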

I saw the upload threshold setting, but it's useless here since it can't go above 10 MB, and I would need something like 30 GB. And from my point of view, the threshold makes no sense: instead of waiting a few minutes after the data was first written, there should be an option to wait a few minutes AFTER the data stops changing (for example, if a file hasn't grown for one minute, it's good to go and can start uploading).
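The "wait until the data stops changing" rule proposed above is a simple settle-time check. Here is a minimal Python sketch of it, assuming something polls each file's size periodically (for example with `os.stat(path).st_size`). `SettleTracker` is a hypothetical name, and this is not a CloudDrive feature or API; it only decides when a file looks complete.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class SettleTracker:
    """Mark a file ready once its size has not changed for quiet_seconds."""
    quiet_seconds: float = 60.0
    _last_size: Dict[str, int] = field(default_factory=dict, init=False, repr=False)
    _last_change: Dict[str, float] = field(default_factory=dict, init=False, repr=False)

    def observe(self, path: str, size: int, now: Optional[float] = None) -> None:
        """Record a size sample; the settle timer restarts only when the size differs."""
        now = time.monotonic() if now is None else now
        if self._last_size.get(path) != size:
            self._last_size[path] = size
            self._last_change[path] = now

    def ready(self, path: str, now: Optional[float] = None) -> bool:
        """True if the file was seen and has been unchanged for at least quiet_seconds."""
        now = time.monotonic() if now is None else now
        changed = self._last_change.get(path)
        return changed is not None and now - changed >= self.quiet_seconds
```

With a one-minute window, a file written at 5 MB/s keeps restarting its timer and is only released once rclone finishes it, which is exactly the behavior the time-since-first-write threshold cannot express.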

Or, as a workaround, maybe something like an API to pause/resume uploads from an external script?

-

Can CloudDrive work with a read-only (over-quota) Google Drive?

If you create a cloud drive on Google Drive, and your Google Drive account goes over quota so you can't write new files (read-only), can you still mount and use your cloud drive?