Everything posted by Julius

-

That still doesn't explain why the entire pool needs to be checked. I disconnected only a small part of the pool, and I'm assuming the pooling software knows what is (or was) on that part, the disk that got temporarily removed for a few seconds; otherwise using a drive pool would not make any sense. So the question remains: Why does it have to actually *read* the entire pool of data from disks that don't even have any of the data that was on the removed disk?

-

I understand that it needs to at least roughly check *that* disk, but it checks the entire pool again (reads all disks). That really seems overkill. It's 2019, aren't we at a level (of AI) where the software can grasp that if one disk was disconnected for less than, say, 5 minutes, it should wonder whether that was intended, and if it can't tell, just decide it wasn't? And then only check (or prompt to check) what changed on *that* disk alone?

-

I still feel it's doing the "Measuring..." way too soon and too often. Just now I accidentally removed a drive, put it back in immediately, and now it has decided to measure the entire pool (32 terabytes of data) again. This is not good for the drives: a lot of extra wear and tear, a lot of extra reads (and probably writes as well), and a waste of time and resources. Perhaps it would be a good idea to make the trigger for "Measuring..." user configurable? To me this comes across as serious overkill; the fact that one pool member got disconnected briefly does not warrant yet another scan of the entire pool. There were not even any reads or writes going on in the pool while the disconnection occurred.

-

If it were file corruption, scandisk would have solved it. It didn't find any corruption. Memory/RAM is all fine. Upon closer look, the files that DrivePool had trouble with could be moved within the pool, but not deleted (also not manually, and not from any pool member outside of StableBit control). Really strange. They were old files (pre-2000), from really old HDD sources that I copied to the pool long ago. Apparently, according to Microsoft, they had a permission order problem. None of the solutions MS offered solved it, but using LockHunter on the folder they were in did fix it! Finally. I had many files with this exact issue, and LockHunter cured the pool for me. Cute little tool, and free!

-

I ran chkdsk /f /r /x on all drives in the pool. It didn't find anything bad. However, now Pool Organization keeps halting with warnings of "mismatching file parts", and DrivePool asks me if I want to automatically delete the older file parts or manually delete the incorrect ones. What surprises me is that DrivePool doesn't see which of the file versions is broken, since thus far the ones listed under the halted error's details all clearly have only 1 version that's correct, namely the one that has a correct CRC32. All were old rar files; apparently some copies on some disk were not OK, because I could not even open them. I just found that perhaps some older archives had attempts at virus-planting in them from a really old event I don't even remember. DrivePool doesn't recognize it as such though. I'll try a systemwide archive scan using ClamWin and see if that fixes the issue.
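For anyone wanting to check by hand which copy of a duplicated file is the intact one, a minimal Python sketch is below. It only computes the CRC32 of each copy and compares it with a known-good value (for example the one stored inside the archive). The function names and the example paths are hypothetical, made up for illustration; this is not how DrivePool itself decides anything.

```python
import zlib


def crc32_of_file(path, chunk_size=1024 * 1024):
    """Return the CRC32 of a file as an 8-digit hex string, reading in chunks."""
    crc = 0
    with open(path, "rb") as handle:
        while True:
            chunk = handle.read(chunk_size)
            if not chunk:
                break
            # Feed the running value back in so large files never sit fully in RAM.
            crc = zlib.crc32(chunk, crc)
    return f"{crc & 0xFFFFFFFF:08x}"


def find_good_copies(paths, expected_crc32):
    """Split copies into (matching, mismatching) lists against an expected CRC32."""
    expected = expected_crc32.lower()
    good, bad = [], []
    for path in paths:
        (good if crc32_of_file(path) == expected else bad).append(str(path))
    return good, bad


if __name__ == "__main__":
    # Hypothetical paths: two copies of the same archive on two pool member disks.
    copies = [r"D:\copy1\old.rar", r"E:\copy2\old.rar"]
    # The expected value is an assumption; take it from a source you trust.
    print(find_good_copies(copies, "0d4a1185"))
```

A matching CRC32 only says the bytes equal the ones that produced the reference value; it says nothing about whether the archive contents are clean.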

-

It seems awfully overkill that StableBit DrivePool does 'measuring' whenever I reboot the OS. Can't it just continue where it left off and recall the last state? Is there a config switch I've overlooked, where I can tell it not to rescan all files on all drives at boot time?

-

Like I already wrote: for me, backup policies only exist in order to have data off-site and/or offline. I use my own Nextcloud cluster servers, Syncthing and rsync for that, in several different sync-to-backup setups.

-

I've been an avid (paying) user of SoftPerfect RAM Disk. I wonder if I could set aside some RAM for my DrivePool using that. Any ideas? Generally I have all my user profiles and portable apps on a RAM disk. That gets stored away at shutdown (and at a set interval, like with primo) and re-opens at startup.

-

Again, this is highly exaggerated. I have USB flash drives that have been in use since the early 2000s and that still hold the data that was last written to them, somewhere in 2004. If your USB flash storage medium is of any decent brand, and not written to extensively, it'll hold its data just fine for years on end. They have an endurance limit of a couple of terabytes written, and in my career I have only seen 1 or 2 that gave up on me because they had seen bits written one time too many; that's about 5% of the USB flash media I've ever touched or worked with.

-

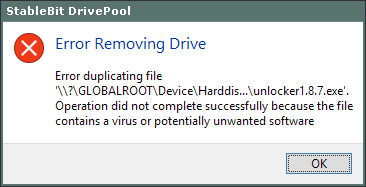

I can't seem to remove a drive from the pool; it keeps coming up with files that are assumed to be 'potentially unwanted software' (which they are not, they are just suspicious executables because they do things on lower levels, the usual Windows Defender false-positive crap). An example dialog window is attached. Please tell me how to get this nonsensical issue resolved. I realize it's probably Windows 10 that's causing this. But since I have already disabled virus scanning and excluded the pooled drive in Windows Defender, it is extra strange that it still avoids moving the content and checks it again. How do I tell Win10 that ALL software that is currently on my PC's storage *is* wanted software? If it were 'potentially unwanted', I would not have it on there. I've been using PCs since the late 80s and have never been 'hit by a virus', so I'd say that counts for me not requiring 3rd-party software to make these decisions for me. If I want to research a virus with a hex editor, I want to be able to do so, and not be annoyed by Windows trying to quarantine the file without interacting with me.

-

That can go two ways: Say you make auto backups every hour, so the backup can potentially be 59 minutes old if you've got a bad day ;-) You're working on something, you save the last bit of work, you work on it some more, then a disk stops working. Phew, you still have the other disk in the pool, where it did write your last work to. A backup would be useless, because it misses all that last work. Plus, restoring from the pool dir on that disk in the pool is a LOT easier than from any backup solution, most likely.

-

Have you considered it may not be the flash-drive that's causing this? Try the USB controller or the motherboard. Especially check if the board gets enough power. I've seen USB drives unjustifiably getting the blame for issues more often than I can count, while a mainboard was broken, or PSU didn't have enough power left for the USB lanes. If that's ruled out, and it still fails, try running SpinRite level 2 scan on the USB-drive (or similar software). May work wonders.

-

Umfriend and amateurphotographer, I don't know what your ages are, but I'm 52 and have been using computers to store data for about 36 years now, so let me just throw this in here: the times when I 'accidentally' deleted a file or files and lost them that way can be counted on one hand. Especially since we have the RECYCLE BIN or Trash can, that problem has disappeared for me, if I ever do accidentally hit delete (in TotalCommander or DxO Photolab or some other program) and confirm the deletion, because the confirmation is still not automatic for me. So, I don't know about you guys, but that barely ever happens, if at all, in life.

What *did* happen a lot more in the past 36 years is storage media suddenly deciding to crap out on me, floppies or drives becoming entirely or partly inaccessible. I even lost 16 GB of stored data when an at the time very expensive Intel SLC SSD decided to stop working a few years back; it went from 1 to 0. No software out there could get it back, not even SpinRite; probably a charged spark borked the controller chip. Luckily it was mostly an OS-installed boot drive, but even so, it warned me not to trust the high MTBF of SSDs too much.

What makes backup policies suck the most for me is the fact that they're almost always dated or too old, not current or not current enough. HDDs or SSDs breaking down almost always happens at moments you least hoped they would, and in ways you never expect. This, for me, is where DrivePool comes in. It has already saved me from losing data several times by having a directly written double or triple copy of what I was working on. These days I have big data passing through my storage media, and it's really comforting to know I can access the functional left-over storage whenever one or more drives stop working.

RAID storage methods for redundancy are horribly overrated, in my experience. Oh, the times I've tried to restore data from broken RAID arrays probably outnumber those for just broken disks, and the time it took, good grief, is incomparable to having StableBit DrivePool create standalone copies. So, backup or not, for me backup policies only exist in order to have data off-site and/or offline.

-

Dear true humans at Covecube, I have a stringent question that has been haunting me for weeks now: I currently have 11 physical HDD/SSD media that are all part of just ONE pool (and hence represented by one drive letter). Assuming I have lots of RAM free, and lots of CPU cores doing very little: does this setup make sense, performance-wise, or is it faster or otherwise smarter to create more than one pool, say, for different directories or using different policies? I have a lot of placement rules active on this one pool, and lots of levels of multiplication set for different folders, so it does not feel like I *need* to separate or divide stuff over more pools. I do notice that Measuring takes a long time. Would it go faster with more than one pool with the same data (virtually) separated? Perhaps this is more or less the same as "how many file streams at once can be copied from one drive to another?", where I often found that the sweet spot was 3 streams, for USB 3 or faster external drives that is. Perhaps with SSDs or virtual drives that sweet spot is a higher number. I'm hoping my heroes at Covecube know best. TIA!
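The "how many file streams at once" part can be measured rather than guessed. Below is a rough Python sketch that copies the same set of files with a given number of parallel streams and times it. The function names and paths are hypothetical, and it says nothing about how DrivePool schedules its own I/O; OS file caching will also skew repeated runs, so treat the numbers as indicative only.

```python
import shutil
import time
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def copy_with_streams(sources, dest_dir, streams):
    """Copy all source files into dest_dir using `streams` parallel copies.

    Returns the elapsed wall-clock time in seconds.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)

    def copy_one(src):
        return shutil.copyfile(src, dest / Path(src).name)

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=streams) as pool:
        # list() waits for every copy and re-raises the first error, if any.
        list(pool.map(copy_one, sources))
    return time.perf_counter() - start


def time_stream_counts(sources, dest_root, stream_counts=(1, 2, 3, 4, 6)):
    """Time the same copy job once per stream count, each into its own folder."""
    timings = {}
    for streams in stream_counts:
        target = Path(dest_root) / f"streams_{streams}"
        timings[streams] = copy_with_streams(sources, target, streams)
    return timings


if __name__ == "__main__":
    # Hypothetical source and destination; point these at real test data.
    files = [p for p in Path(r"D:\testdata").iterdir() if p.is_file()]
    for streams, seconds in time_stream_counts(files, r"E:\copytest").items():
        print(f"{streams} stream(s): {seconds:.1f} s")
```

Threads are enough here because the work is I/O-bound; the copy calls spend their time waiting on the disks, not on the CPU.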
