RottenBit


-

I'm having trouble getting the SSD Optimizer plugin to behave the way I thought it was supposed to. I set up the following with default settings for both the Balancer and the SSD Optimizer.

HybridPool consists of two nested pools as Archive disks and two SSDs as Cache disks, with 2x duplication enabled:

- Cache: Local SSD drive
- Cache: Local SSD drive
- Archive: LocalPool (nested pool)
- Archive: InTheCloudPool (nested pool)

LocalPool (no duplication) consists of 2x local HDD. InTheCloudPool (no duplication) consists of 1x CloudDrive via Google Drive (only one drive for now).

When I copy a new file (500 MB) to HybridPool, the file gets copied to both SSDs. When the copy is done, DrivePool moves only one of the duplicated files from the SSD to the Archive, so the file now sits on one SSD and on one Archive. I want both copies to be moved to the Archive drives, duplicated, leaving the SSDs empty.

Do you see what I'm doing wrong, or is it simply not possible? Let me know if anything isn't clear.

-

Thanks for the response from you and Alex, appreciate it.

-

Did you get a chance to double-check this? Although I agree it makes sense that it wouldn't be able to pin the data. Also, do you know if BitLocker affects uploading? Using Expandable as the cache type, I copied a lot of data, from small files to large files, to the drive, and it wouldn't start uploading until I paused the copying. When I resumed, the upload rate dropped to 0 Mbit/s again. And if I forced the cache to be Fixed or Proportional, it would only start to upload when it reached the cache limit.

-

When BitLocker is enabled on the CloudDrive drive, does metadata pinning work? I've copied hundreds of thousands of files and "Pinned" is using just 336 KB, regardless of cache size. Last time I copied the same files without BitLocker, metadata pinning used a few GB.

-

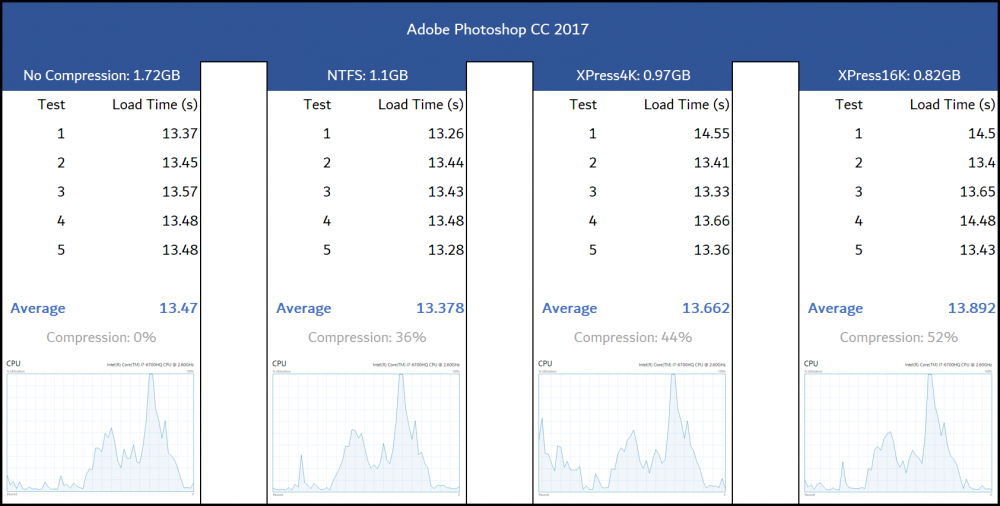

I didn't expect this would be easy; awesome features rarely are. AFAIK you could get an 'easy' start by using the native kernel compression methods in Windows, like MSZIP, XPRESS, XPRESS_HUFF, and LZMS. LZMS is the best (not shown in the picture). For instance, I just compressed my entire C: drive: 242 GB used compressed down to 147 GB. That's 95 GB saved, roughly a 39% size reduction. It didn't take much longer than a raw copy either; sometimes it's actually faster because you don't need to write as much data. And this was achieved with a one-size-fits-all compression method. I guess I'll be doing some more file system development myself.
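For anyone who wants to check the numbers, here is a small Python sketch of the savings arithmetic for the C: drive figures quoted above. The `savings` helper is just an illustrative name, not part of any tool.

```python
def savings(used_gb: float, compressed_gb: float) -> tuple[float, float]:
    """Return (GB saved, percent saved) for a before/after size pair."""
    saved = used_gb - compressed_gb
    return saved, 100.0 * saved / used_gb


# Figures from the post: 242 GB used, 147 GB after compression.
saved_gb, saved_pct = savings(242, 147)
print(f"{saved_gb:.0f} GB saved, {saved_pct:.1f}% smaller")
```

The exact figure is a little under 40%, which is still a large reduction for a single general-purpose method.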

- 7 replies

-

- compression

- feature

-

(and 1 more)

Tagged with:

-

One should always compress before encrypting, because well-encrypted data looks random and no longer compresses. It's a very common combination and widely used.
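A quick way to see why the order matters is the Python sketch below. It uses `os.urandom` as a stand-in for encrypted data (an assumption: good ciphertext is statistically close to random bytes) and `zlib` as a stand-in for whatever compressor would actually be used.

```python
import os
import zlib

# Highly repetitive plaintext, the kind of data that compresses well.
plaintext = b"The quick brown fox jumps over the lazy dog. " * 2000

# Stand-in for ciphertext: random bytes of the same length (assumption,
# no real encryption is performed here).
ciphertext_like = os.urandom(len(plaintext))

compressed_plain = zlib.compress(plaintext, 9)
compressed_cipher = zlib.compress(ciphertext_like, 9)

print("plaintext      :", len(plaintext), "->", len(compressed_plain))
print("ciphertext-like:", len(ciphertext_like), "->", len(compressed_cipher))
```

The plaintext shrinks to a small fraction of its size, while the random data does not shrink at all, so compressing after encryption gains nothing.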


-

Thanks for adding the request. I searched the forums thoroughly on this topic and was actually very surprised that I didn't find any discussion about compression at all. I then searched the web for alternative solutions that offered a virtual drive with native compression, but again I didn't find anything usable. So I actually started working on my own filesystem with this in mind: a virtual drive with built-in compression. But I came to my senses and at least wanted to ask you guys to implement this before going down that dark road myself... For you guys, I believe applying real-time compression to the file streams would be very easy... unless of course there is something else complicating things.


-

Since CloudDrive uses its own filesystem and has full control over it, has it been considered to implement native compression? And by compression, I don't mean NTFS file-level compression; I mean optional native compression in CloudDrive's filesystem. This would reduce the amount of data to transfer over the network at the expense of CPU usage (and in some cases RAM) during compression.

I guess we could have two types of implementation of the compression feature: a simple mode and an advanced mode.

The simple mode would just use one compression method that applies to whatever content is stored, for instance LZMA. It would be nice if the user could at least control the compression level (low / medium / high). And it would be useful if it was an adaptive compression technique, smart enough not to compress already compressed data like compressed pictures and movies.

The advanced mode would give the user the choice to tinker more with the settings. The adaptive compression technique could be extended, for instance with different compression methods for different types of data. For example, a rule could be set up to use PPMd for data containing a lot of text (text files, Word documents, databases etc.), which is highly effective on this kind of data, and other compression methods for other kinds of data.

Automatic compression that magically works behind the scenes in CloudDrive would be a very powerful feature. It would reduce the amount of data to transfer, and thus reduce the cost of both bandwidth and cloud storage, since less data is stored. It has a cost of course: depending on the compression method used, CloudDrive would require more CPU and sometimes more RAM during file transfer. The time to download / upload data would be reduced, but the time to compress and decompress would be increased. Giving the user the choice to enable compression would be very nice, and letting power users control even more settings would be the cherry on top.

So I'm curious: is this something you have considered? Is it something that users want? Needless to say, I would like it a lot. I believe compression, encryption and cloud storage go hand in hand.
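To make the "simple mode" idea a bit more concrete, here is a minimal Python sketch of adaptive per-chunk compression. Everything in it is hypothetical: the one-byte tags, the `pack_chunk` / `unpack_chunk` names and the mapping of low / medium / high to LZMA presets are my own assumptions, and this says nothing about how CloudDrive actually stores its chunks.

```python
import lzma
import os

# Hypothetical one-byte tags marking how a chunk was stored.
TAG_RAW = b"\x00"
TAG_LZMA = b"\x01"

# Assumed mapping of user-facing levels to LZMA presets.
PRESETS = {"low": 1, "medium": 6, "high": 9}


def pack_chunk(chunk: bytes, level: str = "medium") -> bytes:
    """Compress a chunk, but keep it raw if compression does not help."""
    candidate = lzma.compress(chunk, preset=PRESETS[level])
    if len(candidate) < len(chunk):
        return TAG_LZMA + candidate
    # Already-compressed data (pictures, movies) ends up here.
    return TAG_RAW + chunk


def unpack_chunk(stored: bytes) -> bytes:
    """Reverse pack_chunk using the leading tag byte."""
    tag, body = stored[:1], stored[1:]
    return lzma.decompress(body) if tag == TAG_LZMA else body


text_chunk = b"2017-01-01 INFO upload finished\n" * 4000
noise_chunk = os.urandom(64 * 1024)  # stands in for incompressible data

for name, chunk in (("text", text_chunk), ("noise", noise_chunk)):
    stored = pack_chunk(chunk)
    assert unpack_chunk(stored) == chunk
    print(name, len(chunk), "->", len(stored))
```

A real implementation would probably sample part of a chunk instead of compressing all of it before deciding, but the fall-back-to-raw rule is the core of the adaptive behaviour.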


-

jik0n: I think he has attached a drive as read-only on one machine and attached the same drive on another machine simultaneously. This is possible, but there isn't live sync between them. There might be risks as well, not sure. Hopefully one day we get a proper "Attach as read only with live sync", which would be a fantastic feature. I also don't think this particular setup has anything to do with the bug; as far as I know it's just a random bug that happens when you edit some of the IO settings. It would be interesting to learn the real reason why this bug sometimes occurs.

-

That's fantastic news! I hope it's the same bug you triggered. Hopefully good things come to those who wait.

-

I started with the latest RC of CloudDrive from the regular download page before it went RTM, which I then upgraded to. I'm quite certain that the issue existed in both the RC and RTM versions, because I remember that I really hoped this bug was fixed in RTM. I'm also pretty sure that I haven't tried any internal builds, since I can't remember doing that. And yes, the steps provided in your link do fix the issue temporarily, but no more than using the "Reset all settings..." function, which is obviously a lot easier and faster than uninstalling, removing registry entries, deleting and reinstalling etc. But the bug can still occur at any time after using either method.

-

This is happening to me too. It happens when I edit one or more properties (or just click the save button without any changes) in the "I/O Performance" settings. The more often I edit the settings, the more likely the bug is to occur. When I've clicked the save button and the bug occurs, the window freezes for a second before closing. Once this has happened, I can't open that window anymore. It has probably corrupted some configuration file or something. The fix for me is to click the gear icon, then "Troubleshooting" and finally "Reset all settings...". This means that I need to reauthorize all drives again, and I lose all my custom settings for all drives, so it's a pain for sure. 1.0.2.976 on Windows 10.