andy0123456

Members

Posts: 11

Days Won: 1

Everything posted by andy0123456

-

This was meant to go into the CloudDrive section 🤦‍♂️

-

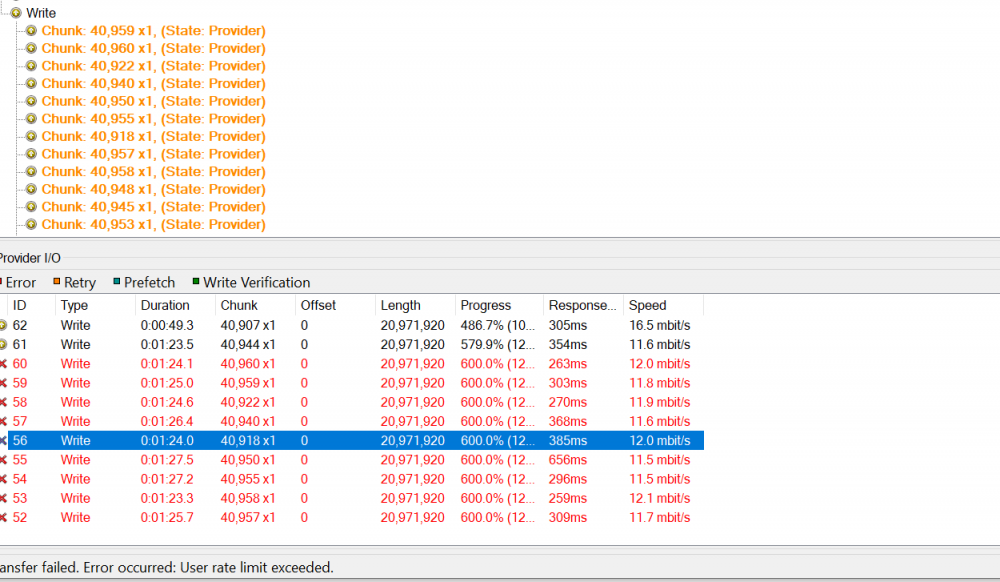

Something happened a few days ago where CloudDrive wiped my license details and the drives went into read-only mode. I put the license activation ID back in and it seemed fine, until today, when I noticed that on one of my drives the "to upload" amount keeps growing. Nothing is uploading, or has uploaded, since the issue that wiped my license. It's just constantly failing to write 1 chunk, and the service log shows this error:

0:50:15.8: Warning: 0 : [WholeChunkIoImplementation:131] Error on read when performing shared partial write. Checksum mismatch. Data read from the provider has been corrupted.
0:50:15.8: Warning: 0 : [IoManager:131] Error performing read-modify-write I/O operation on provider. Retrying. Checksum mismatch. Data read from the provider has been corrupted.
0:50:15.8: Warning: 0 : [WholeChunkIoImplementation:50] Error when performing master partial write. Checksum mismatch. Data read from the provider has been corrupted.
0:50:15.8: Warning: 0 : [IoManager:50] Error performing read-modify-write I/O operation on provider. Retrying. Checksum mismatch. Data read from the provider has been corrupted.
0:50:16.5: Warning: 0 : [ChecksumBlocksChunkIoImplementation:131] Expected checksum mismatch for chunk 166712, ChunkOffset=0x00B000DC, ExpectedChecksum=0x6752bc67bd737505, ComputedChecksum=0xefc3cd06e327575b.
0:50:17.9: Warning: 0 : [WholeChunkIoImplementation:131] Error on read when performing master partial write. Checksum mismatch. Data read from the provider has been corrupted.
0:50:17.9: Warning: 0 : [WholeChunkIoImplementation:50] Error on read when performing shared partial write. Checksum mismatch. Data read from the provider has been corrupted.
0:50:17.9: Warning: 0 : [IoManager:50] Error performing read-modify-write I/O operation on provider. Retrying. Checksum mismatch. Data read from the provider has been corrupted.
0:50:17.9: Warning: 0 : [WholeChunkIoImplementation:131] Error when performing master partial write. Checksum mismatch. Data read from the provider has been corrupted.
0:50:17.9: Warning: 0 : [IoManager:131] Error performing read-modify-write I/O operation on provider. Retrying. Checksum mismatch. Data read from the provider has been corrupted.
0:50:18.7: Warning: 0 : [ChecksumBlocksChunkIoImplementation:50] Expected checksum mismatch for chunk 166712, ChunkOffset=0x00B000DC, ExpectedChecksum=0x6752bc67bd737505, ComputedChecksum=0xefc3cd06e327575b.
0:50:19.7: Warning: 0 : [WholeChunkIoImplementation:50] Error on read when performing master partial write. Checksum mismatch. Data read from the provider has been corrupted.
0:50:19.7: Warning: 0 : [WholeChunkIoImplementation:131] Error on read when performing shared partial write. Checksum mismatch. Data read from the provider has been corrupted.
0:50:19.7: Warning: 0 : [WholeChunkIoImplementation:50] Error when performing master partial write. Checksum mismatch. Data read from the provider has been corrupted.
0:50:19.7: Warning: 0 : [IoManager:131] Error performing read-modify-write I/O operation on provider. Retrying. Checksum mismatch. Data read from the provider has been corrupted.
0:50:19.7: Warning: 0 : [IoManager:50] Error performing read-modify-write I/O operation on provider. Retrying. Checksum mismatch. Data read from the provider has been corrupted.

Is there any way to fix this, or to just discard the provider-side data? Do I have to delete this drive and recreate it? Have I lost the 52 TB of data in it?
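For anyone trying to work out whether the failures are confined to a single chunk, the log above can be summarized with a few lines of Python. This is only a sketch: the regular expression is written against the two "Expected checksum mismatch" lines quoted in this post, and the function name `failing_chunks` is made up for illustration. It is not part of CloudDrive.

```python
import re
from collections import Counter

# Matches the "Expected checksum mismatch" lines as they appear in the post.
# The format is assumed from those quoted lines only.
MISMATCH = re.compile(
    r"Expected checksum mismatch for chunk (?P<chunk>\d+), "
    r"ChunkOffset=(?P<offset>0x[0-9A-Fa-f]+), "
    r"ExpectedChecksum=(?P<expected>0x[0-9A-Fa-f]+), "
    r"ComputedChecksum=(?P<computed>0x[0-9A-Fa-f]+)"
)

def failing_chunks(log_text: str) -> Counter:
    """Count checksum mismatches per (chunk number, chunk offset)."""
    counts = Counter()
    for match in MISMATCH.finditer(log_text):
        counts[(int(match["chunk"]), match["offset"])] += 1
    return counts

# Two lines copied from the service log quoted above.
sample = (
    "0:50:16.5: Warning: 0 : [ChecksumBlocksChunkIoImplementation:131] "
    "Expected checksum mismatch for chunk 166712, ChunkOffset=0x00B000DC, "
    "ExpectedChecksum=0x6752bc67bd737505, ComputedChecksum=0xefc3cd06e327575b.\n"
    "0:50:18.7: Warning: 0 : [ChecksumBlocksChunkIoImplementation:50] "
    "Expected checksum mismatch for chunk 166712, ChunkOffset=0x00B000DC, "
    "ExpectedChecksum=0x6752bc67bd737505, ComputedChecksum=0xefc3cd06e327575b."
)

for (chunk, offset), hits in failing_chunks(sample).items():
    print(f"chunk {chunk} at offset {offset}: {hits} mismatch(es)")
```

In the excerpt, every mismatch points at the same chunk (166712) and the same offset, which fits the description of a single chunk failing repeatedly.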

-

Clouddrive continues to attempt uploading when at gdrive upload limit

andy0123456 replied to andy0123456's question in General

Throttling to meet the limit means the drive has to be uploading 100% of the time, or you artificially lower your limit (if you only upload for 12 hours in a 24-hour period, you can now only reach 375 GB). I was hoping for some better handling of this.
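The 375 GB figure can be checked with a rough calculation. The sketch below assumes decimal units (1 GB = 1000 MB), the 750 GB daily limit mentioned in this thread, and a perfectly constant upload rate; the function names are illustrative only.

```python
QUOTA_GB = 750                 # daily upload limit discussed in this thread
SECONDS_PER_DAY = 24 * 3600

def throttle_mbps(quota_gb: float = QUOTA_GB) -> float:
    """Constant rate in Mbit/s that uses up the quota in exactly 24 hours."""
    return quota_gb * 1000 * 8 / SECONDS_PER_DAY   # GB -> MB -> Mbit, per second

def uploaded_gb(rate_mbps: float, active_hours: float) -> float:
    """Data uploaded in GB when sending at rate_mbps for active_hours."""
    return rate_mbps / 8 / 1000 * active_hours * 3600

rate = throttle_mbps()
print(f"throttle needed: {rate:.1f} Mbit/s")
print(f"uploaded in 12 active hours: {uploaded_gb(rate, 12):.0f} GB")
```

Under these assumptions the throttle works out to roughly 69 Mbit/s, and a drive that is only busy for half the day reaches half the quota.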

Clouddrive continues to attempt uploading when at gdrive upload limit

andy0123456 replied to andy0123456's question in General

So, quick math: the drive is set to 250 Mbps upload throttling, so it would hit the upload limit in ~8 hours, then spend ~16 hours uploading things that will fail, still at 250 Mbps. To upload my final 90 GB, I'm transferring ~1590 GB.
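Spelled out, the estimate looks like the sketch below. It takes the post's own round numbers (limit reached after ~8 hours, 90 GB still queued) and assumes the failing retries continue at the same effective rate for the rest of the 24 hours; the function names are made up for illustration. For comparison, it also shows how long 750 GB would take at a sustained 250 Mbit/s with no overhead, which is a little less than 8 hours.

```python
QUOTA_GB = 750  # daily upload limit discussed in this thread

def hours_to_quota(rate_mbps: float, quota_gb: float = QUOTA_GB) -> float:
    """Hours needed to upload quota_gb at a sustained rate (decimal units)."""
    return quota_gb * 1000 * 8 / rate_mbps / 3600

def total_transfer_gb(hours_to_limit: float,
                      quota_gb: float = QUOTA_GB,
                      remaining_gb: float = 90) -> float:
    """Failed retry traffic for the rest of the day plus the data still queued."""
    effective_rate_gb_per_hour = quota_gb / hours_to_limit
    wasted_gb = (24 - hours_to_limit) * effective_rate_gb_per_hour
    return wasted_gb + remaining_gb

print(f"ideal time to quota at 250 Mbit/s: {hours_to_quota(250):.2f} h")
print(f"transfer needed for the last 90 GB: {total_transfer_gb(8):.0f} GB")  # 1590
```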

Clouddrive continues to attempt uploading when at gdrive upload limit

andy0123456 posted a question in General

Is there any way to fix this? I woke up to find the 750 GB upload limit had been hit, but CloudDrive has 90 GB left to upload and keeps retrying even though it can't make progress. Is there any way to disable this functionality? We can pause writing on a throttling error, but then I assume a throttled connection that isn't related to the upload limit would pause everything too, and each time it happens you would have to manually unpause once the throttling is done. Maybe providers should have a circuit breaker on uploads or similar?
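To make the circuit breaker suggestion concrete, here is a minimal sketch of the general pattern. It is not how CloudDrive works and does not use any CloudDrive API; the class name, thresholds, and the injected `clock` parameter are all hypothetical. After a run of consecutive failures the breaker "opens" and blocks uploads for a cooldown period, then lets a single probe through; a successful probe closes it again, a failed one restarts the cooldown.

```python
import time

class UploadCircuitBreaker:
    """Generic circuit breaker sketch; all names and defaults are hypothetical."""

    def __init__(self, failure_threshold=5, cooldown_seconds=3600.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the breaker is closed

    def allow_upload(self) -> bool:
        """True if an upload attempt should be made right now."""
        if self._opened_at is None:
            return True
        # Open: only allow a probe once the cooldown has elapsed.
        return self._clock() - self._opened_at >= self.cooldown_seconds

    def record_success(self) -> None:
        """A successful upload closes the breaker and resets the counter."""
        self._failures = 0
        self._opened_at = None

    def record_failure(self) -> None:
        """Count a failure; open (or re-open) once the threshold is reached."""
        self._failures += 1
        if self._failures >= self.failure_threshold:
            self._opened_at = self._clock()
```

An upload loop would call `allow_upload()` before each attempt and report the outcome with `record_success()` or `record_failure()`. Unlike a manual pause, this resumes by itself once a probe succeeds, so short-lived throttling would clear within one cooldown.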

It works fine for any other actual device assigned a letter, but if you choose the drive letter that DrivePool is running on, the install button does nothing, and after a few seconds the option reverts to the C drive. Tested on 561 and beta 651; same issue on both.

-

The setting is only changeable when attaching or creating drives; it's "minimum download size". It can't be set higher than the cache chunk size.

-

That sounds like a really good idea, and I can see other uses too. For example, I currently have a 1.07 TB upload queue on a pre-release drive, and while all new writes go to a newer drive I've made, I'd like to quickly get rid of the queue on the older drive and move it to the newer one.

-

With the speeds you have, I think what you're looking for is increasing the prefetcher chunk size. This is part of the drive attach process and is labelled "minimum download size". By default the prefetcher operates in 1 MB chunks. I assume it sacrifices throughput for response time, but it quickly gets throttled by Google. For example, my drive has been unused most of today, and Google starts throttling me after ~10 seconds of prefetching at 1 MB chunks.
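A rough way to see why small chunks run into throttling sooner: for the same throughput, the number of requests per second scales inversely with the chunk size. The sketch below assumes decimal units and one request per chunk; the 250 Mbit/s figure is only an illustrative speed, not the other poster's actual connection.

```python
def requests_per_second(throughput_mbps: float, chunk_mb: float) -> float:
    """Requests per second needed to sustain throughput_mbps,
    assuming one request per chunk of chunk_mb megabytes."""
    megabytes_per_second = throughput_mbps / 8
    return megabytes_per_second / chunk_mb

for chunk_mb in (1, 2, 20):
    rate = requests_per_second(250, chunk_mb)
    print(f"{chunk_mb:>2} MB chunks: {rate:.2f} requests/s")
```

At the illustrative speed, 1 MB chunks need about 31 requests per second, while 20 MB chunks need fewer than 2.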

-

Extremely poor download performance with Google Drive

andy0123456 replied to CalvinNL's question in Providers

Edit: you are correct, "minimal download" appears to just be "download". There's nothing minimal about it; it will never download anything larger. Also, with this setting on "off", all reads are 1 MB.

Extremely poor download performance with Google Drive

andy0123456 replied to CalvinNL's question in Providers

Me again (can't remember the password for my other account; it's saved on another PC). Here is a drive created on 763 and being used on 763, set up with 20 MB chunks. Note that all reads are 1 MB and all writes are 20 MB: http://i.imgur.com/XbCktRC.png And here is the current throughput in the UI: http://i.imgur.com/ruobEkh.png 463 was actually better because the reads were in 2 MB chunks.