chcguy88

Members | Posts: 36 | Days Won: 1

Everything posted by chcguy88

-

Currently, CloudDrive only stores its data in the "StableBit CloudDrive" folder under "My Drive". I have access to an unlimited shared drive that doesn't count against the 15 GB of storage Google gives free accounts. Is there any way to change the location StableBit uses on Google Drive? I have other truly unlimited accounts, but this would be great for data I want to store without hitting API bans on those unlimited accounts. See the image for more information.

-

I would like to request a feature: a daily upload cap, so that Google's API limit of around 750 GB per day is never hit. I know speed limits are available, and throttling to 75 Mbps works, but it's a waste of power to leave a machine running all day at a slower rate. Since the software already tracks how much it has left to upload, could this be added fairly easily to give users more control over the product? Thanks!
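As a rough illustration of the requested daily cap, here is a minimal Python sketch of a per-day upload budget. `DailyUploadCap`, `try_upload` and `steady_rate_mbps` are hypothetical names, not part of CloudDrive. The sketch assumes the quota resets at local midnight (Google's actual reset time may differ) and reads "750gb" as 750 decimal gigabytes.

```python
from datetime import date
from typing import Optional

GB = 10**9  # decimal gigabyte; the post's "750gb" could also mean GiB


class DailyUploadCap:
    """Hypothetical per-day upload budget (not a CloudDrive API)."""

    def __init__(self, cap_bytes: int) -> None:
        self.cap_bytes = cap_bytes
        self.day: Optional[date] = None
        self.used = 0

    def _roll_over(self, today: date) -> None:
        # Reset the counter the first time a new calendar day is seen.
        if today != self.day:
            self.day = today
            self.used = 0

    def remaining(self, today: date) -> int:
        self._roll_over(today)
        return max(self.cap_bytes - self.used, 0)

    def try_upload(self, nbytes: int, today: date) -> bool:
        """Count nbytes against today's budget if they fit; otherwise refuse."""
        if nbytes > self.remaining(today):
            return False
        self.used += nbytes
        return True


def steady_rate_mbps(cap_bytes: int, seconds: int = 86_400) -> float:
    """Constant rate in megabits/s that would use exactly cap_bytes in `seconds`."""
    return cap_bytes * 8 / seconds / 1e6
```

With the decimal reading, spreading 750 GB evenly over a day works out to about 69.4 Mbps; reading it as 750 GiB gives about 74.6 Mbps, which is close to the 75 Mbps throttle mentioned in the post.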

-

I don't know what changed recently, but speeds are much better with the latest 1.1.0.1051 update (I just got it the other day).

-

That is exactly what I am looking to do. I looked into Cryptomator, and while it seems like a good solution, it unfortunately has a 4 GB maximum file size on Windows and no chunking system. StableBit CloudDrive is clearly the superior software; it's Google's limitations that are causing the issues. CloudDrive would be amazing in this setup, since it would split the files, verify them, and run much faster. I can't test it because of the software restriction (which is there for good reason in other cases).
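To make "split the files and verify them" concrete, here is a minimal sketch that cuts a file into fixed-size chunk files and checks each one against a SHA-256 digest. The 20 MiB size, the `chunk_00000000.bin` naming and all function names are assumptions for illustration; this is not CloudDrive's on-disk format, and encryption is left out.

```python
import hashlib
from pathlib import Path
from typing import List

CHUNK_SIZE = 20 * 1024 * 1024  # assumed 20 MiB, matching the "20mb" chunks discussed in the thread


def chunk_path(dest_dir: Path, index: int) -> Path:
    return dest_dir / f"chunk_{index:08d}.bin"


def split_file(src: Path, dest_dir: Path, chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Write src as fixed-size chunk files and return each chunk's SHA-256 digest."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    digests: List[str] = []
    with src.open("rb") as f:
        index = 0
        while True:
            block = f.read(chunk_size)
            if not block:
                break
            chunk_path(dest_dir, index).write_bytes(block)
            digests.append(hashlib.sha256(block).hexdigest())
            index += 1
    return digests


def verify_chunks(dest_dir: Path, digests: List[str]) -> List[int]:
    """Return the indices of chunks that are missing or whose contents changed."""
    bad = []
    for index, expected in enumerate(digests):
        path = chunk_path(dest_dir, index)
        if not path.exists() or hashlib.sha256(path.read_bytes()).hexdigest() != expected:
            bad.append(index)
    return bad


def join_chunks(dest_dir: Path, count: int) -> bytes:
    """Reassemble the original bytes from the first `count` chunks."""
    return b"".join(chunk_path(dest_dir, i).read_bytes() for i in range(count))
```

Because each chunk has its own digest, a bad chunk can be detected and re-fetched on its own instead of invalidating the whole file.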

-

While in theory you are correct that it adds an extra step to the process, the speeds StableBit is able to obtain are much lower than what Google's official Drive File Stream can do. How about a thought experiment? If you released software for your own cloud platform, would you optimize it for API users, or for your own product, where you can see which files your users access and what patterns they follow? After all, Google is an advertising company, and the more information the better. Additionally, CloudDrive gets 150-200 Mbps on a good day, which is far below the 1 Gbps+ that File Stream can do. That means that regardless of the number of hops, File Stream is much faster. At 200 Mbps (giving CloudDrive the best chance), a 5 GB file takes 03:34. At 1 Gbps it takes 00:42. Copying the same 5 GB file onto the File Stream drive at 120 MB per second takes roughly 0.7 minutes, so both hops together take about 1.4 minutes: well under half the time.
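The timings above can be checked with a few lines of Python. This sketch assumes "5 GB" means 5 GiB (5 × 2^30 bytes), which is the reading that reproduces the 03:34 figure, and treats 120 MB/s as 120 MiB/s for the local copy onto the File Stream drive; both are interpretations rather than figures stated in the post.

```python
GIB = 2**30
MIB = 2**20


def seconds_at_bits_per_sec(size_bytes: int, bits_per_sec: float) -> float:
    return size_bytes * 8 / bits_per_sec


def seconds_at_bytes_per_sec(size_bytes: int, bytes_per_sec: float) -> float:
    return size_bytes / bytes_per_sec


def mmss(seconds: float) -> str:
    # Truncate to whole seconds and format as MM:SS.
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


size = 5 * GIB
clouddrive = seconds_at_bits_per_sec(size, 200e6)       # direct upload at 200 Mbps
filestream = seconds_at_bits_per_sec(size, 1e9)         # File Stream upload at 1 Gbps
local_copy = seconds_at_bytes_per_sec(size, 120 * MIB)  # copy onto the File Stream drive
two_hop = local_copy + filestream

print(mmss(clouddrive), mmss(filestream), mmss(two_hop))
```

This prints `03:34 00:42 01:25`, so under these assumptions the two-hop path takes about 40% of the time of the direct 200 Mbps upload.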

-

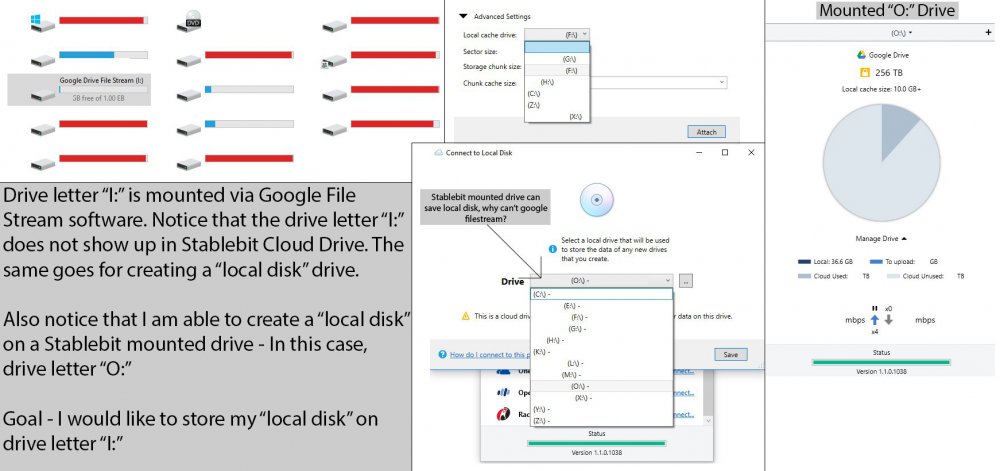

What I am trying to do with File Stream. In short, I want StableBit CloudDrive to create its chunks as if it were using a local storage location. The only difference is that it would be reading from and writing to the File Stream mounted drive. In this scenario, CloudDrive would handle drive (and, by extension, chunk) creation, reading, and writing, while File Stream would be in charge of actually uploading and downloading the chunks to and from Google Drive. The difference in upload and download speed alone would be enormous if CloudDrive were mounted this way (File Stream maxes out a 1 gigabit line up and down).

-

I understand the 75 Mbps limitation. I am getting 50 Mbps with that limit enabled. How can I set a local cache that is not physically on the system, i.e. on the File Stream mounted drive? It would save me a ton of headaches.

-

As the owner of 3 CloudDrive licenses, I'm pretty disappointed with the product's current performance with Google Drive. With a 1 Gbps line up and down, I can't seem to keep CloudDrive happy, and disconnects are daily at this point. I normally don't upload more than 1 GB a day and the software sits idle, so I know it's not hitting Google's API limit. My speeds are terrible too, with a whopping average of 50 Mbps download before throttling kicks in. This is on the current 1.1.0.1038 version. The product isn't inherently bad, but with Google Drive's speed limitations when using CloudDrive, it has become unusable.

Solving this issue: I would like to combine Google Drive File Stream (which gives me 950 Mbps; loading is basically instant) with a CloudDrive whose storage sits on the File Stream mounted drive. I can't do this because CloudDrive won't let me store a drive on a source that is not physically connected internally. I would like this limitation removed so I can test this setup. Since File Stream is mounted as FAT32, 20 MB chunks would be ideal, since they stay well under that file system's 4 GB file size limit. My only alternative is to manually encrypt my files, upload them, and download them again, which is vastly quicker at 1 Gbps than at 50 Mbps.
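For the FAT32 point, here is a small sketch of the file size check and of how many chunk files a drive would turn into. The 20 MiB chunk size follows the post; the constants and function names are illustrative, and whether File Stream really enforces FAT32 limits is the poster's observation, not something this code verifies.

```python
FAT32_MAX_FILE_BYTES = 2**32 - 1  # FAT32 cannot store a single file of 4 GiB or more
CHUNK_BYTES = 20 * 2**20          # assumed 20 MiB chunk size ("20mb" in the post)


def fits_on_fat32(file_bytes: int) -> bool:
    return file_bytes <= FAT32_MAX_FILE_BYTES


def chunks_needed(drive_bytes: int, chunk_bytes: int = CHUNK_BYTES) -> int:
    """Number of chunk files needed to hold drive_bytes (integer ceiling division)."""
    return -(-drive_bytes // chunk_bytes)
```

For example, a 10 TiB drive at 20 MiB per chunk comes to 524,288 chunk files, each far below the 4 GiB ceiling.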

-

This fixed the issue! Thank you so much for commenting on this thread. The drive was listed as "Offline" in Disk Management because of a policy set by an administrator. That is the installer default for Datacenter, and I hadn't changed the defaults yet. (This is for an NTFS drive.)

-

I am currently on that version on both machines (Server and Windows 10 Pro).

-

I just mounted the drive again on my other machine. The main difference seems to be the I/O errors in the trace; they do not occur on the other machine.

-

I was able to get a copy of Windows Server 2016 Datacenter through my EDU account. The problem is that when I load CloudDrive on that computer, my drive mounts (it shows up in the CloudDrive software) but never appears as a readable/writable drive. Is there anything I can do to fix this? Here is my trace for the session. I replaced some of the possibly identifying information with "*****". Note that this same drive and Google Drive account mount just fine on a Windows 10 Pro 64-bit machine.

:01:28.6: Information: 0 : [CloudDrives] Drive ***************************** was previously unmountable. User is not retrying mount. Skipping.
0:01:28.6: Information: 0 : [CloudDrives] Started 0 cloud drives.
0:01:28.6: Information: 0 : [Main] Enumerating disks...
0:01:39.4: Information: 0 : [Disks] Updating disks / volumes...
0:01:45.0: Information: 0 : [Main] Starting disk metadata...
0:01:45.1: Information: 0 : [Main] Updating free space...
0:01:45.3: Information: 0 : [Main] Service started.
0:01:45.3: Information: 0 : [Main] Waiting for cloud drives...
0:01:45.3: Information: 0 : [Main] Cleaning up cloud drives...
0:02:08.5: Information: 0 : [ChunkIdSQLiteStorage:13] Cleaning up drives...
0:02:08.5: Information: 0 : [Main] All cloud drives ready.
0:02:29.3: Warning: 0 : [ValidateLogins] Error instantiating management provider. Security error.
0:02:29.3: Warning: 0 : [ValidateLogins] Error instantiating management provider. Security error.
0:03:29.3: Warning: 0 : [ValidateLogins] Error instantiating management provider. Security error.
0:03:29.3: Warning: 0 : [ValidateLogins] Error instantiating management provider. Security error.
0:04:25.6: Information: 0 : [CloudDrives] Synchronizing cloud drives...
0:04:25.6: Information: 0 : [CloudDrives] Drive ********************************** was previously unmountable. User is not retrying mount. Skipping.
0:04:25.6: Information: 0 : [CloudDrives] Synchronizing cloud drives...
0:04:25.6: Warning: 0 : [CloudDrives] Provider secret for Google Drive not valid. Security error. (ProviderUID=*************************************)
0:04:26.1: Warning: 0 : [CloudDrives] Cannot start I/O manager for cloud part *********************************** (security exception). Security error.
0:04:29.3: Warning: 0 : [ValidateLogins] Error instantiating management provider. Security error.
0:04:29.3: Warning: 0 : [ValidateLogins] Error instantiating management provider. Security error.
0:04:50.4: Warning: 0 : [Reauthorize] Unable to retrieve old secret to persist user name. Security error.
0:04:51.9: Information: 0 : [CloudDrives] Synchronizing cloud drives...
0:04:58.5: Information: 0 : [Disks] Got Pack_Arrive (pack ID: *************************************)...
0:04:58.5: Information: 0 : [Disks] Got Disk_Arrive (disk ID: ***************************************)...
0:04:59.2: Information: 0 : [CloudDrives] Synchronizing cloud drives...
0:04:59.5: Information: 0 : [Disks] Updating disks / volumes...
0:05:29.3: Warning: 0 : [ValidateLogins] Error instantiating management provider. Security error.
0:06:00.3: Warning: 0 : [PinDiskMetadata] Error pinning file system metadata. The request could not be performed because of an I/O device error
0:06:32.8: Warning: 0 : [ValidateLogins] Error instantiating management provider. Security error.
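When comparing traces from two machines, it can help to tally the entries by level and component and pull out the I/O errors. Below is a minimal parser for the entry format visible in this trace (`0:04:25.6: Warning: 0 : [CloudDrives] message`). The regular expression is inferred from this one log, so treat it as an assumption about the format; it works whether the trace is one entry per line or pasted as a single line, and entries whose timestamp was cut off are skipped.

```python
import re
from collections import Counter
from typing import List, Tuple

# time, level, component, message; the message runs until the next timestamp or end of text.
ENTRY = re.compile(
    r"(\d+:\d{2}:\d{2}\.\d): (\w+): \d+ : \[([^\]]+)\] (.*?)(?=\s+\d+:\d{2}:\d{2}\.\d: |\s*\Z)",
    re.S,
)


def parse_trace(trace: str) -> List[Tuple[str, str, str, str]]:
    return [m.groups() for m in ENTRY.finditer(trace)]


def summarize(trace: str) -> Counter:
    """Count entries per (level, component) pair."""
    return Counter((level, comp) for _, level, comp, _ in parse_trace(trace))


def io_errors(trace: str) -> List[Tuple[str, str]]:
    """(time, component) for every entry that mentions an I/O device error."""
    return [(t, comp) for t, _, comp, msg in parse_trace(trace) if "I/O device error" in msg]
```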

-

Constant "Name of drive" is not authorized with Google Drive

chcguy88 replied to irepressed's question in General

My upload is 1 gigabit up and down. It happened with the upload throttled to 65-75 Mbps and without the throttle.

-

Constant "Name of drive" is not authorized with Google Drive

chcguy88 replied to irepressed's question in General

I am having this issue as well. I have multiple drives and 2 unlimited Google Drive EDU accounts. My 2 mounted drives (one on each EDU account) keep disconnecting randomly in the same way as the OP's. I have limited my upload to the recommended rate to stay below Google's API limits, and it doesn't seem to matter whether I'm uploading or not. My version is 1.0.2.975.

-

Christopher, how would I use the local one for testing?

-

While I agree that File Stream is a bit new, for media consumption it is much better than CloudDrive, mainly because of the delay CloudDrive has to deal with. I think there would be a nice middle ground if CloudDrive could use File Stream. This would limit API calls and let Google do the work of uploading the chunks. Future feature?

-

My account was finally allowed to use Google Drive File Stream, and it is wonderful. It is what StableBit CloudDrive would be if not for those pesky API call limits (which are out of your control). I am security conscious and want encryption like CloudDrive's, but used via File Stream instead (since it is much, much faster). Does DrivePool allow for this kind of behavior? It is also important to split the disk into 20 MB chunks, like CloudDrive does, so that I don't have to download a huge VeraCrypt image every time I want to load a small file from a large archive of my data. I have licenses for all of your products, so if anything works for this purpose, please let me know. If not, I am looking for any solution that stores files as encrypted 20 MB chunks. File Stream does all the back-end work of reading from and writing to the Drive platform. Thanks, and please let me know ASAP.
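The reason chunking avoids pulling down a whole VeraCrypt image is simple arithmetic: a read only needs the chunks that overlap its byte range. A minimal sketch, assuming fixed 20 MiB chunks laid end to end; the function names are hypothetical, and encryption (which would be applied per chunk) is not shown.

```python
CHUNK_BYTES = 20 * 2**20  # assumed 20 MiB chunks


def chunks_for_range(offset: int, length: int, chunk_bytes: int = CHUNK_BYTES) -> range:
    """Indices of the fixed-size chunks that overlap bytes [offset, offset + length)."""
    if length <= 0:
        return range(0)
    first = offset // chunk_bytes
    last = (offset + length - 1) // chunk_bytes
    return range(first, last + 1)


def bytes_to_fetch(offset: int, length: int, chunk_bytes: int = CHUNK_BYTES) -> int:
    """Total bytes downloaded when whole chunks are fetched for this read."""
    return len(chunks_for_range(offset, length, chunk_bytes)) * chunk_bytes
```

Reading 5 MiB that starts 100 GiB into a container touches only chunk 5120, so the cost is one 20 MiB download instead of the whole image. A read that straddles a chunk boundary costs two chunks.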

-

I am having the same issue. I can't unlock or remount an existing drive. Mine says "Internal error".

-

I was thinking about how this product could be better, and I came up with an idea. Google Drive allows copies to be made of individual files. Could a redundancy mode be implemented, using the chunk system, for people who have unlimited storage on Drive? In other words, once a file is uploaded, it would be stored in 2 locations on Drive (as chunks). The nice thing about this idea is that it would allow incremental backups in case the main drive fails at any point. The secondary copy would be a rolling copy: say the user specifies 2 days, then every 2 days the rolling backup would be updated to the most recent version of the drive. StableBit would keep track of the changes between the 2 copies and update the secondary copy accordingly. This would also remove the risk of losing the whole drive if something goes wrong: if something does happen, just copy the chunks back from the secondary copy. No re-upload would be necessary with this system, as Google Drive would be doing the copying of the chunks (if the API allows for this). I have noticed that the main issue with this software is that if something does go wrong, the whole drive is usually messed up, which means lost data. This system allows for redundancy without much overhead. Please let me know if this can be implemented. It would really put people's minds at ease, since they wouldn't lose an entire drive because of a bad day or a bad upload. I have attached an image outlining the idea.
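The bookkeeping for the proposed rolling copy could be as simple as comparing two chunk manifests. This sketch assumes each copy keeps a hypothetical map from chunk index to content digest; it only decides which chunks to copy or prune and when a sync is due. The copy itself (ideally a server-side copy on Google Drive, as the post hopes the API allows) is not shown.

```python
from datetime import datetime, timedelta
from typing import Dict, List

# Hypothetical chunk manifest: chunk index -> content digest.
Manifest = Dict[int, str]


def chunks_to_refresh(primary: Manifest, secondary: Manifest) -> List[int]:
    """Chunks that are new or changed in the primary since the last rolling copy."""
    return sorted(i for i, digest in primary.items() if secondary.get(i) != digest)


def chunks_to_prune(primary: Manifest, secondary: Manifest) -> List[int]:
    """Chunks that exist only in the secondary copy."""
    return sorted(i for i in secondary if i not in primary)


def rolling_copy_due(last_sync: datetime, now: datetime,
                     interval: timedelta = timedelta(days=2)) -> bool:
    """True once the user-chosen interval (2 days in the post's example) has elapsed."""
    return now - last_sync >= interval
```

Because only changed chunks are listed, each sync copies the delta since the previous one rather than the whole drive, and between syncs the secondary still holds the last good state.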

-

So I have been having an issue with the product. I've been pretty happy with it, but when I access my media archive (sometimes 200+ videos and pictures in one folder), I like to use thumbnail view to make sure I am copying the correct items from my drive. The problem is that with these larger folders, it takes about 2-5 minutes of scrolling through the folder to get the thumbnails to appear. I have a dedicated drive for StableBit, and I would like to cache all those thumbnails and file names there, so that CloudDrive only has to fetch a file when it is actually opened, instead of streaming it every time to generate file information and thumbnails. Feature request: a client-side cache of all thumbnails plus the file index on a local hard drive. This could be implemented as a check box in the settings. It would definitely help bandwidth-limited users and save time when working with the drive.
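A client-side cache like the one requested could key each thumbnail on the file's path, size and modification time, so a thumbnail is generated once and reused until the file changes. This is a minimal sketch with hypothetical names: `make_thumbnail` stands in for whatever actually renders the image, and the design assumes `stat()` on the cloud drive is cheap compared with reading the file's contents.

```python
import hashlib
from pathlib import Path
from typing import Callable


class ThumbnailCache:
    """Hypothetical on-disk thumbnail cache kept on a local drive."""

    def __init__(self, cache_dir: Path, make_thumbnail: Callable[[Path], bytes]) -> None:
        self.cache_dir = cache_dir
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.make_thumbnail = make_thumbnail  # expensive: reads the file from the cloud drive
        self.misses = 0

    def _entry(self, path: Path) -> Path:
        # Key on path, size and modification time, so an edited file gets a new entry.
        st = path.stat()
        key = f"{path.resolve()}|{st.st_size}|{st.st_mtime_ns}".encode()
        return self.cache_dir / (hashlib.sha256(key).hexdigest() + ".thumb")

    def get(self, path: Path) -> bytes:
        entry = self._entry(path)
        if entry.exists():
            return entry.read_bytes()  # cache hit: the file itself is never read
        self.misses += 1
        data = self.make_thumbnail(path)
        entry.write_bytes(data)
        return data
```

Old entries for changed files are simply left behind in this sketch; a real cache would also need some size limit or cleanup.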

-

I can understand these concerns for a cheap, crappy drive or cheap hardware. I have some drives I would never dream of using as a cache. There are more reliable drives out there, but they come at a price. Still, if you say it is more risk than reward, I trust that you know what you are doing with this system. (You did write the software, after all.) Time to go look into getting an SSD.

-

I own a speedy USB 3.0 flash drive and would like to use it as the cache drive for one of my cloud drives. The software limits me to internal drives only. I understand this is so that someone doesn't yank out the flash drive during operation and corrupt the cloud drive, but in my use case the drive stays plugged in; it was cheaper than buying a full internal SSD. Is there any way to bypass this limitation? My internal HDD gives me 5-10 MB/s read/write at best, while my flash drive gives me 70-120 MB/s. I am not interested in spending another $100 just to get an internal drive because of a software limitation. Thank you for any help!

-

I am using version 1.0.0.802 Beta on Windows 7 64-bit. I get a memory leak that immediately uses up all available memory on my system. This doesn't occur on Windows 8.1. It also seems linked to the size of the drive: when I loaded a 10 TB NTFS drive (encrypted), CloudDrive used 13 GB of my memory (out of 32 GB), and when I loaded my 256 TB NTFS drive (encrypted), CloudDrive used 29 GB. Closing the drive immediately brings my RAM usage back down to 2-3 GB. I saw this was fixed on newer versions of Windows, but it still seems to be a current bug on Windows 7 64-bit. In addition to the memory leak, CPU usage is 30% on a 6-core, 12-thread CPU. As soon as StableBit is closed, it returns to a normal 0-1% (with no other programs running). Can anything be done to fix this? Thanks!

-

I have noticed this as well. I really need to figure out a way to track this over time to get to the root of the problem. It doesn't seem very consistent, but when it happens, it sucks horribly.

-

My hard drive works really hard when I am copying from one cloud drive to another (understandably, as it reads and writes each file twice or so). As the title suggests, these are features I would love to see in CloudDrive:

1. The ability to use an external USB drive like this one as the temporary storage when mounting a drive: https://www.amazon.com/SanDisk-Extreme-Flash-Drive-SDCZ80-064G-GAM46/dp/B00KT7DOSE/ref=sr_1_2?s=pc&ie=UTF8&qid=1481315279&sr=1-2&keywords=sandisk+extreme
2. Support for RAM disk software drives as the temporary storage when mounting.

I am using a laptop with one internal SSD and one internal hard drive. As far as CloudDrive is concerned, no other drives exist on the system. Since my SSD is tiny (128 GB), I would like to use the system's RAM or this USB drive (which reads and writes at 260 MB/s). This is a major issue when copying from one cloud drive to another. The RAM disk software I am using is "ImDisk". Thanks!