Parallax Abstraction

Members | Posts: 13 | Days Won: 3

Everything posted by Parallax Abstraction

-

Hey all. I've been using DrivePool happily for years. I have a pool with a number of WD Red drives in it, and DrivePool's been keeping it balanced automatically, including recently when one of my 4TB drives failed. I recently upgraded my main machine to all SSDs, took a 4TB WD Black out of that machine and added it to my pool. Some new data I write to the pool is ending up on that drive, but DrivePool seems to be completely ignoring it as far as balancing goes, and my balancing rules are all largely at their defaults. I'm on the newly released update. Does anyone have any thoughts? Thanks!

-

Regularly Getting Permission Errors on Drive Pool

Parallax Abstraction replied to Parallax Abstraction's question in General

Sadly, I spoke too soon. The new beta is installed and running, but I just tried copying some files out of a folder that I previously couldn't and am getting the same behaviour. I was able to nuke the folder directly on my server and restore it from my Backblaze cloud backup, and now it seems to be OK. Very odd.

-

Regularly Getting Permission Errors on Drive Pool

Parallax Abstraction replied to Parallax Abstraction's question in General

Cool, I will download the beta and try it out. I've been running the beta of Scanner forever anyway, so why not. Thanks.

-

Hi all. I've been using DrivePool for quite some time now and am generally happy, the near-total lack of updates since I bought the software notwithstanding. However, I've got a problem that seems to be getting worse and is becoming a concern. My DrivePool is attached to a Windows 10 Pro machine I use as a home server (it was previously on an older system that has since been upgraded; this problem actually started on that one). The majority of the time, I access the pool via shares from other systems on my network. Lately, with certain folders, I can't do anything with any of the files they contain. Whether I try to delete, move, rename or even copy the files off, I'm told that I need permission from the administrator to perform the action, even though I am a local admin on every system on my network. I get the same result even if I connect directly to the server. It makes no sense, as it even happens during read operations. Sometimes rebooting the server corrects it, but for a couple of folders it persists. The majority of my pool has duplication enabled, and these errors are happening in duplicated folders. I'm a bit concerned about what to do, as it seems to be getting worse and has become really frustrating. Any ideas? Thanks.

-

Hey all! I'm using both DrivePool and Scanner. They're both generally working well, but there's one problem with Scanner: even when it's set to start and stop scans automatically, it doesn't. The latest scans on all my drives are from late September, when I first started using it. I also recently replaced a drive in my pool, and Scanner never started scanning it until I manually clicked the Start button; now it's running. I'm largely using the default Scanner settings and I'm on Windows 10 Pro x64. Any ideas? Thanks! EDIT: Hmm, interestingly, it seems to be fixed now. It was set to only run scans between midnight and 6am, which should have been fine, as this is a home server that's always on. However, I don't care when it scans, so I set it to scan at any time, and it started automatically right away. We'll see if this sticks. Thanks!

-

I might be wrong, but I thought DrivePool was able to use striping to take ultra-big files like this and spread them across multiple disks when writing. Indeed, if I look at my MediaSonic ProBox while running the backup, I can see lights for two different drives flashing. However, if it does do that, maybe it only happens after individual files are written; I'm not sure. Oddly though, the WD Black drive in my pool has 1.97TB of free space, and that's definitely more than the backup will be. Unless the balancing plugins are causing it to be written elsewhere first. Truth be told, it's not really a big deal if I have Reflect put a hard cap on the file size, so I'll try running a backup that way and see if DrivePool can handle it. I'll set the limit to 500GB per file and report the results. If the devs have any insights though, that'd be awesome. EDIT: It turns out that if you're using file splitting in Reflect, you can't use incremental backups. That's a major issue for me, as my custom incremental retention settings are one of the main reasons I use this product. I'm going to try something else, though. Rather than having the entire pool duplicated, I'm going to switch to folder-level duplication and exclude the backup folder, which I definitely don't need duplicated anyway. I'll see if that helps.
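To see why capping the file size could help, here is a minimal Python sketch under a stated assumption: each copy of a file lives whole on one disk, and duplicate copies go on different disks. It splits an image into 500GB pieces and greedily places each piece's copies on the disks with the most free space. The placement rule, the per-disk free space and the 1.3TB image size are all hypothetical, and `split_sizes` and `place_all` are illustrative helpers only; DrivePool's real placement is governed by its balancers and may differ. Two drive lights flashing during the backup is also what one would expect if both copies of a duplicated file were being written at once, so on its own it does not point to striping.

```python
def split_sizes(total_gb, max_chunk_gb):
    """Sizes (GB) of the files produced when an image is capped at max_chunk_gb."""
    full, rest = divmod(total_gb, max_chunk_gb)
    return [max_chunk_gb] * full + ([rest] if rest else [])


def place_all(chunks_gb, free_gb, copies=2):
    """Hypothetical policy: put every copy of every chunk on the disks with the most free space.

    Each copy must sit whole on a different disk. Returns the remaining free
    space per disk, or None if some chunk cannot be placed.
    """
    free = list(free_gb)
    for size in chunks_gb:
        # Disk indices ordered from most to least free space.
        order = sorted(range(len(free)), key=lambda i: free[i], reverse=True)
        targets = order[:copies]
        # If the roomiest disks can't hold this chunk, no other disks can either.
        if len(targets) < copies or any(free[i] < size for i in targets):
            return None
        for i in targets:
            free[i] -= size
    return free


free_gb = [1970, 1200, 800]  # hypothetical free space per disk, in GB
image_gb = 1300              # hypothetical full-image size, in GB

print(place_all([image_gb], free_gb))                   # None: one 1.3TB file has no second home
print(split_sizes(image_gb, 500))                       # [500, 500, 300]
print(place_all(split_sizes(image_gb, 500), free_gb))   # [670, 400, 300]
```

Smaller pieces can be spread over more disks, which is why splitting (or, as in the edit above, turning duplication off for the backup folder) could let the same free space absorb the image.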

-

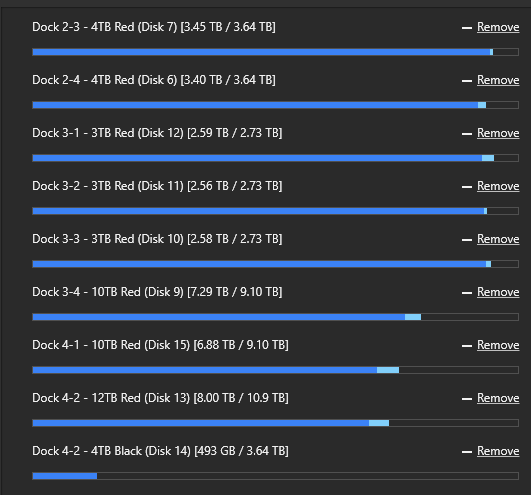

Hey all! I'm still on my trial of DrivePool but am liking it quite a bit overall. However, I've run into one major problem I don't know how to solve, and I'm hoping someone here knows a solution. I use the paid version of Macrium Reflect to back up my entire gaming PC. It writes a main image that's a full backup (it usually comes out to about 1-1.3TB after compression) and then writes up to 4 incremental backups after that, then rotates those out, never keeping more than 5 backups. My DrivePool lives on my home server under my desk, and I run the Macrium Reflect backup set to a share I have on that server. When I tried to start my backup, my pool looked like the attached screenshot: about 4TB of space left, spread across a few drives. When I execute my Macrium Reflect script, it also reports the same amount of space remaining. However, multiple times today I've tried to run the full backup and it's failed between 20% and 40%. According to the Reflect logs, it's because it... ran out of space. Even without compression, the total backup from my gaming PC is less than 2TB; it most certainly is not running out of space. As you can see from the screenshot, I do have duplication turned on, but even with that taken into account, there's still more than enough space available. I'm not sure why this would be happening or what to do about it. I can engage Macrium support as well, but I figured someone here might have a better idea. I can set Reflect to split the backup into multiple smaller files and may try that, but I'm not sure why that would be necessary or why writing one large file over the network would keep failing this way. Anyone have an idea why this might be happening and if there's some kind of solution? Thanks all!
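One possible explanation, offered as an assumption rather than confirmed DrivePool behaviour: if the pool stores each copy of a file whole on a single disk (no striping) and, with duplication on, puts the two copies on different disks, then one large image has to fit within a single disk's free space, twice over, no matter how much free space the pool has in total. The minimal Python sketch below contrasts the naive total-free-space check with that per-disk check. The per-disk figures are hypothetical (the thread only mentions 1.97TB free on the WD Black), the 1.3TB image size is the top of the range quoted above, and `total_free` and `fits_whole_file` are illustrative helpers, not part of any StableBit or Macrium API.

```python
def total_free(free_gb):
    """Naive view: add up the free space across every disk in the pool."""
    return sum(free_gb)


def fits_whole_file(file_gb, free_gb, copies=1):
    """True if `copies` different disks each have room for the whole file.

    Assumes every copy of a file is stored whole on one disk (no striping)
    and that duplicate copies must land on different disks.
    """
    if copies < 1 or copies > len(free_gb):
        return False
    largest_first = sorted(free_gb, reverse=True)
    # The copies-th roomiest disk is the tightest one we would have to use.
    return largest_first[copies - 1] >= file_gb


free_gb = [1970, 1200, 800]  # hypothetical free space per disk, in GB
image_gb = 1300              # hypothetical size of one full Reflect image, in GB

print(total_free(free_gb) >= image_gb * 2)            # True: the pool *looks* big enough
print(fits_whole_file(image_gb, free_gb, copies=2))   # False: no second disk can hold a copy
print(fits_whole_file(image_gb, free_gb, copies=1))   # True: fits once duplication is off
```

Under this assumption, "about 4TB free" and "ran out of space" are not contradictory: the total is enough, but no two individual disks have room for the whole file.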

-

Tried FlexRAID and Frustrated - Will DrivePool work for me?

Parallax Abstraction replied to Parallax Abstraction's question in General

Duly noted! Even if I don't use CloudDrive, for $5 why not.

-

Tried FlexRAID and Frustrated - Will DrivePool work for me?

Parallax Abstraction replied to Parallax Abstraction's question in General

Happy to say that it chugged and appeared frozen for a while, but DrivePool did remove the busted Seagate and I didn't lose anything! It's out of the pool now, and when my new 8-bay MediaSonic enclosure arrives, I'll be putting in two other drives, which will give me enough capacity for full duplication. I am super impressed with DrivePool. When I get paid next, I'll be buying licenses for both it and Scanner. CloudDrive seems neat too, and I'll be trying that out soon as well. Great work guys, I should have gone with this from the start!

-

Tried FlexRAID and Frustrated - Will DrivePool work for me?

Parallax Abstraction replied to Parallax Abstraction's question in General

I do have it set to evacuate on SMART errors, but of course, because it's a Seagate, the drive wasn't reporting a SMART failure and I still don't think it is. It's having major read issues in spite of that. I'll let the drive removal run until I get home from work tonight and see where it's at. Most of what's on this drive is already backed up to CrashPlan, so if I have to just yank it, it's not the end of the world. Fingers crossed.

-

Tried FlexRAID and Frustrated - Will DrivePool work for me?

Parallax Abstraction replied to Parallax Abstraction's question in General

Thanks for the answers! I was losing my mind with FlexRAID and got it out of my life last weekend. I'll never touch that product again. I decided to go ahead with a DrivePool trial and I gotta say, I'm pretty damn impressed so far. There's a real test going on as I type, though. I have 4 WD 3TB Red drives and also added in a 4TB and a 3TB Seagate drive I had from a previous Windows Storage Spaces pool. I was also running a trial of StableBit Scanner. Well, this morning, Scanner informed me that the 3TB Seagate is starting to fail. I'm not surprised; it's a Seagate and I got it for free originally. I didn't have any duplication on yet but was seriously just about to enable it when this happened. Yeah, this conversion's been a nightmare. I've asked DrivePool to remove the Seagate (which has a lot less on it than the other volumes) and it's trying, but the drive seems to be failing very fast and DrivePool is really struggling. It says "Removing drive..." but also shows no read or write activity on the pool, so I don't know if it's actually working. If it can manage to get the data off and let me remove the drive, that'll be pretty amazing. I think this combined with CrashPlan will be a great fit for my setup. My plan is to expand the pool enough that I can do full duplication, so the CrashPlan restore issue the other user had shouldn't really be a factor for me. We'll see how things go with this busted drive, but I'll very likely be purchasing DrivePool and Scanner. I'm so glad I never got to the point of actually spending money on FlexRAID.

-

Tried FlexRAID and Frustrated - Will DrivePool work for me?

Parallax Abstraction posted a question in General

Hey all. Forgive me if this post is long but I'm having a hard time making a decision here. So I have about 9TB of data that I am keeping on a small Windows 10 home server. It's mostly media, video projects and emulation stuff (I run a retro gaming YouTube channel and have tons of emulation sets.) It's about 485,000 files in total. Until recently, it was all kept on a Windows Storage Spaces JBOD volume through a 4-bay MediaSonic ProBox that was connected directly to my main PC. Problem is, because CrashPlan has such a horribly written client, backing all this up to the cloud was taking over 3GB of memory at any given time. So I built this home server and moved stuff there. The problem was, some of the data was still residing on my desktop PC and again because of CrashPlan's crap client, it was constantly rescanning terabytes of data off my main machine over the network which also was no good. So I decided to move everything to my home server. I bought 4 new 3TB WD Red drives and decided to try FlexRAID RAID-F. I copied everything off the old Storage Spaces volume to a bunch of other drives I had lying around, installed the new drives and created a FlexRAID volume. 3 data drives, 1 parity drive. This was way more effort than it should have been as FlexRAID's UI isn't great, the documentation is terrible (tons of pages on their wiki are outdated), their forums are useless and the developer won't even talk to you unless you pay a ridiculous amount of money. Still, I got it working. Then I spent the better part of 2 days copying all the data back. Then I discovered that because of the way CrashPlan reads the file system, it is not capable of doing real-time backups with FlexRAID RAID-F and can only discover changes when everything is rescanned. Because of the size and number of files in my backup, this takes literally hours each time. That's no good, I pay for CrashPlan in part for real-time backup. 
I'm fed up with FlexRAID and am ready to copy everything back off it again and dump it before the trial ends. I've been looking at alternatives and am intrigued by DrivePool. What you seem to offer isn't RAID per se; it's storage pooling, but with the option to have some or all of the data duplicated across multiple drives to protect against failure. As I understand it, this can't heal itself from a failure like a RAID can, but it is possible to have a drive die without losing the whole pool. Truth be told, I think having a full RAID plus CrashPlan is probably overdoing it for my scenario. I have 12TB of storage available with this pool of Red drives but right now only have access to 9TB of it, as 1 drive is being used for parity. If I convert the whole thing to DrivePool, I will have an extra 3TB I can use to duplicate the most important stuff, while entrusting the rest to CrashPlan. Hopefully that explains what I'm looking for. So, before I take the plunge and copy all this data twice yet again, here are my questions for confirmation:

1. If a drive fails, can I remove/replace that drive without it taking out the rest of the pool?
2. If I pool all 4 of these 3TB drives together, will I get a combined pool of roughly 12TB?
3. Will DrivePool appear as a normal NTFS volume so that CrashPlan can back it up in real time? FlexRAID RAID-F does not, but their T-RAID option apparently does.
4. I read a recent thread where someone talked about it being a nightmare to restore FROM CrashPlan after a drive failure, largely because of CrashPlan's crap client again. Should I run into a failure with unduplicated data, is this what I can expect to deal with? It's not a deal breaker, I just want to know. I hate CrashPlan's client, but unfortunately, they're the only truly unlimited option I have available.

Again, sorry for the long post, but after wasting so many hours on FlexRAID, I really want to make sure what I choose next will do the job.
As I said, I think with CrashPlan, I don't necessarily need full RAID with this data. But I want to make sure I'm not missing anything. Thank you very much!
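The capacity questions in this post come down to simple arithmetic. Below is a minimal Python sketch, assuming four equal 3TB drives, the roughly 9TB of data mentioned, and two copies for whatever gets duplicated; `space_needed` and `windows_reported_tb` are illustrative helpers, and filesystem overhead is ignored. It also shows why Windows will likely report a "12TB" pool as about 10.9TB: drive makers count a terabyte as 10^12 bytes, while Windows divides by 2^40.

```python
# Four 3TB WD Red drives, as described in the post (decimal terabytes).
drives_tb = [3, 3, 3, 3]
data_tb = 9  # roughly how much data needs to live on the pool

raw_tb = sum(drives_tb)               # 12: every byte of every drive
raid_f_tb = raw_tb - max(drives_tb)   # 9: one drive's worth is reserved for parity
pool_tb = raw_tb                      # 12: a plain pool keeps no parity


def space_needed(total_tb, duplicated_tb, copies=2):
    """Raw space used when `duplicated_tb` of `total_tb` is stored `copies` times."""
    return total_tb + duplicated_tb * (copies - 1)


def windows_reported_tb(decimal_tb):
    """Convert drive-maker terabytes (10**12 bytes) to what Windows labels "TB" (2**40 bytes)."""
    return decimal_tb * 10**12 / 2**40


print(raid_f_tb, pool_tb)                       # 9 12
print(pool_tb - data_tb)                        # 3: headroom left for duplicate copies
print(space_needed(data_tb, 3) <= pool_tb)      # True: about 3TB of the data can be duplicated
print(space_needed(data_tb, data_tb))           # 18: raw space needed for full duplication
print(round(windows_reported_tb(pool_tb), 2))   # 10.91
```

The 18TB figure is why full duplication of this data set requires growing the pool beyond the four Red drives.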