steffenmand

-

Posts

418 -

Days Won

20

Posts posted by steffenmand

-

-

On 6/22/2019 at 2:47 AM, Alex said:

I just want to clarify this.

We cannot guarantee that cloud drive data loss will never happen again in the future. We ensure that StableBit CloudDrive is as reliable as possible by running it through a number of very thorough tests before every release, but ultimately a number of issues are beyond our control. First and foremost, the data itself is stored somewhere else, and there is always the chance that it could get mangled. Hardware issues with your local cache drive, for example, could cause corruption as well.

That's why it is always a good idea to keep multiple copies of your important files, using StableBit DrivePool or some other means.

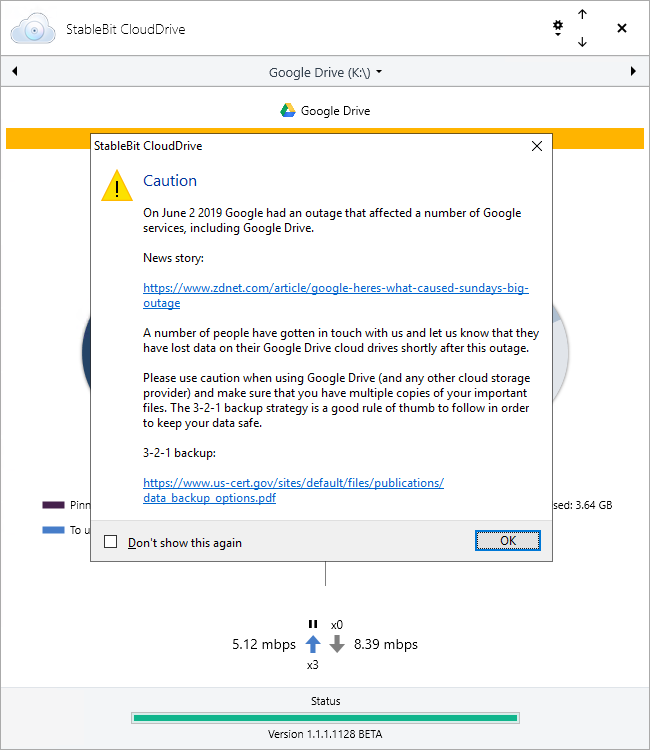

With the latest release (1.1.1.1128, now in BETA) we're adding a "Caution" message to all Google Drive cloud drives that should remind everyone to use caution when storing data on Google Drive. The latest release also includes cloud data duplication (more info: http://blog.covecube.com/2019/06/stablebit-clouddrive-1-1-1-1128-beta/) which you can enable for extra protection against future data loss or corruption.

My disk only lost the references to the files - recovery programs could find them all. So I think your first fix should have solved it, as I'm certain it happened because an old chunk containing the file index was retrieved! Nice to have the data duplication, though.

Quick question - does DrivePool make it possible to "RAID" different Google Drive accounts into one for less throttling and better speeds? If not, this could be a nice feature request for either StableBit or DrivePool, as it could help in many ways, including data safety. So, pretty much building a RAID out of Drive accounts.

-

This is because it will initially prefetch multiple chunks with multiple threads. After the prefetch is retrieved, it will only download as required to keep the same prefetched amount.
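
To illustrate the behaviour described above, here is a minimal sketch of a prefetch window. It is only a toy model, not CloudDrive's actual code: the function name `simulate_prefetch` and its parameters are made up for this example, and the parallel threads are reduced to a simple count of downloads per step.

```python
from collections import deque


def simulate_prefetch(total_chunks, window, reads):
    """Toy model: return how many chunk downloads are issued at each step.

    Step 0 is the initial fill of the prefetch window; every later step is
    one chunk being consumed by the reader. All names are hypothetical.
    """
    buffered = deque()
    next_chunk = 0

    def refill():
        # Download chunks until the window is full again (or the file ends).
        nonlocal next_chunk
        fetched = 0
        while len(buffered) < window and next_chunk < total_chunks:
            buffered.append(next_chunk)
            next_chunk += 1
            fetched += 1
        return fetched

    # Initial burst: the whole window is requested at once, which is where
    # multiple download threads would be busy in parallel.
    downloads_per_step = [refill()]

    for _ in range(reads):
        if not buffered:
            break
        buffered.popleft()                    # the reader consumes one chunk
        downloads_per_step.append(refill())   # top the window back up
    return downloads_per_step


print(simulate_prefetch(total_chunks=20, window=5, reads=4))  # [5, 1, 1, 1, 1]
```

The first number is the initial burst; after that, only one chunk is fetched per chunk read, so the download rate drops to whatever is needed to keep the window full.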

-

18 hours ago, Christopher (Drashna) said:

That's very odd.

Is this still happening?

Latest version doesn't do it! So starting the loooong process of moving files to new drives!

-

18 hours ago, Christopher (Drashna) said:

Sorry for the absence.

To clarify some things here.

During the Google Drive outages, it looks like their failover is returning stale/out of date data. Since it has a valid checksum, it's not corrupted, itself. But it's no longer valid, and corrupts the file system.

This ... is unusual, for a number of reasons, and we've only seen this issue with Google Drive. Other providers have handled failover/outages in a way that hasn't caused this issue. So, this issue is provider side, unexpected behavior.

That said, we have a new beta version out that should better handle this situation, and prevent the corruption from happening in the future.

http://dl.covecube.com/CloudDriveWindows/beta/download/StableBit.CloudDrive_1.1.1.1114_x64_BETA.exe

This does not fix existing disks, but should prevent this from happening in the future.

Unfortunately, we're not 100% certain that this is the exact cause, and it won't be possible to test until another outage occurs.

I think you are on the right track - my drives lost the references to the latest files! The drives that were not affected were the ones I hadn't touched for a while.
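
A small sketch of why a checksum alone cannot catch this kind of problem: an old copy of a chunk still matches its own checksum, so it looks valid even though it is out of date. The per-chunk `version` counter below is purely a hypothetical illustration of one way staleness could be detected; it is not a description of how CloudDrive or the beta fix actually works.

```python
import hashlib


def make_chunk(payload: bytes, version: int) -> dict:
    """Build a chunk record with its own SHA-256 checksum (hypothetical layout)."""
    return {
        "payload": payload,
        "version": version,
        "sha256": hashlib.sha256(payload).hexdigest(),
    }


def checksum_ok(chunk: dict) -> bool:
    # Integrity check: does the payload still match the stored checksum?
    return hashlib.sha256(chunk["payload"]).hexdigest() == chunk["sha256"]


def is_fresh(chunk: dict, expected_version: int) -> bool:
    # Freshness check: is this at least the version we last wrote?
    return chunk["version"] >= expected_version


# The client last uploaded version 2 of the chunk holding the file index...
latest = make_chunk(b"file index incl. newest files", version=2)
# ...but during an outage the provider hands back the old version 1.
stale = make_chunk(b"file index without newest files", version=1)

print(checksum_ok(stale))                  # True  - not corrupted in itself
print(is_fresh(stale, latest["version"]))  # False - but no longer valid
```

This matches the symptom above: the stale chunk is internally consistent, but the file system built on top of it no longer knows about the newest files.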

-

11 hours ago, NMonky said:

Unfortunately, you're screwed. I suffered total data loss and you have as well.

The bug renders the product useless to me less than 4 months after I bought it. What has made me even more upset than the data loss is that Stablebit is refusing to issue a refund for a product that is fundamentally broken. They seem to think data loss is not a significant issue, which I find utterly perplexing.

Anyway, if they're not willing to issue the refund all we can do is spread word of this to as many communities as possible so that others do not waste their money on what is a faulty product. If this was a physical product they would absolutely have to issue a refund. I don't see why it being digital changes this.

Simply not true - the issue is due to your provider having issues.

If it was Google Drive, it's because Google sends old data back during outages - they think.

They have listened so much to their customers, and the product is what it is today because of that.

You just can't blame them for issues caused by someone else :-)

-

Force attach just makes it iterate over the chunks - the drive still works! It just takes a long time, as it has to index all chunks.

-

4 hours ago, WHS_Owner said:

I really don't want to have to remember to pause and unpause every night - I would like to automate it to run overnight. But as the devs haven't replied I guess they are not allowing that for some reason. Shame as it's the one thing that would make this software perfect. Without it, it's just...ok...

Well it's different based on the use-case

I would have no need for a feature like that, but I guess it's because not everyone has flat-rate internet, or their connection gets saturated when it's running.

What about limiting the upload speed?

You could just pause uploads and manually start it when you want!

Pausing downloads is easily done by not accessing the data

-

23 hours ago, slim94 said:

What data recovery are people using? Recuva says no NTFS partition on my disk, i'm trying TestDisk now but it doesn't seem to find the lost partition either...

Think you've got the same issue that I get on all new drives... the NTFS is formatted wrong and the partition volume can't be read correctly. Thus neither chkdsk, TestDisk, nor anything else can read it!

-

Got a notice on my support that they might have found a way to avoid this in the future! Hopefully a fix coming soon.

Only fixes it for future outages but better than nothing!

Almost used to constant recovery on drives anyway :-P

-

TestDisk says bad GPT data - are the drives being formatted wrong?

-

19 minutes ago, Gandalf15 said:

I know. But if you lose everything every time an outage happens, then there is no point in using the software, or am I wrong?

Can't something like a killswitch be implemented to not mess up the drive when an outage happens?

This time I was lucky and had a 2nd drive not affected, last time I lost 3 drives and everything. I was basically lucky that just 1 drive of the 2 was destroyed. This doesn't happen with rclone for example (I know both tools work different).

I was told upload verification solves the problem, but it didn't. It was pure luck that 1 drive that had the data duplicated didn't fail...

I'm also a bit in the dark as to how it can happen - but I guess it must be Google throwing wrong messages back or something.

Usually I can get mine fixed with chkdsk /f - however, all my new drives seem to get an error, so chkdsk doesn't work.

My ReFS drives never had any issues though - so maybe that's the way to go.

-

1 hour ago, Gandalf15 said:

Since I had the same issue today again - thank fully only 1 of the 2 drives I used to duplicate, I deleted the raw drive. 25 TB to upload again.

This time I go with nested pool drive. I created 5 10TB drives, added them into a pooldrive (without duplication) and added this pooldrive to the other working (old) pooldrive. It started now to duplicate the stuff. I hope this problem won't come every 2 to 3 months; like this it's almost useless to use StableBit....

The issue is when Google Drive has outages. Then data gets corrupted.

-

Hi,

All new drives created on the newest version seem to give me:

"Unable to determine volume version and state. CHKDSK aborted."

whenever i run chkdsk on any of them.

Is the drive somehow being created the wrong way?

-

3 hours ago, NMonky said:

I don't want to worry you, but my drive is now reporting as being corrupt and is completely inaccessible. I'm starting to panic.

Do a chkdsk /f on the drive. Google had an outage yesterday, so that may have caused some data corruption.

-

3 minutes ago, Cobalt503 said:

Yeah and I'm saying if you can't figure it out shut your mouth and move on. Bringing a negative opinion to a new idea is a shit thing to do. Especially when it's as vague as "I don't see why" Who cares what you can see if you aren't the intended user of such feature. GOOD DAY.

Someone had a bad day for sure. It's a forum, and it's made for discussion.

-

7 hours ago, Cobalt503 said:

Seems like a rather ignorant response since no one mentioned legality of it. This is a preference and no one cares about the law when it comes to naming folders.

If I want to change the name of the folder and direct stablebit to that directory then it should work. It's actually a very reasonable and simple request. Personally I wanted to do the same thing with renaming the file folder because the cloud is often a SHARED environment and I would like to put a "DO NOT TOUCH" "DO NOT DELETE" "PERSONAL STORAGE" root directory above the stable bit folders.

See this isn't about legality it's about preference and why bring up points that don't matter unless you are ONLY trying to have a negative opinion or be contrary? You some kind of flat-earther or something?

Calm down.

Just said I don't see the need for such a feature.

-

19 hours ago, Cobalt503 said:

I think the user is looking for a feature request to be able to rename or obfuscate the names of the folders. I'm sure if it was built into the software it would maintain the links.

Don't see the need - it ain't illegal to use StableBit CloudDrive.

-

You can detach and reattach at another drive at any time - the code is only needed to attach.

-

You can't recover the key - that's why they ask you to print it when creating the drive.

If you prefer, try creating your drives with your own passphrase; it might be easier to remember, but most likely also easier to crack.

-

You should never go above 60 TB for a drive, as chkdsk won't work.

-

3 hours ago, Digmarx said:

I've had the same performance hit since I started using Cloud Drive. Only thing I can think of is that it has something to do with the storage controller, because when I moved to an H300 (IT fw) I started getting about 10MB/s faster writes to the cache drive.

You think the RAID controller is the issue?

This happens both on a RAID and on a standalone PCIe NVMe card, though.

-

On 5/20/2019 at 10:09 PM, Christopher (Drashna) said:

We have a list of considered providers:

Why isn't XYZ Provider available?

As for a timeline, we don't really have one. It's just Alex that does the coding. So it's whenever he has the time for it. And right now, we're working on StableBit Cloud, so not a lot of time for other new stuff. Sorry.

Once that's done, I'll see about pushing him for more providers.

What is StableBit Cloud? Sounds interesting :-)

-

Hi,

I was wondering what impacts the write speed to the disks. I've been upgrading some cache servers lately (although I couldn't software-RAID them) and am able to transfer at around 500 MB/s normally to the disk (NVMe PCIe disk).

However, when mounting a drive and transferring to that drive, I get a maximum of 50 MB/s.

Could it be the encryption slowing it down? And how do I figure out the bottleneck? (The HDD is far from fully used and the CPU doesn't seem to be anywhere near 100%.)

I want to upgrade the hardware appropriately, but need to be sure what the issue is in order to purchase correctly!

I know the CPUs are a bit old atm (2 x X5570), and I will be upgrading soon to 4 x E7-4860v2.

Anything else I can do to speed it up?

Cloudrive(google) is throtling my downloads what can i do about it?

in General

Did you create your drive with 20 MB chunks?

If not, you should do that and also increase the minimum download size to 20 MB.

If it's currently at 1 MB, you are limited by the thread count and the response time for retrieving 1 MB per thread.
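
A rough back-of-the-envelope model of why the download size matters so much. The numbers are assumptions picked only for illustration (10 threads, 0.5 s response time per request, 20 MB/s per thread once data is flowing), not measured Google Drive values, and the function name is made up for this sketch.

```python
def estimated_throughput_mb_s(request_mb, threads, response_time_s, per_thread_mb_s):
    """Estimate total download speed when every request pays a fixed response time.

    Each thread spends response_time_s waiting plus the actual transfer time
    for every request, so small requests are dominated by the waiting.
    """
    seconds_per_request = response_time_s + request_mb / per_thread_mb_s
    return threads * request_mb / seconds_per_request


# Assumed values for illustration only.
threads, response_time_s, per_thread_mb_s = 10, 0.5, 20.0

for request_mb in (1, 20):
    speed = estimated_throughput_mb_s(request_mb, threads, response_time_s, per_thread_mb_s)
    print(f"{request_mb:>2} MB requests: ~{speed:.1f} MB/s")
# Prints ~18.2 MB/s for 1 MB requests and ~133.3 MB/s for 20 MB requests.
```

With 1 MB requests almost all of the time goes to waiting for responses, so adding bandwidth doesn't help; with 20 MB requests the same threads spend most of their time actually transferring data.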