Thronic

Members · Posts: 52 · Days Won: 1

Everything posted by Thronic

-

Used GSuite for 5 years or so and went through most of the hurdles, ups and downs, including their weird duplicate file spawns. I had a clean rclone setup for it that worked as well as could be expected, but I have to say I've never been happier than since going back to local storage the last couple of years, mostly due to performance. I also don't have to worry about all this anymore.

-

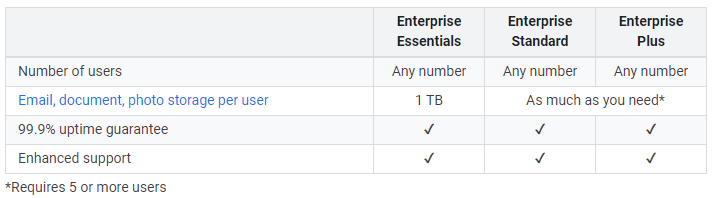

I got the same mail a few days ago, and the change to Workspace Enterprise Standard with a single user was entirely painless, just done from "more services" under invoicing. I have 22 TB and everything still works as usual. Google support seems to evade the question as much as possible, or at least avoids answering it directly. I personally interpret it as them wanting the business as long as it isn't abused too much. The only people I've heard of being limited or cancelled are those closing in on petabytes of data.

-

Any way to check if the pool state is OK via CLI?

Thronic replied to Thronic's question in Nuts & Bolts

Good point. But doing individual disks means more follow-up maintenance... I think I'll just move the script into a simple multithreaded app instead, so I can loop and monitor in a relaxed manner (I don't want to hammer the disks with SMART requests) and kill a separate sync thread on demand if needed. If I'm not mistaken, rclone won't start deleting files until all the uploads are complete (going to double-check that). That creates a small margin of error and/or delay. Thanks for checking the other tool.
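
A minimal Python sketch of that monitor-plus-sync-thread idea, assuming the health check and a single unit of sync work are passed in as plain callables. `run_guarded_sync`, `check_health` and `sync_item` are hypothetical names (check_health could wrap smartctl, sync_item could run one rclone job per folder). Python threads can't be killed from outside, so this version stops cooperatively between work items instead.

```python
import threading
from typing import Callable, Iterable


def run_guarded_sync(
    work_items: Iterable[str],
    sync_item: Callable[[str], None],
    check_health: Callable[[], bool],
    poll_interval: float = 60.0,
) -> bool:
    """Sync work_items in a worker thread while the calling thread polls
    check_health() at most once per poll_interval seconds.

    Returns True only if every item was synced. If a health check fails,
    the worker is asked to stop before its next item.
    """
    # Refuse to start at all if the pool already looks unhealthy.
    if not check_health():
        return False

    stop = threading.Event()
    finished = threading.Event()

    def worker() -> None:
        for item in work_items:
            if stop.is_set():  # cooperative cancellation between items
                return
            sync_item(item)
        finished.set()

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    while thread.is_alive():
        thread.join(timeout=poll_interval)  # relaxed polling, no busy loop
        if thread.is_alive() and not check_health():
            stop.set()
            thread.join()  # let the current item finish cleanly
            break
    return finished.is_set()
```

With a long `poll_interval` the disks are only queried occasionally. A single long-running rclone process would instead be started with `subprocess.Popen` and stopped with its `terminate()` method when a check fails.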

-

Any way to check if the pool state is OK via CLI?

Thronic replied to Thronic's question in Nuts & Bolts

Ended up writing a batch script for now. It just needs a copy of smartctl.exe in the same directory, plus a sync (or other) command to run depending on the result. It checks the number of drives found as well as their overall health, and writes a couple of log files based on the last run. The variable names are Norwegian, but it's easy enough to understand and adapt if anyone wants something similar.

```bat
@echo off
:: Switch the console to UTF-8 (code page 65001).
chcp 65001 >nul 2>&1
:: Run from the script's own folder (where smartctl.exe lives), even across drives.
cd /d "%~dp0"

:: smartdataloggfil = SMART data log, sistestatusloggfil = last status log,
:: antalldisker = number of disks expected in the pool.
set smartdataloggfil=SMART_DATA.LOG
set sistestatusloggfil=SISTE_STATUS.LOG
set antalldisker=2

echo Checking overall SMART health for all connected disks.

:: Delete the old logs if they exist.
del %smartdataloggfil% >nul 2>&1
del %sistestatusloggfil% >nul 2>&1

:: Generate an updated SMART data log with one "test result" line per disk.
:: /C: makes findstr match the literal phrase instead of either word.
for /f "tokens=1" %%A in ('smartctl.exe --scan') do (
  smartctl.exe -H %%A | findstr /C:"test result" >> %smartdataloggfil%
)

:: Check in the SMART data log that every disk reports PASSED.
:: Token 6 of "SMART overall-health self-assessment test result: PASSED" is the verdict.
set FAILEDFUNNET=0
set DISKCOUNTER=0
for /f "tokens=6" %%A in (%smartdataloggfil%) do (
  if not "%%A"=="PASSED" (
    set FAILEDFUNNET=1
  )
  set /a "DISKCOUNTER=DISKCOUNTER+1"
)

echo SMART result: %FAILEDFUNNET% (0=OK, 1=FAIL).
echo Number of disks found: %DISKCOUNTER% / %antalldisker%.

:: Only OK if SMART passed AND the expected number of disks was found.
set ALTOK=0
if %FAILEDFUNNET% equ 0 (
  if %DISKCOUNTER% equ %antalldisker% (
    set ALTOK=1
  )
)

:: Log and act based on the result; the exit code lets a scheduler see it too.
if %ALTOK% equ 1 (
  echo All disks OK. Running sync to the cloud. > %sistestatusloggfil%
  echo STARTING SYNC.
  rem Put the sync command here, e.g. rclone sync SOURCE REMOTE:PATH
) else (
  echo Bad SMART health detected, not running sync. > %sistestatusloggfil%
  echo BAD DRIVE HEALTH DETECTED. STOPPING.
  exit /b 1
)
exit /b 0
```

-

I'm planning to run a scheduled rclone sync script from my pool to the cloud, but it's critical that it doesn't run if files are missing due to a missing drive, because sync makes the destination match the source, effectively deleting those files from the cloud. I don't want to use copy instead of sync, as that would bring back files I don't want recovered when I run the opposite copy script for disaster recovery in the future, creating an unwanted mess. So I was wondering if there's any CLI tool I can use to check that the pool is OK (no missing drives), so I can use it as a condition for running the script. Or rather, one that also checks against the Scanner, halting execution if there are any health warnings.
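
The risk described in that question boils down to the difference between mirror and copy semantics. Here's a minimal Python model of it, assuming only file presence matters (real rclone also compares sizes, modification times or hashes); `plan_sync` and `plan_copy` are hypothetical helpers, not rclone APIs.

```python
def plan_sync(source: set[str], dest: set[str]) -> tuple[set[str], set[str]]:
    """Mirror semantics, like `rclone sync`: dest ends up identical to source.

    Returns (to_upload, to_delete). Only file presence is modeled here.
    """
    to_upload = source - dest
    to_delete = dest - source  # anything not visible locally is deleted remotely
    return to_upload, to_delete


def plan_copy(source: set[str], dest: set[str]) -> tuple[set[str], set[str]]:
    """Copy semantics, like `rclone copy`: only adds files, never deletes."""
    return source - dest, set()


# Cloud state from earlier runs; docs/old.txt was later deleted locally on purpose.
cloud = {"movies/a.mkv", "movies/b.mkv", "docs/old.txt"}
# Pool with one drive missing: b.mkv lived on that drive, so it isn't visible.
visible = {"movies/a.mkv"}

uploads, deletes = plan_sync(visible, cloud)
print(sorted(deletes))  # ['docs/old.txt', 'movies/b.mkv']: b.mkv would be lost

# Copy is safe against the missing drive, but a later restore by copy
# brings back the intentionally deleted file as well.
downloads, _ = plan_copy(cloud, set())
print(sorted(downloads))  # ['docs/old.txt', 'movies/a.mkv', 'movies/b.mkv']
```

This is why a health gate in front of sync matters: the sync plan itself can't tell a missing drive from an intentional deletion.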

-

Bumping this instead of starting a new thread... I'm still running GSuite Business with the same unlimited storage as before. I've heard some people have been urged by Google to upgrade, but I haven't gotten any mails or anything. I wonder if I'm grandfathered in, perhaps. They haven't contacted me, though; if they did, I'd just upgrade to have everything proper. I have 2 users now, as there were rumors in late 2020 that Google was going to clean up single users who were "abusing" their limits (by a lot) but leave those with 2 or more alone. I guess that's another possible reason I haven't heard anything. 2 Business users cost just a tad more than what I'd pay for a single Enterprise Standard. The process looks simple, but so far I haven't had a reason to do anything.

-

What I meant by that option is this: since I haven't activated duplication yet, the files are unduplicated. And since the cloud pool is set to hold only duplicated files, they should land on the local pool first. When I activate duplication, the cloud pool will accept the duplicates. It's working as intended so far, but I'm still hesitant about this whole setup, like I'm missing something that will hit me on the ass later.

-

Sure is quiet around here lately... *cricket sound*

-

DrivePool doesn't care about assigned drive letters; it uses hardware identifiers. I've personally used only folder mounts (set up via diskmgmt.msc) since 2014, across most modern Windows versions, without issues. Drives "going offline for unknown reasons" must be related to something else in your environment. Drive letters are inherently unreliable. The MountedDevices registry key under HKLM, which is responsible for keeping track of them, can get confused by random, unknown drive-related events, even to the point where the system won't boot properly. No StableBit software is needed for this to happen. Certain system updates mess around with the ESP (EFI system partition) for reboot-continuation purposes and may actually cause this if they're disturbed by anything during the process.

-

Seems tickets are backlogged, so I'll try the forums as well. I'm setting up a new 24-drive server and looking at StableBit first as a solution to pool my storage, since I already have multiple licenses and some experience with it, just not with CloudDrive. I've done the following. Pool A: physical drives only. Pool B: cloud drives only. Pool C: A and B with 2x duplication, with Drive Usage set so that B only holds duplicated files. This lets me hold off on turning on 2x duplication until I have prepared local data (I have everything in the cloud right now), so the server doesn't download and upload at the same time. Pool A has default settings. Pool B has balancing turned off; I don't want it to start downloading and uploading just to balance drives in the cloud, and the overfill prevention is enough.

My thought process is that if a local drive goes bad or needs replacement, users of Pool C will be slowed down but still have access to the data via the cloud redundancy. And when I replace a drive, the duplication on Pool C will download the needed files to it again. Is read striping needed for users of Pool C to always prioritize Pool A resources first? This almost seems too good to be true; can I really expect it to do what I want? I have 16 TB to download, plus double the upload on Pool B (2x cloud duplication for extra integrity), before I can really test it. I just wanted to see if anyone has had negative experiences with this before continuing.

My backup plan is to install a GNU/Linux distro instead as a KVM hypervisor and create a ZFS or MDADM pool of mirrors (for ease of expansion), with a dataset passed to a Windows Server 2019 VM on an SSD (backed up live via active blockcommit), and hope GPU passthrough really works. But it surely wouldn't be as simple... I know there is Unraid too, but it doesn't even support the SMB3 dialect out of the box, and I'm hesitant about the automatic management of all the open source software stacks involved. I've heard of freezes and lockups etc., dunno about it. Regardless, any of the backup solutions would simply use rclone sync, as I've used so far for user data backups. That would not provide live redundancy like hierarchical pools, so I'd lose local space to parity-based storage or mirroring. I won't have to lose any local storage capacity at all if this actually works as expected.
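
To make the intended layout explicit, here's a hypothetical Python model of it. It only encodes the expectations in this post (unduplicated files stay on Pool A, the second copy goes to the duplicate-only Pool B, reads prefer local copies), not how DrivePool actually decides placement or read priority; `place_copies`, `read_source` and the `prefer` order are assumptions.

```python
def place_copies(copies: int, subpools: dict[str, bool]) -> list[str]:
    """Return the subpools that hold each copy of one file (hypothetical model).

    subpools maps a subpool name to True if it is restricted to duplicated
    files only, like the Drive Usage limit on Pool B. Dict order is the
    preference order.
    """
    if copies < 1:
        raise ValueError("copies must be at least 1")
    unrestricted = [name for name, dup_only in subpools.items() if not dup_only]
    restricted = [name for name, dup_only in subpools.items() if dup_only]
    if not unrestricted:
        raise ValueError("at least one subpool must accept unduplicated files")
    if copies == 1:
        # Unduplicated files can only land on an unrestricted subpool.
        return unrestricted[:1]
    # Extra copies go to the duplicate-only subpools first.
    targets = unrestricted[:1] + restricted + unrestricted[1:]
    if copies > len(targets):
        raise ValueError("not enough subpools for the requested duplication")
    return targets[:copies]


def read_source(placement: list[str], online: set[str], prefer: list[str]) -> str:
    """Pick the first preferred subpool that holds a copy and is online."""
    for name in prefer:
        if name in placement and name in online:
            return name
    raise RuntimeError("no online copy of this file")
```

With `{"A": False, "B": True}`, one copy lands on `["A"]`, two copies on `["A", "B"]`, and with A offline `read_source` falls back to B, which is the slowed-down-but-available case described in the post.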

-

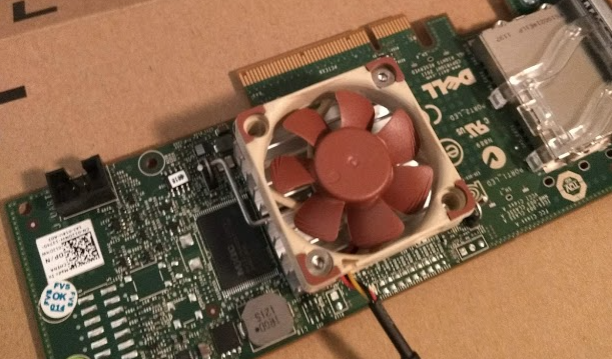

A small 40mm fan works wonders in desktop builds or "calmed down" rack chassis. It brought my Dell H200 and LSI 9211-8i cards from too hot to touch down to barely noticeable heat. Just connect it to any spare case fan header. The model in the picture is a Noctua NF-A4x10 FLX 40mm. I'm also an RC hobbyist, so finding small screws that fit... anything... is never a huge problem.

-

If you're going to use a cloud that can roll back data, then using a solution that splits up your files is straight up a recipe for COMPLETE disaster. This is nonsensical to both argue and defend. In that scenario, uploading entire files is safer, no matter the software. I ignore nothing; I simply stay on topic. It doesn't matter how volatile you claim cloud storage is in general; this was about Google Drive specifically. And there is no argument that can claim CloudDrive was safer than, or equally safe as, fully uploaded files in a situation where the cloud rolled back your data. The majority would agree that outdated data, probably outdated by just seconds or minutes, is better than losing it all. The volatility issue here was CloudDrive expecting that not to happen, straight up depending on it, not the use of cloud storage itself. You seem to want to defend CloudDrive by saying that the cloud is unsafe no matter what. But the fact stands: fully uploaded files, as opposed to fragmented blocks of them, would at least have offered the somewhat outdated data that Google still had available, while CloudDrive's scrambled blocks of data became a WMD. CloudDrive lost that event, hands down. Claiming my files synced nightly via Rclone would not have been any safer is absolute nonsense.

-

Nonsense. Any rollback would roll back entire files, not fragments. Rclone-uploaded data would just be older versions of complete files, still entirely available and not blocking access to any other data. I'm not saying Rclone is universally better, but in this case it definitely was. Saying Rclone data is stagnant is nonsense too; it entirely depends on the usage. There are absolutely no valid arguments that make Rclone look worse in the scenario that happened. I'll look into the pinned data changes.

-

It seems upload verification (or any action on the client side, for that matter) won't help much if the risk is Google doing rollbacks. CloudDrive splits files into blocks of data, so everything becomes fragmented and disastrous if some of those blocks get mixed with rolled-back versions. This explains everything. So it will work, as long as Google doesn't mix old and new data blocks. All this makes rclone FAR safer to use: any rollback will not be fragmented, but apply to complete files. But thanks for the explanation.
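
A toy Python model of why a partial rollback hurts block-based storage more than whole files. It assumes a file stored as fixed-size blocks where the provider silently reverts only some of them; the block size and the `partial_block_rollback` helper are hypothetical and not CloudDrive's actual on-disk format.

```python
def split_blocks(data: bytes, size: int) -> list[bytes]:
    """Cut data into fixed-size blocks, the way a block-based drive stores it."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_block_rollback(old: bytes, new: bytes, size: int, reverted: set[int]) -> bytes:
    """Reassemble `new`, except that blocks whose index is in `reverted`
    come back with their `old` contents (a silent provider-side rollback).

    Assumes old and new have the same length, for simplicity.
    """
    old_blocks = split_blocks(old, size)
    return b"".join(
        old_blocks[i] if i in reverted else block
        for i, block in enumerate(split_blocks(new, size))
    )


old, new = b"AAAABBBBCCCC", b"aaaabbbbcccc"
mixed = partial_block_rollback(old, new, 4, {1})
print(mixed)  # b'aaaaBBBBcccc': matches neither version
```

A whole-file rollback in the same situation simply returns `old`, an outdated but internally consistent version.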

-

Hi, it's been a while since I stepped away from StableBit software and have been using 100% cloud via rclone instead. I was wondering how CloudDrive is doing, since I'm considering a hierarchical pool with DrivePool:CloudDrive duplication. It felt very volatile the last time I used it. Now I see this sticky: https://community.covecube.com/index.php?/topic/4530-use-caution-with-google-drive/ Is this a problem similar to write-hole behavior on RAID, where blocks of data were being written via CloudDrive and considered uploaded, but were interrupted and then corrupted? If so, it should not cause issues with connectivity to the drive and/or the rest of the data. If not, I'll be very hesitant to use it.

-

Just did some spring cleaning in my movie folder and removed over 200 movies (folders with the movie file and .srt files). When I marked all of the folders and pressed delete, about 20 of them came back really fast. Then I marked those for deletion, pressed delete, and about 5 bounced back. Then 2. After deleting those 2, no more folders bounced back. I've probably had these files for 2-3 years, across a couple of iterations of DP. Perhaps outdated ADS properties are causing it? Not a huge deal, more of an awkward observation; it didn't hurt my data but required a watchful eye to avoid leaving a mess. I was about to turn off replication for my media, as I feel the Scanner's read scans are a good enough safety net to discover reallocated/pending sectors early, and with the evacuation balancer I feel pretty safe. I have a strong feeling this was a replication-related event, maybe a race condition, with internal OS/NTFS functions running side by side with what DP wants to do and being a little ignorant of each other. I'm not thinking about turning off duplication anymore, though, after a 3TB Seagate just died suddenly in my desktop. It only hosted backups that would regenerate, but it's worse if that happens in the pool, since it would render evacuation useless. So I'm just curious about the rubber-band dynamics of folders coming back from the dead, but it's not a huge deal. Thanks.

-

Yeah. It seemed to accumulate a little more data than it should over time, though not much, but it worked without errors and test recoveries went OK too. I ran it for a few months. EDIT: Oops, I thought this was my old DP post. I've never done it with CD. I ended up with rclone and raw gdrive@gsuite instead.

-

I'd be interested in hearing the outcome of this. Tagging to follow.

-

Could it be the 90% default balancing rule in the prevent overfill plugin that starts overloading your USB array by trying to move things around continuously? (Will it actually do this, Christopher?) USB is a very fickle thing, and hubs/backplanes/controllers differ enormously in stability, actual bandwidth and handling of multiple devices. Slow drives, using the system drive, and/or overfilling too much could have given you similar symptoms on SATA.

-

If you use the technology as designed, you should never need (or want) to know at all. Simplicity is the point. DrivePool will handle duplication and the drives in the pool safely for you. Use file placement if you want certain files on certain drives. It really doesn't matter where the duplicates are, as long as they're somewhere in the pool.

-

By design, you'd add the new drive and press Remove on the old one, which will move things off it safely. You can also copy the hidden PoolPart folder to the new drive and then swap the drives while the machine is off or the service is stopped, but it's not clean if there's activity on the pool while you do it.

-

I would probably just shut it down (unless you have hot/blind swap support) or take them offline via diskmgmt.msc so they're all missing, then remove them from the pool in that state, like swapping a bad drive that can't be removed.

-

For rooms without people this is actually a very good concept, and it wouldn't be bad at all. In reality, moving all the valuable data from one building to another is already being done in major enterprise computing through time-based geo-replication, e.g. every 5 minutes. I'd compare that analogy more to SMART and internal drive ECC. Like you say, mitigation is good even if it's not the entire solution. A pure system backup is perhaps 20-60GB max. The rest can be backed up as single files or through an atomic/browsable solution. I think this specific situation would be better served by you researching backup options that would make you happy, rather than having StableBit create something half-assed. I can't believe Alex would want to implement only partial protection if ever moving in that direction, and behavioral/heuristic algorithms can quickly become a fickle beast to deal with. Think about it. If you only have a few files you want to protect, then your own solution won't even work. If you have many, your solution still includes accepting SOME damage without being overrun by it, which is a horrible way to protect anything, and a lot of users would rage about losing anything if it was an option that even had the word "protection" in it. Why have that fire door when you can easily protect it entirely with a scheduled separate copy and have no performance or usability penalties? Having to manually consider how much an application will modify your files (turning it on/off) is advanced/expert-level computing, and at that level there are much better options, simple, obvious ones, to protect your data. Yeah, I personally don't see why anyone would want something like this when 100% is so easy to achieve, and more often than not the stance is that you either accept loss or you don't. DP is loved over RAID because you'll rarely lose everything, thanks to individual drives keeping their own intact file systems, which is a step forward from typical RAID, where you would lose everything.

What you're proposing is a step backwards: from losing nothing (if you just bother to make basic separate copies in any of the many ways available, even with native tools) to rarely losing everything. If anything, I would prefer an option to have some files copied to a read-only location with a retention of X days or something, which would be 100% protection with an easier implementation. The very LAST thing I would like is to have DP try being intelligent and deciding what's malware and what's not during normal operations. I don't want that to be its job at all; I just want GOOD pooling with GOOD integrity, and I don't want anything unrelated to even remotely possibly affect that. I'm a fan of tools that do mainly one job and do it well, instead of trying to ad hoc anything and everything that comes to mind, especially partial solutions to data protection.

-

This is a horrible idea. First of all, there would always be SOME damage done, and there are lots of situations where you would write to a large number of files at once. Second, ultimately backups are the only real data protection. DrivePool offers redundancy, which is not a backup. Look into the 3-2-1 rule. That said, you can ransomware-protect your most important files by simply scheduling a separate copy of them locally on the server to a non-shared folder. I'd look into the free Veeam Agent for both GNU/Linux and Windows. It works great with local and network destinations, and supports incremental backups as well as individual file browsing and recovery. I personally also use rclone to the cloud for offsite copies.
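
A minimal Python sketch of such a scheduled local copy with a retention window, assuming the script itself is triggered by the OS scheduler (e.g. Task Scheduler or cron). The folder layout, the timestamp naming and the `keep_days` value are hypothetical; the actual protection comes from `backup_root` not being shared over the network, which this code does not enforce.

```python
import shutil
from datetime import datetime, timedelta
from pathlib import Path

STAMP = "%Y%m%d-%H%M%S"  # snapshot folder names, e.g. 20240101-120000


def snapshot(source: Path, backup_root: Path, now: datetime) -> Path:
    """Copy the whole source folder into a new timestamped folder."""
    target = backup_root / now.strftime(STAMP)
    shutil.copytree(source, target)  # also creates backup_root if missing
    return target


def prune(backup_root: Path, keep_days: int, now: datetime) -> list[Path]:
    """Delete snapshots older than keep_days and return the removed paths."""
    cutoff = now - timedelta(days=keep_days)
    removed = []
    for entry in sorted(backup_root.iterdir()):
        try:
            taken = datetime.strptime(entry.name, STAMP)
        except ValueError:
            continue  # not a snapshot folder created by this script
        if entry.is_dir() and taken < cutoff:
            shutil.rmtree(entry)
            removed.append(entry)
    return removed
```

A scheduled run would call `snapshot(...)` and then `prune(...)` with `datetime.now()`, so an encrypted source only poisons the newest snapshot while older ones stay intact until they age out.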

-

I think they must be cleaned up at some point, or my drives going all the way back to 2014 would be incredibly messy by now, but there's just the odd empty one here and there.