Everything posted by Edward

-

Cheers srcrist, much appreciated. So I will destroy the existing CloudDrives and start again. I will then use the same key for the newly created cloud drives. I just created a new test CloudDrive with encryption and that worked just fine. I also added that new test CloudDrive to the existing non-encrypted (CloudDrive) DrivePool and that also seems to have worked. But I will encrypt all cloud stuff once I recreate the drives. Edward

-

As per title. I have various Cloud Drives based on OneDrive. I did not enable encryption when I first created the CDs, assuming I could enable it later on given that encryption works on the data, not on the container. I guess it is not programmed that way and I will need to destroy all CDs, re-create them and re-upload all data? But asking just in case I can avoid this headache. On a related note, do I have to provide the same H key to all the CloudDrives? I have them pooled into a DrivePool. Cheers

-

Anyone?

-

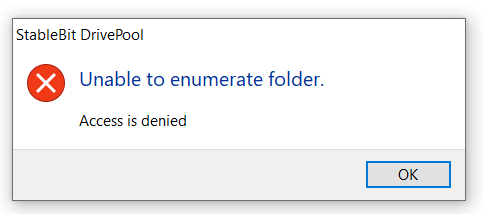

Hi Terry. Many thanks for your most helpful and comprehensive response. Much appreciated. In fact your message, and in particular 'take ownership', got me thinking and I remembered that I had encountered this before. The solution proved quite simple and indeed worked just fine in my present case. In the previous case the issue arose when I moved a hard disc to a different computer. So I recalled that I had also moved the discs that contain my DrivePool to a different machine; that was some time ago and all has been well. However, on looking closely at the permissions for the 'denied access' folder (as reported in DP), they were different to those of the other folders in the DP. The other folders were created after I had moved the discs. So I simply changed the 'owner' (within File Explorer) to the same owner as seen in the fully accessible folders. The path to this in File Explorer is Folder > Properties > Security > Advanced > Owner > Change. What appears to have occurred is that, even though I 'shared' the folder in File Explorer when I swapped the discs to a new machine, the 'owner' stayed the same and DP retained the old 'owner', leading to 'Access Denied'. Given that DP was reading/writing to all the folders in the relevant parent folder and I could read/write to all folders in File Explorer and other utilities (in particular RDP), I assumed all was well. This is amplified by the fact that when I migrated the DP licence to the new machine, DP very happily rebuilt the pool without reporting any conflict in 'owners'. So I would recommend @Christopher (Drashna) have a look at this and, if I'm correct, ask the developers to implement a routine to check 'owner' consistency when a user migrates a pool. The Winaero app looks interesting. I'll have a play with it. Thanks again for responding. E edit: I had to reboot to propagate the changed 'owner'.

-

Looking for a clean strategy for backing up vital data to CloudDrive please. Up to now I've been using Crashplan for backing up vital data but want to migrate away from them (long story). Data in Crashplan comprises about 3tb. So far I have a DP (12tb with full x2 duplication) and a CD (2tb) using several OneDrive drives which I can readily enlarge/add to. My thinking is that, for simplicity, I would just synchronise my vital data (i.e. not all data) directly to the CD (one-way sync with a sync/backup program). But it occurs to me that perhaps a better solution is to add my CD to the existing DP and do some form of folder duplication/balancing of vital data to the CD drives from the DP drives. Is this more optimal? Another alternative is to create a new DP and then include the existing DP and CD. Not sure what added benefit this would give me, but asking anyway. Data in the CD does not need to be duplicated within the CD (as by definition it will be stored locally and duplicated there, and anyway I assume Microsoft's OneDrives are robust). However I need an easy way of including/excluding folders that get uploaded to the CD.
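The first option in the post above (a one-way sync of selected folders that never propagates deletions) can be sketched roughly as follows. This is only an illustration of the idea, not how CloudDrive, DrivePool or any particular sync program works; the function name `one_way_sync`, the `include` list and the 2-second timestamp tolerance are all assumptions made for the example.

```python
import shutil
from pathlib import Path

# Assumed tolerance for file systems with coarse timestamps.
MTIME_TOLERANCE_S = 2.0


def one_way_sync(source: Path, target: Path, include: list[str]) -> list[Path]:
    """Copy new or changed files from selected top-level folders to target.

    Deletions are never propagated: a file removed from source stays on target.
    Returns the list of target files that were written.
    """
    copied = []
    for folder in include:
        src_root = source / folder
        if not src_root.is_dir():
            continue  # folder named in the include list does not exist
        for src in src_root.rglob("*"):
            if not src.is_file():
                continue
            dst = target / src.relative_to(source)
            s = src.stat()
            # Copy when the target is missing, differs in size, or is older.
            if (
                not dst.exists()
                or dst.stat().st_size != s.st_size
                or s.st_mtime - dst.stat().st_mtime > MTIME_TOLERANCE_S
            ):
                dst.parent.mkdir(parents=True, exist_ok=True)
                shutil.copy2(src, dst)  # copy2 also copies the modification time
                copied.append(dst)
    return copied
```

Called as, say, `one_way_sync(Path("P:/"), Path("Z:/"), ["Vital"])` (drive letters and folder name are placeholders), only the folders named in `include` are uploaded, which is the include/exclude control asked for above.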

-

I'm working on folder/file placement duplication and for my largest folder(s) in my pool I'm getting 'Unable to enumerate folder - access is denied' error in DP. However in Windows explorer (and other programs) as well as over the LAN I can access all the folders without issue. Where do I start troubleshooting this issue please?

-

Interesting. I also use Macrium and will look at this with a view of moving my image files out of the pool.

-

I fully recognize that the current issue is not mine (but I'm the OP); however I would highly appreciate it if: 1. Someone could tell me how to find out which files on the drives are unduplicated. 2. This thread is anyway updated with the recommended processes/commands that need to be followed when a problem occurs, or with a link to such processes/commands. cheers Edward
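One rough way to approach question 1 by hand is sketched below. It assumes that each pooled disk keeps its share of the pool as ordinary files inside a hidden PoolPart folder at the disk root, and that a duplicated file shows up at the same relative path in more than one of those folders. The function name `find_unduplicated` is made up for the example, and this is not a StableBit tool or command.

```python
from collections import defaultdict
from pathlib import Path


def find_unduplicated(pool_parts: list[Path]) -> list[str]:
    """Return relative paths of files present in only one pool-part folder."""
    locations = defaultdict(set)
    for part in pool_parts:
        for f in part.rglob("*"):
            if f.is_file():
                # Key on the path relative to the pool-part root so the same
                # pooled file on two disks maps to the same entry.
                locations[f.relative_to(part).as_posix()].add(part)
    return sorted(rel for rel, parts in locations.items() if len(parts) == 1)


# Usage (the folder names are placeholders; use the actual hidden folders):
# find_unduplicated([Path(r"D:\PoolPart.<id>"), Path(r"E:\PoolPart.<id>")])
```

The result also lists files that were deliberately left unduplicated, so it is a starting point for checking rather than an error report.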

-

I got notified about your new post on this old thread. Very disappointing to hear no progress has been made since I posted 4 years ago. Let's hope Christopher is able to sort out your immediate issues and longer term some progress is made on the issues Christopher and I discussed 4 years ago. I've not had any issues with drives since 4 years ago but I guess it is only time ....

-

Thx Christopher. Did it. You will have received two submissions. The first may simply be the default files from your troubleshooter app. The second is hopefully the actual logs from the capture log. But the main issue running this is that I did not complete the error within CD as I got stuck in a cert error at live.com and Firefox did not allow me to progress further. The cert error said the following: login.live.com uses an invalid security certificate. The certificate is not trusted because the issuer certificate is unknown. The server might not be sending the appropriate intermediate certificates. An additional root certificate may need to be imported. Error code: SEC_ERROR_UNKNOWN_ISSUER However when I run the CD process without the FiddlerCap process I don't get a live.com cert error. I guess this is due to having to accept the FiddlerCap temp cert or something like that. Anyway have a look at the submissions. Hopefully there is something there that you can work with. I cross-referenced to this thread. By the way I could not find a way of navigating within your app to the FiddlerCap capture file, which defaulted to my desktop. Not sure if your app picked up the capture file anyway. cheers Edward

-

Hi. I have 3 OneDrive drives within 1 CloudDrive, two of which are not mounting. Retry in the CloudDrive app does not work. Reauthorize at first seems to work (it gives the 'authorized' message within the browser) but then within the CD app I immediately get a message saying "Error Connecting to Onedrive - Cannot reauthorize drive X. Drive Data was not found when using the provided credentials". I have not changed any passwords on the affected OneDrive drives. I did recently change the password on the non-affected OneDrive drive (and if I recall I had to reauthorize, which worked just fine). Any ideas as to my next steps please? Should I simply 'destroy' the OneDrive drives and start again? I hope this is not the only solution as it defeats the integrity of any data in those drives. cheers Edward

-

Hi Christopher. Yep, it was enabled. I toggled it again and all drives are now q'ed for a scan. Let's see if the specific drive gets scanned. Nope, I had not messed with disk settings. In particular I did not exclude the specific drive from a scan. Is there any way of forcing a scan on a specific drive rather than all drives?

-

I changed my OS SSD about a month ago, from a 125gb to a 1tb disc (WDC SSD, Windows 10 Pro). The disc is seen by Scanner (and DrivePool etc). However it never gets checked. The setting for the disc in Scanner is 'allowed'. Its partitions are not in any DrivePool. Even if I force a check, all the other drives get checked but not this new SSD. The previous SSD was checked just fine. How can I force a check please?

-

Thanks Chris. I downloaded the dpcmd utility from here, however the parameter check-pool-fileparts does not seem to be recognised. But in any event my unduplicated data is explicitly chosen by me in a partial endeavour to reduce disk usage. Thanks again for the link to Shane's and Alex's post about 'other' - all fully understood. Small question though if I may. How/where does DrivePool get the reported value? thanks/regards Edward

-

Thanks Christopher. Since my last post I've been trying to sort things out. Specifically I moved about 500gb of duplicated data out of the pool to an external USB drive. This had two positive effects and one negative effect. The positives are that the free space in the pool increased by 2x (as expected) and 'other' decreased by about 400gb (not expected). The negative is that I have 500gb of unprotected data. The current view of the main pool is as per the screenshot below (I did not grab a screenshot of what it was before). What I'm not getting is why 'other' has reduced to such an extent (still significant but far better than the ~700gb it was before). How is DrivePool calculating 'other' and do you have a utility that allows me to drill into the detail?

-

Hi Chris. Thanks for responding. I'm not getting the full picture here. I get the difference between 'size' and 'size on disk'. However the numbers are not consistent. I have 3 OneDrive 'disks' (H, I and J) all within one CloudDrive Pool (Z). Currently I have nothing in the pool. When I look directly at H, I or J, the 'size on disk' numbers are low (~200mb each). The size of the PoolParts on these drives is zero. Looking at the poolparts on the real physical drive (d:), the numbers are inconsistent. Two of the poolparts show 1gb used and the other 280gb used. All three of these OneDrives were set up exactly the same in the CloudDrive app - namely with 1gb local cache and expandable drives, albeit with max sizes set at different values. Within the DrivePool app I'm seeing ~700gb of 'other' and I can't get anywhere near that number by summing the values I see as being either 'size on disk' or 'used' (I fully recognise that there may be other stuff like metadata in 'other'). Having a way of drilling down into 'other' would help in the analysis. The app is reporting a value so it must know what it comprises. Also it would help my understanding to know: once data is uploaded to the relevant CloudDrive, is the local cache deleted at that point? Or is it the case that all data in a CloudDrive has to be mirrored physically at all times on a local physical drive? kr E
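For anyone wanting to cross-check the 'other' figure by hand, here is a minimal sketch. It rests on an assumption rather than on anything confirmed in this thread: that 'other' is roughly the used space on a pooled disk that lies outside that disk's hidden PoolPart folder. Both function names are hypothetical.

```python
import os
from pathlib import Path


def folder_size_bytes(folder: Path) -> int:
    """Sum the logical sizes of all files under folder (not 'size on disk')."""
    total = 0
    for root, _dirs, files in os.walk(folder):
        for name in files:
            try:
                total += (Path(root) / name).stat().st_size
            except OSError:
                pass  # unreadable file (e.g. access denied): skip it
    return total


def estimate_other_bytes(used_bytes: int, pool_part_bytes: int) -> int:
    """Assumed definition: used space on the disk that is not pooled data."""
    return max(used_bytes - pool_part_bytes, 0)
```

The used figure for a disk can be taken from `shutil.disk_usage(path).used`. Because `folder_size_bytes` adds up logical sizes, the estimate will differ from Explorer's 'size on disk' and will not account for file system metadata.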

-

I had not fully appreciated that for all CloudDrive allocations it consumes the same amount of space on my local drives on a 1-to-1 basis. Specifically I have ~2.2tb of CloudDrives (over 3 allocations) and I also have 3 instances of CloudPart folders on 1 local drive. This has consumed the majority of my spare local disk space. In my case the CloudParts are on my largest HDD, which is now nearly full. Is this correct or is something wrong with my setup? If it is correct behaviour then CloudDrive is quite a bit more costly due to the requirement to provision local storage. I would have thought that these mirroring requirements could be handled via some sort of efficient database handling of metadata or some such. I really do hope my setup is in error. Cheers Edward

-

Thanks Chris. Since I posted last time I created 3 OneDrive drives (totalling 2.2tb) and added them to my existing pool. I got terribly lost trying to work out how to do things like balancing and duplication. So I removed them and created a new (z:) pool just with the cloud drives. I was just researching how to back up things to this new CloudDrive. Things like Allways Sync (which I know well) and opendedup were on my radar. However you mention creating a new pool encompassing the two pools (which I did not know was possible), which is something I need to look at to see if it achieves what I want. Let me think on that. Allways Sync I like as it 'just works'. It does not have dedup and versioning but does allow deletions not to be propagated, which is useful. I had another look at Crashplan and see that I can get 75% off their business account for the year following expiry of my account. Given that I still have about a year left on my plan it will only cost me $30 for two years of their business plan. So I may keep that as well as keep CloudDrive (hey, one can't have too many backups!). I'll probably switch the backups to run from my local drives rather than the DrivePool so as to get round the shadow copy issues we spoke about (via a recent ticket). I thought my Google accounts had 1tb attached to them but find it is only 15gb, which is pretty useless for my purposes. So I'm stuck with OneDrive for now - unless you know of some place that still does not charge a fortune? I really need a university account with 1pb of ftp storage. Anyway thanks again for your time on this. Edward

-

Christopher Thanks very much, most appreciated. Couple of quick Qs if I may? - What is the optimum way of organising the DrivePool? Create a new pool for the cloud stuff or simply add the cloud pools to the existing pool? In either case how do I ensure that data in the cloud is the backup and not live data being used? Or should I just add to the existing pool and let the balancers and the local caching just do its stuff with no hit on (perceived) performance? I have about 75m downstream bandwidth with no data cap. - Is versioning enabled either in CD or DP? The Veeam app looks very interesting. regards Edward

-

So Crashplan, which I have been using for years, has decided to give up on their retail clients, concentrating on their 'business' clients (more expensive and less functionality so far as I can see). Crashplan are giving two months free beyond whatever expiration a customer has - in my case this takes me to August 2018. However within a week of their announcement I started getting various major errors (no inbound connections, limited outbound connections and severe throttling). Must have been a coincidence (!) but anyway it means I now need to put different plans in place. </rant>

So I was wondering if CloudDrive (CD) can be used instead, particularly as I have various storage (Google, OneDrive etc) available. My cloud backup is currently about 2.2tb.

So my wish list:

- upload to the cloud the 2.2tb - with room to grow.
- decide what data is backed up to the cloud - most of my local data is not 'critical' - a nuisance to lose but life would continue.
- duplicate all data (so not reliant on one provider) - which would I assume mean I need 4.4tb storage.
- ensure the CD pool is up even if one provider is down (i.e. auto switch to the duplicate data).
- ideally the duplication transmission occurs 'provider to provider' rather than via my local machine.
- fully encrypt all data - both in its transmission and end storage - must simply be a secure blob from the provider's point of view.
- deduplication (I often move large folders around).

I already have DrivePool so I would be able to integrate with that nicely. Not sure if I would create a new pool (for the cloud stuff) or simply add the cloud drives to the existing pool.

One other thing I do with Crashplan is to have several computers (locally and externally) back up to my local server. This is beyond the scope of Covecube but I would highly appreciate any suggestions of how I can replicate this functionality. I'm thinking perhaps a mixture of rsync and a VPN? Or perhaps I can purchase a multi CD licence and sync things somehow (locally and externally - two machines 13k miles away!). I did try CD a short while ago but ran out of time, so I guess I need to purchase CD now. cheers Edward
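The storage arithmetic behind the wish list (2.2tb kept in two copies needs 4.4tb) can be written out as a small sketch, along with a rough upload-time estimate. The upload speed is a free parameter here since none is given in the post, and both function names are made up for the example.

```python
def required_storage_tb(data_tb: float, copies: int) -> float:
    """Total cloud storage needed when every file is kept `copies` times."""
    return data_tb * copies


def upload_days(data_tb: float, upload_mbit_per_s: float) -> float:
    """Days needed to upload data_tb (decimal terabytes) at a sustained rate."""
    bits = data_tb * 1e12 * 8                    # terabytes -> bits
    seconds = bits / (upload_mbit_per_s * 1e6)   # megabits/s -> bits/s
    return seconds / 86400                       # seconds -> days


# 2.2tb duplicated across two providers, as in the wish list above.
total_tb = required_storage_tb(2.2, copies=2)
```

If the duplicate copy is uploaded from the local machine rather than 'provider to provider', the upload time should be estimated on `total_tb`, not on the 2.2tb of source data.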

-

Thanks Christopher (and thanks also for the other help via the ticket system). I did not see a "private" or "incognito" session setting anywhere in CloudDrive. However I did manually log out from the existing OneDrive session and log into a different account. That seems to work. I'll need to double check that the permissions 'stick' and that the correct accounts are being consumed. I'll also add these cloud drives to either my existing DrivePool or a new DrivePool. Hmm, I wonder if it is possible to access this CloudDrive directly from anywhere by, say, attaching a domain name to it? If so what would the IP details be? cheers Edward

-

Sorry, perhaps I'm just doing something wrong. I can't seem to connect to a different OneDrive account (different username and different password). When I press 'Connect' I simply get to an 'Authorized' web page which allows me to create another (cloud) drive using the same OneDrive account. I don't see where I can connect to a different OneDrive account. Specifically I have a 5 user Office 365 account which allows me to have 5 * 1tb OneDrive spaces. I want to use (say) 500gb in (say) 3 accounts and then pool those (1.5tb) into my existing local DrivePool account. cheers Edward

-

Thanks Chris. Permissions now seem all ok. Just wish there was an easier way of doing this. Pool organization has now settled down. All data is now reported as duplicated with no wastage. Pleasantly surprised how fast that was. Scanner is now starting to scan disks. OS disk on an SSD reported as healthy. First drive in the queue is already ~25% complete on a 1.82tb drive. Interestingly Scanner is incorrectly reporting the name of this drive. The actual drive is a Samsung but it is being reported as a Crucial SSD. (I wish I had a 1.82tb SSD drive!!). Is there anything I can do to force Scanner to correctly report the name? Now need to propagate the new server details to my various client machines (mainly JRiver media server, but also remote desktops). Also now need to re-adapt my Crashplan cloud backup (always fearful of that in case I do something wrong and delete my cloud backup, which will take weeks to upload again). E

-

Just a quick update from my side. Good and annoying news. Transplanted 4 storage drives from old machine to new machine, ensured all drives were online, reinstalled DrivePool and the old pool was seen immediately. DrivePool went away measuring things. New pool shown as H: but, in Disk Management, I changed that to P:. However within the pool I can't open the various folders; I get the error "You don't currently have permission to access this folder. Click continue to permanently get access to this folder." Clicking 'continue' simply results in a long wait with nothing apparently going on. Slowly folder permissions are coming through. Tried advanced security settings and found I'm not listed as having permission (even though the account is an Administrator). Added my account and that enumerated the folder tree. However I still had to claim permissions when navigating the folder tree. All a massive time waste! Claiming permissions via the Pool has also set up permissions on the underlying data disks (D: through G:). Scanner installed just fine. Now all q'ed for full scan. Currently undergoing 'temperature equalization' - whatever that means. E

-

@eduncan911. Thanks for that. NIC teaming is way above my experience level, but for sure I'm keen to learn (for all the apparent speed advances and flexibility). For now however I think I will get my new server (Lenovo TS140) up and running with the 4 drives and then move forward from there. As recommended I will first uninstall the Covecube stuff and install it again once all drives are in place. But a quick question if I may? You say you are connecting/streaming only via wifi, yet you have a 4 port ethernet NIC on your host. Don't understand. How does that all work? @Chris (Drashna). I'm hoping that I will simply stick with P: as my main pool and it will automagically come to that once I adapt the already pooled discs. Having SMART data only at the host level will be fine. After all, the whole purpose of Scanner is risk mitigation, so it being reported only at the host will be sufficient. However I'm hoping that the DrivePool will be visible at the VM level, together with the ability to read/write directly to the pool from a VM. Recent testing on my gigabit network was showing sustained throughput of about 105 MB/s, which is not too shabby (using SSD to SSD as end points). However this is quite variable in real life situations (mix of machines, slow drives, poor cat6 termination etc). E
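To put the throughput figure in context, a short worked example follows. It assumes the network is 1 Gbit/s Ethernet and that the quoted figure is in megabytes per second; the function names are hypothetical.

```python
def line_rate_mb_per_s(link_gbit_per_s: float) -> float:
    """Raw link speed in megabytes per second (8 bits per byte, decimal units)."""
    return link_gbit_per_s * 1000 / 8


def utilisation(measured_mb_per_s: float, link_gbit_per_s: float) -> float:
    """Fraction of the raw line rate achieved by a measured transfer."""
    return measured_mb_per_s / line_rate_mb_per_s(link_gbit_per_s)


# 1 Gbit/s is 125 MB/s raw, so 105 MB/s is 84% of the line rate.
share = utilisation(105, 1.0)
```

Protocol overhead (Ethernet, IP, TCP, SMB) takes a share of the raw rate, so a sustained figure in this range is close to what a single gigabit link can deliver in practice.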