Posts: 20 · Days Won: 4

Everything posted by methejuggler

-

Font color issue with high contrast theme enabled

methejuggler replied to jerblack's question in General

I know this is an old post, but it's still an issue 4 years later. My monitor has a brightness of 750 nits and pure white windows are nearly blinding, so I run everything in dark mode. This works for 99% of applications these days, but the StableBit suite doesn't respect the dark mode setting.

-

I can't replicate this. The buttons appear to be working fine for me. Were there any extra steps that you took to make this happen? Edit: I found it. Only happens if no drives are set as "SSD". I released a new version to fix this.

-

Very strange, it didn't use to do that when I was testing. I wonder when that bug crept in. I'll fix it when I get home.

-

Shane beat me to it, but yes you still need the Scanner plugin. I'd imagine that it'll stop giving that warning in a week or two when enough people have downloaded it and it has enough "sample size" to know it's ok.

-

I don't know about that. The solution I went with is to add all suspect drives to a single pool (no duplication on that pool), then add that pool to my main pool, so it acts as a single drive - meaning only a single copy of the duplicated files will ever be placed on any suspect drive.

-

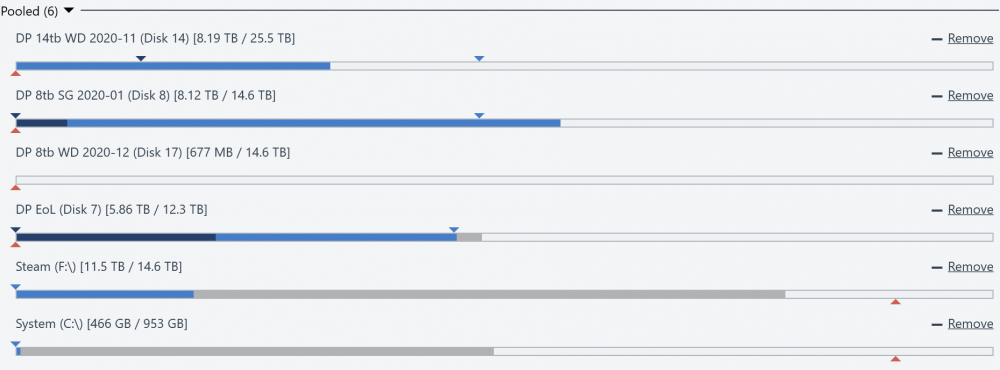

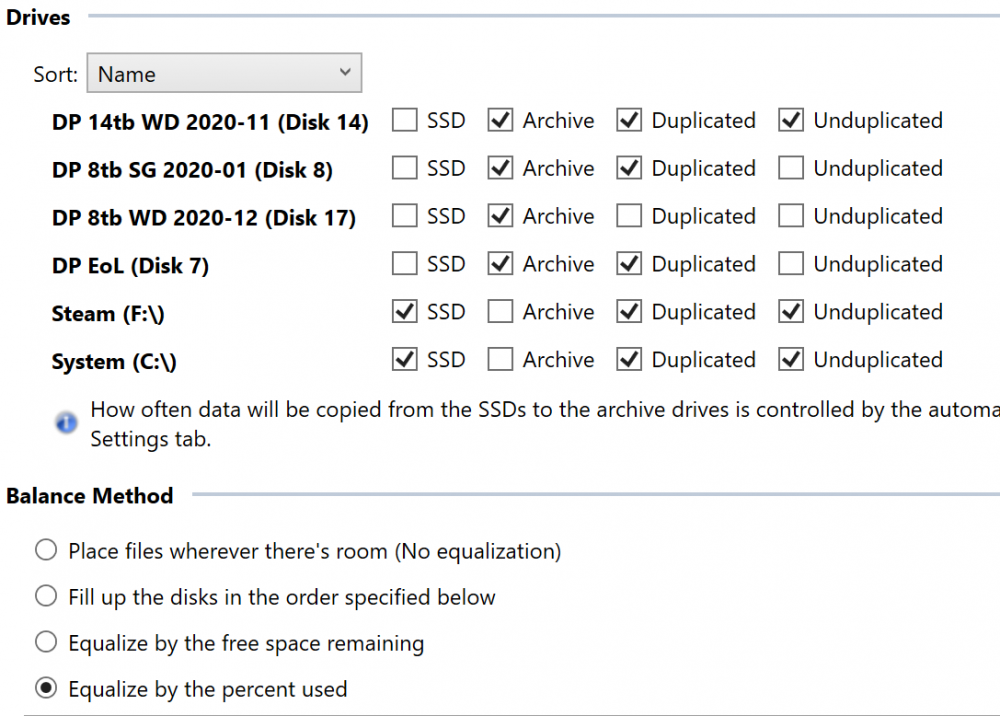

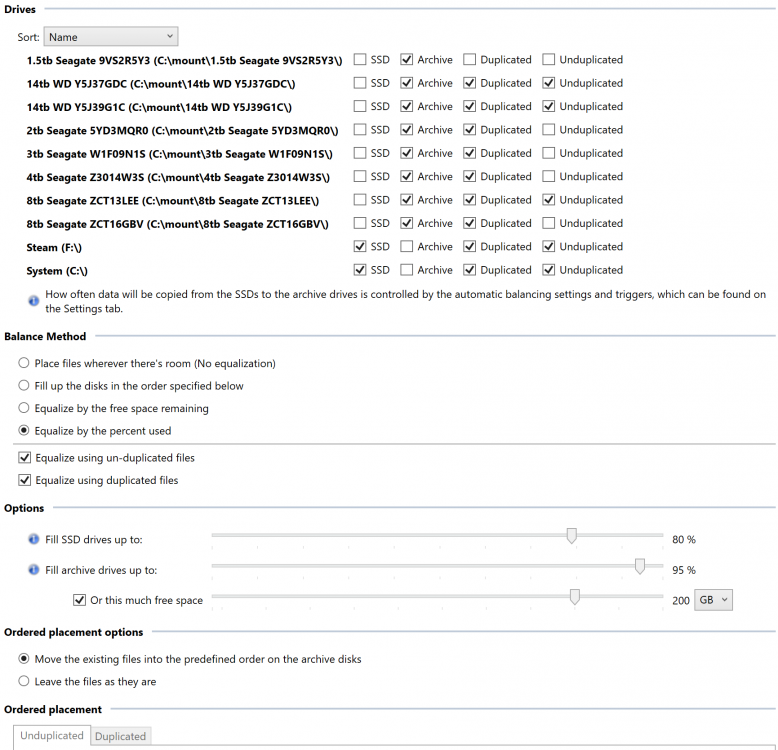

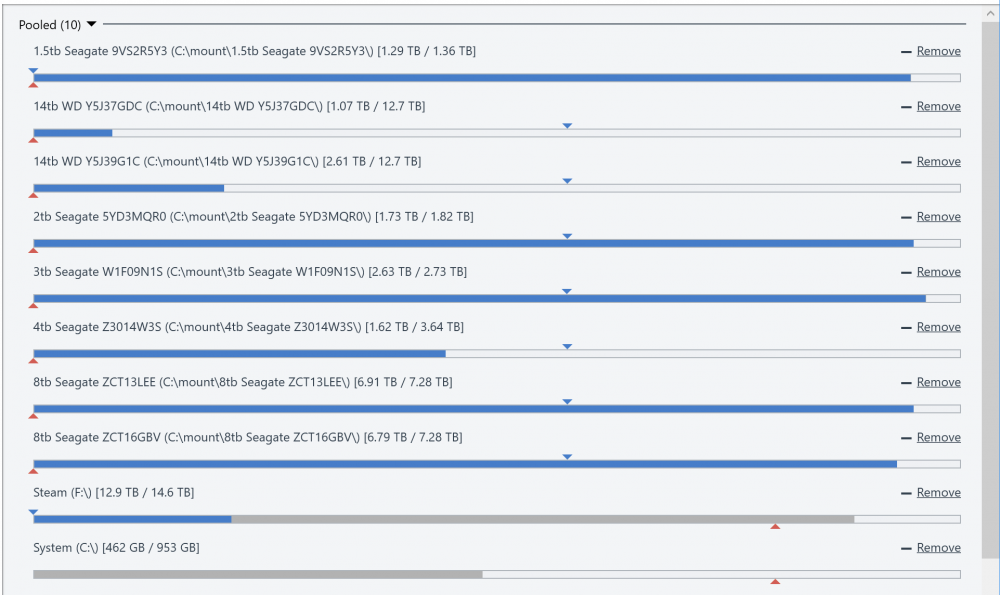

Example of my current setup with hierarchical pools: I have my end-of-life disks all in one pool, and each of the other pools has 2 drives, purchased at the same time (I put the purchase dates in the names to tell them apart more easily). One of the 8 TB Seagate drives already has a pending sector on it, so I don't trust it (so no unduplicated content). I'll probably RMA that soon. The 14 TB drives are solid so far. The new Western Digital 8 TB drives are still being scrubbed by the Scanner, so I have it set not to place content on them until that finishes.

Due to some strange glitch with DP while I was re-seeding and moving things to the new hierarchical pool setup, DP deleted a bunch of my duplicates, so it's in the process of re-duplicating. Even so, you can see the balancer would be attempting to move all the unduplicated content to the 14 TB drives, nothing onto the 8 TB WDs, everything off the "SSDs", and then balance the remaining 3 evenly. Note that it takes the "unpooled other" files into account for the even percentage balancing.
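To make the "even percentage" idea concrete, here is a minimal sketch in Python of how equal fill targets could be computed when some space on each drive is taken by "unpooled other" files that the balancer can't move. This is not the plugin's actual code (the plugin targets the DrivePool plugin API); the function name, the dictionary fields, and the example sizes are all made up for illustration.

```python
def equalize(drives):
    """Return the target amount of pooled data per drive (same units as the
    input) so that every drive ends up at the same fill percentage,
    counting unmovable "other" data toward that percentage.

    Hypothetical model: each drive is a dict with "name", "size",
    "pooled" (movable) and "other" (unmovable) amounts.
    """
    pooled_total = sum(d["pooled"] for d in drives)
    active = list(drives)
    targets = {}
    while active:
        size = sum(d["size"] for d in active)
        other = sum(d["other"] for d in active)
        pct = (pooled_total + other) / size  # common fill level to aim for
        # Drives already above that level from "other" data alone
        # can't take any pooled data at all.
        over = [d for d in active if d["other"] / d["size"] > pct]
        if not over:
            for d in active:
                targets[d["name"]] = pct * d["size"] - d["other"]
            break
        for d in over:
            targets[d["name"]] = 0.0
            active.remove(d)
    return targets


# Made-up example: sizes in TB.
example = [
    {"name": "A", "size": 8, "pooled": 6, "other": 0},
    {"name": "B", "size": 8, "pooled": 0, "other": 2},
    {"name": "C", "size": 4, "pooled": 1, "other": 0},
]
targets = equalize(example)
for d in example:
    fill = (targets[d["name"]] + d["other"]) / d["size"]
    print(d["name"], round(targets[d["name"]], 2), f"{fill:.0%}")
```

In this sketch drive B receives less pooled data than drive A even though they are the same size, because its 2 TB of "other" files already count toward its fill percentage.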

-

Careful - DrivePool doesn't make any distinction between the two duplicates... they're both considered a "duplicate". So if it puts both duplicates of the same file on two different "bad" drives, and both of those drives go, you lose the file. This is the reason I was looking at drive groups (and ended up solving it with hierarchical pools).

-

These are the same options you'd find in the "Drive Usage Limiter" plugin. They limit what data can be placed on each drive. I'm not sure how useful they would be for SSD drives, but for archives they allow you to specify that a certain drive shouldn't have unduplicated data placed on it, for example. Having these checked for SSDs just specifies which type of content can be placed there initially. The content is still moved off onto the archive drives later.

Exactly. It lets you specify suspect drives as only having duplicated data.

-

Take a look at the "Releases" link on the right side of the GitHub page. I'll edit the post with a link to there too so people can find it more easily.

-

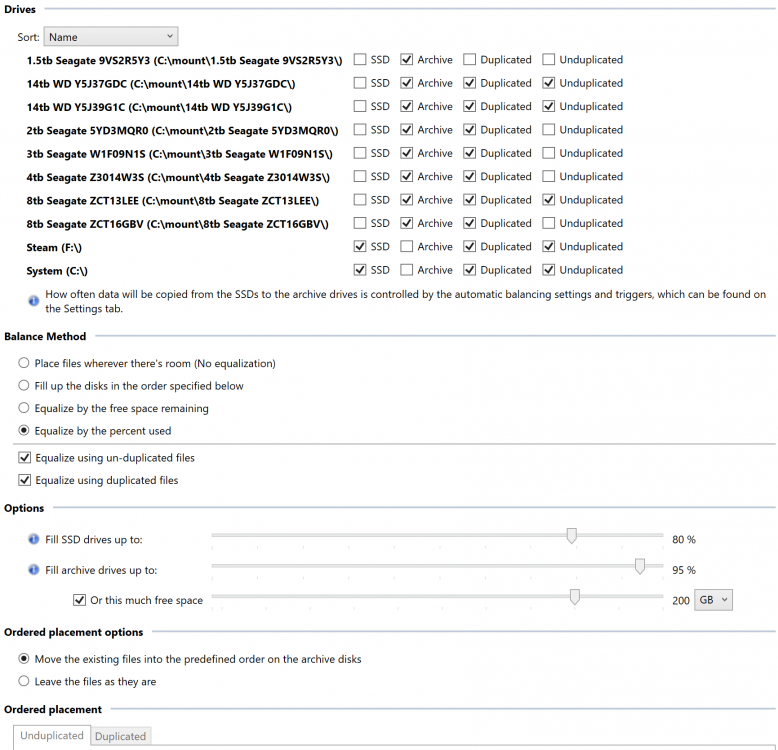

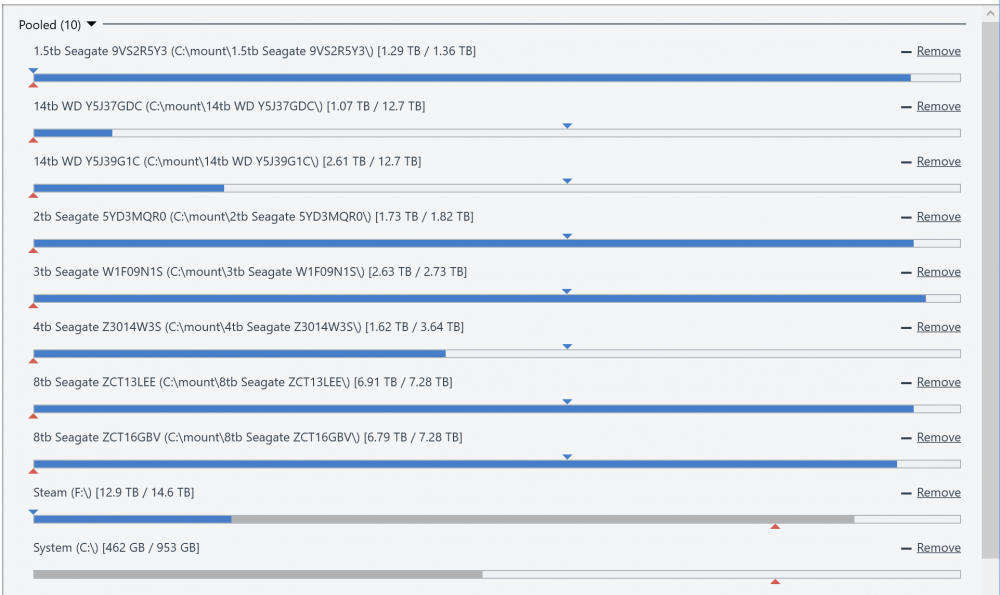

In an attempt to learn the plugin API, I created a plugin which replicates the functionality of many of the official plugins, but combined into a single plugin. The benefit of this is that you can use functionality from several plugins without them "fighting" each other.

I'm releasing this open source for others to use or learn from here: https://github.com/cjmanca/AllInOne

Or you can download a precompiled version at: https://github.com/cjmanca/AllInOne/releases

Here's an example of the settings panel. Notice the 1.5 TB drive is set to not contain duplicated or unduplicated:

Balance result with those settings. Again note the 1.5 TB drive is scheduled to move everything off the disk due to the settings above.

-

Ok, I put it up on GitHub: https://github.com/cjmanca/AllInOne Feel free to give it a try if you'd like. I also created a new forum post about it so people can find it easier:

-

I actually just thought of a solution for this which doesn't require a plugin! I could make separate pools for each of the drives I bought at the same time and NOT set duplication on these, and then make one big pool that only consists of those smaller pools and only set duplication on the one big pool. Then it would duplicate between pools, and ensure that the duplicates are on different groups of drives. I'll probably do the same with any disks nearing their end of life. Place all near EoL disks in one pool to make sure it doesn't duplicate files on multiple near EoL disks.
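A toy model of that layout, as a rough sketch only: duplication happens at the top level across sub-pools, and each sub-pool then stores its one copy on one of its own drives. The pool and drive names are invented, and the round-robin choice stands in for whatever DrivePool's real placement logic does (which depends on free space and balancer settings).

```python
# Hypothetical sub-pools, one per purchase batch, none with duplication enabled.
SUB_POOLS = {
    "14tb-batch": ["14tb-a", "14tb-b"],
    "8tb-batch": ["8tb-a", "8tb-b"],
    "near-eol": ["old-1", "old-2", "old-3"],
}


def place_copies(file_index, copies=2):
    """Place `copies` copies of a file, one per distinct sub-pool.

    The top-level pool treats each sub-pool as a single "drive", so two
    copies can never land in the same sub-pool. Round-robin selection is
    an assumption made to keep the example deterministic.
    """
    names = sorted(SUB_POOLS)
    if copies > len(names):
        raise ValueError("need at least as many sub-pools as copies")
    placement = []
    for k in range(copies):
        pool = names[(file_index + k) % len(names)]
        drives = SUB_POOLS[pool]
        placement.append((pool, drives[file_index % len(drives)]))
    return placement


for i in range(3):
    print(i, place_copies(i))
```

Whatever selection rule is used inside each sub-pool, the property that matters is the one enforced at the top level: no file ever has more than one copy in the near-EoL pool or in any single purchase batch.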

-

Hybrid SSDs are nice for normal use, but in mixed-mode operating environments they get overwhelmed pretty quickly and start thrashing (i.e. NAS with several users). There's also the problem of things like DrivePool mixing all your content up across the different drives, so the drive replaces your cached documents with a movie you decide to watch, and then the documents are slow again, even though you weren't going to watch the movie more than once. If there were a way to specify to only cache often written/edited files for increased speed, then maybe? But I think that would still run into issues with the balancer moving files between drives. The hybrid drive wouldn't know the difference between that and legitimately newly written files.

-

Of course, I have Backblaze for cloud backup too, but re-downloading 10+ TB of data that could have been protected better locally isn't ideal.

I'm glad to hear you've had good luck so far, but don't fool yourself - multiple drive failure happens. Keep in mind that drives fail more when they're used more. The most common multiple drive failure scenario is that one drive fails, and you need to restore those files from your redundancies. During the restore process, another drive fails due to the increased use. The most simultaneous failures I've heard of is 4 (not to me)... but that was in a larger RAID. There's a reason for the parity drive count increasing every ~5 drives in parity-based RAIDs.

So far, I've been quite lucky. I've never permanently lost any files due to a drive failure - but I don't want that to start due to lack of diligence on my part either, so if I can find ways to make my storage solution more reliable I will.

In fact - one of the main reasons I went with DrivePool is that it seems more fault tolerant. Duplicates are spread between multiple drives (rather than mirroring, which relies entirely on one single other drive), so if you do lose two drives, you may lose some files, but not a full drive's worth. (Plus the lack of striping similarly makes sure that you don't lose the whole array if you can't restore.) I realize I don't need to explain any of this to someone who uses it, but I'm just highlighting the reasons I found DP attractive in the first place - separating the duplicates among multiple drives to reduce the chance of losses on failures. If that can be improved to further reduce those chances...
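As a toy illustration of the spreading argument (not a model of DrivePool's real placement), the sketch below compares the worst-case loss from a two-drive failure for two made-up layouts on 6 drives: copies spread over every possible pair of drives versus copies confined to fixed mirror pairs. The drive count, the file count, and the uniform spreading are all assumptions.

```python
from fractions import Fraction
from itertools import combinations


def lost_fraction(placements, failed):
    """Fraction of files whose every copy sits on a failed drive."""
    failed = set(failed)
    lost = sum(1 for drives in placements if set(drives) <= failed)
    return Fraction(lost, len(placements))


drives = list(range(6))

# Spread layout: 15 files, one on each possible pair of drives.
spread = list(combinations(drives, 2))

# Mirrored layout: the same 15 files, but copies only on fixed pairs.
mirror_pairs = [(0, 1), (2, 3), (4, 5)]
mirrored = [mirror_pairs[i % 3] for i in range(15)]

# Try every possible two-drive failure and keep the worst outcome.
worst_spread = max(lost_fraction(spread, f) for f in combinations(drives, 2))
worst_mirror = max(lost_fraction(mirrored, f) for f in combinations(drives, 2))

print("worst case, spread:  ", worst_spread)
print("worst case, mirrored:", worst_mirror)
```

In this toy model the mirrored layout usually loses nothing, but when the two failed drives happen to be a mirror pair it loses that pair's entire contents, while the spread layout always loses a small slice. Averaged over all possible failures the two come out the same here, so the gain from spreading is in the worst case, not the average.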

-

Settings from my plugin. Notice the 1.5 tb is set to not contain duplicated or unduplicated: Balance with those settings. Again note the 1.5tb is scheduled to move everything off the disk due to the settings above. It properly handles mixed content (duplicated, unduplicated and "unpooled other"), and equalizes accounting for all 3.

-

Currently I think my balancer works better than the official plugins. It offers all the features of all of them, but without any fighting. I also solved all the bugs I'm aware of in the disk space equalizer plugin (there were some corner cases where it balanced incorrectly). I'm working on some issues with the ordered placement portion and then I'll release it.

-

To my knowledge, there isn't a way to automatically designate frequently accessed files to SSD. You can manually set individual folders to specific drives if you know which folders will be frequently accessed, but this isn't always the case depending on file structure, and it's quite possible that you'll have some large files in those folders which aren't ever edited, which would then end up taking up space on the SSD.

I could never get the SSD optimizer plugin to work for some reason, but the "Archive plugin" worked for me, and appears to be essentially the same thing, just without ordered placement options, so I used that instead. This works great for initially copying files to the pool, but once those have been moved off the SSDs onto the archive disks, if you go back and edit them they are edited in place on the archive drives.

I don't think you're quite getting what I was saying about that section, so here's an example. I have 3 new 14 TB drives all purchased at the same time, and several other drives purchased at different times (some in sets, some not, it's irrelevant to the example). If I set 2x or 3x duplication on a directory and copy a bunch of new files onto the pool with those new hard drives, there's a VERY high chance that the original PLUS all of the duplicates will be placed on those 3 new 14 TB drives (since they're empty, and unless you're using ordered placement all other balancers will end up filling up the least filled drives first). Since all 3 were purchased at the same time, the chance of more than one failing at the same time is higher than the chance of a different set of drives failing at the same time as these. This means that there's a *much* greater chance of permanently losing some of that data that was copied to the pool in the case of multiple drive failure.

My proposal was to set those 3 drives as a "group" and tell it to try not to place multiple duplicates on the other disks in the group (spread the duplicates between the different drive groups) to reduce the chance of this.
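Here is a small sketch of the difference, written in Python purely for illustration. It compares a plain "least filled first" choice with a group-aware one on a made-up set of drives. Neither function is DrivePool's or any plugin's real logic, and the drive names, group labels, and sizes are invented.

```python
def fill(drive):
    """Fraction of the drive that is already used."""
    return drive["used"] / drive["size"]


def least_filled_first(drives, copies):
    """Pick the `copies` emptiest drives, ignoring purchase groups."""
    return [d["name"] for d in sorted(drives, key=fill)[:copies]]


def group_aware(drives, copies):
    """Pick the emptiest drives, but prefer at most one drive per group.

    Falls back to reusing a group only when there are fewer groups
    than requested copies.
    """
    ranked = sorted(drives, key=fill)
    chosen, seen_groups = [], set()
    for d in ranked:  # first pass: emptiest drive of each group
        if d["group"] not in seen_groups:
            chosen.append(d["name"])
            seen_groups.add(d["group"])
    for d in ranked:  # second pass: everything else, emptiest first
        if d["name"] not in chosen:
            chosen.append(d["name"])
    return chosen[:copies]


# Made-up pool: three new, empty 14 TB drives plus two older drives (TB).
pool = [
    {"name": "14tb-a", "group": "new-14tb", "size": 14, "used": 0},
    {"name": "14tb-b", "group": "new-14tb", "size": 14, "used": 0},
    {"name": "14tb-c", "group": "new-14tb", "size": 14, "used": 0},
    {"name": "8tb-a", "group": "older-8tb", "size": 8, "used": 5},
    {"name": "4tb-a", "group": "older-4tb", "size": 4, "used": 3},
]

print(least_filled_first(pool, 3))  # all three copies on the new batch
print(group_aware(pool, 3))         # one copy per purchase group
```

With 3x duplication, the first function puts every copy on the new batch, which is exactly the scenario described above, while the second spreads the copies across the three purchase groups.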

-

I actually wrote a balancing plugin yesterday which is working pretty well now. It took a bit to figure out how to make it do what I want. There's almost no documentation for it, and it doesn't seem very intuitive in many places.

So far, I've been "combining" several of the official plugins together to make them actually work together properly. I found the official plugins like to fight each other sometimes. This means I can have SSD drop drives working with equalization and disk usage limitations with no thrashing. Currently this is working, although I ended up re-writing most of the original plugins from scratch anyway simply because they wouldn't combine very well as originally coded. Plus, the disk space equalizer plugin had some bugs that made it easier to rewrite than to fix. I wasn't able to combine the Scanner plugin - it seems to be obfuscated and baked into the main source, which made it difficult to see what it was doing.

Unfortunately, the main thing I wanted to do doesn't seem possible as far as I can tell. I had wanted to have it able to move files based on their creation/modified dates, so that I could keep new/frequently edited files on faster drives and move files that aren't edited often to slower drives. I'm hoping maybe they can make this possible in the future.

Another idea I had hoped to do was to create drive "groups" and have it avoid putting duplicate content on the same group. The idea behind that was that drives purchased at the same time are more likely to fail around the same time, so if I avoid putting both duplicated files on those drives, there's less likelihood of losing files in the case of multiple drive failure from the same group of drives. This also doesn't seem possible right now.

-

I'm interested in extending the behavior of the current balancing plugins, but don't want to re-write them from scratch. Is there any chance the current balancing plugins could be made open source to allow the community to contribute and extend them?

-

+1 for hardlink support! I'm in much the same situation where I'd like to be able to continue seeding torrents after they've been organized to other folders via hardlinks in the pool.