Posts posted by JulesTop

-

Yeah, Kinda...

I essentially want ordered file placement to apply regardless of file placement rules, and then, during balancing, have the files moved according to the file placement rules.

So, if I have two folders in my hybrid pool, and I want folder 1 to be in the cloud and folder 2 to be on the HDD (space permitting), I would like all files, no matter where their placement rules point, to be written to the HDD first and then organized into their preferred locations during balancing.

When I use the 'Ordered File Placement' plugin, it works perfectly unless I also apply file placement rules. Even with 'File placement rules respect real time file placement limits set by the balancing plugins' enabled, the files seem to go directly to the cloud (which fills up the cache and then slows down the file move).

So, right now, I've removed all of my file placement limits. This results in both folders being mixed across the cloud and HDDs, but when I move files I can at least get full speed, since it fills the HDDs first.

So I guess what I'm looking for is almost the functionality of the SSD optimizer, but without the SSDs being emptied during balancing.
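To make the requested behavior concrete, here is a toy Python model of it: at write time, files ignore placement rules and go to the first pool member (in order) with room, and a later balancing pass moves each file to the member its folder rule names, space permitting. This is a sketch under stated assumptions, not how DrivePool is implemented; `Drive`, `place_new_file`, `balance`, and the folder/drive names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Drive:
    """Hypothetical stand-in for a pool member (e.g. the HDD pool or the cloud pool)."""
    name: str
    capacity_gb: float
    files: dict = field(default_factory=dict)  # path -> size in GB

    def free_gb(self) -> float:
        return self.capacity_gb - sum(self.files.values())


def place_new_file(drives, path, size_gb):
    """Write time: ignore placement rules and use the first drive (in order) with room."""
    for drive in drives:
        if drive.free_gb() >= size_gb:
            drive.files[path] = size_gb
            return drive.name
    raise RuntimeError("no drive has enough free space")


def balance(drives, rules):
    """Balancing pass: move each file to the drive its top-level folder's rule names,
    but only if that drive has room; folders without a rule stay where they are."""
    by_name = {d.name: d for d in drives}
    for drive in drives:
        for path, size_gb in list(drive.files.items()):
            folder = path.split("/", 1)[0]
            target = by_name.get(rules.get(folder, drive.name), drive)
            if target is not drive and target.free_gb() >= size_gb:
                del drive.files[path]
                target.files[path] = size_gb


# Folder 1 belongs in the cloud, folder 2 on the HDD (names are illustrative).
hdd = Drive("HDD", capacity_gb=100)
cloud = Drive("Cloud", capacity_gb=1000)
pool = [hdd, cloud]
rules = {"folder1": "Cloud", "folder2": "HDD"}

print(place_new_file(pool, "folder1/a.mkv", 10))  # lands on HDD first
print(place_new_file(pool, "folder2/b.mkv", 10))  # lands on HDD first
balance(pool, rules)
print(sorted(hdd.files), sorted(cloud.files))
```

Keeping placement and balancing as two separate passes is what lets copies run at local-disk speed during the day while the slower move to the cloud happens later.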

-

Thanks, Christopher,

I tried this, but the issue I have afterwards is that when the balancer runs, it wants to completely empty the 'SSD' into the cloud (the 'SSD' being my HDD pool marked as an SSD).

-

For me, it's like a breath of fresh air... Everything has been running super smoothly for days now, without a single error or warning since the fresh Windows install.

I'd tried repairing Windows before and doing a bunch of other stuff, but it never worked out.

However, a couple of things to note. Before I reinstalled Windows, I upgraded my RAM from 4GB to 12GB, thinking it might be related, but I still had the issues after that. Also, when I reinstalled Windows, I took the opportunity to use a new SSD (Samsung EVO 860) instead of the old SSD that had been running my Windows install for about 5 years. So Windows is now installed on a new 500GB SSD, and my cloud drives now cache to the same SSD as the OS.

I repurposed the old SSD as a DrivePool SSD cache.

The reason I decided to pull the trigger on the fresh install is that I noticed Windows stability issues: it would randomly freeze and cause problems. I wasn't sure whether the old SSD was also contributing, so I decided to replace both at that point.

I didn't do anything with the service file. I just detached the drives before reinstalling Windows, then reinstalled CloudDrive and re-attached the drives. I also made sure to back up the configurations of all my must-have programs, like Plex, and transfer them to the new OS. It was surprisingly easy when following each program's tutorial on backing up and restoring configurations. Also, remember to deactivate your StableBit license before reinstalling Windows.

-

So, it looks like my issue wasn't a CloudDrive issue after all.

It seems I had Windows stability issues. I decided to clean install Windows and restore the individual programs I wanted to keep. The drive has now been up for 24 hours without any errors.

Everything is normal now!

-

Hi,

I feel like this is possible, but I can't get the right combination of settings to work while using file placement rules.

I have a cloud pool and an HDD pool that are pooled together into a hybrid pool. I want file placement rules to be applied during balancing (sorting certain folders into the cloud and keeping others locally, space permitting), but when moving files into any of the folders, I want the pool to behave according to the 'Ordered File Placement' plugin. Essentially: use the HDD throughout the day, and balance to the cloud later on. It all works without file placement rules, but as soon as I add file placement rules, the files get moved directly to the cloud. This slows down my copies as soon as my local cloud drive cache fills up.

Does anyone have suggestions or ideas?

I can share screenshots of my settings as well if that helps.

-

7 hours ago, schudtweb said:

Google seems to have big problems at the moment. I still have problems downloading data from gdrive. For the last three days I didn't have a forced unmount, but I got a lot of red errors...

Today I saw a lot of "Service Unavailable" errors in the log. I found a post from a German guy who could be part of the problem (his own words). He forked rclone and made some changes to use multiple service accounts and bypass quotas, so he could transfer at 14Gbit/s, and he knows others who made it go even faster... He also wrote about 503 errors and an overloaded Google backend.

I hope this doesn't result in google restricting accounts even further... or anything even more drastic from them.

-

Hi @Christopher (Drashna) and @Alex,

Just an FYI that I can easily reproduce this if it will help in troubleshooting.

I'm currently re-duplicating from one Google Drive to another, as one of them became corrupt for some reason. (And I'm pretty sure I made it worse when I tried to recover it.)

Since I'm re-duplicating, it is downloading from one Google Drive at around 70Mbps 24/7 and uploading to the other. This results in a critical error every hour or two. These critical errors don't cause any dismounts or anything, but ideally they would get resolved.

If you'd like, I can turn on drive tracing again for a few hours and then upload from the troubleshooter again.

Let me know.

Thanks!

-

Are you able to comment or have Alex review my troubleshooting upload? I still get this issue daily... I have my own Client-ID, and I don't see errors on Google's end. I'm hoping that the logs, or information I uploaded can provide some answers.

I'm really hoping to be able to set this and forget it. But I want to make sure it's stable.

Thanks,

Jules

-

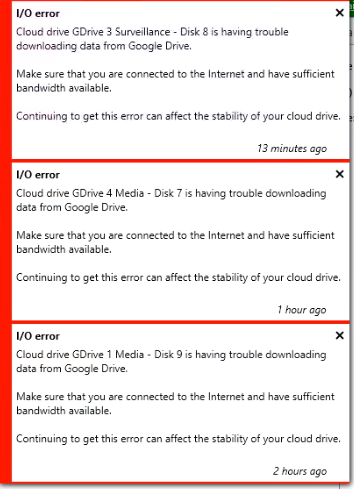

Has anyone experienced this, or does anyone have any ideas?

Has anyone seen these I/O errors? I get them a few times a day.

-

I've always just checked the little checkbox that force-closes all open files before detaching... I've never really had an issue doing it that way.

-

Just an FYI: I enabled drive tracing in anticipation of this happening at some point, and it was running when this particular event occurred.

I uploaded all the data via the troubleshooting tool and used this thread number (4398) as the ID.

It seems to happen every 1-2hrs of watching media.

-

Hi,

Is it possible that someone can help diagnose this issue...

I have witnessed the problem occur a few times. What happens when I'm watching a movie or TV show via Kodi is it just stops playing... and then closes the stream.

When I watch the downloads, I usually see the thread count at x1 but nothing downloading... so the download hangs. (It doesn't seem to abort or retry fast enough.)

When I go to the service log, I see the following. (Not sure why the time is way off; it's not close to my PC's clock time.)

10:06:30.3: Warning: 0 : [IoManager:236] Error performing Read I/O operation on provider. Retrying. The read operation failed, see inner exception.

10:06:30.4: Warning: 0 : [IoManager:236] Error processing read request. Thread was being aborted.

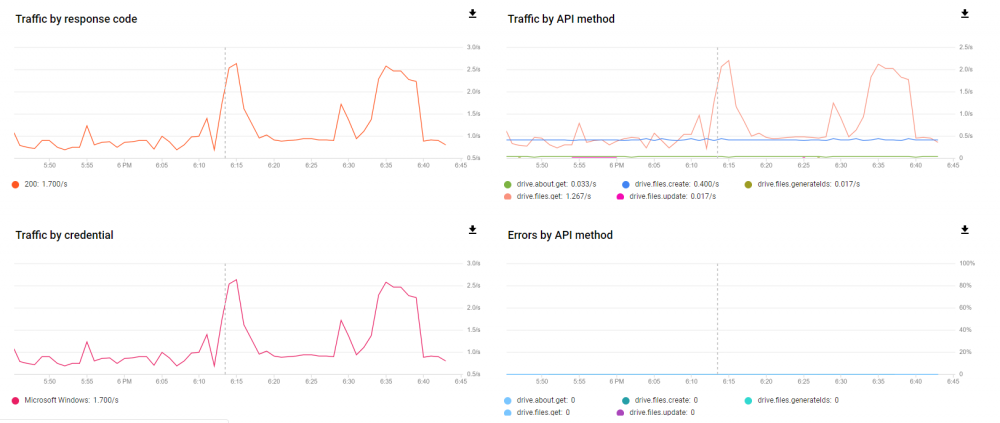

10:06:30.4: Warning: 0 : [IoManager:236] Error in read thread. Thread was being aborted.I then go to my Google Drive API Dashboard and see that everything seems OK on that front. (The issue occurred around 18:44 based on the last image)

Any help would be super duper appreciated!!!

-

I seem to still have this as well. I watched it happen yesterday where 1 thread seems to hang. I just see the thread count at 1 but nothing is downloading.

It caused my Plex stream to stop, which is why I went to look at it.

I adjusted my settings but didn't quite go as low as Christopher recommended as I was losing some performance. I may go that low now and see.

My settings are as follows right now.

DL threads: 7

UL threads: 5

Download size: 20MB

Prefetch Trigger: 10MB

Prefetch Forward: 250MB

Prefetch Time Window: 30sec

-

Also, you can try the Ordered File Placement plugin. You have to download it separately from the website, as it isn't included by default.

You can also set file placement rules to place all files on the drive pool and uncheck the cloud pool.

-

Hi,

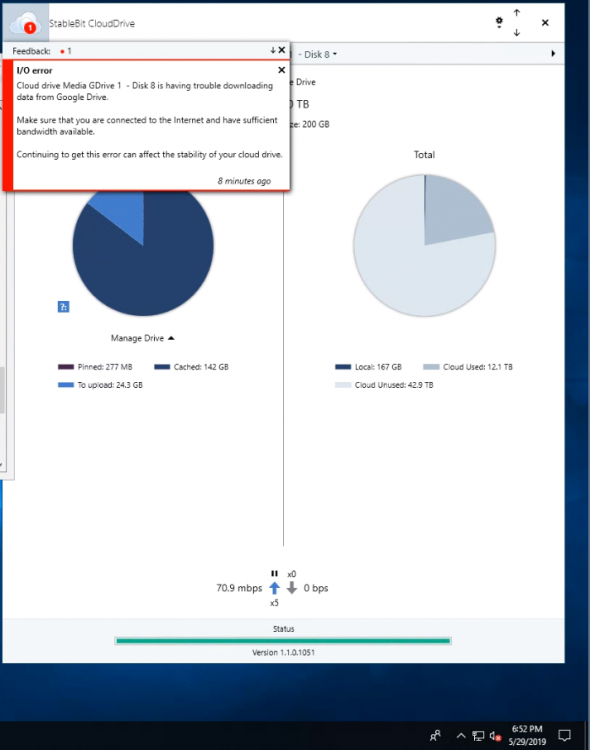

I get this error frequently... I'm not sure if it's because my cloud drive has been uploading for about 1.5 weeks so far while I'm also periodically downloading from it for use with Plex, but I get this error often. It seems to happen with all my cloud drives. I've detached and re-attached them, but that doesn't seem to help. I suspect it might be my cache (a single SSD shared by all 4 cloud drives, which also holds the Windows OS), but I would like a way to confirm it's the SSD before I go out and buy another one.

Thanks!

-

This setup looks good to me. Yes, all your duplication will go to the CloudPool, from what I see.

This setup will limit the size of your hybrid pool (with duplication fully enabled) to the size of your smallest sub pool, in this case the DrivePool.
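A rough way to see why the smallest sub pool sets the limit: treating data as finely divisible, D units with k copies each on distinct sub pools fit only if the sum over sub pools of min(capacity, D) is at least k·D. The sketch below searches for the largest such D. The function name and sizes are hypothetical, and DrivePool's real accounting (whole files, reserved space) will differ somewhat.

```python
def max_duplicated_tb(subpool_sizes_tb, copies=2, iterations=100):
    """Largest amount of data D (TB) that fits when every byte needs `copies`
    copies on distinct sub pools: feasible iff sum(min(c, D)) >= copies * D."""
    if copies > len(subpool_sizes_tb):
        return 0.0  # not enough sub pools to hold that many separate copies
    lo, hi = 0.0, sum(subpool_sizes_tb) / copies
    for _ in range(iterations):  # binary search on the feasibility condition
        mid = (lo + hi) / 2
        if sum(min(c, mid) for c in subpool_sizes_tb) >= copies * mid:
            lo = mid
        else:
            hi = mid
    return lo


# Hypothetical: a 10 TB HDD pool and a 110 TB cloud pool with 2x duplication.
print(round(max_duplicated_tb([10, 110]), 3))
```

With two sub pools and 2x duplication this reduces to the smaller sub pool's size, which matches the point above.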

-

Hi, I have a similar situation...

My OS is on my SSD, and I am using the same SSD as the cache for my 3 CloudDrive drives. Two of the three are used for duplication. Is there a way to select one of them as the drive that data is always read from? Right now it looks like the data is always pulled from the same drive, but as far as I can tell I have no way of guaranteeing it.

-

The issue with duplicating in a hybrid pool (1 HDD pool combined with 1 cloud pool) is that you won't be able to duplicate past the size of your HDD pool (or whichever sub pool is smaller). Duplication keeps a second copy to protect against a drive failure, but in a hybrid pool whose two members are an HDD pool and a cloud pool, each duplicated file needs one copy on each member, so the smaller of the two sets the limit. It won't be able to duplicate beyond that.

I set mine up differently: duplication is configured inside the HDD pool across its member drives (in your case, the 10 HDDs would be set up with 2x pool duplication to protect against a single drive failure), and duplication is set up separately inside the cloud pool. I did it this way because I want an ever-growing drive (all overflow goes to the cloud drives) while still protecting against any individual drive failure.

I also use the Ordered File Placement plugin (a separate download) to prioritize placing files on the HDD pool, and on top of that I set file placement rules on the folders I want to keep locally... the rest gets uploaded to the cloud.

So, the idea is to protect against any single drive failure while still having unlimited storage available on the PC.

-

On 3/5/2018 at 5:11 PM, Burken said:

I run one setup with 10 Google accounts to get around the daily limits.

So yes it works!

Do you mind explaining this a bit? I have 3 gdrive accounts and I'm currently uploading 10TB or so... and it's taking forever because I have real-time duplication on, so my effective speed is about 75Mbps even though I'm using 225Mbps across my accounts.
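The numbers in this post line up with simple division: if real-time duplication writes every file to all three accounts (which is what 225Mbps total vs. 75Mbps effective implies), unique data moves at the total rate divided by the number of copies. A small sketch, assuming sustained rates and decimal terabytes; the function names are illustrative.

```python
def effective_upload_mbps(per_account_mbps, copies):
    """Unique data rate when every file must be uploaded `copies` times."""
    return sum(per_account_mbps) / copies


def upload_days(data_tb, mbps):
    """Days to push `data_tb` decimal terabytes at a sustained `mbps` megabits/s."""
    seconds = data_tb * 1e12 * 8 / (mbps * 1e6)
    return seconds / 86_400


rate = effective_upload_mbps([75, 75, 75], copies=3)
print(rate)                             # 75.0
print(round(upload_days(10, rate), 1))  # 12.3 days for 10TB with 3 copies
print(round(upload_days(10, effective_upload_mbps([75, 75, 75], copies=1)), 1))  # 4.1 days for one copy
```

Deferring duplication doesn't reduce the total bytes uploaded; it only lets the first copy finish sooner so the local HDDs can be unloaded faster.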

I would love to share the load, unload my local HDDs faster, and duplicate at a later time... but whenever I try turning off real-time duplication, it still only uploads to one of my gdrives and ignores the rest. I'm not sure at what point it switches from one gdrive to another, but it seems to focus on one.

I assumed the issue was that the calculation is based on the percentage of free space on each drive, and since they are 55TB drives, the imbalance of 30-50GB (during my tests) wasn't enough to trigger anything.
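To illustrate the suspicion above: on a 55TB drive, a 30-50GB difference is well under a tenth of a percentage point of free space. The sketch compares that against a 1-point threshold, which is purely hypothetical; the real balancer's trigger rules aren't stated in this thread.

```python
def percent_free(capacity_gb, used_gb):
    return 100 * (capacity_gb - used_gb) / capacity_gb


def imbalance_points(capacity_gb, used_a_gb, used_b_gb):
    """Difference in percent-free between two equally sized drives."""
    return abs(percent_free(capacity_gb, used_a_gb) - percent_free(capacity_gb, used_b_gb))


DRIVE_GB = 55_000           # one 55TB cloud drive (decimal units)
HYPOTHETICAL_TRIGGER = 1.0  # illustrative threshold in percentage points, not a real setting

for gap_gb in (30, 50, 550):
    gap = imbalance_points(DRIVE_GB, gap_gb, 0)
    print(gap_gb, round(gap, 3), gap >= HYPOTHETICAL_TRIGGER)
```

Under that assumed threshold, the drives would need to differ by about 550GB before a percentage-based rule noticed.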

-

OK, I came up with a solution to my own problem which will likely be the best of both worlds.

Set up pool duplication on my Cloud Pool, and also set up pool duplication on my HDD Pool.

Then use both the pools in the storage pool with no duplication as normal.
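A rough capacity comparison of the two layouts discussed in these posts (duplication on the hybrid pool vs. duplication inside each sub pool). The drive sizes are hypothetical, and the per-pool figure assumes no single drive is larger than the rest of its sub pool combined, since each 2x copy must sit on a different drive.

```python
def hybrid_level_duplication_tb(hdd_pool_tb, cloud_pool_tb):
    """2x duplication set on the hybrid pool: each file needs one copy on each
    sub pool, so the smaller sub pool caps how much duplicated data fits."""
    return min(hdd_pool_tb, cloud_pool_tb)


def per_pool_duplication_tb(hdd_drives_tb, cloud_drives_tb):
    """2x duplication set inside each sub pool, none on the hybrid pool: each sub
    pool stores half its raw size (assumes no single drive is larger than the
    rest of its sub pool combined)."""
    return sum(hdd_drives_tb) / 2 + sum(cloud_drives_tb) / 2


hdd_drives = [4] * 10    # hypothetical: ten 4TB HDDs
cloud_drives = [55, 55]  # hypothetical: two 55TB cloud drives
print(hybrid_level_duplication_tb(sum(hdd_drives), sum(cloud_drives)))  # 40
print(per_pool_duplication_tb(hdd_drives, cloud_drives))                # 75.0
```

Both layouts survive a single drive failure, but only the per-pool layout keeps growing as cloud drives are added.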

-

I have a very similar setup.

Look at the Ordered File Placement plugin, and combine it with the Prevent Drive Overfill plugin.

The hybrid pool places all my files on the HDDs first using Ordered File Placement. Then, during rebalancing, if the Prevent Drive Overfill plugin sees that the HDDs are getting too full (past 95%), it moves files to the cloud pool until the HDDs are down to 75%, roughly 20% of their capacity. The cloud pool is set to distribute files evenly across the 2 cloud accounts. Each cloud account has its own cache, so in theory the files land in the caches first and queue up for upload, which results in simultaneous uploads.
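The overfill rule described above, as arithmetic: once the HDDs pass 95% full, enough data moves to bring them back to 75%. This is a hypothetical model of the behavior described in this post, not the plugin's code; the 95%/75% values are the settings mentioned here, and `overfill_move_gb` is an illustrative name.

```python
def overfill_move_gb(capacity_gb, used_gb, trigger=0.95, target=0.75):
    """GB to move off a pool once it passes `trigger` full, bringing it back to `target`."""
    if used_gb / capacity_gb <= trigger:
        return 0.0  # below the trigger: nothing is moved
    return used_gb - target * capacity_gb


print(overfill_move_gb(10_000, 9_000))  # 0.0: 90% full, below the trigger
print(overfill_move_gb(10_000, 9_600))  # 2100.0: 96% full, move down to 75%
```

The gap between the trigger and the target keeps the balancer from moving files on every small write.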

-

Hi,

This has likely been asked before, but I didn't find an answer with a quick scan of the forums.

I have multiple HDDs pooled together into an HDD Pool. I also have two Cloud Drives making up a Cloud Pool. I combined both to make a Hybrid Storage Pool.

Right now this is set up with zero duplication, but I recently had a drive failure, and I'm also worried about potential cloud account closures or limitations in the future.

So I have a third cloud drive that I would like to use for duplication... but ideally all three of my cloud drives would share the duplication. Do I add the 3rd cloud drive to the Hybrid Storage Pool as a third member and use it for duplication, or is there a way to add it to the Cloud Pool and have the Cloud Pool share the duplication load across all of its drives? Essentially, I would like the three cloud drives to work together for a better overall upload rate: 3x 75Mbps instead of 2x 75Mbps plus 1x 75Mbps for duplication.

Thanks!

Drive Duplication (posted in General)

It would be awesome if, after turning ON drive duplication, it noticed the missing copies and automatically 'repaired' the drive, so to speak...

I'm sure it's more complicated than that... I'm currently copying everything over to a new drive that has duplication turned ON from the start, but unfortunately that takes twice as long as duplicating an already-populated drive would, since that would only require uploading the second copy, half as much data.